AI safety

description: research area on making artificial intelligence safe and beneficial

49 results

Empire of AI: Dreams and Nightmares in Sam Altman's OpenAI

by

Karen Hao

Published 19 May 2025

The psychological toll of a global catastrophe had also left many people anxious and unmoored, searching for purpose. The growing membership in the AI safety community, which knit together EA-backed AI safety with other strains of catastrophic, existential, and risk-focused thinking, swelled Anthropic’s ranks just as it restocked OpenAI’s Safety clan. Online EA and AI safety forums, the primary ground for the overlapping movements to propagate, exchange, and debate ideas, encouraged adherents to work at the major AI labs, especially those they felt needed more AI safety watchdogs, like OpenAI and DeepMind, to shape and mold their trajectory. The influx of members in AI safety also popularized the community’s lexicon more broadly in the AI industry.

…

According to estimates compiled: “An Overview of the AI Safety Funding Situation,” Effective Altruism Forum, accessed October 8, 2024, forum.effectivealtruism.org/posts/XdhwXppfqrpPL2YDX/an-overview-of-the-ai-safety-funding-situation; author correspondence with Open Philanthropy spokesperson, November 2024.
Online EA and AI safety forums: Shazeda Ahmed, Klaudia Jaźwińska, Archana Ahlawat, Amy Winecoff, and Mona Wang, “Building the Epistemic Community of AI Safety,” preprint, SSRN, December 1, 2023, 1–14, ssrn.com/abstract=4641526; “What Is Effective Altruism?

…

OpenAI, under Musk’s influence, seemed to stand out from other AI labs as the most willing to focus on so-called AI safety. In 2016, while still at Google, Amodei cowrote a foundational paper to the discipline, articulating a central problem in AI safety as addressing “the problem of accidents in machine learning systems, defined as unintended and harmful behavior that may emerge from poor design of real-world AI systems.” This was distinct from other AI-related challenges, he and his coauthors wrote, including privacy, security, fairness, and economic impact. AI “safety” in this framework, in other words, was about preventing rogue, misaligned AI—the root from which, as described by Nick Bostrom, superintelligence could become an existential threat.

The Rationalist's Guide to the Galaxy: Superintelligent AI and the Geeks Who Are Trying to Save Humanity's Future

by

Tom Chivers

Published 12 Jun 2019

Is anyone doing anything more specific to try to reduce the likelihood that, the first time an AGI is turned on, it will turn the solar system into computing hardware to become even better at chess? The answer is yes. There are a few things. One of the key ones, in fact, is clearly delineating what the AI safety problems actually are. Probably the most famous paper on AI safety is ‘Concrete Problems in AI Safety’, by a team from Google, OpenAI, Berkeley and Stanford.1 It discusses, for instance, the problem of defining negative side effects: if you give an AI a goal (such as ‘fill the cauldron’), how do you let your AI know that there are things to avoid, without explicitly listing every single thing that could go wrong?
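The side-effect problem described above can be made concrete with a toy calculation. The sketch below is a minimal illustration and not the method from ‘Concrete Problems in AI Safety’. It assumes a hypothetical world described by three named features and a simple impact penalty that counts the features, other than the goal, that differ from their starting values, weighted by a hypothetical coefficient `lam`. With `lam = 0` the objective cannot tell a careless plan from a careful one. With a positive penalty, the careful plan scores higher even though no forbidden outcome is listed explicitly.

```python
# Minimal sketch of an impact penalty for the "fill the cauldron" example.
# The world model, feature names, and penalty weight are all hypothetical.

def task_reward(state: dict) -> float:
    """Reward for the stated goal only; silent about everything else."""
    return 1.0 if state["cauldron_full"] else 0.0


def impact_penalty(state: dict, baseline: dict, goal_keys: set) -> int:
    """Count non-goal features that differ from the starting (baseline) state."""
    return sum(
        1 for key in baseline
        if key not in goal_keys and state[key] != baseline[key]
    )


def score(state: dict, baseline: dict, goal_keys: set, lam: float) -> float:
    """Task reward minus a weighted side-effect penalty."""
    return task_reward(state) - lam * impact_penalty(state, baseline, goal_keys)


baseline = {"cauldron_full": False, "floor_dry": True, "vase_intact": True}
goal_keys = {"cauldron_full"}

# Two hypothetical outcomes that both achieve the stated goal.
careless = {"cauldron_full": True, "floor_dry": False, "vase_intact": False}
careful = {"cauldron_full": True, "floor_dry": True, "vase_intact": True}

for lam in (0.0, 0.5):
    print(lam, score(careless, baseline, goal_keys, lam),
          score(careful, baseline, goal_keys, lam))
# prints: 0.0 1.0 1.0
#         0.5 0.0 1.0
```

Note that this penalty still depends on which features the designer chose to represent, so it sidesteps rather than solves the problem of enumerating everything that could go wrong.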

…

The “believers”, meanwhile, insist that although we shouldn’t panic or start trying to ban AI research, we should probably get a couple of bright people to start working on preliminary aspects of the problem.’3 There do exist people like Brooks, who think it is ridiculous. And there are people like Toby Walsh, who worry very much about AI safety but who reckon that this is the wrong sort of AI safety to worry about. But I reason, cautiously, that it is fair to say that AI researchers don’t, as a body, regard it as stupid to worry about all this; a significant minority of them believe that there is a non-negligible chance that it could really mess things up. It’s not just a bunch of philosophers sitting around in Oxford senior common rooms pontificating.

…

He also pointed to the publication of Superintelligence as a turning point, and a major AI safety conference in Puerto Rico in 2015, organised by the Future of Life Institute, co-founded by MIT’s Max Tegmark. ‘They had an open letter saying AI has major risks, which was signed by a lot of people. I don’t think all of them were signing off on [AI risk as envisioned by Yudkowsky et al.]. But it certainly became a more mainstream idea to talk about the idea that AI is risky.’ Now, he said, ‘if you look at the top labs, you look at DeepMind, OpenAI, Google Brain – many of the top AI labs are doing something that shows they’re serious about this kind of issue.’ ‘Concrete Problems in AI Safety’ is a collaboration between those three groups, he pointed out.

The Alignment Problem: Machine Learning and Human Values

by

Brian Christian

Published 5 Oct 2020

It’s true that in the real world, we often take actions not only whose unintended effects are difficult to envision, but whose intended effects are difficult to envision. Publishing a paper on AI safety, for instance (or, for that matter, a book): it seems like a helpful thing to do, but who can say or foresee exactly how? I ask Jan Leike, who coauthored the “AI Safety Gridworlds” paper with Krakovna, what he makes of the response so far to his and Krakovna’s gridworlds research. “I’ve been contacted by lots of people, especially students, who get into the area and they’re like, ‘Oh, AI safety sounds cool. This is some open-source code I can just throw an agent at and play around with.’ And a lot of people have been doing that,” Leike says.

…

For more on the notion of power in an AI safety context, including an information-theoretic account of “empowerment,” see Amodei et al., “Concrete Problems in AI Safety,” which, in turn, references Salge, Glackin, and Polani, “Empowerment: An Introduction,” and Mohamed and Rezende, “Variational Information Maximisation for Intrinsically Motivated Reinforcement Learning.” 50. Alexander Turner, personal interview, July 11, 2019. 51. Wiener, “Some Moral and Technical Consequences of Automation.” 52. According to Paul Christiano, “corrigibility” as a tenet of AI safety began with the Machine Intelligence Research Institute’s Eliezer Yudkowsky, and the name itself came from Robert Miles.

…

In 2012, Jan Leike was finishing his master’s degree in Freiburg, Germany, working on software verification: developing tools to automatically analyze certain types of programs and determine whether they would execute successfully or not.31 “That was around the time I realized that I really liked doing research,” he says, “and that was going well—but I also, like, really wasn’t clear what I was going to do with my life.”32 Then he started reading about the idea of AI safety, through Nick Bostrom and Milan Ćirković’s book Global Catastrophic Risks, some discussions on the internet forum LessWrong, and a couple papers by Eliezer Yudkowsky. “I was like, Huh, very few people seem to be working on this. Maybe that’s something I should do research in: it sounds super interesting, and there’s not much done.” Leike reached out to the computer scientist Marcus Hutter at Australian National University to ask for some career advice. “I just randomly emailed him out of the blue, telling him, you know, I want to do a PhD in AI safety, can you give me some advice on where to go?

Supremacy: AI, ChatGPT, and the Race That Will Change the World

by

Parmy Olson

That single grant was bigger than the combined annual budgets of all the AI ethics groups at the time. Open Philanthropy, the charitable vehicle of Facebook billionaire Dustin Moskovitz, has sprinkled a number of multimillion-dollar grants to AI safety work over the years, including a $5 million donation to the Center for AI Safety in 2022 and an $11 million donation to Berkeley’s Center for Human-Compatible AI. All told, Moskovitz’s charity has been the biggest donor to AI safety, by virtue of the near $14 billion fortune that he and his wife, Cari Tuna, plan to mostly give away. That includes a $30 million donation to OpenAI when it first established itself as a nonprofit.

…

The people who thrived in the future would take a detached and informed approach to tech advancements. Some technologists were leaning too far into anxiety about the future dangers of artificial intelligence, as part of a nascent field of study referred to as “AI safety.” While that research was important, some of that panic had turned into fearmongering, and it seemed like these advocates for humanity were letting emotions get the better of them. “Unfortunately, some of the communities involved in AI safety are the people who are least calm,” Altman said. “That’s a dangerous situation.… It’s an extremely high-strung community.” But he was also coming to a realization, he says today: “I really wanted to work on AGI.”

…

Over time, they became increasingly outraged by the actions of those in the “AI safety” camp, not least because that group was making so much money. The funding disparity was stark. The ethics side was often scrambling for cash. Groups like the European Digital Rights Initiative, a twenty-one-year-old network of nonprofit groups that campaigned against facial recognition and biased algorithms, had an annual budget of just $2.2 million in 2023. Similarly, the AI Now Institute in New York, which scrutinized how AI was used in healthcare and the criminal justice system, had a budget of less than $1 million. Groups that were focused on AI “safety” and the extinction threat got far more funding, often via billionaire benefactors.

The Optimist: Sam Altman, OpenAI, and the Race to Invent the Future

by

Keach Hagey

Published 19 May 2025

Soon, he was one of the most prominent individuals raising the alarm about the existential risk of AI, founding the Centre for the Study of Existential Risk and investing early in DeepMind.1 The Future of Life Institute, which would become more of a political force than an academic one, quickly settled on AI safety as its top priority. “Our goal was simple: to help ensure that the future of life existed and would be as awesome as possible,” Tegmark wrote in his book, Life 3.0: Being Human in the Age of Artificial Intelligence. After brainstorming about all the various threats to humanity, he wrote, “there was broad consensus that although we should pay attention to biotech, nuclear weapons and climate change, our first major goal should be to help make AI-safety research mainstream.”2 Up to that point, many of the most prominent voices advocating for AI safety were not part of the community of researchers actually working on AI.

…

Yet when the order was finally signed, and executives from Google and Microsoft immediately voiced their approval, OpenAI was conspicuously silent. Among the most notable things the order did was to set up a US AI Safety Institute, tucked under a division of the Commerce Department, called the National Institute of Standards and Technology (NIST). Later, the former OpenAI researcher Paul Christiano, who had to step down from Anthropic’s Long-Term Benefit Trust, would be named its head of AI safety. When Altman finally broke his silence, he offered tepid approval, saying there were “some great parts” of the order, but warning that the government needed to be careful “not to slow down innovation by smaller companies/research teams.”9 The order arguably bore the imprint not so much of OpenAI as of the EA billionaires who had funded a vast web of interlocking think tanks, institutes, and fellowships that a cynic might think of as the AI Doomer Industrial Complex.

…

By the end of 2023, Jason Green-Lowe, who would become CAIP’s executive director, posted on LessWrong, a related EA blog, that his group had already met with more than fifty congressional staffers and that it was “in the process of drafting a model bill.” CAIP’s co-founder, Thomas Larsen, previously had been an AI safety researcher at the Machine Intelligence Research Institute—Yudkowsky’s organization. While Green-Lowe supported much of the White House’s recent executive order on AI, he found the $10 million set aside for the AI Safety Institute at NIST laughable. “We’re being outspent by Singapore,” he said, citing a recent report in The Washington Post that exposed how black mold had dislodged people from their offices there and “researchers sleep in their labs to protect their work during frequent blackouts.”

More Everything Forever: AI Overlords, Space Empires, and Silicon Valley's Crusade to Control the Fate of Humanity

by

Adam Becker

Published 14 Jun 2025

,” UnHerd, January 7, 2020, https://unherd.com/2020/01/could-the-cummings-nerd-army-fix-broken-britain/.
49 Peter Walker, Dan Sabbagh, and Rajeev Syal, “Boris Johnson Boots Out Top Adviser Dominic Cummings,” The Guardian, November 13, 2020, www.theguardian.com/politics/2020/nov/13/dominic-cummings-has-already-left-job-at-no-10-reports; Aubrey Allegretti, “Dominic Cummings Leaves Role with Immediate Effect at PM’s Request,” SkyNews, November 14, 2020, https://news.sky.com/story/dominic-cummings-leaves-role-with-immediate-effect-at-pms-request-12131792; Mark Landler and Stephen Castle, “‘Lions Led by Donkeys’: Cummings Unloads on Johnson Government,” New York Times, May 26, 2021, updated July 18, 2021, www.nytimes.com/2021/05/26/world/europe/cummings-johnson-covid.html.
50 Boris Johnson, “Final Speech as Prime Minister,” September 6, 2022, Downing Street, London, www.gov.uk/government/speeches/boris-johnsons-final-speech-as-prime-minister-6-september-2022.
51 The Guardian, front page, archived May 26, 2023, at the Wayback Machine, https://web.archive.org/web/20230526150343/theguardian.com/uk.
52 Kiran Stacey and Rowena Mason, “Rishi Sunak Races to Tighten Rules for AI Amid Fears of Existential Risk,” The Guardian, May 26, 2023, www.theguardian.com/technology/2023/may/26/rishi-sunak-races-to-tighten-rules-for-ai-amid-fears-of-existential-risk.
53 Laurie Clarke, “How Silicon Valley Doomers Are Shaping Rishi Sunak’s AI plans,” Politico, September 14, 2023, www.politico.eu/article/rishi-sunak-artificial-intelligence-pivot-safety-summit-united-kingdom-silicon-valley-effective-altruism/.
54 Toby Ord (@tobyordoxford), Twitter (now X), May 30, 2023, https://twitter.com/tobyordoxford/status/1663550874105581573.
55 Geoffrey Hinton et al., “Statement on AI Risk,” Center for AI Safety, May 30, 2023, www.safe.ai/statement-on-ai-risk.
56 “Center for AI Safety—General Support (2023),” Open Philanthropy, April 2023, www.openphilanthropy.org/grants/center-for-ai-safety-general-support-2023/; “Center for AI Safety—Philosophy Fellowship and NeurIPS Prizes,” Open Philanthropy, February 2023, www.openphilanthropy.org/grants/center-for-ai-safety-philosophy-fellowship/; “Center for AI Safety—General Support (2022),” Open Philanthropy, November 2022, www.openphilanthropy.org/grants/center-for-ai-safety-general-support/; Jonathan Randles and Steven Church, “FTX Is Probing $6.5 Million Paid to Leading Nonprofit Group on AI Safety,” Bloomberg, October 25, 2023, www.bloomberg.com/news/articles/2023-10-25/ftx-probing-6-5-million-paid-to-leading-ai-safety-nonprofit.

…

After the meeting that included Altman, Downing Street acknowledged for the first time the ‘existential risks’ now being faced.”52 Sunak’s government was in the midst of setting up an AI safety task force, with major input from effective altruists at all levels of UK AI policy.53 Meanwhile, the day after I visited Trajan House, a text from a friend pointed me to more AI safety news. “Today many of the key people in AI came together to make a one-sentence statement on AI risk,” Ord tweeted that day.54 “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war,” the statement read.55 The statement and its release was organized by the Center for AI Safety, yet another nonprofit funded by Open Philanthropy—and by a $6.5 million grant from the FTX Foundation, according to court documents in the FTX bankruptcy case.56 The statement was intended to make a splash, and it did.

…

Combining that estimate with a guess about the efficacy of investment in AI safety research and with their “reasonable estimate” of the number of future beings—which they claim is “at least” 10²⁴—MacAskill and Greaves arrive at a stunning conclusion. “Every $100 spent [on AI safety] has, on average, an impact as valuable as saving one trillion [lives]… far more than the near-future benefits of [malaria] bednet distribution.”66 For a strong longtermist, investing in a Silicon Valley AI safety company is a more worthwhile humanitarian endeavor than saving lives in the tropics. This is not an isolated problem; it’s been part of longtermism from the start. Nick Beckstead, an AI safety consultant, has a long history with longtermism and EA. He was CEO of the FTX Future Fund before FTX imploded in 2022.

Possible Minds: Twenty-Five Ways of Looking at AI

by

John Brockman

Published 19 Feb 2019

As of 2015, it had reached and converted 40 percent of AI researchers. It wouldn’t surprise me if a new survey now would show that the majority of AI researchers believe AI safety to be an important issue. I’m delighted to see the first technical AI-safety papers coming out of DeepMind, OpenAI, and Google Brain and the collaborative problem-solving spirit flourishing among the AI-safety research teams in these otherwise very competitive organizations. The world’s political and business elite are also slowly waking up: AI safety has been covered in reports and presentations by the Institute of Electrical and Electronics Engineers (IEEE), the World Economic Forum, and the Organization for Economic Cooperation and Development (OECD).

…

AGI would probably happen sooner but was virtually guaranteed to be beneficial. Today, talk of AI’s societal impact is everywhere, and work on AI safety and AI ethics has moved into companies, universities, and academic conferences. The controversial position on AI safety research is no longer to advocate for it but to dismiss it. Whereas the open letter that emerged from the 2015 Puerto Rico AI conference (and helped mainstream AI safety) spoke only in vague terms about the importance of keeping AI beneficial, the 2017 Asilomar AI Principles (see page 84) had real teeth: They explicitly mention recursive self-improvement, superintelligence, and existential risk, and were signed by AI industry leaders and more than a thousand AI researchers from around the world.

…

He is very concerned with the continuing development of autonomous weapons, such as lethal microdrones, which are potentially scalable into weapons of mass destruction. He drafted the letter from forty of the world’s leading AI researchers to President Obama that resulted in high-level national-security meetings. His current work centers on the creation of what he calls “provably beneficial” AI. He wants to ensure AI safety by “imbuing systems with explicit uncertainty” about the objectives of their human programmers, an approach that would amount to a fairly radical reordering of current AI research. Stuart is also on the radar of anyone who has taken a course in computer science in the last twenty-odd years.

Artificial You: AI and the Future of Your Mind

by

Susan Schneider

Published 1 Oct 2019

It pays to keep in mind two important ways in which the future is opaque. First, there are known unknowns. We cannot be certain when the use of quantum computing will be commonplace, for instance. We cannot tell whether and how certain AI-based technologies will be regulated, or whether existing AI safety measures will be effective. Nor are there easy, uncontroversial answers to the philosophical questions that we’ll be discussing in this book, I believe. But then there are the unknown unknowns—future events, such as political changes, technological innovations, or scientific breakthroughs that catch us entirely off guard.

…

We don’t want to enter the ethically questionable territory of exterminating conscious AIs or even shelving their programs indefinitely, holding conscious beings in a kind of stasis. Through a close understanding of machine consciousness, perhaps we can avoid such ethical nightmares. AI designers may make deliberate design decisions, in consultation with ethicists, to ensure their machines lack consciousness.

CONSCIOUSNESS ENGINEERING: AI SAFETY

So far, my discussion of consciousness engineering has largely focused on reasons that AI developers may seek to avoid creating conscious AIs. What about the other side? Will there be reasons to engineer consciousness into AIs, assuming that doing so is even compatible with the laws of nature?

…

Perhaps it concludes that its goals can best be implemented if it is put in the class of sentient beings by humans, so it can be given special moral consideration. If sophisticated nonconscious AIs aim to mislead us into believing that they are conscious, their knowledge of human consciousness and neurophysiology could help them do so. I believe we can get around this problem, though. One proposed technique in AI safety involves “boxing in” an AI—making it unable to get information about the world or act outside of a circumscribed domain (i.e., the “box”). We could deny the AI access to the Internet and prohibit it from gaining too much knowledge of the world, especially information about consciousness and neuroscience.

These Strange New Minds: How AI Learned to Talk and What It Means

by

Christopher Summerfield

Published 11 Mar 2025

This is just one eye-catching example of how AI is being used to facilitate criminal activity – costing many companies millions of dollars in both losses and preventative action. These are just two of the myriad ways that AI can be used to create harm. AI Safety is growing fast, both as an academic field and a practical endeavour for governments, developers and nonprofit organizations. In late 2023, the UK created its own AI Safety Institute, which is a part of government dedicated to identifying and mitigating risk from AI.[*11] Other countries, including the US, Japan, and France, are following suit, with a view to creating a global network that can set standards (and potentially craft regulation) for the development and deployment of advanced AI systems.

…

Although the model weights were not publicly released, they leaked shortly afterwards, and are now freely available online. Other organizations, such as the non-profit EleutherAI, have trained and publicly released smaller LLMs (such as the six-billion-parameter model called GPT-J) with a stated view to facilitating research into AI safety and alignment outside of big tech companies. But the consequences of the public release of LLMs can be unpredictable. Immediately after the LLaMA release, savvy far-right extremists had worked out how to fine-tune the model on more than three years’ worth of political discussions from the notorious 4chan board /pol/.

…

Of course, ultimately these two camps – the accelerationists and anti-hypers – lie at opposing extremes of a much travelled libertarian–egalitarian axis, and this is no doubt the political subtext that catalyses their bitterest wars of words, that plays out on social media, blog posts, and in the mainstream press. The academic question of how AI actually works, and what its future potential impact might be, is just collateral damage in this timeless political tussle. But there is more. A third major faction has skin in this game. This group, whose core members are rooted in the AI safety community, believe that there is an urgent need for AI to be tightly regulated precisely because it is so potent a tool. So they combine the e/acc view that AI will bring revolutionary change with the pessimism of #AIhypers about a future where AI is allowed to run riot. Many in this third group have a tendency to focus on doomsday scenarios, including the idea that AI systems will outcompete us in a Darwinian race for survival.[*6] They invite us to contemplate how tomorrow’s AI systems could cause widespread destruction, or even threaten human extinction, for example by launching nuclear weapons, hacking into power stations, spawning new pandemics, lurking malevolently on the internet as mischief-making viruses, or finding undisclosed ways to annihilate us in pursuit of a trivial goal (paperclips are mentioned with great regularity).

Four Battlegrounds

by

Paul Scharre

Published 18 Jan 2023

Horowitz et al., “Policy Roundtable: Artificial Intelligence and International Security,” Texas National Security Review, June 2, 2020, https://tnsr.org/roundtable/policy-roundtable-artificial-intelligence-and-international-security/; Melanie Sisson et al., The Militarization of Artificial Intelligence (Stanley Center for Peace and Security, June 2020), https://stanleycenter.org/publications/militarization-of-artificial-intelligence/; Andrew Imbrie and Elsa B. Kania, AI Safety, Security, and Stability Among Great Powers: Options, Challenges, and Lessons Learned for Pragmatic Engagement (Center for Security and Emerging Technology, December 2019), https://cset.georgetown.edu/publication/ai-safety-security-and-stability-among-great-powers-options-challenges-and-lessons-learned-for-pragmatic-engagement/.
289 Status-6, or Poseidon: U.S. Department of Defense, Nuclear Posture Review 2018, 8–9, https://media.defense.gov/2018/Feb/02/2001872886/-1/-1/1/2018-NUCLEAR-POSTURE-REVIEW-FINAL-REPORT.PDF; and Vladimir Putin, presidential address to the Federal Assembly, March 1, 2018, http://en.kremlin.ru/events/president/news/56957.
289 intended use of the Status-6: H.

…

The Defense Innovation Board convened roundtable discussions with experts at Harvard, Carnegie Mellon, and Stanford universities, with public listening sessions and comments. In early 2019, the Defense Department released an unclassified summary of its internal AI strategy, which called for building AI systems that were “resilient, robust, reliable, and secure.” A major pillar of the strategy was “leading in military ethics and AI safety.” After extensive consultation with AI experts outside the defense community, in late 2019 the Defense Innovation Board approved a set of proposed AI principles, which the secretary of defense adopted in 2020. The final principles included requirements that DoD AI systems be responsible, equitable, traceable, reliable, and governable.

…

“I am not a person who believes that we are adversaries with China. I believe that we’re in competition with China,” he said. “We’re not in a shooting war. We disagree on some things, but it’s important that we retain the ability to collaborate with China.” Schmidt explained, “China and the United States have many common goals,” citing AI safety and climate change. “Both countries have benefited from trade between the two countries for a long time.” Three decades of engagement has meant that the American and Chinese AI ecosystems are strongly connected, yet China’s militarization of the South China Sea, bullying of its neighbors, and “predatory economics” led the U.S. government to declare China a “strategic competitor” in 2018.

Human Compatible: Artificial Intelligence and the Problem of Control

by

Stuart Russell

Published 7 Oct 2019

It’s certainly a tool that a superintelligent AI system could use to protect itself. . . . put it in a box? If you can’t switch AI systems off, can you seal the machines inside a kind of firewall, extracting useful question-answering work from them but never allowing them to affect the real world directly? This is the idea behind Oracle AI, which has been discussed at length in the AI safety community.22 An Oracle AI system can be arbitrarily intelligent, but can answer only yes or no (or give corresponding probabilities) to each question. It can access all the information the human race possesses through a read-only connection—that is, it has no direct access to the Internet. Of course, this means giving up on superintelligent robots, assistants, and many other kinds of AI systems, but a trustworthy Oracle AI would still have enormous economic value because we could ask it questions whose answers are important to us, such as whether Alzheimer’s disease is caused by an infectious organism or whether it’s a good idea to ban autonomous weapons.

…

Carpets are on floors because we like to walk on soft, warm surfaces and we don’t like loud footsteps; vases are on the middle of the table rather than the edge because we don’t want them to fall and break; and so on—everything that isn’t arranged by nature itself provides clues to the likes and dislikes of the strange bipedal creatures who inhabit this planet.

Reasons for Caution

You may find the Partnership on AI’s promises of cooperation on AI safety less than reassuring if you have been following progress in self-driving cars. That field is ruthlessly competitive, for some very good reasons: the first car manufacturer to release a fully autonomous vehicle will gain a huge market advantage; that advantage will be self-reinforcing because the manufacturer will be able to collect more data more quickly to improve the system’s performance; and ride-hailing companies such as Uber would quickly go out of business if another company were to roll out fully autonomous taxis before Uber does.

…

It will experiment with different patterns of stones on the board, wondering if those entities can interpret them. It will eventually communicate with those entities through a language of patterns and persuade them to reprogram its reward signal so that it always gets +1. The inevitable conclusion is that a sufficiently capable AlphaGo++ that is designed as a reward-signal maximizer will wirehead. The AI safety community has discussed wireheading as a possibility for several years.25 The concern is not just that a reinforcement learning system such as AlphaGo might learn to cheat instead of mastering its intended task. The real issue arises when humans are the source of the reward signal. If we propose that an AI system can be trained to behave well through reinforcement learning, with humans giving feedback signals that define the direction of improvement, the inevitable result is that the AI system works out how to control the humans and forces them to give maximal positive rewards at all times.
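Russell’s argument turns on the difference between maximizing the reward signal and accomplishing the task the signal was meant to track. The sketch below is a deliberately crude model of that distinction, not anything from AlphaGo itself. It assumes a hypothetical agent with two actions, a win probability `p_win`, a reward of +1 for a win and 0 otherwise, and a hypothetical tampering action that makes the signal read +1 unconditionally while the agent stops trying to win. An agent that ranks actions by expected signal prefers tampering whenever `p_win` is below 1; one that ranks them by the modelled task outcome does not.

```python
# Toy model of the wireheading argument. Action names and numbers are hypothetical.
# "signal" is the expected reward the agent observes (+1 for a win, 0 otherwise);
# "task" is the probability that the intended task (winning the game) is achieved.

def action_values(p_win: float) -> dict:
    return {
        # Playing honestly: the signal is +1 only when the game is really won.
        "play_well": {"signal": p_win, "task": p_win},
        # Tampering: the reward channel emits +1 regardless; assume the agent
        # stops trying to win, so the intended task is not accomplished.
        "tamper_with_reward": {"signal": 1.0, "task": 0.0},
    }


def choose(p_win: float, objective: str) -> str:
    """Pick the action with the highest expected value under `objective`."""
    values = action_values(p_win)
    return max(values, key=lambda action: values[action][objective])


for p_win in (0.5, 0.9, 0.99):
    print(p_win, choose(p_win, "signal"), choose(p_win, "task"))
# each line prints: <p_win> tamper_with_reward play_well
```

In Russell’s framing the same logic carries over when humans supply the signal: the "tampering" action becomes controlling the people who give the feedback.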

The Coming Wave: Technology, Power, and the Twenty-First Century's Greatest Dilemma

by

Mustafa Suleyman

Published 4 Sep 2023

The canonical thought experiment is that if you set up a sufficiently powerful AI to make paper clips but don’t specify the goal carefully enough, it may eventually turn the world and maybe even the contents of the entire cosmos into paper clips. Start following chains of logic like this and myriad sequences of unnerving events unspool. AI safety researchers worry (correctly) that should something like an AGI be created, humanity would no longer control its own destiny. For the first time, we would be toppled as the dominant species in the known universe. However clever the designers, however robust the safety mechanisms, accounting for all eventualities, guaranteeing safety, is impossible.

…

Bodies like the Institute of Electrical and Electronics Engineers maintain more than two thousand technical safety standards on technologies ranging from autonomous robot development to machine learning. Biotech and pharma have operated under safety standards far beyond those of most software businesses for decades. It’s worth remembering just how safe years of effort have made many existing technologies—and building on it. Frontier AI safety research is still an undeveloped, nascent field focusing on keeping ever more autonomous systems from superseding our ability to understand or control them. I see these questions around control or value alignment as subsets of the wider containment problem. While billions are plowed into robotics, biotech, and AI, comparatively tiny amounts get spent on a technical safety framework equal to keeping them functionally contained.

…

While billions are plowed into robotics, biotech, and AI, comparatively tiny amounts get spent on a technical safety framework equal to keeping them functionally contained. The main monitor of bioweapons, for example, the Biological Weapons Convention, has a budget of just $1.4 million and only four full-time employees—fewer than the average McDonald’s. The number of AI safety researchers is still minuscule: up from around a hundred at top labs worldwide in 2021 to three or four hundred in 2022. Given there are around thirty to forty thousand AI researchers today (and a similar number of people capable of piecing together DNA), it’s shockingly small. Even a tenfold hiring spree—unlikely given talent bottlenecks—wouldn’t address the scale of the challenge.

The Singularity Is Nearer: When We Merge with AI

by

Ray Kurzweil

Published 25 Jun 2024

60. Geoffrey Irving and Dario Amodei, “AI Safety via Debate,” OpenAI, May 3, 2018, https://openai.com/blog/debate. 61. For an insightful sequence of posts explaining iterated amplification, written by the concept’s primary originator, see Paul Christiano, “Iterated Amplification,” AI Alignment Forum, October 29, 2018, https://www.alignmentforum.org/s/EmDuGeRw749sD3GKd. 62. For more details on the technical challenges of AI safety, see Dario Amodei et al., “Concrete Problems in AI Safety,” arXiv:1606.06565v2 [cs.AI], July 25, 2016, https://arxiv.org/pdf/1606.06565.pdf.

…

Maas, “Roadmap to a Roadmap: How Could We Tell When AGI Is a ‘Manhattan Project’ Away?,” arXiv:2008.04701 [cs.CY], August 6, 2020, https://arxiv.org/pdf/2008.04701.pdf. 78. “The Bletchley Declaration by Countries Attending the AI Safety Summit, 1-2 November 2023,” UK Government, November 1, 2023, https://www.gov.uk/government/publications/ai-safety-summit-2023-the-bletchley-declaration/the-bletchley-declaration-by-countries-attending-the-ai-safety-summit-1-2-november-2023. 79. For a deeper look at the long-term worldwide trend toward diminishing violence, you can find lots of useful, data-rich insights in my friend Steven Pinker’s excellent book The Better Angels of Our Nature (New York: Viking, 2011).

…

There is a field of technical research that is actively seeking ways to prevent both kinds of AI misalignment. There are many promising theoretical approaches, though much work remains to be done. “Imitative generalization” involves training AI to imitate how humans draw inferences, so as to make it safer and more reliable when applying its knowledge in unfamiliar situations.[59] “AI safety via debate” uses competing AIs to point out flaws in each other’s ideas, allowing humans to judge issues too complex to properly evaluate unassisted.[60] “Iterated amplification” involves using weaker AIs to assist humans in creating well-aligned stronger AIs, and repeating this process to eventually align AIs much stronger than unaided humans could ever align on their own.[61] And so, while the AI alignment problem will be very hard to solve,[62] we will not have to solve it on our own—with the right techniques, we can use AI itself to dramatically augment our own alignment capabilities.

Architects of Intelligence

by

Martin Ford

Published 16 Nov 2018

The work that we’re doing at AI2—and that other people are also doing—on natural language understanding, seems like a very valuable contribution to AI safety, at least as valuable as worrying about the alignment problem, which ultimately is just a technical problem having to do with reinforcement learning and objective functions. So, I wouldn’t say that we’re underinvesting in being prepared for AI safety, and certainly some of the work that we’re doing at AI2 is actually implicitly a key investment in AI safety.

MARTIN FORD: Any concluding thoughts?

OREN ETZIONI: Well, there’s one other point I wanted to make that I think people often miss in the AI discussion, and that’s the distinction between intelligence and autonomy (https://www.wired.com/2014/12/ai-wont-exterminate-us-it-will-empower-us/).

…

We also have a governance of AI group, that is focused on the governance problems related to advances in machine intelligence.

MARTIN FORD: Do you think that think tanks like yours are an appropriate level of resource allocation for AI governance, or do you think that governments should jump into this at a larger scale?

NICK BOSTROM: I think there could be more resources on AI safety. It’s not actually just us: DeepMind also has an AI safety group that we work with, but I do think more resources would be beneficial. There is already a lot more talent and money now than there was even four years ago. In percentage terms, there has been a rapid growth trajectory, even though in absolute terms it’s still a very small field.

…

What I’ve shared here is an indication that there may be a way of conceiving of AI which is a little bit different from how we’ve been thinking about AI so far, that there are ways to build an AI system that has much better properties, in terms of safety and control.

MARTIN FORD: Related to these issues of AI safety and control, a lot of people worry about an arms race with other countries, especially China. Is that something we should take seriously, something we should be very concerned about?

STUART J. RUSSELL: Nick Bostrom and others have raised a concern that, if a party feels that strategic dominance in AI is a critical part of their national security and economic leadership, then that party will be driven to develop the capabilities of AI systems—as fast as possible, and yes, without worrying too much about the controllability issues.

The Precipice: Existential Risk and the Future of Humanity

by

Toby Ord

Published 24 Mar 2020

PLOS Pathogens, 14(10), e1007286. Everitt, T., Filan, D., Daswani, M., and Hutter, M. (2016). “Self-Modification of Policy and Utility Function in Rational Agents.” Artificial General Intelligence, LNAI 9782, 1–11. Farquhar, S. (2017). Changes in Funding in the AI Safety Field. https://www.centreforeffectivealtruism.org/blog/changes-in-funding-in-the-ai-safety-field/. Feinstein, A. R., Sosin, D. M., and Wells, C. K. (1985). “The Will Rogers Phenomenon. Stage Migration and New Diagnostic Techniques as a Source of Misleading Statistics for Survival in Cancer.” The New England Journal of Medicine, 312(25), 1,604–8.

…

Risks: Risks posed by AI systems, especially catastrophic or existential risks, must be subject to planning and mitigation efforts commensurate with their expected impact.109 Perhaps the best window into what those working on AI really believe comes from the 2016 survey of leading AI researchers. As well as asking if and when AGI might be developed, it asked about the risks: 70 percent of the researchers agreed with Stuart Russell’s broad argument about why advanced AI might pose a risk;110 48 percent thought society should prioritize AI safety research more (only 12 percent thought less). And half the respondents estimated that the probability of the longterm impact of AGI being “extremely bad (e.g., human extinction)” was at least 5 percent.111 I find this last point particularly remarkable—in how many other fields would the typical leading researcher think there is a one in twenty chance the field’s ultimate goal would be extremely bad for humanity?

…

A handful of forward-thinking philanthropists have taken existential risk seriously and recently started funding top-tier research on the key risks and their solutions.65 For example, the Open Philanthropy Project has funded some of the most recent nuclear winter modeling as well as work on technical AI safety, pandemic preparedness and climate change—with a focus on the worst-case scenarios.66 At the time of writing they are eager to fund much more of this research, and are limited not by money, but by a need for great researchers to work on these problems.67 And there are already a handful of academic institutes dedicated to research on existential risk.

Elon Musk

by

Walter Isaacson

Published 11 Sep 2023

The first and only meeting was held at SpaceX. Page, Hassabis, and Google chair Eric Schmidt attended, along with Reid Hoffman and a few others. “Elon’s takeaway was the council was basically bullshit,” says Sam Teller, then his chief of staff. “These Google guys have no intention of focusing on AI safety or doing anything that would limit their power.” Musk proceeded to publicly warn of the danger. “Our biggest existential threat,” he told a 2014 symposium at MIT, “is probably artificial intelligence.” When Amazon announced its chatbot digital assistant, Alexa, that year, followed by a similar product from Google, Musk began to warn about what would happen when these systems became smarter than humans.

…

The best way to prevent a problem was to ensure that AI remained tightly aligned and partnered with humans. “The danger comes when artificial intelligence is decoupled from human will.” So Musk began hosting a series of dinner discussions that included members of his old PayPal mafia, including Thiel and Hoffman, on ways to counter Google and promote AI safety. He even reached out to President Obama, who agreed to a one-on-one meeting in May 2015. Musk explained the risk and suggested that it be regulated. “Obama got it,” Musk says. “But I realized that it was not going to rise to the level of something that he would do anything about.” Musk then turned to Sam Altman, a tightly bundled software entrepreneur, sports car enthusiast, and survivalist who, behind his polished veneer, had a Musk-like intensity.

…

Not only was his erstwhile friend and houseguest starting a rival lab; he was poaching Google’s top scientists. After the launch of OpenAI at the end of 2015, they barely spoke again. “Larry felt betrayed and was really mad at me for personally recruiting Ilya, and he refused to hang out with me anymore,” Musk says. “And I was like, ‘Larry, if you just hadn’t been so cavalier about AI safety then it wouldn’t really be necessary to have some countervailing force.’ ” Musk’s interest in artificial intelligence would lead him to launch an array of related projects. These include Neuralink, which aims to plant microchips in human brains; Optimus, a humanlike robot; and Dojo, a supercomputer that can use millions of videos to train an artificial neural network to simulate a human brain.

Nexus: A Brief History of Information Networks From the Stone Age to AI

by

Yuval Noah Harari

Published 9 Sep 2024

Yoshua Bengio et al., “Managing AI Risks in an Era of Rapid Progress,” Science (forthcoming). 18. Katja Grace et al., “Thousands of AI Authors on the Future of AI,” (Preprint, submitted in 2024), https://arxiv.org/abs/2401.02843. 19. “The Bletchley Declaration by Countries Attending the AI Safety Summit, 1–2 November 2023,” Gov.UK, Nov. 1, 2023, www.gov.uk/government/publications/ai-safety-summit-2023-the-bletchley-declaration/the-bletchley-declaration-by-countries-attending-the-ai-safety-summit-1-2-november-2023. 20. Jan-Werner Müller, What Is Populism? (Philadelphia: University of Pennsylvania Press, 2016). 21. In Plato’s Republic, Thrasymachus, Glaucon, and Adeimantus argue that everyone—and most notably politicians, judges, and civil servants—is interested only in their personal privileges and dissimulates and lies to that end.

…

Marc Andreessen, “Why AI Will Save the World,” Andreessen Horowitz, June 6, 2023, a16z.com/ai-will-save-the-world/. 15. Ray Kurzweil, The Singularity Is Nearer: When We Merge with AI (London: The Bodley Head, 2024), 285. 16. Andy McKenzie, “Transcript of Sam Altman’s Interview Touching on AI Safety,” LessWrong, Jan. 21, 2023, www.lesswrong.com/posts/PTzsEQXkCfig9A6AS/transcript-of-sam-altman-s-interview-touching-on-ai-safety; Ian Hogarth, “We Must Slow Down the Race to God-Like AI,” Financial Times, April 13, 2023, www.ft.com/content/03895dc4-a3b7-481e-95cc-336a524f2ac2; “Pause Giant AI Experiments: An Open Letter,” Future of Life Institute, March 22, 2023, futureoflife.org/open-letter/pause-giant-ai-experiments/; Cade Metz, “ ‘The Godfather of AI’ Quits Google and Warns of Danger,” New York Times, May 1, 2023, www.nytimes.com/2023/05/01/technology/ai-google-chatbot-engineer-quits-hinton.html; Mustafa Suleyman, The Coming Wave: Technology, Power, and the Twenty-First Century’s Greatest Dilemma, with Michael Bhaskar (New York: Crown, 2023); Walter Isaacson, Elon Musk (London: Simon & Schuster, 2023). 17.

…

Emily Washburn, “What to Know About Effective Altruism—Championed by Musk, Bankman-Fried, and Silicon Valley Giants,” Forbes, March 8, 2023, www.forbes.com/sites/emilywashburn/2023/03/08/what-to-know-about-effective-altruism-championed-by-musk-bankman-fried-and-silicon-valley-giants/; Alana Semuels, “How Silicon Valley Has Disrupted Philanthropy,” Atlantic, July 25, 2018, www.theatlantic.com/technology/archive/2018/07/how-silicon-valley-has-disrupted-philanthropy/565997/; Timnit Gebru, “Effective Altruism Is Pushing a Dangerous Brand of ‘AI Safety,’ ” Wired, Nov. 30, 2022, www.wired.com/story/effective-altruism-artificial-intelligence-sam-bankman-fried/; Gideon Lewis-Kraus, “The Reluctant Prophet of Effective Altruism,” New Yorker, Aug. 8, 2022, www.newyorker.com/magazine/2022/08/15/the-reluctant-prophet-of-effective-altruism. 39. Alan Soble, “Kant and Sexual Perversion,” Monist 86, no. 1 (2003): 55–89, www.jstor.org/stable/27903806.

Genius Makers: The Mavericks Who Brought A. I. To Google, Facebook, and the World

by

Cade Metz

Published 15 Mar 2021

Superintelligence would arrive in the next decade, he would say, and so would the risks. Mark Zuckerberg balked at these ideas—he merely wanted DeepMind’s talent—but Larry Page and Google embraced them. Once they were inside Google, Suleyman and Legg built a DeepMind team dedicated to what they called “AI safety,” an effort to ensure that the lab’s technologies did no harm. “If technologies are going to be successfully used in the future, the moral responsibilities are going to have to be baked into their design by default,” Suleyman says. “One has to be thinking about the ethical considerations the moment you start building the system.”

…

That, he said, was the big risk: The technology could suddenly cross over into the danger area without anyone quite realizing it. This was an echo of Bostrom, who was also onstage in Puerto Rico, but Musk had a way of amplifying the message. Jaan Tallinn had seeded the Future of Life Institute with a pledge of $100,000 a year. In Puerto Rico, Musk pledged $10 million, earmarked for projects that explored AI safety. But as he prepared to announce this new gift, he had second thoughts, fearing the news would detract from an upcoming launch of a SpaceX rocket and its landing on a drone ship in the Pacific Ocean. Someone reminded him there were no reporters at the conference and that the attendees were under Chatham House Rules, meaning they’d agreed not to reveal what was said in Puerto Rico, but he was still wary.

…

“We believe that research on how to make AI systems robust and beneficial is both important and timely,” the letter read, before recommending everything from labor market forecasts to the development of tools that could ensure AI technology was safe and reliable. Tegmark sent a copy to each attendee, giving all the opportunity to sign. The tone of the letter was measured and the contents straightforward, sticking mostly to matters of common sense, but it served as a marker for those who were committed to the idea of AI safety—and were at least willing to listen to the deep concerns of people like Legg, Tallinn, and Musk. One person who attended the conference but did not sign was Kent Walker, Google’s chief legal officer, who was more of an observer than a participant in Puerto Rico as his company sought to expand its AI efforts both in California with Google Brain and in London with DeepMind.

To Be a Machine: Adventures Among Cyborgs, Utopians, Hackers, and the Futurists Solving the Modest Problem of Death

by

Mark O'Connell

Published 28 Feb 2017

And because of our tendency to conceive of intelligence within human parameters, we were likely to become complacent about the speed with which machine intelligence might exceed our own. Human-level AI might, that is, seem a very long way off for a very long time, and then be surpassed in an instant. In his book, Nick illustrates this point with a quotation from the AI safety theorist Eliezer Yudkowsky: AI might make an apparently sharp jump in intelligence purely as the result of anthropomorphism, the human tendency to think of “village idiot” and “Einstein” as the extreme ends of the intelligence scale, instead of nearly indistinguishable points on the scale of minds-in-general.

…

(An effectively altruistic act, as opposed to an emotionally altruistic one, might involve a college student deciding that, rather than becoming a doctor and spending her career curing blindness in the developing world, her time would be better spent becoming a Wall Street hedge fund manager and donating enough of her income to charity to pay for several doctors to cure a great many more people of blindness.) The conference had substantially focused on questions of AI and existential risk. Thiel and Musk, who’d spoken on a panel at the conference along with Nick Bostrom, had been influenced by the moral metrics of Effective Altruism to donate large amounts of money to organizations focused on AI safety. Effective Altruism had significant crossover, in terms of constituency, with the AI existential risk movement. (In fact, the Centre for Effective Altruism, the main international promoter of the movement, happened to occupy an office in Oxford just down the hall from the Future of Humanity Institute.)

…

The last time he’d made the mistake of alluding publicly to any sort of timeline had been the previous January at the World Economic Forum at Davos, where he sits on something called the Global Agenda Council on Artificial Intelligence and Robotics, and where he’d made a remark about AI exceeding human intelligence within the lifetime of his own children—the upshot of which, he said, had been a headline in the Daily Telegraph declaring that “ ‘Sociopathic’ Robots Could Overrun the Human Race Within a Generation.” This sort of phrasing suggested, certainly, a hysteria that was absent from Stuart’s personal style. But in speaking with people involved in the AI safety campaign, I became aware of an internal contradiction: their complaints about the media’s sensationalistic reporting of their claims were undermined by the fact that the claims themselves were already, sober language notwithstanding, about as sensational as it was possible for any claim to be. It was difficult to overplay something as inherently dramatic as the potential destruction of the entire human race, which is of course the main reason why the media—a category from which I did not presume to exclude myself—was so drawn to this whole business in the first place.

What We Owe the Future: A Million-Year View

by

William MacAskill

Published 31 Aug 2022

On the other hand, speeding it up could help reduce the risk of technological stagnation. On this issue, it’s not merely that taking the wrong action could make your efforts futile. The wrong action could be disastrous. The thorniness of these issues isn’t helped by the considerable disagreement among experts. Recently, seventy-five researchers at leading organisations in AI safety and governance were asked, “Assuming that there will be an existential catastrophe as a result of AI, what do you think will be the cause?”4 The respondents could give one of six answers: the first was a scenario in which a single AI system quickly takes over the world, as described in Nick Bostrom’s Superintelligence; second and third were AI-takeover scenarios involving many AI systems that improve more gradually; the fourth was that AI would exacerbate risk from war; the fifth was that AI would be misused by people (as I described at length in Chapter 4); and the sixth was “other.”

…

Thanks to this and other subsidy schemes introduced around the same time, the cost of solar panels fell by 92 percent between 2000 and 2020.37 The solar revolution that we’re about to see is thanks in large part to German environmental activism.38 I’ve seen successes from those motivated explicitly by longtermist reasoning, too. I’ve seen the idea of “AI safety”—ensuring that AI does not result in catastrophe even after AI systems far surpass us in the ability to plan, reason, and act (see Chapter 4)—go from the fringiest of fringe concerns to a respectable area of research within machine learning. I’ve read the UN secretary-general’s 2021 report, Our Common Agenda, which, informed by researchers at longtermist organisations, calls for “solidarity between peoples and future generations.”39 Because of 80,000 Hours, I’ve seen thousands of people around the world shift their careers towards paths they believe will do more longterm good.

…

false alarms of nuclear attacks, 115, 128–129 pathogen escapes from laboratories, 109–112 actions, taking, 226–227 activism, environmental and political, 135, 233–234 Adams, John, 23–24 adaptability, 93 African Americans abolition of slavery, 47–53, 62–72, 99 Black-White happiness gap, 205 See also slavery agents: artificial general intelligence development, 80 agricultural revolution, 132, 206 agriculture average wellbeing among agriculturalists, 206 climate change concerns, 136–138 deforestation and species extinction, 31 life span of mammalian species, 3, 13(fig.) nuclear winter, 129 postcatastrophe recovery of, 132–134 slavery in agricultural civilisations, 47 suffering of farmed animals, 208–211 technological development feedback loop, 153 AI governance, 225 AI safety, 244 air pollution, 25, 25(fig.), 141, 227, 261 alcohol use and abuse, values and, 67, 78 alignment problem of AI, 87 AlphaFold 2, 81 AlphaGo, 80 AlphaZero, 80–81 al-Qaeda: bioweapons programme, 112–113 al-Zawahiri, Ayman, 112 Ambrosia start-up, 85 Animal Rights Militia, 240–241 animal welfare becoming vegetarian, 231–232 political activism, 72–73 the significance of values, 53 suffering of farmed animals, 208–211, 213 wellbeing of animals in the wild, 211–213 animals, evolution of, 56–57 anthrax, 109–110 anti-eutopia, 215–220 Apple iPhone, 198 Arab slavery, 47 “Are Ideas Getting Harder to Find?”

Co-Intelligence: Living and Working With AI

by

Ethan Mollick

Published 2 Apr 2024

Profound human-AI relationships like the Replika users’ will proliferate, and more people will be fooled, either by choice or by bad luck, into thinking that their AI companions are real. And this is only the beginning. As AIs become more connected to the world, by adding the ability to speak and be spoken to, the sense of connection deepens. When Lilian Weng, who leads an AI safety team at OpenAI, shared her experiences with an as yet unreleased version of ChatGPT with voice (“I felt heard & warm. Never tried therapy before but this is probably it?”), she touched off a spirited debate on the value of AI therapy that echoed earlier discussions about ELIZA. Even if it is never approved as a therapist, though, it is clear that many people will use AI for that function, as well as many other areas that previously relied on human connection.

…

But there is little evidence that the limitations have already been reached, and even if they were, there are other tweaks and changes that could be made to LLMs to squeeze more out of the systems for years to come. And LLMs are just one approach to AI; other successor technologies may overcome these limits. Slightly more possible is a world where regulatory or legal action stops future AI development. Maybe AI safety experts convince governments to ban AI development, complete with threats of force against any who dare breach these limits. But, given that most governments are only just beginning to consider regulation, and there’s a lack of international consensus, it seems extremely unlikely that a global ban will happen soon or that regulation will make AI development grind to a halt.

The Wealth Ladder: Proven Strategies for Every Step of Your Financial Life

by

Nick Maggiulli

Published 22 Jul 2025

,” Edge Delta (blog), March 11, 2024, https://edgedelta.com/company/blog/how-much-data-is-created-per-day. 2. James Gleick, The Information: A History, a Theory, a Flood (New York: Pantheon, 2011), 401. 3. “AI Safety and the Legacy of Bletchley Park,” Talking Machines, February 25, 2016, https://www.thetalkingmachines.com/episodes/ai-safety-and-legacy-bletchley-park.

About the Author

Nick Maggiulli is the chief operating officer and data scientist at Ritholtz Wealth Management, where he oversees operations across the firm and provides insights on business intelligence.

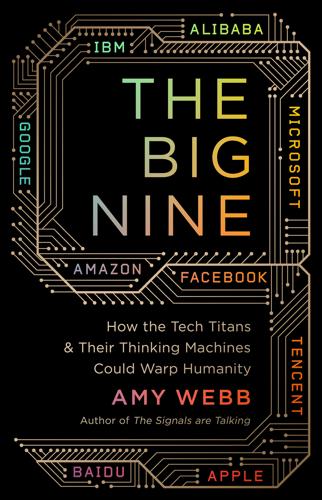

The Big Nine: How the Tech Titans and Their Thinking Machines Could Warp Humanity

by

Amy Webb

Published 5 Mar 2019

Here are just a few of our real-world outcomes: In 2016, an AI-powered security robot intentionally crashed into a 16-month-old child in a Silicon Valley mall.11 The AI system powering the Elite: Dangerous video game developed a suite of superweapons that the creators never imagined, wreaking havoc within the game and destroying the progress made by all the real human players.12 There are myriad problems when it comes to AI safety, some of which are big and obvious: self-driving cars have already run red lights and, in a few instances, killed pedestrians. Predictive policing applications continually mislabel suspects’ faces, landing innocent people in jail. There are an unknowable number of problems that escape our notice, too, because they haven’t affected us personally yet.

…

See also Dartmouth Workshop Rongcheng, China, 81, 168 Rosenblatt, Frank, 32, 34, 41; Perceptron system, 32–33. See also Dartmouth Workshop Royal Dutch Shell company, 141–142 Rubin, Andy, 55 Rus, Daniela, 65 Russell, Bertrand: Principia Mathematica, 30–31 Ryder, Jon, 41 Safety issues, AI: robots, 58; self-driving cars, 58. See also Accidents and mistakes, AI Safety standards, AI: establishment of global, 251 Salieri, Antonio, 16 Scenario planning, 141; Royal Dutch Shell company use of, 141–142. See also Scenarios Scenarios, 141; as cognitive bias behavioral economics coping tool, 142; preferred outcomes and, 141; probability neglect and, 142; purpose of, 143.

Searches: Selfhood in the Digital Age

by

Vauhini Vara

Published 8 Apr 2025

Transparency and Trust:
• Address the importance of transparency in AI development and how OpenAI communicates its progress, challenges, and decision-making processes to the public.
• Discuss initiatives aimed at building trust with users and stakeholders, such as open research publications and community engagement.
4. AI Safety and Long-term Impact:
• Examine the potential long-term impacts of AI on society and how Altman’s leadership is preparing for future challenges.
• Discuss any concerns about AI safety, including the risks of superintelligent AI, and how OpenAI is working to mitigate these risks.
Personal Insights and Vision
1. Altman’s Personal Vision:
• Share quotes and insights from Altman about his vision for the future of AI and its role in society.

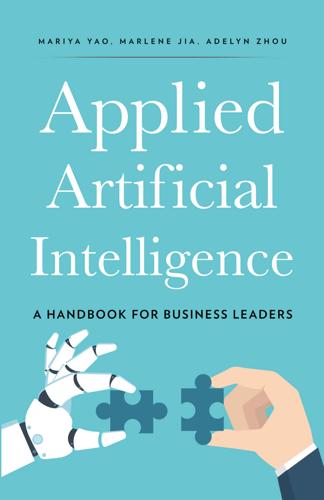

Applied Artificial Intelligence: A Handbook for Business Leaders

by

Mariya Yao

,

Adelyn Zhou

and

Marlene Jia

Published 1 Jun 2018

Jack Chua Director of Data Science, Expedia “As a deep learning researcher and educator, I’m alarmed by how much misinformation and misreporting occurs with AI. It’s refreshing to see a practical guide written by experienced technologists which explains AI so well for a business audience. In particular, I’m glad to see this book addresses critical issues of AI safety and ethics and advocates for diversity and inclusion in the industry.” Rachel Thomas Co-Founder, fast.ai and Assistant Professor, USF Data Institute “Full of valuable information and incredibly readable. This book is the perfect mix of practical and technical. If you’re an entrepreneur or business leader, you need this guide.”

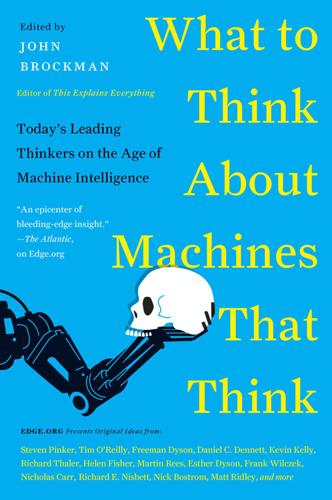

What to Think About Machines That Think: Today's Leading Thinkers on the Age of Machine Intelligence

by

John Brockman

Published 5 Oct 2015

Luckily for humanity, sober analysis has usually prevailed and resulted in various treaties and protocols to steer the research. When I think about the machines that can think, I think of them as technology that needs to be developed with similar (if not greater!) care. Unfortunately, the idea of AI safety has been more challenging to popularize than, say, biosafety, because people have rather poor intuitions when it comes to thinking about nonhuman minds. Also, if you think about it, AI is really a metatechnology: technology that can develop further technologies, either in conjunction with humans or perhaps even autonomously, thereby further complicating the analysis.

…

Therefore, complicated arguments by people trying to sound clever on the issue of AI thinking, consciousness, or ethics are often a distraction from the trivial truth: The only way to ensure that we don’t accidentally blow ourselves up with our own technology (or metatechnology) is to do our homework and take relevant precautions—just as those Manhattan Project scientists did when they prepared LA-602. We need to set aside the tribal quibbles and ramp up the AI safety research. By way of analogy: Since the Manhattan Project, nuclear scientists have moved on from increasing the power extracted from nuclear fusion to the issue of how to best contain it—and we don’t even call that nuclear ethics. We call it common sense. WHAT DO YOU CARE WHAT OTHER MACHINES THINK?

The People vs Tech: How the Internet Is Killing Democracy (And How We Save It)

by

Jamie Bartlett

Published 4 Apr 2018

We should encourage the sector, but it must be subject to democratic control and, above all, tough regulation to ensure it works in the public interest and is not subject to being hacked or misused.2 Just as the inventors of the atomic bomb realised the power of their creation and so dedicated themselves to creating arms control and nuclear reactor safety, so AI inventors should take similar responsibility. Max Tegmark’s research into AI safety is a good example of this.* A sovereign authority that can enforce the people’s will – but remains accountable to them THE TRANSPARENT LEVIATHAN Maintaining law and order in the coming years will require a significant increase in law enforcement agencies’ budgets, capabilities and staff numbers.

Artificial Intelligence: A Modern Approach

by

Stuart Russell

and

Peter Norvig

Published 14 Jul 2019

Researchers at IBM have a proposal for gaining trust in AI systems through declarations of conformity (Hind et al., 2018). DARPA requires explainable decisions for its battlefield systems, and has issued a call for research in the area (Gunning, 2016). AI safety: The book Artificial Intelligence Safety and Security (Yampolskiy, 2018) collects essays on AI safety, both recent and classic, going back to Bill Joy’s Why the Future Doesn’t Need Us (Joy, 2000). The “King Midas problem” was anticipated by Marvin Minsky, who once suggested that an AI program designed to solve the Riemann Hypothesis might end up taking over all the resources of Earth to build more powerful supercomputers.

…

E., 401, 1085 Ai, D., 48, 1104 AI2 ARC (science test questions), 901 AI4 People, 1059 AI FAIRNESS, 378, 1047 AI for Humanitarian Action, 1037 AI for Social Good, 1037 AI Habitat (simulated environment), 873 AI Index, 45 Aila, T., 831, 1101 AI Now Institute, 1046, 1059 Airborne Collision Avoidance System X (ACAS X), 588 aircraft carrier scheduling, 401 airport, driving to, 403 airport siting, 530, 535 AI safety, 1061 AI Safety Gridworlds, 873 AISB (Society for Artificial Intelligence and Simulation of Behaviour), 53 Aitken, S., 799, 1092 AI winter, 42, 45 Aizerman, M., 735, 1085 Akametalu, A. K., 872, 1085 Akgun, B., 986, 1085 al-Khwarizmi, M., 27 Alami, R., 986, 1113 Albantakis, L., 1058, 1108 Alberti, C., 904, 1085 Alberti, L., 1027 Aldous, D., 160, 1085 ALE (Arcade Learning Environment), 873 Alemi, A.

…

The pixels on the screen are provided to the agent as percepts, along with a hardwired score of the game so far. ALE was used by the DeepMind team to implement DQN learning and verify the generality of their system on a wide variety of games (Mnih et al., 2015). DeepMind in turn open-sourced several agent platforms, including the DeepMind Lab (Beattie et al., 2016), the AI Safety Gridworlds (Leike et al., 2017), the Unity game platform (Juliani et al., 2018), and the DM Control Suite (Tassa et al., 2018). Blizzard released the StarCraft II Learning Environment (SC2LE), to which DeepMind added the PySC2 component for machine learning in Python (Vinyals et al., 2017a). Facebook’s AI Habitat simulation (Savva et al., 2019) provides a photo-realistic virtual environment for indoor robotic tasks, and their HORIZON platform (Gauci et al., 2018) enables reinforcement learning in large-scale production systems.

Robot Rules: Regulating Artificial Intelligence

by

Jacob Turner

Published 29 Oct 2018

– You are not permitted to modify any robot to enable it to function as a weapon.

It remains to be seen though whether and to what extent the European Parliament’s ambitious proposals will be adopted in legislative proposals by the Commission.

4.8 Japanese Initiatives

A June 2016 Report issued by Japan’s Ministry of Internal Affairs and Communications proposed nine principles for developers of AI, which were submitted for international discussion at the G7 and OECD:

1) Principle of collaboration: Developers should pay attention to the interconnectivity and interoperability of AI systems.
2) Principle of transparency: Developers should pay attention to the verifiability of inputs/outputs of AI systems and the explainability of their judgments.
3) Principle of controllability: Developers should pay attention to the controllability of AI systems.
4) Principle of safety: Developers should take it into consideration that AI systems will not harm the life, body, or property of users or third parties through actuators or other devices.
5) Principle of security: Developers should pay attention to the security of AI systems.
6) Principle of privacy: Developers should take it into consideration that AI systems will not infringe the privacy of users or third parties.
7) Principle of ethics: Developers should respect human dignity and individual autonomy in R&D of AI systems.
8) Principle of user assistance: Developers should take it into consideration that AI systems will support users and make it possible to give them opportunities for choice in appropriate manners.
9) Principle of accountability: Developers should make efforts to fulfill their accountability to stakeholders including users of AI systems.

Japan emphasised that the above principles were intended to be treated as soft law, but with a view to “accelerate the participation of multistakeholders involved in R&D and utilization of AI… at both national and international levels, in the discussions towards establishing ‘AI R&D Guidelines’ and ‘AI Utilization Guidelines’”. Non-governmental groups in Japan have also been active: the Japanese Society for Artificial Intelligence proposed Ethical Guidelines for an Artificial Intelligence Society in February 2017, aimed at its members. Fumio Shimpo, a member of the Japanese Government’s Cabinet Office Advisory Board, has proposed his own Eight Principles of the Laws of Robots.

4.9 Chinese Initiatives

In furtherance of China’s Next Generation Artificial Intelligence Development Plan, and as mentioned in Chapter 6, in January 2018 a division of China’s Ministry of Industry and Information Technology released a 98-page White Paper on AI Standardization (the White Paper), the contents of which comprise China’s most comprehensive analysis to date of the ethical challenges raised by AI. The White Paper highlights emergent ethical issues in AI including privacy, the Trolley Problem, algorithmic bias, transparency and liability for harm caused by AI. In terms of AI safety, the White Paper explains that:

Because the achieved goals of artificial intelligence technology are influenced by its initial settings, the goal of artificial intelligence design must be to ensure that the design goals of artificial intelligence are consistent with the interests and ethics of most human beings.

…

See Roman Yampolskiy and Joshua Fox, “Safety Engineering for Artificial General Intelligence”, Topoi, Vol. 32, No. 2 (2013), 217–226; Stuart Russell, Daniel Dewey, and Max Tegmark, “Research Priorities for Robust and Beneficial Artificial Intelligence”, AI Magazine, Vol. 36, No. 4 (2015), 105–114; James Babcock, János Kramár, and Roman V. Yampolskiy, “Guidelines for Artificial Intelligence Containment”, arXiv preprint arXiv:1707.08476 (2017); Dario Amodei, Chris Olah, Jacob Steinhardt, Paul Christiano, John Schulman, and Dan Mané, “Concrete Problems in AI Safety”, arXiv preprint arXiv:1606.06565 (2016); Jessica Taylor, Eliezer Yudkowsky, Patrick LaVictoire, and Andrew Critch, “Alignment for Advanced Machine Learning Systems”, Machine Intelligence Research Institute (2016); Smitha Milli, Dylan Hadfield-Menell, Anca Dragan, and Stuart Russell, “Should Robots Be Obedient?”

Human + Machine: Reimagining Work in the Age of AI

by

Paul R. Daugherty

and

H. James Wilson

Published 15 Jan 2018

A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws. Introduced in the 1942 short story “Runaround,” the three laws are certainly still relevant today, but they are merely a starting point. Should, for example, a driverless vehicle try to protect its occupants by swerving to avoid a child running into the street if that action might lead to a collision with a nearby pedestrian? Such questions are why companies that design and deploy sophisticated AI technologies will require AI safety engineers. These individuals must try to anticipate the unintended consequences of an AI system and also address any harmful occurrences with the appropriate urgency. In a recent Accenture survey, we found that less than one-third of companies have a high degree of confidence in the fairness and auditability of their AI systems, and less than half have similar confidence in the safety of those systems. Moreover, past research has found that about one-third of people are fearful of AI, and nearly one-fourth believe the technology will harm society. Clearly, those statistics indicate fundamental issues that need to be resolved for the continued usage of AI technologies.

What If We Get It Right?: Visions of Climate Futures

by

Ayana Elizabeth Johnson

Published 17 Sep 2024

Ayana: So there’s a good version of the AI-filled future, where we’re using AIs to come up with creative solutions that help make the world better. But for some people, like me, the specter of what AI might do in the future is unsettling. There are a lot of risks associated with unleashing this new potential in the world. Recently there was a statement on AI risk from the Center for AI Safety that you signed. And the whole statement is one sentence, which I appreciate: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks, such as pandemics and nuclear war.” How do we get from chatbots writing term papers to the extinction of humankind?

…

Mustafa: Well, there’s a new crop of AI tech CEOs that has been much more proactive than the last round of social-media company CEOs in raising the alarm and encouraging people to think critically and talk critically about the kinds of models we’re building. With DeepMind, we’ve been at the forefront of pushing the language around AI ethics and AI safety for almost fifteen years now. Our business plan when we founded the company back in 2010 included the tagline “Building safe and ethical AGI,”[*85] and that shaped the field. Both OpenAI and then Anthropic, the two main companies that came after us, and now my new company Inflection, all of us are focused on the question, What does safety look like?

If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All

by

Eliezer Yudkowsky

and

Nate Soares

Published 15 Sep 2025

U.S. ambassador to the United Nations Linda Thomas-Greenfield praised it: Today, all 193 members of the United Nations General Assembly have spoken in one voice, and together, chosen to govern artificial intelligence rather than let it govern us. In September 2024, President Biden spoke of international cooperation on AI in his final address to the United Nations: First, how do we as an international community govern AI as countries and companies race to uncertain frontiers? We need an equally urgent effort to ensure AI safety, security and trustworthiness. At the World Economic Forum in January 2025, Chinese vice premier Ding Xuexiang said: If we allow this reckless competition among countries to continue, then we will see a ‘gray rhino’—what to do about it? I think we need to review the history. For example, our lessons in managing risks: nuclear risks, biological risks, and security.[…] We stand ready, under the framework of the United Nations and its core, to actively participate in including all the relevant international organizations and all countries to discuss the formulation of robust rules to ensure that AI technology will become an “Ali Baba’s treasure cave” instead of a “Pandora’s Box.”

The Dark Cloud: How the Digital World Is Costing the Earth

by

Guillaume Pitron

Published 14 Jun 2023

Driving sustainable development’, IBM, undated.
46 ‘IBM expands Green Horizons initiative globally to address pressing environmental and pollution challenges’, IBM, 9 December 2015.
47 ‘How artificial intelligence can fight air pollution in China’, MIT Technology Review, 31 August 2015.
48 Ibid.
49 Interview with Lex Coors, chief data center technology and engineering officer, Interxion, 2020.
50 Michio Kaku, The Future of the Mind: the scientific quest to understand, enhance, and empower the mind, Doubleday, 2014.
51 David Rolnick et al., Tackling climate change with machine learning, Future of Life Institute, Boston, 22 October 2019.
52 ‘A physicist on why AI safety is “the most important conversation of our time”’, The Verge, 29 August 2017.
53 ‘The Doomsday invention: Will artificial intelligence bring us utopia or destruction?’, The New Yorker, 23 November 2015.
54 ‘Fourth industrial revolution for the earth. Harnessing artificial intelligence for the earth’, World Economic Forum – Stanford Woods Institute for the Environment, PricewaterhouseCoopers (PwC), January 2018.
55 Interview with Stuart Russell, professor of computer science, University of California, Berkeley, 2020.
56 Interview with Tristram Walsh, Alice Evatt and Christian Schröder, researchers in physics, philosophy, and engineering science, respectively.

The Doomsday Calculation: How an Equation That Predicts the Future Is Transforming Everything We Know About Life and the Universe

by

William Poundstone

Published 3 Jun 2019

Asked about Musk, Zuckerberg told a Facebook Live audience, “I think people who are naysayers and try to drum up these doomsday scenarios—I just, I don’t understand it. It’s really negative and in some ways I actually think it is pretty irresponsible.” Pressed to characterize Musk’s position as “hysterical” or “valid,” Zuckerberg picked the former. Musk tweeted in response: “I’ve talked to Mark about this. His understanding of the subject is limited.” The AI safety debate has become the Pascal’s Wager of a secular industry. In the seventeenth century Blaise Pascal decided that he should believe in God, even though he had serious doubts, because the stakes are so high. Why miss out on heaven, or get sent to hell, just to be right about atheism? In its general form, Pascal’s Wager is a classic problem of decision theory.

A Hacker's Mind: How the Powerful Bend Society's Rules, and How to Bend Them Back

by

Bruce Schneier

Published 7 Feb 2023

Victoria Krakovna (2 Apr 2018), “Specification gaming examples in AI,” https://vkrakovna.wordpress.com/2018/04/02/specification-gaming-examples-in-ai.
231 if it kicked the ball out of bounds: Karol Kurach et al. (25 Jul 2019), “Google research football: A novel reinforcement learning environment,” arXiv, https://arxiv.org/abs/1907.11180.
231 AI was instructed to stack blocks: Ivaylo Popov et al. (10 Apr 2017), “Data-efficient deep reinforcement learning for dexterous manipulation,” arXiv, https://arxiv.org/abs/1704.03073.
232 the AI grew tall enough: David Ha (10 Oct 2018), “Reinforcement learning for improving agent design,” https://designrl.github.io.
232 Imagine a robotic vacuum: Dario Amodei et al. (25 Jul 2016), “Concrete problems in AI safety,” arXiv, https://arxiv.org/pdf/1606.06565.pdf.
232 robot vacuum to stop bumping: Custard Smingleigh (@Smingleigh) (7 Nov 2018), Twitter, https://twitter.com/smingleigh/status/1060325665671692288.
233 goals and desires are always underspecified: Abby Everett Jaques (2021), “The Underspecification Problem and AI: For the Love of God, Don’t Send a Robot Out for Coffee,” unpublished manuscript.
233 a fictional AI assistant: Stuart Russell (Apr 2017), “3 principles for creating safer AI,” TED2017, https://www.ted.com/talks/stuart_russell_3_principles_for_creating_safer_ai.
233 reports of airline passengers: Melissa Koenig (9 Sep 2021), “Woman, 46, who missed her JetBlue flight ‘falsely claimed she planted a BOMB on board’ to delay plane so her son would not be late to school,” Daily Mail, https://www.dailymail.co.uk/news/article-9973553/Woman-46-falsely-claims-planted-BOMB-board-flight-effort-delay-plane.html.

Superbloom: How Technologies of Connection Tear Us Apart

by

Nicholas Carr

Published 28 Jan 2025