Alan Turing

description: British mathematician, contributions to computer science and AI


The Secret Life of Bletchley Park: The WWII Codebreaking Centre and the Men and Women Who Worked There

by

Sinclair McKay

Published 24 May 2010

But it was also at the Park that Turing was to find a rare sort of freedom, before the narrow, repressive culture of the post-war years closed in on him and apparently led to his early death. ‘Turing,’ commented Stuart Milner-Barry, ‘was a strange and ultimately a tragic figure.’ That is one view. Certainly his life was short, and it ended extremely unhappily. But in a number of other senses, Alan Turing was an inspirational figure. ‘Alan Turing was unique,’ recalled Peter Hilton. ‘What you realise when you get to know a genius well is that there is all the difference between a very intelligent person and a genius. With very intelligent people, you talk to them, they come out with an idea, and you say to yourself, if not to them, I could have had that idea.

…

As a result of the ‘sheet’ system and the ‘cillis’, and thanks to the crucial involvement of the Polish codebreakers, Bletchley Park’s first break into current military Enigma traffic – as opposed to old messages – came in January 1940. Alan Turing had been sent to Paris to confer with the Poles about such matters as wheel changes in the Enigma machine, taking with him some of the Zygalski sheets. In those few days, they managed to crack an Enigma key via this method. One of the Polish mathematicians, Marian Rejewski, remembered his dealings with Turing: ‘We treated Alan Turing as a younger colleague who had specialised in mathematical logic and was just starting out in cryptology.’7 At the time, he was not aware that Turing had quietly been making some astounding cryptographical leaps off his own back.

…

Now, according to Jack Copeland, convoy re-routings ‘based on Hut 8 decrypts were so successful that for the first twenty-three days [of June], the north Atlantic U-boats made not a single sighting of a convoy’.2 In the midst of these events, Joan Murray gave a short description of Alan Turing, and his own gentle abstraction. ‘I can remember Alan Turing coming in as usual for a day’s leave,’ she wrote, ‘doing his own mathematical research at night, in the warmth and light of the office, without interrupting the routine of daytime sleep.’ Another veteran recalls Turing’s abstraction when being congratulated for his work by a senior ranking officer, while later, Hugh Alexander was to say of Turing’s role that ‘Turing thought it [naval Enigma] could be broken because it would be so interesting to break it … Turing first got interested in the problem for the typical reason that “no one else was doing anything about it and I could have it to myself.”’3 No better example then, of the partnership between unfettered mathematical inquiry and the national interest.

The Man Who Invented the Computer

by

Jane Smiley

Published 18 Oct 2010

He also moved his office from the mathematics department to the new physics building, which was more spacious and more practically oriented. According to Burton, he felt that mathematics as a field was moving in the wrong direction—toward greater and greater abstraction—while physicists continued to be interested in concrete problems. In the meantime, Alan Turing was wrestling with similar dissatisfactions. Alan Turing’s life at Sherborne was punctuated at the end with tragedy—in the winter of his last year (1930), his dearest friend, Christopher Morcom, died of tuberculosis. Morcom, slightly older and gifted with the star power that eluded Turing, had won many prizes at Sherborne, and then a scholarship to Trinity College.

…

Turing also began thinking again about the Liverpool tide-predicting machine. The machine Alan Turing was thinking of (and received forty pounds sterling to develop) would use weights and counterweights attached to rotating gears to set up problems. Their solutions would be measured by a comparison of weights—an analog idea. Turing and a colleague worked on this machine in their office at Cambridge through the summer of 1939, but in the fall, after the German invasion of Poland, Turing went to Bletchley Park to aid in the breaking of the Enigma. Yet another, and still more obscure, inventor of the computer, one whom Alan Turing would soon know very well, was Tommy Flowers.

…

Watson, Jr., later said, “If Aiken and my father had had revolvers they would both have been dead.” Hard feelings lingered for years afterward. Alan Turing is now a famous man—the subject of biographies, papers, an opera, and at least one play, but his work at Bletchley Park breaking the Enigma code did not come to light until the 1970s, and then, at first, only by means of popular books that did not actually mention him, or mentioned him in cryptic ways (F. W. Winterbotham, The Ultra Secret, 1974; A. Cave Brown, Bodyguard of Lies, 1975), or in specialized publications that did mention him directly (Brian Randell, “On Alan Turing and the Origins of Digital Computers,” 1972; Brian Randell, editor, The Origins of Digital Computers: Selected Papers, 1973).

Turing's Cathedral

by

George Dyson

Published 6 Mar 2012

Good to Sara Turing, December 9, 1956, AMT; Robin Gandy, “The Confluence of Ideas in 1936,” in Rolf Herken, ed., The Universal Turing Machine: A Half-Century Survey (Oxford: Oxford University Press, 1988), p. 85. 11. Alan Turing, “On Computable Numbers, with an Application to the Entscheidungsproblem,” Proceedings of the London Mathematical Society, ser. 2, vol. 42 (1936–1937): 230. 12. Ibid., p. 231. 13. Ibid., p. 250. 14. Ibid., p. 241. 15. Newman, “Max Newman—Mathematician, Codebreaker, and Computer Pioneer,” p. 178; Max Newman to Alonzo Church, May 31, 1936, in Andrew Hodges, Alan Turing: The Enigma (New York: Simon and Schuster, 1983), pp. 111–12. 16. Alan Turing to Sara Turing, October 6, 1936, AMT; Alan Turing to Sara Turing, February 22, 1937, AMT. 17.

…

Gladwin, June 18, 2002, in “Cryptanalytic Co-operation Between the UK and the USA,” in Christof Teuscher, ed., Alan Turing: Life and Legacy of a Great Thinker (New York: Springer-Verlag, 2002), p. 472. 35. John R. Womersley, Mathematics Division, National Physical Laboratory, “A.C.E. Project: Origin and Early History,” November 26, 1946, AMT. 36. Ibid. 37. Ibid. 38. Max Newman to John von Neumann, February 8, 1946, VNLC. 39. Alan Turing, “Report on visit to U.S.A., January 1st–20th, 1947,” AMT. 40. Sara Turing, Alan M. Turing, p. 56. 41. Alan Turing, “Proposed Electronic Calculator,” n.d., ca. 1946, p. 19, AMT. 42. Sara Turing, Alan M. Turing, p. 78. 43. Alan Turing, “Proposed Electronic Calculator,” p. 47; Alan Turing, “Lecture to the London Mathematical Society on 20 February 1947,” p. 9. 44.

…

Herman Goldstine, interview with Nancy Stern; Julian Bigelow, interview with Nancy Stern. 22. Julian Bigelow, interview with Nancy Stern. 23. Malcolm MacPhail to Andrew Hodges, December 17, 1977, in Hodges, Alan Turing, p. 138. 24. Turing, “Systems of Logic Based on Ordinals,” p. 161. 25. Ibid., pp. 172–73. 26. Ibid., pp. 214–15. 27. Ibid., p. 215. 28. Alan Turing to Sara Turing, October 14, 1936, AMT. 29. Alan Turing to Philip Hall, n.d., ca. 1938, AMT. 30. I. J. Good, “Pioneering Work on Computers at Bletchley,” in Metropolis, Howlett, and Rota, eds., A History of Computing in the Twentieth Century, p. 35. 31.

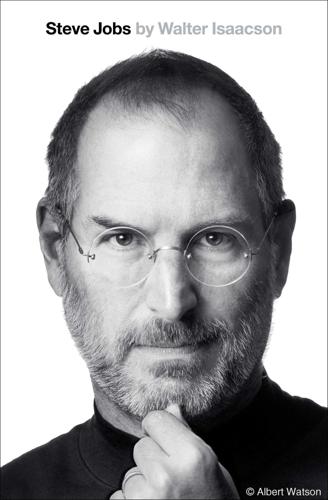

The Innovators: How a Group of Inventors, Hackers, Geniuses and Geeks Created the Digital Revolution

by

Walter Isaacson

Published 6 Oct 2014

Turing, published in 2012); Simon Lavington, editor, Alan Turing and His Contemporaries (BCS, 2012). 2. John Turing in Sara Turing, Alan M. Turing, 146. 3. Hodges, Alan Turing, 590. 4. Sara Turing, Alan M. Turing, 56. 5. Hodges, Alan Turing, 1875. 6. Alan Turing to Sara Turing, Feb. 16, 1930, Turing archive; Sara Turing, Alan M. Turing, 25. 7. Hodges, Alan Turing, 2144. 8. Hodges, Alan Turing, 2972. 9. Alan Turing, “On Computable Numbers,” Proceedings of the London Mathematical Society, read on Nov. 12, 1936. 10. Alan Turing, “On Computable Numbers,” 241. 11. Max Newman to Alonzo Church, May 31, 1936, in Hodges, Alan Turing, 3439; Alan Turing to Sara Turing, May 29, 1936, Turing Archive. 12.

…

See also “The Chinese Room Argument,” The Stanford Encyclopedia of Philosophy, http://plato.stanford.edu/entries/chinese-room/. 96. Hodges, Alan Turing, 11305; Max Newman, “Alan Turing, An Appreciation,” the Manchester Guardian, June 11, 1954. 97. M. H. A. Newman, Alan M. Turing, Sir Geoffrey Jefferson, and R. B. Braithwaite, “Can Automatic Calculating Machines Be Said to Think?” 1952 BBC broadcast, reprinted in Stuart Shieber, editor, The Turing Test: Verbal Behavior as the Hallmark of Intelligence (MIT, 2004); Hodges, Alan Turing, 12120. 98. Hodges, Alan Turing, 12069. 99. Hodges, Alan Turing, 12404. For discussions of Turing’s suicide and character, see Robin Gandy, unpublished obituary of Alan Turing for the Times, and other items in the Turing Archives, http://www.turingarchive.org/.

…

Maurice Wilkes, “How Babbage’s Dream Came True,” Nature, Oct. 1975. 86. Hodges, Alan Turing, 10622. 87. Dyson, Turing’s Cathedral, 2024. See also Goldstine, The Computer from Pascal to von Neumann, 5376. 88. Dyson, Turing’s Cathedral, 6092. 89. Hodges, Alan Turing, 6972. 90. Alan Turing, “Lecture to the London Mathematical Society,” Feb. 20, 1947, available at http://www.turingarchive.org/; Hodges, Alan Turing, 9687. 91. Dyson, Turing’s Cathedral, 5921. 92. Geoffrey Jefferson, “The Mind of Mechanical Man,” Lister Oration, June 9, 1949, Turing Archive, http://www.turingarchive.org/browse.php/B/44. 93. Hodges, Alan Turing, 10983. 94. For an online version, see http://loebner.net/Prizef/TuringArticle.html. 95.

Einstein's Fridge: How the Difference Between Hot and Cold Explains the Universe

by

Paul Sen

Published 16 Mar 2021

By measuring how rapidly the cells reproduce: “Minimum Energy of Computing, Fundamental Considerations” by Victor Zhirnov, Ralph Cavin, and Luca Gammaitoni, chapter from the book ICT—Energy—Concepts Towards Zero—Power Information and Communication Technology. Chapter Eighteen: The Mathematics of Life a mathematical model: From “The Chemical Basis of Morphogenesis” by Alan Turing, Philosophical Transactions of the Royal Society of London, Series B 237 (1952–54). He is best known for his pivotal role: See a range of biographies including The Man Who Knew Too Much: Alan Turing and the Invention of the Computer by David Leavitt and Alan Turing: The Enigma by Andrew Hodges. “There should be no question in”: See Cryptographic History of Work on the German Naval Enigma by Hugh Alexander. rightly celebrated: For example, Breaking the Code, a play by Hugh Whitmore; Britain’s Greatest Codebreaker, broadcast on UK Channel 4; and The Imitation Game, film starring Benedict Cumberbatch.

…

Willard Gibbs, vols. 1 and 2 The Second Physicist: On the History of Theoretical Physics in Germany by Christa Jungnickel and Russell McCormmach Willard Gibbs by Muriel Rukeyser Part Three: The Consequences of Thermodynamics—Chapters Thirteen to Nineteen Alan Turing: The Enigma by Andrew Hodges Alan Turing: The Enigma Man by Nigel Cawthorne Alan Turing: The Life of a Genius by Dermot Turing The Black Hole War: My Battle with Stephen Hawking to Make the World Safer for Quantum Mechanics by Leonard Susskind Black Holes and Time Warps: Einstein’s Outrageous Legacy by Kip S. Thorne A Brief History of Time: From the Big Bang to Black Holes by Stephen Hawking The Bumpy Road: Max Planck from Radiation Theory to the Quantum, 1896–1906 by Massimiliano Badino Einstein: His Life and Universe by Walter Isaacson Einstein and the Quantum: The Quest of the Valiant Swabian by A.

…

There was another consequence of working on SIGSALY. For in the later months of 1942 on into 1943, Shannon met, almost daily, the one other person in the world in the fields of cryptography, communication, and computing who was his equal and his intellectual soul mate, the great British mathematician and code breaker Alan Turing. By late 1942, Alan Turing had already played a pivotal role in helping the British crack Enigma, the encryption system used by the Germans to protect their military communications, thereby establishing his reputation as Britain’s leading cryptographer. So, when the American intelligence authorities informed their British counterparts about SIGSALY, they sent Turing to Bell Labs to vet it.

Darwin Among the Machines

by

George Dyson

Published 28 Mar 2012

.: Open Court, 1902), 254. 53. Leibniz to Caroline, Princess of Wales, ca. 1716, in Alexander, Correspondence, 191. CHAPTER 4 1. Alan Turing, “Computing Machinery and Intelligence,” Mind 59 (October 1950): 443. 2. A. K. Dewdney, The Turing Omnibus (Rockville, Md.: Computer Science Press, 1989), 389. 3. Robin Gandy, “The Confluence of Ideas in 1936,” in Rolf Herken, ed., The Universal Turing Machine: A Half-century Survey (Oxford: Oxford University Press, 1988), 85. 4. Alan Turing, “On Computable Numbers, with an Application to the Entscheidungsproblem,” Proceedings of the London Mathematical Society, 2d ser. 42 (1936–1937); reprinted, with corrections, in Martin Davis, ed., The Undecidable (Hewlett, N.Y.: Raven Press, 1965), 117. 5. Ibid., 136. 6. Kurt Gödel, 1946, “Remarks Before the Princeton Bicentennial Conference on Problems in Mathematics,” reprinted in Davis, The Undecidable, 84. 7. W.

…

Hinsley and Alan Stripp, eds., Codebreakers: The Inside Story of Bletchley Park, 2d ed. (Oxford: Clarendon Press, 1994), 164. 30. Hodges, Turing, 278. 31. Irving J. Good, “Turing and the Computer,” review of Alan Turing: The Enigma, by Andrew Hodges, Nature 307 (1 February 1984): 663. 32. Brian Randell, “The Colossus,” in Metropolis, Howlett, and Rota, History of Computing, 78. 33. Hilton, “Reminiscences,” 293. 34. Alan Turing, “Proposal for the Development in the Mathematics Division of an Automatic Computing Engine (ACE),” reprinted in B. E. Carpenter and R. W. Doran, eds., A. M. Turing’s A.C.E. Report of 1946 and Other Papers, Charles Babbage Reprint Series for the History of Computing, vol. 10 (Cambridge: MIT Press, 1986), 20–105. 35. Hodges, Turing, 307. 36. Carpenter and Doran, Turing’s A.C.E.

…

Report, 2. 37. Sara Turing, Alan M. Turing (Cambridge: W. Heffer & Sons, 1959), 78. 38. M. H. A. Newman, quoted in Good, “Turing and the Computer,” 663. 39. Alan Turing, “Lecture to the London Mathematical Society on 20 February 1947,” in Carpenter and Doran, Turing’s A.C.E. Report, 112. 40. Ibid., 106. 41. J. H. Wilkinson, “Turing’s Work at the National Physical Laboratory,” in Metropolis, Howlett, and Rota, History of Computing, 111. 42. Alan Turing, “Intelligent Machinery,” report submitted to the National Physical Laboratory, 1948, in Donald Michie, ed., Machine Intelligence, vol. 5 (1970), 3. 43. Turing, “Lecture,” 124. 44. Turing, “Intelligent Machinery,” 4. 45. Turing, “Lecture,” 123. 46. Turing, “Intelligent Machinery,” 9. 47. Ibid., 23. 48. Turing, “Computing Machinery,” 456. 49. Turing, “Intelligent Machinery,” 21–22. 50. Turing, “Systems of Logic Based on Ordinals,” Proceedings of the London Mathematical Society, 2d ser. 45 (1939); reprinted in Davis, The Undecidable, 209. 51. John von Neumann, 1948, “The General and Logical Theory of Automata,” in Lloyd A.

Turing's Vision: The Birth of Computer Science

by

Chris Bernhardt

Published 12 May 2016

I also thank Marie Lee, Kathleen Hensley, Virginia Crossman, and everyone at the MIT Press for their encouragement and help in transforming my rough proposal into this current book. Introduction Several biographies of his life have been published. He has been portrayed on stage by Derek Jacobi and in film by Benedict Cumberbatch. Alan Turing, if not famous, is certainly well known. Many people now know that his code breaking work during World War II played a pivotal role in the defeat of Germany. They know of his tragic death by cyanide, and perhaps of the test he devised for determining whether computers can think. Slightly less well known is the fact that the highest award in computer science is the ACM A.M.

…

We conclude with the text of Gordon Brown’s apology on behalf of the British government. 1 Background “Mathematics, rightly viewed, possesses not only truth, but supreme beauty — a beauty cold and austere, like that of sculpture, without appeal to any part of our weaker nature, without the gorgeous trappings of painting or music, yet sublimely pure, and capable of a stern perfection such as only the greatest art can show.” Bertrand Russell1 In 1935, at the age of 22, Alan Turing was elected a Fellow at King’s College, Cambridge. He had just finished his undergraduate degree in mathematics. He was bright and ambitious. As an undergraduate he had proved the Central Limit Theorem, probably the most fundamental result in statistics. This theorem explains the ubiquity of the normal distribution and why it occurs in so many guises.
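The Central Limit Theorem says, roughly, that a suitably standardized sum of many independent random variables with finite variance is approximately normally distributed, whatever the distribution of the individual terms. The short simulation below is only an illustration of that statement, not a reconstruction of Turing's proof. The choice of Uniform(0, 1) summands, 30 terms per sum and the helper names `standardized_sums` and `fraction_within` are assumptions made for this sketch.

```python
import random
import statistics


def standardized_sums(n_terms: int, n_samples: int, seed: int = 0) -> list[float]:
    """Sum n_terms independent Uniform(0, 1) draws, n_samples times, and standardize.

    Each Uniform(0, 1) term has mean 1/2 and variance 1/12, so a sum of
    n_terms of them has mean n_terms / 2 and variance n_terms / 12.
    """
    rng = random.Random(seed)  # seeded so the run is reproducible
    mean = n_terms / 2
    sd = (n_terms / 12) ** 0.5
    return [
        (sum(rng.random() for _ in range(n_terms)) - mean) / sd
        for _ in range(n_samples)
    ]


def fraction_within(values: list[float], k: float) -> float:
    """Fraction of values lying in the closed interval [-k, k]."""
    return sum(1 for v in values if -k <= v <= k) / len(values)


if __name__ == "__main__":
    z = standardized_sums(n_terms=30, n_samples=20_000)
    # A standard normal puts about 0.683 of its mass within 1 and 0.954 within 2.
    print(round(statistics.fmean(z), 3), round(statistics.pstdev(z), 3))
    print(round(fraction_within(z, 1.0), 3), round(fraction_within(z, 2.0), 3))
```

Even though each individual term is flat rather than bell-shaped, the standardized sums end up with mean near 0, standard deviation near 1, and roughly the 68/95 spread of a normal distribution.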

…

This is what we do in the next chapter where we allow the machine to write on the tape. This seemingly small change gives rise to an immense change in computational power. 4 Turing Machines “The idea behind digital computers may be explained by saying that these machines are intended to carry out any operations which could be done by a human computer.”1 Alan Turing We now return to Turing at Cambridge in 1935. He wanted to prove Hilbert wrong by constructing a decision problem that was beyond the capability of any algorithm to answer correctly in every case. Since there was no definition of what it meant for a procedure to be an algorithm, his first step was to define this clearly.
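To make "a machine that can write on the tape" concrete, here is a minimal simulator sketch of a one-tape Turing machine: a finite table of rules says, for each current state and symbol read, what to write, which way to move the head, and which state to enter next. The rule encoding, the blank symbol `_`, the function name `run_turing_machine` and the example binary-increment machine are all assumptions made for illustration; they are not taken from Turing's paper.

```python
from collections import defaultdict

BLANK = "_"

# Hypothetical encoding: (state, symbol read) -> (symbol to write, head move, next state),
# where the head move is -1 (left), 0 (stay) or +1 (right).
Rules = dict[tuple[str, str], tuple[str, int, str]]


def run_turing_machine(rules: Rules, tape_input: str, start: str, halt: str,
                       max_steps: int = 10_000) -> str:
    """Run until the halt state is entered; return the non-blank part of the tape.

    A missing rule raises KeyError; exceeding max_steps raises RuntimeError.
    """
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))  # unbounded in both directions
    head, state, steps = 0, start, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    used = [pos for pos, symbol in tape.items() if symbol != BLANK]
    if not used:
        return ""
    return "".join(tape[pos] for pos in range(min(used), max(used) + 1))


# Example machine (our own, not Turing's): add 1 to a binary number.
# "right" scans to the end of the input; "carry" propagates the carry leftwards.
INCREMENT: Rules = {
    ("right", "0"): ("0", +1, "right"),
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),
    ("carry", "0"): ("1", 0, "done"),
    ("carry", BLANK): ("1", 0, "done"),
}

if __name__ == "__main__":
    print(run_turing_machine(INCREMENT, "1011", start="right", halt="done"))  # 1100
```

The point of the design is how little the machine itself knows: all of the "algorithm" lives in the rule table, and the simulator only reads, writes and moves, which is the sense in which a table like this can serve as a precise definition of a mechanical procedure.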

Tools for Thought: The History and Future of Mind-Expanding Technology

by

Howard Rheingold

Published 14 May 2000

This is the kernel of the concept of stored programming, and although the ENIAC team was officially the first to describe an electronic computing device in such terms, it should be noted that the abstract version of exactly the same idea was proposed in Alan Turing's 1936 paper in the form of the single tape of the universal Turing machine. And at the same time the Pennsylvania group was putting together the EDVAC report, Turing was thinking again about the concept of stored programs: So the spring of 1945 saw the ENIAC team on one hand, and Alan Turing on the other, arrive naturally at the idea of constructing a universal machine with a single "tape." . . . But when Alan Turing spoke of "building a brain," he was working and thinking alone in his spare time, pottering around in a British back garden shed with a few pieces of equipment grudgingly conceded by the secret service.

…

For nearly two years after his arrest, during which time the homophobic and "national security" pressures grew even stronger, Turing worked with the ironic knowledge that he was being destroyed by the very government his wartime work had been instrumental in preserving. In June, 1954, Alan Turing lay down on his bed, took a bite from an apple, dipped it in cyanide, and bit again. Like Ada, Alan Turing's unconventionality was part of his undoing, and like her he saw the software possibilities that stretched far beyond the limits of the computing machinery available at the time. Like her, he died too young. Other wartime research projects and other brilliant mathematicians were aware of Turing's work, particularly in the United States, where scientists were suddenly emerging into the nuclear age as figures of power.

…

Although Boole's lifework was to translate his inspiration into an algebraic system, he continued to be so impressed with the suddenness and force of the revelation that hit him that day in the meadow that he also wrote extensively about the powers of the unconscious mind. After his death Boole's widow turned these ideas into a kind of human potential cult, a hundred years before the "me decade." Alan Turing solved one of the most crucial mathematical problems of the modern era at the age of twenty-four, creating the theoretical basis for computation in the process. Then he became the top code-breaker in the world--when he wasn't bicycling around wearing a gas mask or running twenty miles with an alarm clock tied around his waist.

War of Shadows: Codebreakers, Spies, and the Secret Struggle to Drive the Nazis From the Middle East

by

Gershom Gorenberg

Published 19 Jan 2021

When he brought his idea to Dilly, it turned out, a team led by Cambridge mathematician John Jeffreys was already busy in the cottage, punching sheets with a machine built for the purpose. The noise was driving Alan Turing nuts. Turing moved to a loft in the cottage where the stable boys once slept. He climbed up by rope ladder and lowered a basket tied to a rope when he wanted coffee sent up.40 A loft and a coffee cup lowered by rope to the world of people: this arrangement fit Alan Turing. He was twenty-seven. He had not fit into his English boarding school as a boy. He did not like to play cricket. He ran long distances, mostly alone. Contrary to myths that grew up about Turing, he did not have a problem understanding people’s feelings.

…

On the shared love of danger, see Reuth, Rommel, 15–16; Fraser, Knight’s Cross, 142–143. 39. “Hitler, in Warsaw”; Reuth, Rommel, 39. 40. Welchman, Hut Six, 71–72; Mavies [sic] Batey, “Marian and Dilly,” in Ciechanowski et al., Rejewski, 72. 41. Andrew Hodges, Alan Turing: The Enigma (London: Vintage, 2014), 30–32, 73–74, 99–100, 263–265, Kindle; David Boyle, Alan Turing: Unlocking the Enigma (Endeavour, 2014), 17, 21–27, 55–59, Kindle. 42. HW 14/2, “Enigma—Position,” November 1, 1939. 43. HW 14/2, “Investigation of German Military Cyphers: Progress Report,” November 7, 1939. 44. HW 14/1, Denniston to “The Director” [Sinclair], September 16, 1939. 45.

…

Interviews with Lottie Milvain (née Dudley-Smith) and Tempe Denzer; “Death of Cdr. R. Dudley-Smith,” Gloucestershire Echo, October 3, 1967, courtesy of Lottie Milvain, page number not preserved. 19. Dermot Turing, XY&Z: The Real Story of How Enigma Was Broken (Stroud, Gloucestershire, UK: History Press, 2018), 277–281. 20. Hodges, Alan Turing, chap. 6–8; Boyle, Alan Turing, chap. 6–7. 21. “Cdr Edward Wilfred Harry ‘Jumbo’ Travis,” Bletchley Park, https://rollofhonour.bletchleypark.org.uk/search/record-detail/9170 (accessed August 31, 2015); “The End of Denniston’s Career, and His Legacy,” GCHQ, www.gchq.gov.uk/features/end-dennistons-career-and-his-legacy (accessed January 11, 2018). 22.

Possible Minds: Twenty-Five Ways of Looking at AI

by

John Brockman

Published 19 Feb 2019

The history of computing can be divided into an Old Testament and a New Testament: before and after electronic digital computers and the codes they spawned proliferated across the Earth. The Old Testament prophets, who delivered the underlying logic, included Thomas Hobbes and Gottfried Wilhelm Leibniz. The New Testament prophets included Alan Turing, John von Neumann, Claude Shannon, and Norbert Wiener. They delivered the machines. Alan Turing wondered what it would take for machines to become intelligent. John von Neumann wondered what it would take for machines to self-reproduce. Claude Shannon wondered what it would take for machines to communicate reliably, no matter how much noise intervened.

…

During World War II, he developed techniques for aiming antiaircraft fire by making models that could predict the future trajectory of an airplane by extrapolating from its past behavior. In Cybernetics and in The Human Use of Human Beings, Wiener notes that this past behavior includes quirks and habits of the human pilot, thus a mechanized device can predict the behavior of humans. Like Alan Turing, whose Turing Test suggested that computing machines could give responses to questions that were indistinguishable from human responses, Wiener was fascinated by the notion of capturing human behavior by mathematical description. In the 1940s, he applied his knowledge of control and feedback loops to neuromuscular feedback in living systems, and was responsible for bringing Warren McCulloch and Walter Pitts to MIT, where they did their pioneering work on artificial neural networks.

…

Steve Omohundro has pointed to a further difficulty, observing that intelligent entities must act to preserve their own existence. This tendency has nothing to do with a self-preservation instinct or any other biological notion; it’s just that an entity cannot achieve its objectives if it’s dead. According to Omohundro’s argument, a superintelligent machine that has an off switch—which some, including Alan Turing himself, in a 1951 talk on the BBC Third Programme, have seen as our potential salvation—will take steps to disable the switch in some way.* Thus we may face the prospect of superintelligent machines—their actions by definition unpredictable by us and their imperfectly specified objectives conflicting with our own—whose motivations to preserve their existence in order to achieve those objectives may be insuperable. 1001 REASONS TO PAY NO ATTENTION Objections have been raised to these arguments, primarily by researchers within the AI community.

When Einstein Walked With Gödel: Excursions to the Edge of Thought

by

Jim Holt

Published 14 May 2018

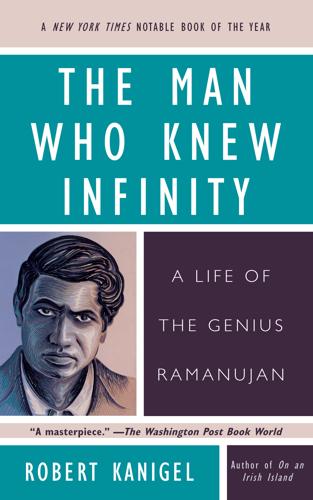

Joseph Warren Dauben, Abraham Robinson: The Creation of Nonstandard Analysis, a Personal and Mathematical Odyssey (Princeton, 1995). 14. THE ADA PERPLEX: WAS BYRON’S DAUGHTER THE FIRST CODER? Dorothy Stein, Ada: A Life and Legacy (MIT, 1987). Benjamin Woolley, The Bride of Science: Romance, Reason, and Byron’s Daughter (McGraw-Hill, 1999). 15. ALAN TURING IN LIFE, LOGIC, AND DEATH Andrew Hodges, Alan Turing: The Enigma (Walker, 2000). David Leavitt, The Man Who Knew Too Much: Alan Turing and the Invention of the Computer (Norton, 2006). Martin Davis, Engines of Logic: Mathematics and the Origin of the Computer (Norton, 2000). 16. DR. STRANGELOVE MAKES A THINKING MACHINE George Dyson, Turing’s Cathedral: The Origins of the Digital Universe (Pantheon, 2012).

…

Or that the first functioning computer should consist not of mechanical components or vacuum tubes but of unemployed pompadour dressers? Such are the froufrou antecedents of the computer era—an era that can claim as its original publicist a nervy young woman, a poet’s daughter, who saw herself as a fairy. 15 Alan Turing in Life, Logic, and Death On June 8, 1954, Alan Turing, a forty-one-year-old research scientist at the University of Manchester, was found dead by his housekeeper. Before getting into bed the night before, he had taken a few bites out of an apple that was, apparently, laced with cyanide. At an inquest a few days later, his death was ruled a suicide.

…

Although the report contained design ideas from the ENIAC inventors, von Neumann was listed as the sole author, which occasioned some grumbling among the uncredited. And the report had another curious omission. It failed to mention the man who, as von Neumann well knew, had originally worked out the possibility of a universal computer: Alan Turing. An Englishman nearly a decade younger than von Neumann, Alan Turing came to Princeton in 1936 to earn a Ph.D. in mathematics. Earlier that year, at the age of twenty-three, he had resolved a deep problem in logic called the decision problem. The problem traces its origins to the seventeenth-century philosopher Leibniz, who dreamed of “a universal symbolistic in which all truths of reason would be reduced to a kind of calculus.”

The Information: A History, a Theory, a Flood

by

James Gleick

Published 1 Mar 2011

♦ SAID NOTHING TO EACH OTHER ABOUT THEIR WORK: Shannon interview with Robert Price: “A Conversation with Claude Shannon: One Man’s Approach to Problem Solving,” IEEE Communications Magazine 22 (1984): 125; cf. Alan Turing to Claude Shannon, 3 June 1953, Manuscript Division, Library of Congress. ♦ “NO, I’M NOT INTERESTED IN DEVELOPING A POWERFUL BRAIN”: Andrew Hodges, Alan Turing: The Enigma (London: Vintage, 1992), 251. ♦ “A CONFIRMED SOLITARY”: Max H. A. Newman to Alonzo Church, 31 May 1936, quoted in Andrew Hodges, Alan Turing, 113. ♦ “THE JUSTIFICATION … LIES IN THE FACT”: Alan M. Turing, “On Computable Numbers, with an Application to the Entscheidungsproblem,” Proceedings of the London Mathematical Society 42 (1936): 230–65

…

♦ “YOU SEE … THE FUNNY LITTLE ROUNDS”: letter from Alan Turing to his mother and father, summer 1923, AMT/K/1/3, Turing Digital Archive, http://www.turingarchive.org. ♦ “IN ELEMENTARY ARITHMETIC THE TWO-DIMENSIONAL CHARACTER”: Alan M. Turing, “On Computable Numbers,” 230–65. ♦ “THE THING HINGES ON GETTING THIS HALTING INSPECTOR”: “On the Seeming Paradox of Mechanizing Creativity,” in Douglas R. Hofstadter, Metamagical Themas: Questing for the Essence of Mind and Pattern (New York: Basic Books, 1985), 535. ♦ “IT USED TO BE SUPPOSED IN SCIENCE”: “The Nature of Spirit,” unpublished essay, 1932, in Andrew Hodges, Alan Turing, 63. ♦ “ONE CAN PICTURE AN INDUSTRIOUS AND DILIGENT CLERK”: Herbert B.

…

♦ “ONE CAN PICTURE AN INDUSTRIOUS AND DILIGENT CLERK”: Herbert B. Enderton, “Elements of Recursion Theory,” in Jon Barwise, Handbook of Mathematical Logic (Amsterdam: North Holland, 1977), 529. ♦ “A LOT OF PARTICULAR AND INTERESTING CODES”: Alan Turing to Sara Turing, 14 October 1936, quoted in Andrew Hodges, Alan Turing, 120. ♦ “THE ENEMY KNOWS THE SYSTEM BEING USED”: “Communication Theory of Secrecy Systems” (1948), in Claude Elwood Shannon, Collected Papers, ed. N. J. A. Sloane and Aaron D. Wyner (New York: IEEE Press, 1993), 90. ♦ “FROM THE POINT OF VIEW OF THE CRYPTANALYST”: Ibid., 113. ♦ “THE MERE SOUNDS OF SPEECH”: Edward Sapir, Language: An Introduction to the Study of Speech (New York: Harcourt, Brace, 1921), 21

The Golden Ticket: P, NP, and the Search for the Impossible

by

Lance Fortnow

Published 30 Mar 2013

Biology is a computer: it takes a DNA sequence to produce proteins that perform the necessary functions that make life possible. What about the process we call computation? Is there anything we can’t compute? That mystery was solved before we even had digital computers by the great mathematician Alan Turing in 1936. Turing wondered how mathematicians thought, and came up with a formal mathematical model of that thought process, a model we now call the Turing machine, which has become the standard model of computation. Alan Turing was born in London in 1912. In the early 1930s he attended King’s College, Cambridge University, where he excelled in mathematics. During that time he thought of computation in terms of himself as a mathematician.

…

Mathematics In 1928 the renowned German mathematician David Hilbert put forth his great Entscheidungsproblem, a challenge to find an algorithmic procedure to find the truth or falsehood of any mathematical statement. In 1931 Kurt Gödel showed there must be some statements that any given set of axioms could not prove true or false at all. Influenced by this work, a few years later Alonzo Church and Alan Turing independently showed that no such algorithm exists. What if we restrict ourselves to relatively short proofs, say, of the kind that could fit in a short book? We can solve this computationally by looking at all possible short proofs for some mathematical statement. This is an NP question since we can recognize a good proof when we see one but finding one in practice remains difficult.
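The asymmetry described here, that a good answer is easy to recognize but hard to find, can be seen in a small example. The sketch below swaps in subset sum (is there a subset of the numbers adding up to a target?) as a stand-in for Fortnow's short-proof example; it is a standard NP problem, but the choice of problem and the function names `verify` and `search` are ours. Checking a proposed answer takes time roughly proportional to its size, while the naive search may have to try all 2**n subsets.

```python
from itertools import combinations
from typing import Optional


def verify(numbers: list[int], target: int, certificate: tuple[int, ...]) -> bool:
    """Fast check of a proposed answer: distinct, valid indices whose values sum to target."""
    return (
        len(set(certificate)) == len(certificate)
        and all(0 <= i < len(numbers) for i in certificate)
        and sum(numbers[i] for i in certificate) == target
    )


def search(numbers: list[int], target: int) -> Optional[tuple[int, ...]]:
    """Slow brute-force search: try every subset of indices (2**n of them) until one verifies."""
    for size in range(len(numbers) + 1):
        for subset in combinations(range(len(numbers)), size):
            if verify(numbers, target, subset):
                return subset
    return None  # no subset sums to target


if __name__ == "__main__":
    nums = [3, 34, 4, 12, 5, 2]
    print(search(nums, 9))          # (2, 4), i.e. 4 + 5
    print(verify(nums, 9, (2, 4)))  # True
```

Whether every problem with a fast `verify` also has a fast `search`, rather than one that is exponential in the worst case, is exactly the P versus NP question.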

…

In one fell swoop, Karp tied together all these famous difficult-to-solve computational problems. From that point on, the P versus NP question took center stage. Every year the Association for Computing Machinery awards the ACM Turing Award, the computer science equivalent of the Nobel Prize, named for Alan Turing, who gave computer science its foundations in the 1930s. In 1982 the ACM presented the Turing Award to Stephen Cook for his work formulating the P versus NP problem. But one Turing Award for the P versus NP problem is not enough, and in 1985 Richard Karp received the award for his work on algorithms, most notably for the twenty-one NP-complete problems.

In Our Own Image: Savior or Destroyer? The History and Future of Artificial Intelligence

by

George Zarkadakis

Published 7 Mar 2016

AD 50: Hero of Alexandria designs first mechanical automata.
1275: Ramon Lull invents Ars Magna, a logical machine.
1637: Descartes declares cogito ergo sum (‘I think therefore I am’).
1642: Blaise Pascal invents the Pascaline, a mechanical calculator.
1726: Jonathan Swift publishes Gulliver’s Travels, which includes the description of a machine that can write any book.
1801: Joseph Marie Jacquard invents a textile loom that uses punched cards.
1811: Luddite movement in Great Britain against the automation of manual jobs.
1818: Mary Shelley publishes Frankenstein.
1835: Joseph Henry invents the electromechanical relay that allows electrical automation and switching.
1842: Charles Babbage lectures at the University of Turin, where he describes the Analytical Engine.
1843: Ada Lovelace writes the first computer program.
1847: George Boole invents symbolic and binary logic.
1876: Alexander Graham Bell invents the telephone.
1879: Thomas Edison invents the light bulb.
1879: Gottlob Frege invents predicate logic and calculus.
1910: Bertrand Russell and Alfred North Whitehead publish Principia Mathematica.
1920: Karel Capek coins the term ‘robot’ in his play R.U.R.
1921: Ludwig Wittgenstein publishes Tractatus Logico-Philosophicus.
1931: Kurt Gödel publishes The Incompleteness Theorem.
1937: Alan Turing invents the ‘Turing machine’.
1938: Claude Shannon demonstrates that symbolic logic can be implemented using electronic relays.
1941: Konrad Zuse constructs Z3, the first Turing-complete computer.
1942: Alan Turing and Claude Shannon work together at Bell Labs.
1943: Warren McCulloch and Walter Pitts demonstrate the equivalence between electronics and neurons.
1943: IBM funds the construction of Harvard Mark I, the first program-controlled calculator.
1943: Charles Wynn-Williams and others create the computer Colossus at Bletchley Park.
1945: John von Neumann suggests a computer architecture whereby programs are stored in the memory.
1946: ENIAC, the first electronic general-purpose computer, is built.
1947: Invention of the transistor at Bell Labs.
1948: Norbert Wiener publishes Cybernetics.
1950: Alan Turing proposes the ‘Turing Test’.
1950: Isaac Asimov publishes I, Robot.
1952: Herman Carr produces the first one-dimensional MRI image.
1953: Claude Shannon hires Marvin Minsky and John McCarthy at Bell Labs.
1953: Ludwig Wittgenstein’s Philosophical Investigations published in German (two years after his death).
1954: Alan Turing commits suicide with a cyanide-laced apple.
1956: The Dartmouth conference; the term ‘Artificial Intelligence’ is coined by John McCarthy.
1957: Allen Newell and Herbert Simon build the ‘General Problem Solver’.
1958: John McCarthy creates LISP programming language.
1959: John McCarthy and Marvin Minsky establish AI lab at MIT.
1963: The US government awards $2.2 million to AI lab at MIT for machine-aided cognition.
1965: Hubert Dreyfus argues against the possibility of Artificial Intelligence.
1968: Stanley Kubrick introduces HAL in the film 2001: A Space Odyssey.
1971: Leon Chua envisions the memristor.
1972: Alain Colmerauer develops Prolog programming language.
1973: The Lighthill report influences the British government to abandon research in AI.
1976: Hans Moravec builds the ‘Stanford Cart’, the first autonomous vehicle.

…

It is a game of deception. The man in the first room will try to convince the judge that he is the woman. The woman will answer truthfully, counteract his claims, and do her utmost to help the judge see through the deception. The judge must guess correctly who is who. The English mathematician Alan Turing, one of the fathers of Artificial Intelligence, proposed this test in a landmark 1950 paper,1 noting that if one were to slightly modify this ‘imitation game’ so that a machine took the man’s place in the first room, then one had the best test for judging whether that machine was intelligent.

A Mind at Play: How Claude Shannon Invented the Information Age

by

Jimmy Soni

and

Rob Goodman

Published 17 Jul 2017

“We talked not at all”: Price, “Oral History: Claude E. Shannon.” “I reached New York” . . . “I had been intending”: Alan Turing, “Alan Turing’s Report from Washington DC, November 1942.” “incomplete alliance”: Andrew Hodges, “Alan Turing as UK-USA Link, 1942 Onwards,” Alan Turing Internet Scrapbook, www.turing.org.uk/scrapbook/ukusa.html. “I am persuaded”: Turing, “Alan Turing’s Report from Washington DC, November 1942.” “we would talk about”: Price, “Oral History: Claude E. Shannon.” “Well, back in ’42” . . . “a very, very impressive guy”: Shannon, interviewed by Hagemeyer, February 28, 1977. “While there we went over”: Price, “Oral History: Claude E.

…

“The Bush Differential Analyzer and Its Applications.” Nature 146 (September 7, 1940): 319–23. Hatch, David A., and Robert Louis Benson. “The Korean War: The SIGINT Background.” National Security Agency. www.nsa.gov/public_info/declass/korean_war/sigint_bg.shtml. Hodges, Andrew. Alan Turing: The Enigma. Princeton, NJ: Princeton University Press, 1983. ———. “Alan Turing as UK-USA Link, 1942 Onwards.” Alan Turing Internet Scrapbook. www.turing.org.uk/scrapbook/ukusa.html. Horgan, John. “Claude E. Shannon: Unicyclist, Juggler, and Father of Information Theory.” Scientific American, January 1990. ———. “Poetic Masterpiece of Claude Shannon, Father of Information Theory, Published for the First Time.”

…

“Accept distortion for security”: Dave Tompkins, How to Wreck a Nice Beach: The Vocoder from World War II to Hip-Hop, The Machine Speaks (Chicago: Stop Smiling Books, 2011), 63. “Members working on the job”: Andrew Hodges, Alan Turing: The Enigma (Princeton, NJ: Princeton University Press, 1983), 247. “It worked”: Ibid., 312. “At a recent world fair”: Bush, “As We May Think.” “Phrt fdygui”: Sterling, “Churchill and Intelligence,” 34. “not a lot of laboratories”: Shannon, interviewed by Hagemeyer, February 28, 1977. “a very down to earth discipline”: Shannon, interviewed by Hagemeyer, February 28, 1977. Chapter 12: Turing “Here [Turing] met a person”: Hodges, Alan Turing, 314. “I think Turing had” . . . “We talked not at all”: Price, “Oral History: Claude E.

Human Compatible: Artificial Intelligence and the Problem of Control

by

Stuart Russell

Published 7 Oct 2019

This was one of the arguments against AI that was refuted by Alan Turing, “Computing machinery and intelligence,” Mind 59 (1950): 433–60. 2. The earliest known article on existential risk from AI was by Richard Thornton, “The age of machinery,” Primitive Expounder IV (1847): 281. 3. “The Book of the Machines” was based on an earlier article by Samuel Butler, “Darwin among the machines,” The Press (Christchurch, New Zealand), June 13, 1863. 4. Another lecture in which Turing predicted the subjugation of humankind: Alan Turing, “Intelligent machinery, a heretical theory” (lecture given to the 51 Society, Manchester, 1951).

…

Just by typing, you can create programs that turn the box into something new, perhaps something that magically synthesizes moving images of oceangoing ships hitting icebergs or alien planets with tall blue people; type some more, and it translates English into Chinese; type some more, and it listens and speaks; type some more, and it defeats the world chess champion. This ability of a single box to carry out any process that you can imagine is called universality, a concept first introduced by Alan Turing in 1936.31 Universality means that we do not need separate machines for arithmetic, machine translation, chess, speech understanding, or animation: one machine does it all. Your laptop is essentially identical to the vast server farms run by the world’s largest IT companies—even those equipped with fancy, special-purpose tensor processing units for machine learning.
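Universality is easiest to see in software. The sketch below is a single fixed Python program that simulates any one-tape Turing machine handed to it as a table. Which process it carries out depends only on that data. The table format, the `_` blank symbol and both example machines are our own illustrative choices. Turing's universal machine did the same job with the other machine's description written on its own tape, not passed in as a Python dictionary.

```python
from collections import defaultdict

# A machine is just data: (state, symbol read) -> (symbol to write, move, next state).
# Moves are -1 (left) or +1 (right); "_" is the blank symbol.
Table = dict[tuple[str, str], tuple[str, int, str]]


def run(table: Table, tape: str, start: str = "start", halt: str = "halt",
        max_steps: int = 10_000) -> str:
    """Simulate any one-tape machine given as a table; return the non-blank tape."""
    cells = defaultdict(lambda: "_", enumerate(tape))
    head, state, steps = 0, start, 0
    while state != halt:
        if steps == max_steps:
            raise RuntimeError("no halt within max_steps")
        write, move, state = table[(state, cells[head])]
        cells[head] = write
        head += move
        steps += 1
    used = sorted(i for i, sym in cells.items() if sym != "_")
    return "".join(cells[i] for i in range(used[0], used[-1] + 1)) if used else ""


# Two different "programs" for the same simulator.
INCREMENT: Table = {  # add 1 to a binary number
    ("start", "0"): ("0", +1, "start"),
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),
    ("carry", "0"): ("1", -1, "halt"),
    ("carry", "_"): ("1", -1, "halt"),
}
INVERT: Table = {  # flip every bit
    ("start", "0"): ("1", +1, "start"),
    ("start", "1"): ("0", +1, "start"),
    ("start", "_"): ("_", -1, "halt"),
}

print(run(INCREMENT, "1011"))  # 1100
print(run(INVERT, "1011"))     # 0100
```

The simulator never changes. Swapping `INCREMENT` for `INVERT` changes what the "box" does, just as typing a new program changes what a laptop does.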

…

Fortunately for us, we have a distinct advantage over machines when it comes to knowing how other humans feel and how they will react. Nearly every human knows what it’s like to hit one’s thumb with a hammer or to feel unrequited love. Counteracting this natural human advantage is a natural human disadvantage: the tendency to be fooled by appearances—especially human appearances. Alan Turing warned against making robots resemble humans:34 I certainly hope and believe that no great efforts will be put into making machines with the most distinctively human, but non-intellectual, characteristics such as the shape of the human body; it appears to me quite futile to make such attempts and their results would have something like the unpleasant quality of artificial flowers.

The Road to Conscious Machines

by

Michael Wooldridge

Published 2 Nov 2018

We have many possible choices for the beginning of AI, but for me the beginning of the AI story coincides with the beginning of the story of computing itself, for which we have a pretty clear starting point: King’s College, Cambridge, in 1935, and a brilliant but unconventional young student called Alan Turing.

Cambridge, 1935

It is hard to imagine now, because he is about as famous as any mathematician could ever hope to be, but until the 1980s, the name of Alan Turing was virtually unknown outside the fields of mathematics and computer science. While students of mathematics and computing might have come across Turing’s name in their studies, they would have known little about the full extent of his achievements, or his tragic, untimely death.

…

If you are interested in detailed questions of history and the way in which the field evolved, the book you want is Nils Nilsson’s The Quest for Artificial Intelligence (Cambridge University Press, 2010). This is a superlative historical guide to the many threads of modern AI, written by one of the field’s greatest researchers. There are now several books about the life of Alan Turing, but the best by far is the one that gave the world the Turing story: Andrew Hodges’ Alan Turing: The Enigma (Burnett Books/Hutchinson, 1983). As an undergraduate, I very much enjoyed the three-volume Handbook of Artificial Intelligence published by William Kaufmann & Heuristech Press (Volume I, ed. Avron Barr and Edward A. Feigenbaum, 1981; Volume II, ed.

…

So, why has AI proved to be so difficult? To understand the answer to this question, we need to understand what computers are and what computers can do, at their most fundamental level. This takes us into the realm of some of the deepest questions in mathematics, and the work of one of the greatest minds of the twentieth century: Alan Turing.

The History of AI

My second main goal in this book is to tell you the story of AI from its inception. Every story must have a plot, and we are told there are really only seven basic plots for all the stories in existence, so which of these best fits the story of AI? Many of my colleagues would dearly like it to be ‘Rags to Riches’, and it has certainly turned out that way for a clever (or lucky) few.

Pandas for Everyone: Python Data Analysis

by

Unknown

```python
import pandas as pd

# for a Series
scientist_names_from_pickle = pd.read_pickle('../output/scientists_names_…')
print(scientist_names_from_pickle)
```

```
0       Rosaline Franklin
1          William Gosset
2    Florence Nightingale
3             Marie Curie
4           Rachel Carson
5               John Snow
6             Alan Turing
7            Johann Gauss
Name: Name, dtype: object
```

```python
# for a DataFrame
scientists_from_pickle = pd.read_pickle('../output/scientists_df.pickle')
print(scientists_from_pickle)
```

```
                   Name        Born        Died  Age          Occupation     born_dt     died_dt  age_days_dt  age_years_dt
0     Rosaline Franklin  1920-07-25  1958-04-16   66             Chemist  1920-07-25  1958-04-16   13779 days          37.0
1        William Gosset  1876-06-13  1937-10-16   56        Statistician  1876-06-13  1937-10-16   22404 days          61.0
2  Florence Nightingale  1820-05-12  1910-08-13   41               Nurse  1820-05-12  1910-08-13   32964 days          90.0
3           Marie Curie  1867-11-07  1934-07-04   77             Chemist  1867-11-07  1934-07-04   24345 days          66.0
4         Rachel Carson  1907-05-27  1964-04-14   90           Biologist  1907-05-27  1964-04-14   20777 days          56.0
5             John Snow  1813-03-15  1858-06-16   45           Physician  1813-03-15  1858-06-16   16529 days          45.0
6           Alan Turing  1912-06-23  1954-06-07   37  Computer Scientist  1912-06-23  1954-06-07   15324 days          41.0
7          Johann Gauss  1777-04-30  1855-02-23   61       Mathematician  1777-04-30  1855-02-23   28422 days          77.0
```

You will see pickle files saved as .p, .pkl, or .pickle.

2.8.2 CSV

Comma-separated values (CSV) are the most flexible data storage type.

…

```python
first_half = scientists[:4]
second_half = scientists[4:]

print(first_half)
```

```
                   Name        Born        Died  Age    Occupation
0     Rosaline Franklin  1920-07-25  1958-04-16   37       Chemist
1        William Gosset  1876-06-13  1937-10-16   61  Statistician
2  Florence Nightingale  1820-05-12  1910-08-13   90         Nurse
3           Marie Curie  1867-11-07  1934-07-04   66       Chemist
```

```python
print(second_half)
```

```
            Name        Born        Died  Age          Occupation
4  Rachel Carson  1907-05-27  1964-04-14   56           Biologist
5      John Snow  1813-03-15  1858-06-16   45           Physician
6    Alan Turing  1912-06-23  1954-06-07   41  Computer Scientist
7   Johann Gauss  1777-04-30  1855-02-23   77       Mathematician
```

```python
print(first_half + second_half)
```

```
   Name  Born  Died  Age  Occupation
0   NaN   NaN   NaN  NaN         NaN
1   NaN   NaN   NaN  NaN         NaN
2   NaN   NaN   NaN  NaN         NaN
3   NaN   NaN   NaN  NaN         NaN
4   NaN   NaN   NaN  NaN         NaN
5   NaN   NaN   NaN  NaN         NaN
6   NaN   NaN   NaN  NaN         NaN
7   NaN   NaN   NaN  NaN         NaN
```

```python
print(scientists * 2)
```

```
                                       Name                  Born                  Died  Age                            Occupation
0        Rosaline FranklinRosaline Franklin  1920-07-251920-07-25  1958-04-161958-04-16   74                        ChemistChemist
1              William GossetWilliam Gosset  1876-06-131876-06-13  1937-10-161937-10-16  122              StatisticianStatistician
2  Florence NightingaleFlorence Nightingale  1820-05-121820-05-12  1910-08-131910-08-13  180                            NurseNurse
3                    Marie CurieMarie Curie  1867-11-071867-11-07  1934-07-041934-07-04  132                        ChemistChemist
4                Rachel CarsonRachel Carson  1907-05-271907-05-27  1964-04-141964-04-14  112                    BiologistBiologist
5                        John SnowJohn Snow  1813-03-151813-03-15  1858-06-161858-06-16   90                    PhysicianPhysician
6                    Alan TuringAlan Turing  1912-06-231912-06-23  1954-06-071954-06-07   82  Computer ScientistComputer Scientist
7                  Johann GaussJohann Gauss  1777-04-301777-04-30  1855-02-231855-02-23  154            MathematicianMathematician
```

2.7 Making changes to Series and DataFrames

2.7.1 Add additional columns

Now that we know various ways of subsetting and slicing our data (see Table 2–1), we should be able to find values of interest and assign new values to them.

…

We can recalculate the ‘real’ age using datetime arithmetic.

```python
# subtracting dates will give us number of days
scientists['age_days_dt'] = (scientists['died_dt'] - scientists['born_dt'])
print(scientists)
```

```
                   Name        Born        Died  Age          Occupation     born_dt     died_dt  age_days_dt
0     Rosaline Franklin  1920-07-25  1958-04-16   66             Chemist  1920-07-25  1958-04-16   13779 days
1        William Gosset  1876-06-13  1937-10-16   56        Statistician  1876-06-13  1937-10-16   22404 days
2  Florence Nightingale  1820-05-12  1910-08-13   41               Nurse  1820-05-12  1910-08-13   32964 days
3           Marie Curie  1867-11-07  1934-07-04   77             Chemist  1867-11-07  1934-07-04   24345 days
4         Rachel Carson  1907-05-27  1964-04-14   90           Biologist  1907-05-27  1964-04-14   20777 days
5             John Snow  1813-03-15  1858-06-16   45           Physician  1813-03-15  1858-06-16   16529 days
6           Alan Turing  1912-06-23  1954-06-07   37  Computer Scientist  1912-06-23  1954-06-07   15324 days
7          Johann Gauss  1777-04-30  1855-02-23   61       Mathematician  1777-04-30  1855-02-23   28422 days
```

```python
# we can convert the value to just the year
# by floor-dividing the number of days by 365.25 (gives whole years as floats)
scientists['age_years_dt'] = scientists['age_days_dt'].dt.days // 365.25
print(scientists)
```

```
                   Name        Born        Died  Age          Occupation     born_dt     died_dt  age_days_dt  age_years_dt
0     Rosaline Franklin  1920-07-25  1958-04-16   66             Chemist  1920-07-25  1958-04-16   13779 days          37.0
1        William Gosset  1876-06-13  1937-10-16   56        Statistician  1876-06-13  1937-10-16   22404 days          61.0
2  Florence Nightingale  1820-05-12  1910-08-13   41               Nurse  1820-05-12  1910-08-13   32964 days          90.0
3           Marie Curie  1867-11-07  1934-07-04   77             Chemist  1867-11-07  1934-07-04   24345 days          66.0
4         Rachel Carson  1907-05-27  1964-04-14   90           Biologist  1907-05-27  1964-04-14   20777 days          56.0
5             John Snow  1813-03-15  1858-06-16   45           Physician  1813-03-15  1858-06-16   16529 days          45.0
6           Alan Turing  1912-06-23  1954-06-07   37  Computer Scientist  1912-06-23  1954-06-07   15324 days          41.0
7          Johann Gauss  1777-04-30  1855-02-23   61       Mathematician  1777-04-30  1855-02-23   28422 days          77.0
```

Note: We could have assigned the datetime-converted values directly back to the original columns, but the point is that an assignment still needs to be performed.

Thinking Machines: The Inside Story of Artificial Intelligence and Our Race to Build the Future

by

Luke Dormehl

Published 10 Aug 2016

It’s about the nature of creativity, the future of employment, and what happens when all knowledge is data and can be stored electronically. It’s about what we’re trying to do when we make machines smarter than we are, how humans still have the edge (for now), and the question of whether you and I aren’t thinking machines of a sort as well. The pioneering British mathematician and computer scientist Alan Turing predicted in 1950 that by the end of the twentieth century, ‘the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted’. Like many futurist predictions about technology, he was optimistic in his timeline – although he wasn’t off by too much.

…

Compared with the unreliable memory of humans, a machine capable of accessing thousands of items in the span of microseconds had a clear advantage. There are entire books written about the birth of modern computing, but three men stand out as laying the philosophical and technical groundwork for the field that became known as Artificial Intelligence: John von Neumann, Alan Turing and Claude Shannon. A native of Hungary, von Neumann was born in 1903 into a Jewish banking family in Budapest. In 1930, he arrived at Princeton University as a maths teacher and, by 1933, had established himself as one of six professors in the new Institute for Advanced Study in Princeton: a position he stayed in until the day he died.

…

Unlike some of his contemporaries, he did not believe a computer would be able to think in the way that a human can, but he did help establish the parallels that exist with human physiology. The parts of a computer, he wrote in one paper, ‘correspond to the associative neurons in the human nervous system. It remains to discuss the equivalents of the sensory or afferent and the motor or efferent neurons.’ Others would happily take up the challenge. Alan Turing, meanwhile, was a British mathematician and cryptanalyst. During the Second World War, he led a team for the Government Code and Cypher School at Britain’s secret code-breaking centre, Bletchley Park. There he came up with various techniques for cracking German codes, most famously an electromechanical device capable of working out the settings for the Enigma machine.

12 Bytes: How We Got Here. Where We Might Go Next

by

Jeanette Winterson

Published 15 Mar 2021

Artificial intelligence was the term coined in the mid-1950s by John McCarthy – an American computing expert – who, like his friend Marvin Minsky, believed computers could achieve human levels of intelligence by the 1970s. Alan Turing had thought the year 2000 was realistic. Yet from the coining of a term – AI – 40 years would pass before IBM’s Deep Blue beat Kasparov at chess in 1997. That’s because computational power is the sum of computer storage (memory) and processing speed. Simply, computers weren’t powerful enough to do what McCarthy, Minsky and Turing knew they would be able to do. And before those men, there was Ada Lovelace, the early-19th century genius who inspired Alan Turing to devise the Turing Test – when we can no longer tell the difference between AI and bio-human.

…

Set against that thought is the fact that the biggest touring bands in the world are still old-fashioned (and increasingly old-aged) guys who write their music and play their instruments. But this is probably the end of an era. As David Cope puts it: ‘The question isn’t whether computers possess a soul but if we possess one.’ * * * Alan Turing, the British mathematician who designed the Bombe, the Enigma code-breaking machine used at Bletchley Park (Turing was played by Benedict Cumberbatch in the movie The Imitation Game), wasn’t interested in whether or not a computer could have, or would have, a soul, but he was interested in whether or not a computer could originate (as well as learn) independently of human input.

…

Turing thought that machine intelligence would pass his test by the year 2000. That hasn’t happened, but we are getting closer – and while we are getting closer we might decide, or AI might decide, that it doesn’t matter. * * * Mary Shelley may be closer to the world that is to come than either Ada Lovelace or Alan Turing. A new kind of life-form may not need to be like a human at all (the cute helper bot or the virtual digital assistant may be just a distraction, a sideline, a bridge. Pure intelligence will be other) – and that’s something that is achingly, heartbreakingly, clear in Frankenstein. The monster is initially designed to be ‘like’ us.

Decoding Organization: Bletchley Park, Codebreaking and Organization Studies

by

Christopher Grey

Published 22 Mar 2012

Decoding Organization How was Bletchley Park made as an organization? How was signals intelligence constructed as a field? What was Bletchley Park’s culture and how was its work co-ordinated? Bletchley Park was not just the home of geniuses such as Alan Turing, it was also the workplace of thousands of other people, mostly women, and their organization was a key component in the cracking of Enigma. Challenging many popular perceptions, this book examines the hitherto unexamined complexities of how 10,000 people were brought together in complete secrecy during World War II to work on ciphers. Unlike most organizational studies, this book decodes, rather than encodes, the processes of organization and examines the structures, cultures and the work itself of Bletchley Park using archive and oral history sources.

…

The BP site is now a major museum attracting many thousands of visitors each year and is regularly in the news because of the enduring interest in its codebreaking achievements and contribution to the conduct of WW2, its role in the development of computing and not least because of public interest in its best known luminary, Alan Turing (Hodges, 1982). There is a stream of popular literature explaining what happened at BP (e.g. Smith, 1998; McKay, 2010) and a growing number of reminiscences of those who worked there (e.g. Welchman, 1982; Hinsley and Stripp, 1993; Calvocoressi, 2001; Page, 2002, 2003; Hill, 2004; Luke, 2005; Watkins, 2006; Paterson, 2007; Hogarth, 2008; Thirsk, 2008; Briggs, 2011; Pearson, 2011)4.

…

Certainly it is significant that, as mentioned in the previous chapter, the van Cutsem report was commissioned not by Denniston but jointly by the DMI and ‘C’37. Perhaps even more damaging was one of the most famous events in the history of BP. This was the frustration about lack of resources which led Gordon Welchman, Stuart Milner-Barry, Alan Turing and Hugh Alexander to bypass Denniston (and ‘C’) to appeal directly to Winston Churchill (who had recently visited BP) in a letter hand-delivered to 10 Downing Street on 21 October 1941. The symbolism of that date – Trafalgar Day – would surely not have been lost on Churchill. At all events, his response, accompanied, famously, by the injunction to ‘action this day’ was ‘[m]ake sure they have all they want on extreme priority and report to me that this has been done’38.

One Day in August: Ian Fleming, Enigma, and the Deadly Raid on Dieppe

by

David O’keefe

Published 5 Nov 2020

Ian Fleming in naval uniform 3. Rear Admiral John Godfrey 4. Admiral Karl Dönitz 5. The naval four-rotor Enigma machine 6. The ‘Morrison Wall’ at Bletchley Park 7. Two sets of spare rotor wheels for the Enigma machine 8. Three Enigma rotor wheels laid out on their sides 9. Frank Birch 10. Alan Turing 11. A captured Enigma wheel 12. Harry Hinsley, Sir Edward Travis and John Tiltman 13. Major General Hamilton Roberts 14. Captain Peter Huntington-Whiteley 15. No. 10 Platoon of X Company, Royal Marines 16. Captain John ‘Jock’ Hughes-Hallett 17. R.E.D. ‘Red’ Ryder 18. An aerial reconnaissance photo of Dieppe harbour 19.

…

It was the painstaking assembly of minuscule pieces of evidence – balanced, sorted and weighed against other evidence – that has finally allowed me to tell the ‘untold’ story of Dieppe. New technologies such as the internet, the microchip and digitization – ones that would have delighted Charles Babbage, Alan Turing, Frank Birch and Ian Fleming – have allowed me to consult more than 150,000 pages of documents from archives on two continents and over 50,000 pages of published primary and secondary source material for this book. The methodology I employ is straightforward, based in large part on the sage advice of a multitude of mentors in the historical realm and, perhaps rather ironically, on the musings of Colonel Peter Wright, the man who served as General Ham Roberts’s intelligence officer aboard the Calpe.

…

In this context, there can be little doubt that the ‘intelligence booty’ Fleming sought in Ruthless was akin to the Holy Grail for Bletchley Park. Fleming had dreamed up the operation to assist the gifted cryptanalysts who worked in Bletchley’s Naval Section – brilliant mathematicians, physicists and classical scholars such as Dillwyn ‘Dilly’ Knox, Alan Turing and Peter Twinn. These men now found themselves stymied in their critical struggle to break into German naval communications enciphered on a specially designed Enigma encryption machine. Despite their impressive intellectual efforts, they desperately needed ‘cribs,’ or ‘cheats’ – plain-language German text – that they could match up with a stretch of ciphertext and thus discover the daily ‘key’ setting, or password, which would unlock the contents of the top-secret German messages.
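One property of the machine made cribs easier to place. Because of its reflector, an Enigma never enciphered a letter as itself. A crib can therefore be slid along a ciphertext, and every alignment where some crib letter coincides with the ciphertext letter opposite it can be discarded. The minimal Python sketch below performs only that first filtering step. The ciphertext is invented, the function name is ours, and "WETTER" (German for "weather", a classic source of cribs) is used purely as an example. Turning a surviving alignment into a key setting was a far larger job.

```python
def possible_crib_positions(ciphertext: str, crib: str) -> list[int]:
    """Return every offset at which the crib could lie under the ciphertext.

    Enigma never enciphered a letter as itself, so any offset where a crib
    letter equals the ciphertext letter opposite it is ruled out.
    """
    return [
        offset
        for offset in range(len(ciphertext) - len(crib) + 1)
        if all(c != p for c, p in zip(ciphertext[offset:], crib))
    ]


# Made-up ciphertext; offset 1 is rejected because "W" would sit opposite "W".
print(possible_crib_positions("RWEQZXPTM", "WETTER"))  # [0, 2, 3]
```

On real traffic, with long messages and long cribs, this single rule could eliminate many candidate alignments before any heavier analysis began.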

Emergence

by

Steven Johnson

If we could only figure out how the Dictyostelium pull it off, maybe we would gain some insight on our own baffling togetherness. “I was at Sloan-Kettering in the biomath department—and it was a very small department,” Keller says today, laughing. While the field of mathematical biology was relatively new in the late sixties, it had a fascinating, if enigmatic, precedent in a then-little-known essay written by Alan Turing, the brilliant English code-breaker from World War II who also helped invent the digital computer. One of Turing’s last published papers, before his death in 1954, had studied the riddle of “morphogenesis”—the capacity of all life-forms to develop ever more baroque bodies out of impossibly simple beginnings.

…

People had been thinking about emergent behavior in all its diverse guises for centuries, if not millennia, but all that thinking had consistently been ignored as a unified body of work—because there was nothing unified about its body. There were isolated cells pursuing the mysteries of emergence, but no aggregation. Indeed, some of the great minds of the last few centuries—Adam Smith, Friedrich Engels, Charles Darwin, Alan Turing—contributed to the unknown science of self-organization, but because the science didn’t exist yet as a recognized field, their work ended up being filed on more familiar shelves. From a certain angle, those taxonomies made sense, because the leading figures of this new discipline didn’t even themselves realize that they were struggling to understand the laws of emergence.

…

Nearly a hundred years later, the area has christened itself the Gay Village and actively promotes its coffee bars and boutiques as a must-see Manchester tourist destination, like Manhattan’s Christopher Street and San Francisco’s Castro. The pattern is now broadcast to a wider audience, but it has not lost its shape. But even at a lower amplitude, that signal was still loud enough to attract the attention of another of Manchester’s illustrious immigrants: the British polymath Alan Turing. As part of his heroic contribution to the war effort, Turing had been a student of mathematical patterns, designing the equations and the machines that cracked the “unbreakable” German code of the Enigma device. After a frustrating three-year stint at the National Physical Laboratory in London, Turing moved to Manchester in 1948 to help run the university’s embryonic computing lab.

The Secrets of Station X: How the Bletchley Park codebreakers helped win the war

by

Michael Smith

Published 30 Oct 2011

The main problem for Knox was what he called ‘the QWERTZU’, by which he meant the way in which the letters on the keyboard of the Wehrmacht Enigma machines were wired to the letters on the wheels inside the machine, and he left the meeting in Paris none the wiser. One good thing did however come out of the January 1939 meeting. It became clear that the Poles were using mathematicians to try to break Enigma and, when they returned to the UK, Denniston recruited two mathematicians to assist Knox. One was Alan Turing, a 27-year-old fellow of King’s College, Cambridge, who began working part-time, coming in on occasional days with the intention of joining full time when the war began. The other was Peter Twinn, a 23-year-old mathematician from Brasenose College, Oxford, who started work immediately. ‘When I joined GC&CS in early February 1939 and went to join Dilly Knox to work on the German services’ Enigma traffic, the outlook was not encouraging,’ Twinn recalled.

…

But his good humour soon returned after they told him that the keys were wired up to the encypherment mechanism in alphabetical order, A to A, B to B, etc. Although one female codebreaker had suggested this as a possibility, it had never been seriously considered, Twinn recalled. ‘It was such an obvious thing to do, really a silly thing to do, that nobody, not Dilly Knox or Tony Kendrick or Alan Turing, ever thought it worthwhile trying,’ he recalled. ‘I know in retrospect it looks daft. I can only say that’s how it struck all of us and none of the others were idiots.’ A few weeks later the Poles gave both the French and British codebreakers clones of the steckered Enigma. Bertrand, who had been given both machines and asked to pass one on to the British, later described taking the British copy to London on the Golden Arrow express train on 16 August 1939.

…

The 21-year-old from Belfast was brought in at the end of January 1940 by Gordon Welchman, who had been his mathematics tutor at Sidney Sussex College, Cambridge, and recognised that he had exceptional talent as a mathematician. He was one of the new boys trying to think of ways to break into the Red. After being taught ‘the mysteries of the Enigma’ by Alan Turing and Tony Kendrick, Herivel was sent to Hut 6. ‘I had been recruited by Welchman and I was going to work in his show,’ Herivel recalled. ‘I do remember that when I came to Hut 6, we were doing very badly in breaking into the Red code. Every evening, when I went back to my digs and when I’d had my supper, I would sit down in front of the fire and put my feet up and think of some method of breaking into the Red.’

The Code Book: The Science of Secrecy From Ancient Egypt to Quantum Cryptography

by

Simon Singh

Published 1 Jan 1999

There were many great cryptanalysts and many significant breakthroughs, and it would take several large volumes to describe the individual contributions in detail. However, if there is one figure who deserves to be singled out, it is Alan Turing, who identified Enigma’s greatest weakness and ruthlessly exploited it. Thanks to Turing, it became possible to crack the Enigma cipher under even the most difficult circumstances. Alan Turing was conceived in the autumn of 1911 in Chatrapur, a town near Madras in southern India, where his father Julius Turing was a member of the Indian civil service. Julius and his wife Ethel were determined that their son should be born in Britain, and returned to London, where Alan was born on June 23, 1912.

…

Chapter 4

Hinsley, F.H., British Intelligence in the Second World War: Its Influence on Strategy and Operations (London: HMSO, 1975). The authoritative record of intelligence in the Second World War, including the role of Ultra intelligence. Hodges, Andrew, Alan Turing: The Enigma (London: Vintage, 1992). The life and work of Alan Turing. One of the best scientific biographies ever written. Kahn, David, Seizing the Enigma (London: Arrow, 1996). Kahn’s history of the Battle of the Atlantic and the importance of cryptography. In particular, he dramatically describes the “pinches” from U-boats which helped the codebreakers at Bletchley Park.

…

He replies, “It’s about right and wrong. In general terms. It’s a technical paper in mathematical logic, but it’s also about the difficulty of telling right from wrong. People think—most people think—that in mathematics we always know what is right and what is wrong. Not so. Not any more.”

Figure 47 Alan Turing. (photo credit 4.4)

In his attempt to identify undecidable questions, Turing’s paper described an imaginary machine that was designed to perform a particular mathematical operation, or algorithm. In other words, the machine would be capable of running through a fixed, prescribed series of steps which would, for example, multiply two numbers.

Our Final Invention: Artificial Intelligence and the End of the Human Era

by

James Barrat

Published 30 Sep 2013

The heroes of Bletchley Park: Hinsley, Harry, “The Influence of ULTRA in the Second World War,” Babbage Lecture Theatre, Computer Laboratory, last modified November 26, 1996, http://www.cl.cam.ac.uk/research/security/Historical/hinsley.html (accessed September 6, 2011). At Bletchley Turing: Banks, “A Conversation with I. J. Good.” I won’t say that what Turing did: McKittrick, David, “Jack Good: Cryptographer whose work with Alan Turing at Bletchley Park was crucial to the War effort,” The Independent, sec. obituaries, May 14, 2009, http://www.independent.co.uk/news/obituaries/jack-good-cryptographer-whose-work-with-alan-turing-at-bletchley-park-was-crucial-to-the-war-effort-1684506.html (accessed September 5, 2011). In 1957, MIT psychologist: McCorduck, Pamela, Machines Who Think, A Personal Inquiry into the History and Prospects of Artificial Intelligence (San Francisco: W.

…

To meet our definition of general intelligence a computer would need ways to receive input from the environment, and provide output, but not a lot more. It needs ways to manipulate objects in the real world. But as we saw in the Busy Child scenario, a sufficiently advanced intelligence can get someone or something else to manipulate objects in the real world. Alan Turing devised a test for human-level intelligence, now called the Turing test, which we will explore later. His standard for demonstrating human-level intelligence called only for the most basic keyboard-and-monitor kind of input and output devices. The strongest argument for why advanced AI needs a body may come from its learning and development phase—scientists may discover it’s not possible to “grow” AGI without some kind of body.

…

He may be a genius, but he’s not a thousand times more intelligent than the smartest human, as an ASI could be. Bad or indifferent ASI needs to get out of the box just once. The AI-Box Experiment also fascinated me because it’s a riff on the venerable Turing test. Devised in 1950 by mathematician, computer scientist, and World War II code breaker Alan Turing, the eponymous test was designed to determine whether a machine can exhibit intelligence. In it, a judge asks both a human and a computer a set of written questions. If the judge cannot tell which respondent is the computer and which is the human, the computer “wins.” But there’s a twist. Turing knew that thinking is a slippery subject, and so is intelligence.

The Most Human Human: What Talking With Computers Teaches Us About What It Means to Be Alive

by

Brian Christian

Published 1 Mar 2011

Ramachandran and Sandra Blakeslee, Phantoms in the Brain: Probing the Mysteries of the Human Mind (New York: William Morrow, 1998). 10 Alan Turing, “On Computable Numbers, with an Application to the Entscheidungsproblem,” Proceedings of the London Mathematical Society, 2nd ser., 42, no. 1 (1937), pp. 230–65. 11 Ada Lovelace’s remarks come from her translation (and notes thereupon) of Luigi Federico Menabrea’s “Sketch of the Analytical Engine Invented by Charles Babbage, Esq.,” in Scientific Memoirs, edited by Richard Taylor (London, 1843). 12 Alan Turing, “Computing Machinery and Intelligence,” Mind 59, no. 236 (October 1950), pp. 433–60. 13 For more on the idea of “radical choice,” see, e.g., Sartre, “Existentialism Is a Humanism,” especially Sartre’s discussion of a painter wondering “what painting ought he to make” and a student who came to ask Sartre’s advice about an ethical dilemma. 14 Aristotle’s arguments: See, e.g., The Nicomachean Ethics. 15 For a publicly traded company: Nobel Prize winner, and (says the Economist) “the most influential economist of the second half of the 20th century,” Milton Friedman wrote a piece in the New York Times Magazine in 1970 titled “The Social Responsibility of Business Is to Increase Its Profits.”

…

Fortunately, I am human; unfortunately, it’s not clear how much that will help.

The Turing Test

Each year, the artificial intelligence (AI) community convenes for the field’s most anticipated and controversial annual event—a competition called the Turing test. The test is named for British mathematician Alan Turing, one of the founders of computer science, who in 1950 attempted to answer one of the field’s earliest questions: Can machines think? That is, would it ever be possible to construct a computer so sophisticated that it could actually be said to be thinking, to be intelligent, to have a mind? And if indeed there were, someday, such a machine: How would we know?

…

The Illegitimacy of the Figurative

When Claude Shannon met Betty at Bell Labs in the 1940s, she was indeed a computer. If this sounds odd to us in any way, it’s worth knowing that nothing at all seemed odd about it to them. Nor to their co-workers: to their Bell Labs colleagues their romance was a perfectly normal one, typical even. Engineers and computers wooed all the time. It was Alan Turing’s 1950 paper “Computing Machinery and Intelligence” that launched the field of AI as we know it and ignited the conversation and controversy over the Turing test (or the “Imitation Game,” as Turing initially called it) that has continued to this day—but modern “computers” are nothing like the “computers” of Turing’s time.

Complexity: A Guided Tour

by

Melanie Mitchell

Published 31 Mar 2009

As the mathematician and writer Andrew Hodges notes: “This was an amazing new turn in the enquiry, for Hilbert had thought of his programme as one of tidying up loose ends. It was upsetting for those who wanted to find in mathematics something that was perfect and unassailable….”

Turing Machines and Uncomputability

While Gödel dispatched the first and second of Hilbert’s questions, the British mathematician Alan Turing killed off the third. In 1935 Alan Turing was a twenty-three-year-old graduate student at Cambridge studying under the logician Max Newman. Newman introduced Turing to Gödel’s recent incompleteness theorem. When he understood Gödel’s result, Turing was able to see how to answer Hilbert’s third question, the Entscheidungsproblem, and his answer, again, was “no.”

…

Proceedings of the National Academy of Sciences, USA, 101(4), 2004, pp. 918–922. “there is no such thing as an unsolvable problem”: Quoted in Hodges, A., Alan Turing: The Enigma, New York: Simon & Schuster, 1983, p. 92. “Gödel’s proof is complicated”: For excellent expositions of the proof, see Nagel, E. and Newman, J. R., Gödel’s Proof. New York: New York University, 1958; and Hofstadter, D. R., Gödel, Escher, Bach: an Eternal Golden Braid. New York: Basic Books, 1979. “This was an amazing new turn”: Hodges, A., Alan Turing: The Enigma. New York: Simon & Schuster, 1983, p. 92. “Turing killed off the third”: Another mathematician, Alonzo Church, also proved that there are undecidable statements in mathematics, but Turing’s results ended up being more influential.

…

Following the intuition of Leibniz of more than two centuries earlier, Turing formulated his definition by thinking about a powerful calculating machine—one that could not only perform arithmetic but also could manipulate symbols in order to prove mathematical statements. By thinking about how humans might calculate, he constructed a mental design of such a machine, which is now called a Turing machine. The Turing machine turned out to be a blueprint for the invention of the electronic programmable computer.

Alan Turing, 1912–1954 (Photograph copyright ©2003 by Photo Researchers Inc. Reproduced by permission.)

A QUICK INTRODUCTION TO TURING MACHINES

As illustrated in figure 4.1, a Turing machine consists of three parts: (1) A tape, divided into squares (or “cells”), on which symbols can be written and from which symbols can be read.
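To make the idea concrete, here is a minimal Python sketch of a single-tape Turing machine simulator. The excerpt breaks off after the first of the three parts; the sketch assumes the standard textbook picture of the other two: a read/write head, and a table of rules that, given the current state and the symbol under the head, says what to write, which way to move, and which state to enter next. The function name `run_turing_machine`, the rule table `INCREMENT`, and its state names are hypothetical, chosen only for illustration; the example machine adds one to a binary number.

```python
from collections import defaultdict

BLANK = "_"

# Hypothetical rule table for a machine that adds 1 to a binary number.
# Each entry maps (state, symbol read) -> (symbol to write, head move, next state),
# where a move of +1 is one cell right and -1 is one cell left.
INCREMENT = {
    ("start", "0"): ("0", +1, "start"),      # scan right over the digits
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),  # past the last digit: turn back
    ("carry", "1"): ("0", -1, "carry"),      # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", -1, "halt"),       # 0 + carry = 1, done
    ("carry", BLANK): ("1", -1, "halt"),     # ran off the left end: new leading 1
}


def run_turing_machine(rules, tape_input, start_state="start", max_steps=10_000):
    """Run a single-tape machine until it enters 'halt'; return the non-blank tape."""
    # The tape is unbounded in both directions: unwritten cells read as BLANK.
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    head, state, steps = 0, start_state, 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        # A missing (state, symbol) entry raises KeyError: this sketch treats
        # that as a malformed rule table rather than an implicit halt.
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    # Read back the span between the leftmost and rightmost non-blank cells.
    filled = [pos for pos, sym in tape.items() if sym != BLANK]
    if not filled:
        return ""
    return "".join(tape[pos] for pos in range(min(filled), max(filled) + 1))


print(run_turing_machine(INCREMENT, "1011"))  # prints 1100
```

Here the head scans right to the first blank cell, then walks back left turning trailing 1s into 0s until it can turn a 0 (or a blank) into a 1. The `max_steps` guard is a practical addition for the simulation, not part of Turing's definition; an idealised Turing machine has no step limit.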

Nine Algorithms That Changed the Future: The Ingenious Ideas That Drive Today's Computers

by

John MacCormick

and

Chris Bishop

Published 27 Dec 2011

In the next section, we encounter neural networks: a pattern recognition technique in which the learning phase is not only significant, but directly inspired by the way humans and other animals learn from their surroundings. NEURAL NETWORKS The remarkable abilities of the human brain have fascinated and inspired computer scientists ever since the creation of the first digital computers. One of the earliest discussions of actually simulating a brain using a computer was by Alan Turing, a British scientist who was also a superb mathematician, engineer, and code-breaker. Turing's classic 1950 paper, entitled Computing Machinery and Intelligence, is most famous for a philosophical discussion of whether a computer could masquerade as a human. The paper introduced a scientific way of evaluating the similarity between computers and humans, known these days as a “Turing test.”

…

Two mathematicians, one American and one British, independently discovered uncomputable problems in the late 1930s—several years before the first real computers emerged during the Second World War. The American was Alonzo Church, whose groundbreaking work on the theory of computation remains fundamental to many aspects of computer science. The Briton was none other than Alan Turing, who is commonly regarded as the single most important figure in the founding of computer science. Turing's work spanned the entire spectrum of computational ideas, from intricate mathematical theory and profound philosophy to bold and practical engineering. In this chapter, we will follow in the footsteps of Church and Turing on a journey that will eventually demonstrate the impossibility of using a computer for one particular task.

…