Northpointe / Correctional Offender Management Profiling for Alternative Sanctions

40 results

Technically Wrong: Sexist Apps, Biased Algorithms, and Other Threats of Toxic Tech

by

Sara Wachter-Boettcher

Published 9 Oct 2017

See also gender bias; political bias; racial bias in algorithms, 144–145, 176 in default settings, 35–38, 61 of Facebook’s creators, 168–172 of Twitter’s creators, 150, 158–160 binary choices, 62 Black Lives Matter movement, 81 Bouie, Jamelle, 61 Brown, Mike, 163 Brown Eyes, Lance, 54 Butterfield, Stewart, 190–191 BuzzFeed, 157, 165–166 cares about us (CAU) metric, 97 caretaker speech, 114–115 celebrations. See misplaced celebrations and humor COMPAS (Correctional Offender Management Profiling for Alternative Sanctions), 119–121, 125–129, 136, 145 computer science, and tech industry pipeline, 21–26, 181–182 Cook, Tim, 19 Cooper, Sarah, 24 Correctional Offender Management Profiling for Alternative Sanctions (COMPAS), 119–121, 125–129, 136, 145 Costolo, Dick, 148 Cramer, Jim, 158 Creepingbear, Shane, 53–56 Criado-Perez, Caroline, 156 criminal justice and COMPAS, 119–121, 125–129, 136, 145 predictive policing software, 102 sentencing algorithms for, 10 culture fit, 24–25, 25, 189 curators, of Trending Facebook feature, 165–169, 172 daily active users (DAUs) metric, 74, 97–98 Daniels, Gilbert S., 39 Dash, Anil, 9, 187 data.

…

Police say he ran from them, along the way throwing away a baggie that they suspected contained cocaine. Fugett had a record too: in 2010 he had been charged with felony attempted burglary.1 You might think these men have similar criminal profiles: they’re from the same place, born less than a year apart, charged with similar crimes. But according to software called Correctional Offender Management Profiling for Alternative Sanctions, or COMPAS, these men aren’t the same at all. COMPAS rated Parker a 10, the highest risk there is for recidivism. It rated Fugett only a 3. Fugett has since been arrested three more times: twice in 2013, for possessing marijuana and drug paraphernalia, and once in 2015, during a traffic stop, when he was arrested on a bench warrant and admitted he was hiding eight baggies of marijuana in his boxers.

…

So that’s the data going into the algorithm—the facets that Northpointe says indicate future criminality. But what about the steps that the algorithm itself takes to arrive at a score? It turns out that those have their problems as well. After ProPublica released its report, several groups of researchers, each working independently at different institutions, decided to take a closer look at ProPublica’s findings. They didn’t find a clear origin for the bias—a specific piece of the algorithm gone wrong. Instead, they found that ProPublica and Northpointe were simply looking at the concept of “fairness” in very different ways. At Northpointe, fairness was defined as parity in accuracy: the company tuned its model to ensure that people of different races who were assigned the same score also had the same recidivism rates.

The Alignment Problem: Machine Learning and Human Values

by

Brian Christian

Published 5 Oct 2020

In judiciaries across the country, more and more judges are coming to rely on algorithmic “risk-assessment” tools to make decisions about things like bail and whether a defendant will be held or released before trial. Parole boards are using them to grant or deny parole. One of the most popular of these tools was developed by the Michigan-based firm Northpointe and goes by the name Correctional Offender Management Profiling for Alternative Sanctions—COMPAS, for short.5 COMPAS has been used by states including California, Florida, New York, Michigan, Wisconsin, New Mexico, and Wyoming, assigning algorithmic risk scores—risk of general recidivism, risk of violent recidivism, and risk of pretrial misconduct—on a scale from 1 to 10.

…

For more on Brennan and Wells’s early 1990s work on inmate classification in jails, see Brennan and Wells, “The Importance of Inmate Classification in Small Jails.” 11. Harcourt, Against Prediction. 12. Burke, A Handbook for New Parole Board Members. 13. Northpointe founders Tim Brennan and Dave Wells developed the tool that they called COMPAS in 1998. For more details on COMPAS, see Brennan, Dieterich, and Oliver, “COMPAS,” as well as Brennan and Dieterich, “Correctional Offender Management Profiles for Alternative Sanctions (COMPAS).” COMPAS is described as a “fourth-generation” tool by Andrews, Bonta, and Wormith, “The Recent Past and Near Future of Risk and/or Need Assessment.” One of the leading “third-generation” risk-assessment tools prior to COMPAS is called the Level of Service Inventory (or LSI), which was followed by the Level of Service Inventory–Revised (LSI-R).

…

Breland, Keller, and Marian Breland. “The Misbehavior of Organisms.” American Psychologist 16, no. 11 (1961): 681–84. Brennan, T., W. Dieterich, and W. Oliver. “COMPAS: Correctional Offender Management for Alternative Sanctions.” Northpointe Institute for Public Management, 2007. Brennan, Tim, and William Dieterich. “Correctional Offender Management Profiles for Alternative Sanctions (COMPAS).” In Handbook of Recidivism Risk/Needs Assessment Tools, 49–75. Wiley Blackwell, 2018. Brennan, Tim, and Dave Wells. “The Importance of Inmate Classification in Small Jails.” American Jails 6, no. 2 (1992): 49–52.

The Age of Entitlement: America Since the Sixties

by

Christopher Caldwell

Published 21 Jan 2020

Developed by computer statisticians at a company called Northpointe in Colorado and used in Broward County, Florida, and elsewhere, the Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) software used algorithms to decide whether to release, parole, or continue to lock up a given prisoner. There were serious constitutional problems with using private software packages that way. Tech companies resist divulging the algorithms that account for much of their products’ value. Google’s are top-secret, and so were Northpointe’s. A convict could thus be denied an explicit explanation of the grounds on which he received harsher or more lenient treatment.

…

W., 162, 178 drug abuse and, 242 economic policy and, 179 Bush, George W., 177, 180, 243 economic policy and, 179, 182 Iraq War and, 184 presidential election of 2004 and, 84 busing (for school integration), 22, 77, 146 Cadillac, 88 Calvin Klein, 216–217 Cameron, David, 213 Campanis, Al, 153–156, 186 capitalism, 51, 101, 104, 111, 126, 130, 161, 192, 199, 205 Carnegie, Andrew, 204–205, 207 Carson, Rachel, 4 Carter, Jimmy, 80, 103, 110 Carter, Rosalynn, 65 Case, Anne, 241 Casey, George W., Jr., 189 Castile, Philando, 266 Catholic church, 79 CBS, 154, 155 Celler, Emanuel, 113 censorship, 155, 156 Centers for Disease Control, 192 Central Intelligence Agency (CIA), 194 “Charlie Freak” (song), 242 Cheddar, 203 Cheney, Richard, 104 Chideya, Farai, 249 child care, 51 childbearing, 59, 60 children’s rights, 35 China, 67, 70, 71, 126 Christakis, Erika, 270–273 Christakis, Nicholas, 270–273 Christianity, 79–80 fundamentalism, 79 evangelism, 79–80 Christianity Today, 56 Christie, Chris, 206 Chrysler Corporation, 88 cigarette smoking, 38, 43, 129, 170 Citibank, 180, 223 Citizens Band radio, 97–98 City College of New York, 157 Civil Rights Act of 1866, 26 Civil Rights Act of 1964, 3, 8–9, 12, 14, 18–22, 26, 28, 32, 33, 44, 57, 69, 116, 146, 147, 159, 172, 229, 231, 236, 238, 277, 278 Civil Rights Commission, 9 civil rights movement, 3–6, 8–12, 15–35, 43, 65, 72, 89, 99, 109, 171, 179, 207, 229, 268 as a “Second Reconstruction,” 11 (see also human rights, Jim Crow, race riots, racism/racial discrimination) Civil War, 8, 11, 13, 78, 121, 211 Clairol, 45 Clark, Kenneth (art historian), 248 Clark, Kenneth (psychologist), 254 Clement, Paul, 225 Clifford, Clark, 69 Clinton, Bill, 101, 137, 169, 175, 176, 205, 220, 222, 226 civil rights and, 179 economic policy and, 177, 182 presidency of, 102 presidential election of 1992 and, 163 State of the Union address (1966) by, 177 CNN, 163, 170 Coates, Ta-Nehisi, 248, 249, 253, 257, 263, 268 Coca-Cola, 223 Cochran, Johnnie, 260 Cold War, 99, 
135, 159, 161–163, 209, 235 Cole, David, 221 Coleridge, Samuel Taylor, 137 Columbia University, 76, 157 Comfort, Alex The Joy of Sex, 49 Commentary magazine, 33 Communism, 5, 70, 160, 165, 248–249 Community Reinvestment Act of 1977, 180 computers, 39, 132–136, 138, 200–202 Concorde, 133 Congress of Industrial Organizations (CIO), 16 conservatism, 82, 95–97, 100, 130, 140, 163, 164, 169 Constitution of the United States, 6, 13–17, 25, 34, 64, 150, 159, 194, 222, 229, 277 1st Amendment, 14, 123, 158, 231, 278 4th Amendment, 193–94 13th Amendment, 5 14th Amendment, 5, 13, 14, 57, 211 Consumer Financial Protection Bureau, 211 “Convoy” (song), 98 Cook, Paul, 87–88 Cordray, Richard, 211 Cornell University, 30–31, 273 corporations, 129, 174, 175 Correctional Offender Management Profiling for Alternative Sanctions (COMPAS), 200–202 Coughlin, Charles, 190 Coulter, Ann, 279 Counterculture, 79–82, 88, 95 Cox, Harvey, 79 crack (cocaine), 29, 242 credit, 108, 182 Crenshaw, Kimberlé, 22, 34 crime, 29, 89, 260, 261 Cropsey, Joseph, 143 Cross, Irv, 155 Crowley, James, 186–187 Cullman, Lewis B., 129 “cultural appropriation,” 247–248 Curtatone, Joseph, 265 cyberspace, 190, 194 Daily Mail, 256 Dallas, Texas, 266, 267 Davies, Ray, 62, 133 Davis, Jefferson, 184 Deaton, Angus, 241 Defense Advanced Research Projects Agency (DARPA), 129 Defense of Marriage Act (DOMA) of 1996, 168–169, 220, 222–224, 227, 228 Dell Computer, 209, 223 democracy, 159, 170, 215 Democratic National Convention 1948, 26 1968, 72, 75, 157 2004, 188 Denny, Reginald, 259 desegregation, 4, 10, 13–14, 21, 35, 109, 146, 149 busing (for school integration), 22, 77, 146 of schools, 4, 13–14, 77 (see also Jim Crow, race riots, racism/racial discrimination, segregation) Deutsche Bank, 232 DeVos family, 209 Dickinson, Emily, 153 Diem, Ngo Dinh, 4 Dinesen, Isak (Karen Blixen), 130 diversity, 143–144, 146, 148, 155, 159–161, 166, 169, 170, 189, 200, 202–204, 208, 240, 261, 275 ethnic-studies departments, 157 
“intersectionality,” 120 divorce, 101 Dixmoor, Illinois, 28 Doe v.

…

A convict could thus be denied an explicit explanation of the grounds on which he received harsher or more lenient treatment. But in this case the complaint involved not Northpointe’s property rights or its transparency but its results. The COMPAS algorithm tended to assess black inmates as more likely to reoffend than whites. Problem was, they were. Any accurate system gauging the probabilities of reoffending would have shown a similar imbalance. But neutrality was no defense. A Guardian report described the working of the COMPAS software as “stunning,” “frightening,” and “nefarious.” It is worth again recalling Alan David Freeman’s distinction between the “perpetrator” perspective on civil rights (which seeks only to eliminate bias, and will leave things alone when bias cannot be proved) and the “victim” perspective (which assumes bias, and seeks to eliminate the inequality associated with it).

Artificial Unintelligence: How Computers Misunderstand the World

by

Meredith Broussard

Published 19 Apr 2018

A prominent example of algorithmic accountability reporting is ProPublica’s story “Machine Bias,” published in 2016.6 ProPublica reporters found that an algorithm used in judicial sentencing was biased against African Americans. Police administered a questionnaire to people who were arrested, and the answers were fed into a computer. The Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) algorithm then spit out a score that “predicted” how likely the person was to commit a crime in the future. The score was given to judges in the hopes that the score would allow judges to make more “objective,” data-driven decisions about sentencing. However, this resulted in African Americans receiving longer jail sentences than whites.

…

W., 46–47 Angwin, Julia, 154–156 App hackathons, 165–174 Apple Watch, 157 Artificial intelligence (AI) beginnings, 69–73 expert systems, 52–53, 179 fantasy of, 132 in film, 31, 32, 198 foundations of, 9 future of, 194–196 games and, 33–37 general, 10–11, 32 narrow, 10–11, 32–33, 97 popularity of, 90 real vs. imagined, 31–32 research, women in, 158 sentience challenge in, 129 Asimov, Isaac, 71 Assembly language, 24 Association for Computing Machinery (ACM), 145 Astrolabe, 76 Asymmetry, positive, 28 Automation technology, 176–177 Autopilot, 121 Availability heuristic, 96 Babbage, Charles, 76–77 Bailiwick (Broussard), 182–185, 190–191, 193 Barlow, John Perry, 82–83 Bell Labs, 13 Bench, Shane, 84 Ben Franklin Racing Team (Little Ben), 122–127 Berkman Klein Center (Harvard), 195 Berners-Lee, Tim, 4–5, 47 Bezos, Jeff, 73, 115 Bias in algorithms, 44, 150, 155–157 in algorithms, racial, 44, 155–156 genius myth and, 83–84 programmers and, 155–158 in risk ratings, 44, 155–156 in STEM fields, 83–84 Bill & Melinda Gates Foundation, 60–61, 157 Bipartisan Campaign Reform Act, 180 Bitcoin, 159 Bizannes, Elias, 165, 166, 171 Blow, Charles, 95 Boggs, David, 67–68 Boole, George, 77 Boolean algebra, 77 Borden, Brisha, 154–155 Borsook, Paulina, 82 Bowhead Systems Management, 137 boyd, danah, 195 Bradley, Earl, 43 Brains 19–20, 95, 128–129, 132, 144, 150 Brand, Stewart, 5, 29, 70, 73, 81–82 Brin, Sergei, 72, 151 Brown, Joshua D., 140, 142 Bump, Philip, 186 Burroughs, William S., 77 Burroughs, William Seward, 77 Calculation vs. 
consciousness, 37 Cali-Fame, 186 California, drug use in, 158–159 Cameron, James, 95 Campaign finance, 177–186, 191 Čapek, Karel, 129 Caprio, Mike, 170–171 Carnegie Mellon University, autonomous vehicle research ALVINN, 131 University Racing Team (Boss), 124, 126–127, 130–131 Cars deaths associated with, 136–138, 146 distracted driving of, 146 human-centered design for, 147 Cars, self-driving 2005 Grand Challenge, 123–124 2007 Grand Challenge, 122–127 algorithms in, 139 artificial intelligence in, 129–131, 133 deaths in, 140 driver-assistance technology from, 135, 146 economics of, 147 experiences in, 121–123, 125–126, 128 fantasy of, 138, 142, 146 GPS hacking, 139 LIDAR guidance system, 139 machine ethics, 144–145, 147 nausea in, 121–123 NHTSA categories for, 134 problems/limitations, 138–140, 142–146 research funding, 133 SAE standards for levels of automation, 134–135 safety, 136–137, 140–142, 143, 146 sentience in, 132 Uber’s use of, 139 Udacity open-source car competition, 135 Waymo technology, 136 CERN, 4–5 Cerulo, Karen A., 28 Chess, 33 Children’s Online Privacy Protection Act (COPPA), 63–64 Chinese Room argument, 38 Choxi, Heteen, 122 Christensen, Clayton, 163 Chrome, 25, 26 Citizens United, 177, 178, 180 Clarke, Arthur C., 71–72 Client-server model, 27 Clinkenbeard, John, 172 Cloud computing, 26, 52, 196 Cohen, Brian, 56–57 Collins, John, 117 Common Core State Standards, 60–61 Communes, 5, 10 Computer ethics, 144–145 Computer Go, 34–36 Computers assumptions about vs. reality of, 8 components, identifying, 21–22 consciousness, 17 early, 196–199 human, 77–78, 198 human brains vs., 19–20, 128–129, 132, 144, 150 human communication vs., 169–170 human mind vs., 38 imagination, 128 limitations, 6–7, 27–28, 37–39 memory, 131 modern-day, development of, 75–79 operating systems, 24–25 in schools, 63–65 sentience, 17, 129 Computer science bias in, 79 ethical training, 145 explaining the world through, 118 women in, 5 Consciousness vs. 
calculation, 37 Constants in programming, 88 Content-management system (CMS), 26 Cooper, Donna, 58 Copeland, Jack, 74–75 Correctional Offender Management Profiling for Alternative Sanctions (COMPAS), 44, 155–156 Cortana, 72 Counterculture, 5, 81–82 Cox, Amanda, 41–42 Crawford, Kate, 194 Crime reporting, 154–155 CTB/McGraw-Hill, 53 Cumberbatch, Benedict, 74 Cyberspace activism, 82–83 DarkMarket, 159 Dark web, 82 Data on campaign finance, 178–179 computer-generated, 18–19 defined, 18 dirty, 104 generating, 18 people and, 57 social construction of, 18 unreasonable effectiveness of, 118–119, 121, 129 Data & Society, 195 DataCamp, 96 Data density theory, 169 Data journalism, 6, 43–47, 196 Data Journalism Awards, 196 Data journalism stories cost-benefit of, 47 on inflation, 41–42 Parliament members’ expenses, 46 on police speeding, 43 on police stops of people of color, 43 price discrimination, 46 on sexual abuse by doctors, 42–43 Data Privacy Lab (Harvard), 195 Data Recognition Corporation (DRC), 53 Datasets in machine learning, 94–95 Data visualizations, 41–42 Deaths distracted driving accidents, 146 from poisoning, 137 from road accidents, 136–138 in self-driving cars, 140 Decision making computational, 12, 43, 150 data-driven, 119 machine learning and, 115–116, 118–119 subjective, 150 Deep Blue (IBM), 33 Deep learning, 33 Defense Advanced Research Projects Agency (DARPA) Grand Challenge, 123, 131, 133, 164 Desmond, Matthew, 115 Detroit race riots story, 44 Dhondt, Rebecca, 58 Diakopoulos, Nicholas, 46 Difference engine, 76 Differential pricing and race, 116 Digital age, 193 Digital revolution, 193–194 Dinakar, Karthik, 195 Django, 45, 89 DocumentCloud, 52, 196 Domino’s, 170 Drone technology, 67–68 Drug marketplace, online, 159–160 Drug use, 80–81, 158–160 Duncan, Arne, 51 Dunier, Mitchell, 115 Edison, Thomas, 77 Education change, implementing in, 62–63 Common Core State Standards, 60–61 competence bar in, 150 computers in schools, 63–65 equality in, 77–78 funding, 60 
supplies, availability of, 58 technochauvinist solutions for, 63 textbook availability, 53–60 unpredictability in, 62 18F, 178–179 Electronic Frontier Foundation, 82 Elevators, 156–157 Eliza, 27–28 Emancipation Proclamation, 78 Engelbart, Doug, 25, 80–81 Engineers, ethical training, 145 ENIAC, 71, 194, 196–199 Equality in education, 77–78 techno hostility toward, 83 technological, creating, 87 technology vs., 115, 156 for women, 5, 77–78, 83–85, 158 Essa, Irfan, 46 Ethics, 144–145, 147 EveryBlock, 46 Expertise, cognitive fallacies associated, 83 Expert systems, 52–53, 179 Facebook, 70, 83, 152, 158, 197 Facial recognition, 157 Fact checking, 45–46 Fake news, 154 Family Educational Rights and Privacy Act (FERPA), 63–64 FEC, McCutcheon v., 180 FEC, Speechnow.org v., 180 FEC.gov, 178–179 Film, AI in, 31, 32, 198 FiveThirtyEight.com, 47 Foote, Tully, 122–123, 125 Ford Motor Company, 140 Fowler, Susan, 74 Fraud campaign finance, 180 Internet advertising, 153–154 Free press, role of, 44 Free speech, 82 Fuller, Buckminster, 74 Futurists, 89–90 Games, AI and, 33–37 Gates, Bill, 61 Gates, Melinda, 157–158 Gawker, 83 Gender equality, hostility toward, 83 Gender gap, 5, 84–85, 115, 158 Genius, cult of, 75 Genius myth, 83–84 Ghost-in-the-machine fallacy, 32, 39 Giffords, Gabby, 19–20 GitHub, 135 Go, 33–37 Good Old-Fashioned Artificial Intelligence (GOFAI), 10 Good vs. 
popular, 149–152, 160 Google, 72 Google Docs, 25 Google Maps API, 46 Google Street View, 131 Google X, 138, 151, 158 Government campaign finance, 177–186, 191 cyberspace activism, antigovernment ideology, 82–83 tech hostility toward, 82–83 Graphical user interface (GUI), 25, 72 Greyball, 74 Guardian, 45, 46 Hackathons, 165–174 Hackers, 69–70, 82, 153–154, 169, 173 Halevy, Alon, 119 Hamilton, James T., 47 Harley, Mike, 140 Harris, Melanie, 58–59 Harvard, Andrew, 184 Harvard University Berkman Klein Center, 195 Data Privacy Lab, 195 mathematics department, 84 “Hello, world” program, 13–18 Her, 31 Hern, Alex, 159 Hernandez, Daniel, Jr., 19 Heuristics, 95–96 Hillis, Danny, 73 Hippies, 5, 82 HitchBOT, 69 Hite, William, 58 Hoffman, Brian, 159 Holovaty, Adrian, 45–46 Home Depot, 46, 115, 155 Hooke, Robert, 88 Houghton Mifflin Harcourt (HMH) HP, 157 Hugo, Christoph von, 145 Human-centered design, 147, 177 Human computers, 77–78, 198 Human error, 136–137 Human-in-the-loop systems, 177, 179, 187, 195 Hurst, Alicia, 164 Illinois quarter, 153–154 Imagination, 89–90, 128 Imitation Game, The (film), 74 Information industry, annual pay, 153 Injury mortality, 137 Innovation computational, 25 disruptive, 163, 171 funding, 172–173 hackathons and, 166 Instacart, 171 Intelligence in machine learning Interestingness threshold, 188 International Foundation for Advanced Study, 81 Internet advertising model, 151 browsers, 25, 26 careers, annual pay rates, 153 core values, 150 drug marketplace, 159–160 early development of the, 5, 81 fraud, 153–154 online communities, technolibertarianism in culture of, 82–83 rankings, 72, 150–152 Internet Explorer, 25 Internet pioneers, inspiration for, 5, 81–82 Internet publishing industry, annual pay, 153 Internet search, 72, 150–152 Ito, Joi, 147, 195 Jacquard, Joseph Marie, 76 Java, 89 JavaScript, 89 Jobs, Steve, 25, 70, 72, 80, 81 Jones, Paul Tudor, 187–188 Journalism.

…

Each of these people was given a future risk rating when they were arrested—a move familiar from a movie. Borden, who is black, was rated high risk. Prater, who is white, was rated low risk. The risk algorithm, COMPAS, attempted to measure which detainees are at risk of recidivism, or reoffending. Northpointe, the company that developed COMPAS, is one of many such companies that are trying to use quantitative methods to enhance policing. It’s not malicious; most of the companies hire well-intentioned criminologists who believe they are operating within the bounds of data-driven, scientific thinking on criminal behavior.

Outnumbered: From Facebook and Google to Fake News and Filter-Bubbles – the Algorithms That Control Our Lives

by

David Sumpter

Published 18 Jun 2018

Black defendants who didn’t go on to commit a crime were more likely to be wrongly classified as high risk than white defendants. When Julia and her colleagues published their article, Tim and Northpointe were quick to respond. They wrote a research report arguing that the ProPublica analysis was wrong.4 They argued that COMPAS held the same standards as other tried and tested algorithms. They claimed that Julia and her colleagues had misunderstood what it means for an algorithm to make an error and that their algorithm was ‘well-calibrated’ for white and black defendants. The debate between Northpointe and ProPublica made me realise just how complicated the issue of bias was. These were smart people, and their exchange of words covered nearly 100 pages of arguments and counter-arguments.

…

Julia and her ProPublica colleagues’ argument about false positives and false negatives is powerful, but Tim and his Northpointe colleagues’ counter-argument about algorithm calibration is solid. Given the same table of data, two separate groups of professional statisticians had drawn opposite conclusions. Neither of them had made a mistake in their calculations. Which of them was correct? It took two Stanford PhD students, Sam Corbett-Davies and Emma Pierson, working together with two professors, Avi Feller and Sharad Goel, to solve the puzzle.6 They confirmed Northpointe’s claim that Table 6.1 showed the COMPAS algorithm gave equally good predictions, independent of race.
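A small sketch may help show how one table can support both readings. The counts below are hypothetical, chosen only for illustration; they are not the Broward County figures or Sumpter's Table 6.1, and the names `Confusion`, `group_a`, and `group_b` are invented here. Calibration is approximated as the share of people flagged high risk who went on to reoffend, and the false positive rate as the share of non-reoffenders who were flagged anyway.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Outcome counts for one group (hypothetical numbers, not real COMPAS data)."""
    tp: int  # flagged high risk, did reoffend
    fp: int  # flagged high risk, did not reoffend
    fn: int  # flagged low risk, did reoffend
    tn: int  # flagged low risk, did not reoffend

    def ppv(self) -> float:
        """Share of high-risk flags that were followed by reoffending."""
        return self.tp / (self.tp + self.fp)

    def fpr(self) -> float:
        """Share of non-reoffenders who were nevertheless flagged high risk."""
        return self.fp / (self.fp + self.tn)

    def base_rate(self) -> float:
        """Share of the whole group that reoffended."""
        total = self.tp + self.fp + self.fn + self.tn
        return (self.tp + self.fn) / total


# Two illustrative groups of 1,000 people with different reoffending rates.
groups = {
    "group_a": Confusion(tp=300, fp=200, fn=200, tn=300),
    "group_b": Confusion(tp=180, fp=120, fn=120, tn=580),
}

for name, c in groups.items():
    print(f"{name}: base rate={c.base_rate():.2f} "
          f"PPV={c.ppv():.2f} FPR={c.fpr():.2f}")
```

In this made-up table a high-risk flag means the same thing in both groups (the same share of flagged people reoffend), which is the calibration reading. Yet non-reoffenders in the group with the higher base rate are flagged more often, which is the false-positive reading.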

…

Evaluating the predictive validity of the COMPAS risk and needs assessment system. Criminal Justice and Behavior, 36(1), 21–40. 3 www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing 4 Dieterich, W., Mendoza, C. and Brennan, T. 2016. COMPAS risk scales: Demonstrating accuracy equity and predictive parity. Technical report, Northpointe, July 2016. www.northpointeinc.com/northpointe-analysis. 5 www.propublica.org/article/how-we-analyzed-the-compas-recidivism-algorithm 6 Corbett-Davies, S., Pierson, E., Feller, A., Goel, S. and Huq, A. (2017) ‘Algorithmic decision making and the cost of fairness.’ In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 797-806.

The Art of Statistics: How to Learn From Data

by

David Spiegelhalter

Published 2 Sep 2019

• Lack of transparency: Some algorithms may be opaque due to their sheer complexity. But even simple regression-based algorithms become totally inscrutable if their structure is private, perhaps through being a proprietary commercial product. This is one of the major complaints about so-called recidivism algorithms, such as Northpointe’s Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) or MMR’s Level of Service Inventory—Revised (LSI-R).5 These algorithms produce a risk-score or category that can be used to guide probation decisions and sentencing, and yet the way in which the factors are weighted is unknown. Furthermore, since information about upbringing and past criminal associates is collected, decisions are not solely based on a personal criminal history but background factors that have been shown to be associated with future criminality, even if the underlying common factor is poverty and deprivation.

The Art of Statistics: Learning From Data

by

David Spiegelhalter

Published 14 Oct 2019

Lack of transparency: Some algorithms may be opaque due to their sheer complexity. But even simple regression-based algorithms become totally inscrutable if their structure is private, perhaps through being a proprietary commercial product. This is one of the major complaints about so-called recidivism algorithms, such as Northpointe’s Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) or MMR’s Level of Service Inventory – Revised (LSI-R).5 These algorithms produce a risk-score or category that can be used to guide probation decisions and sentencing, and yet the way in which the factors are weighted is unknown. Furthermore, since information about upbringing and past criminal associates is collected, decisions are not solely based on a personal criminal history but background factors that have been shown to be associated with future criminality, even if the underlying common factor is poverty and deprivation.

Code Dependent: Living in the Shadow of AI

by

Madhumita Murgia

Published 20 Mar 2024

Apart from the unanticipated consequences of AI systems like ProKid, there’s also been a wider debate about whether AI-made decisions, such as predicting a person’s criminality or risk of recidivism, are any fairer than human ones. A Washington Post analysis of the COMPAS system found that ProPublica’s conclusions of racism in the software were partly due to a different expectation of fairness from that of Northpointe, the owners of COMPAS.16 Northpointe’s definition was that their scores essentially implied the same risk of recidivism, regardless of the defendant’s race. But because black defendants went on to reoffend at higher rates overall, it meant more black defendants were classified as high-risk. Meanwhile, ProPublica thought it unfair that black defendants who did not go on to reoffend were therefore subjected to harsher treatment by the legal system than their white counterparts.
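The tension between the two definitions can be sketched with confusion-matrix arithmetic. The identity used below follows directly from the definitions of the rates; the function name and the input values are illustrative assumptions, not figures from the Washington Post or ProPublica analyses. If a high-risk flag carries the same meaning in every group (equal positive predictive value) and the tool catches the same share of eventual reoffenders (equal true positive rate), then the false positive rate is pinned down by the group's base rate of reoffending.

```python
def false_positive_rate(base_rate: float, ppv: float, tpr: float) -> float:
    """False positive rate implied by a base rate, PPV and true positive rate.

    Derivation from the definitions, for a group of size N:
      true positives  = tpr * base_rate * N
      false positives = true positives * (1 - ppv) / ppv
      negatives       = (1 - base_rate) * N
      FPR             = false positives / negatives
    """
    if not 0 < base_rate < 1:
        raise ValueError("base_rate must be strictly between 0 and 1")
    if not 0 < ppv <= 1:
        raise ValueError("ppv must be in (0, 1]")
    return tpr * (base_rate / (1 - base_rate)) * ((1 - ppv) / ppv)


# Hypothetical values: hold PPV and TPR fixed, vary only the base rate.
for base_rate in (0.3, 0.4, 0.5):
    fpr = false_positive_rate(base_rate, ppv=0.6, tpr=0.6)
    print(f"base rate {base_rate:.0%}: implied FPR = {fpr:.3f}")
```

Under these assumptions the implied false positive rate rises with the base rate, so equalising it across groups would require giving up equal PPV or equal TPR.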

…

Q. ref1 Massachusetts Institute of Technology ref1 Material Bank ref1 Mbembe, Achille ref1, ref2 Meareg, Abraham ref1 Megvii ref1 Meituan ref1, ref2 Mejias, Ulises: The Costs of Connection ref1, ref2 Mercado Libre ref1 Merck ref1 Meredith, Sarah ref1 Meta ref1 advertising and ref1 communal violence and ref1 content moderators ref1, ref2 databases of faces and ref1 ethics policy ref1 metaverse and ref1 Sama and ref1, ref2, ref3, ref4, ref5 violence-inciting content ref1 see also Facebook Metiabruz ref1 Metropolitan Police ref1, ref2, ref3, ref4 Mexico ref1, ref2, ref3, ref4, ref5 Miceli, Milagros ref1, ref2, ref3, ref4 Microsoft Azure software ref1, ref2 Bing ref1 Bletchley Park summit (2023) and ref1 ethical AI charter ref1 face recognition systems and ref1, ref2 gig workers and ref1 Microsoft-Salta predictive system/public policy design and ref1, ref2 OpenAI and ref1 Rome Call and ref1, ref2, ref3 Midjourney ref1, ref2 Mighty AI ref1 migration ref1, ref2, ref3, ref4, ref5, ref6, ref7, ref8, ref9, ref10, ref11, ref12, ref13, ref14 Ministry of Early Childhood, Argentina ref1 Mort, Helen ref1, ref2, ref3, ref4, ref5, ref6, ref7, ref8, ref9 A Line Above the Sky ref1 ‘Deepfake: A Pornographic Ekphrastic’ ref1 ‘This Is Wild’ ref1, ref2 Mosul, Iraq ref1 Motaung, Daniel ref1, ref2, ref3, ref4, ref5 Mothers (short film) ref1 ‘move fast and break things’ ref1 Movement, The ref1 Mozur, Paul ref1 M-PESA ref1, ref2 MRIs ref1 Mukasa, Dorothy ref1 multi-problem families ref1 Mumbai, India ref1, ref2, ref3, ref4 Murati, Mira ref1 Museveni, Yoweri ref1 Musk, Elon ref1, ref2 Muslims ref1, ref2, ref3, ref4, ref5, ref6, ref7, ref8, ref9, ref10, ref11, ref12, ref13 Mutsaers, Paul ref1 Mutemi, Mercy ref1, ref2 My Image My Choice ref1 Nairobi, Kenya ref1, ref2 facial recognition in ref1 gig workers in ref1, ref2, ref3, ref4 Sama in ref1, ref2, ref3, ref4, ref5 Nandurbar, Western India ref1, ref2, ref3 National Health Service ref1, ref2 National Union of Professional App-Based Transport 
Workers ref1 National University of Defense Technology, China ref1 Navajo Nation ref1, ref2 NEC ref1 necropolitics ref1 Neruda, Pablo ref1 Netflix ref1 New Delhi, India ref1, ref2, ref3 New York Fashion Week ref1 New York Times ref1, ref2, ref3 Neymar ref1 Ngito, Benjamin ref1 Ni un Repartidor Menos (Not one Delivery Worker Killed) ref1 9/11 ref1, ref2 ‘no-fly’ zones ref1 Noble, Safiya Umoja ref1 non-disclosure agreements (NDAs) ref1, ref2, ref3, ref4 Northpointe ref1 Not Your Porn ref1 Notting Hill carnival ref1 NTech Labs ref1 Obama, Barack ref1 Obermeyer, Ziad ref1 Oculus Quest 2 virtual reality headset ref1 Ofqual ref1 Ola Cabs ref1, ref2 Olympics (2012) ref1, ref2 One Card System ref1 Online Safety Bill, UK ref1 OpenAI AI alignment and ref1 Bletchley Park summit and ref1 ChatGPT and ref1, ref2, ref3, ref4, ref5 creativity and ref1, ref2 Rome Call and ref1 Sama and ref1, ref2 Operation Condor ref1 Optum ref1 organ-allocation algorithm ref1 Orwell, George ref1, ref2 osteoarthritis ref1 outsourcing ref1, ref2, ref3, ref4, ref5, ref6, ref7, ref8, ref9, ref10, ref11 Ovcha Kupel refugee camp ref1 Oxford Internet Institute ref1 pain, African Americans and ref1 Palestine ref1 Parkinson’s disease ref1 Parks, Nijer ref1, ref2 Parvati (tuberculosis patient) ref1, ref2, ref3, ref4 Peled, Nirit ref1 Pena, Paz ref1, ref2 Perth, Western Australia ref1, ref2, ref3, ref4, ref5, ref6, ref7 Photoshop ref1, ref2, ref3 physiognomy ref1 Pi ref1 pilot programmes abandonment of ref1, ref2 ‘graveyard of pilots’ ref1 public disclosure of ref1 replacement of with human solution ref1 PimEyes ref1 policing CCTV cameras and see CCTV Crime Anticipation System ref1 De Moeder Is de Sleutel (The Mother Is the Key) ref1 diffuse policing ref1 facial recognition and see facial recognition forgiveness and ref1 predictive policing algorithms ref1 ProKid ref1, ref2, ref3 Right To Be Forgotten and ref1 Polosukhin, Illia ref1, ref2 Pontifical Academy of Sciences ref1 Pontifical Gregorian University 
ref1 pornography deepfake see deepfakes revenge porn ref1, ref2, ref3, ref4, ref5 Portal De La Memoria ref1 Posada, Julian ref1 pregnancy, teenage ref1 abandonment of AI pilot in Argentina ref1 abortion and ref1, ref2, ref3, ref4, ref5, ref6, ref7 digital welfare state and ref1 Microsoft Azure software and ref1 public disclosure of AI pilot in Argentina ref1 replacement of AI pilot with human solution in Argentina ref1 Princeton ref1 ProKid ref1, ref2, ref3 ProPublica ref1, ref2 proxy agents ref1, ref2 PTSD (post-traumatic stress disorder) ref1, ref2 public disclosure ref1, ref2 public-private surveillance state ref1 pulse oximeter ref1 Puma ref1 Puna Salteña, Andes ref1 qTrack ref1, ref2, ref3 Qure.ai ref1, ref2, ref3, ref4 qXR ref1 race facial recognition and ref1, ref2, ref3, ref4, ref5 medical treatment and ref1, ref2, ref3 predictive policing and ref1, ref2, ref3, ref4, ref5 radiologists ref1, ref2, ref3, ref4, ref5, ref6 Raji, Deborah ref1, ref2 Rappi ref1 recruitment systems ref1 Red Caps ref1 red-teamers ref1 Reddit ref1, ref2, ref3 regulation ref1 benefits fraud ref1 content moderators ref1 deepfakes ref1, ref2, ref3, ref4, ref5, ref6, ref7, ref8, ref9, ref10 exam grades ref1 facial recognition ref1, ref2, ref3, ref4 Foxglove and see Foxglove hateful content ref1 military weapons ref1, ref2, ref3, ref4 non-disclosure agreements (NDAs) ref1, ref2, ref3, ref4 organ-allocation algorithm ref1 political prisoners in Guantanamo Bay ref1 Right To Be Forgotten ref1 visa-awarding algorithms ref1 Reprieve ref1, ref2 responsible innovation ref1 Rest of the World ref1 retinopathy ref1 revenge porn ref1, ref2, ref3, ref4, ref5 Ricanek, Karl ref1, ref2 Ricaurte, Paola ref1, ref2 Right To Be Forgotten ref1 Roblox ref1 Roderick, Emily ref1 Rome Call ref1 Roose, Kevin ref1 Rosen, Rabbi David ref1, ref2 Russell, Stuart ref1 Salta, Argentina ref1, ref2 Sama ref1, ref2, ref3, ref4, ref5, ref6, ref7 Samii, Armin ref1, ref2, ref3, ref4, ref5 Sana’a, Yemen ref1 SAP ref1, ref2, 
ref3 Sardjoe, Damien ref1, ref2 Sardjoe, Diana ref1, ref2, ref3, ref4, ref5, ref6, ref7, ref8, ref9 Sardjoe, Nafayo ref1, ref2, ref3 Scale AI ref1 Scarlett, Cher ref1 Scarlet Letter, The ref1 Schwartz, Steven ref1 Scsky ref1 Secretariat for Early Childhood and Families ref1 self-attention ref1 SenseTime ref1 Sensity AI ref1, ref2, ref3 Sequoia Capital ref1 sexual assault ref1, ref2, ref3 Shelley, Mary: Frankenstein ref1 Silver Lake ref1 Singh, Dr Ashita ref1, ref2, ref3, ref4 Singh, Dr Deepak ref1 ‘slaveroo’ ref1 Slyck, Milo Van ref1 Smith, Brad ref1, ref2, ref3, ref4, ref5 Snow, Olivia ref1 social media ref1, ref2, ref3, ref4 content moderators ref1, ref2, ref3, ref4, ref5, ref6 deepfakes and ref1, ref2, ref3, ref4, ref5, ref6 electoral manipulation and ref1, ref2 SoftBank ref1 South Wales Police ref1 Sri Lanka: Easter Day bombings (2019) ref1 Stability AI ref1 Stable Diffusion ref1 Stratford, London ref1, ref2, ref3 Suleyman, Mustafa ref1 super-recognizers, Metropolitan Police ref1 Supreme Court, UK ref1 surveillance capitalism ref1, ref2 Syria ref1, ref2, ref3, ref4 targeting algorithms ref1, ref2, ref3, ref4 Taylor, Breonna ref1 Tekle, Fisseha ref1 Telegram ref1, ref2 Tesla ref1, ref2, ref3, ref4 Tezpur ref1 Thiel, Peter ref1 Thuo, David Mwangi ref1 Tiananmen Square massacre (1989) ref1, ref2, ref3, ref4 Tibet ref1, ref2 Tiger Global ref1 TikTok ref1, ref2, ref3, ref4, ref5 Top400 ref1, ref2, ref3, ref4, ref5, ref6, ref7 Top600 ref1, ref2, ref3, ref4, ref5, ref6, ref7, ref8, ref9, ref10 transformer ref1, ref2, ref3, ref4 translation ref1, ref2, ref3, ref4, ref5, ref6, ref7 TS-Cop ref1 Tséhootsooí Medical Center, Fort Defiance, Arizona ref1, ref2 tuberculosis ref1, ref2, ref3 Tuohy, Seamus ref1 Uber ref1, ref2, ref3, ref4, ref5, ref6, ref7, ref8 UberEats ref1, ref2 UberCheats ref1, ref2, ref3 Uganda ref1, ref2, ref3, ref4, ref5 Ukraine, war in ref1, ref2, ref3, ref4 UN (United Nations) Declaration of Human Rights ref1 Food and Agriculture Organization ref1 
Special Rapporteur on extreme poverty and human rights report on emergence of a digital welfare state (2019) ref1 University of Buenos Aires ref1 University of California Berkeley ref1 Los Angeles ref1 University of Michigan ref1 University of Western Australia ref1, ref2 Urtubey, Juan Manuel ref1, ref2, ref3, ref4 US Department of Defense ref1, ref2 US National Institutes of Health ref1 US Navy ref1 Uszkoreit, Jakob ref1 Valbuena, Tess ref1 Vara, Vauhini ref1 Vaswani, Ashish ref1 Vatican, Rome ref1, ref2, ref3 Vazquez Llorente, Raquel ref1 Venezuela ref1, ref2, ref3 Vice News ref1 virtual private network (VPN) ref1 visa awards ref1 vocabulary, AI-driven work-related ref1 voice-over artists ref1 Wadhwani AI ref1 wages app workers ref1, ref2, ref3, ref4, ref5, ref6, ref7, ref8, ref9, ref10, ref11 data annotation/data-labelling and ref1, ref2, ref3, ref4, ref5, ref6 global wage for AI data workers ref1, ref2 Walmart ref1, ref2 Wang, Maya ref1 Washington Post ref1 Waymo ref1 webcams ref1 WeChat ref1, ref2, ref3 ‘weights’ (strength of connections) ref1 welfare systems ref1, ref2, ref3, ref4 digital welfare state ref1, ref2 White, Andrew ref1 Whittaker, Meredith ref1 Wichi ref1 Wientjes, Jacqueline ref1 Wine, Bobi (Robert Kyagulanyi Ssentamu) ref1, ref2 Wipro ref1 Wired ref1, ref2, ref3, ref4, ref5 WITNESS ref1 Witt, Hays ref1 Woodbridge, New Jersey ref1 Woods, Kat ref1 worker collectives ref1 Worker Info Exchange ref1 World Health Organization ref1 Wright, Robin ref1 Writers Guild of America ref1 Xi Jinping ref1 Xinjiang, China ref1, ref2 X-rays ref1, ref2, ref3, ref4, ref5, ref6, ref7, ref8, ref9, ref10 YouTube ref1, ref2, ref3, ref4 Yu, Amber ref1 Yuan Yang ref1 Zuboff, Shoshana ref1 Zuckerberg, Mark ref1 About the Author MADHUMITA MURGIA is the first Artificial Intelligence Editor of the Financial Times and has been writing about AI, for Wired and the FT, for over a decade.

Hello World: Being Human in the Age of Algorithms

by

Hannah Fry

Published 17 Sep 2018

Its analysis highlights how easily algorithms can perpetuate the inequalities of the past. Nor is it to excuse the COMPAS algorithm. Any company that profits from analysing people’s data has a moral responsibility (if not yet a legal one) to come clean about its flaws and pitfalls. Instead, Equivant (formerly Northpointe), the company that makes COMPAS, continues to keep the insides of its algorithm a closely guarded secret, to protect the firm’s intellectual property.39 There are options here. There’s nothing inherent in these algorithms that means they have to repeat the biases of the past. It all comes down to the data you give them.

…

Julia Angwin, Jeff Larson, Surya Mattu and Lauren Kirchner, ‘Machine bias’, ProPublica, 23 May 2016, https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing. 30. ‘Risk assessment’ questionnaire, https://www.documentcloud.org/documents/2702103-Sample-Risk-Assessment-COMPAS-CORE.html. 31. Tim Brennan, William Dieterich and Beate Ehret (Northpointe Institute), ‘Evaluating the predictive validity of the COMPAS risk and needs assessment system’, Criminal Justice and Behavior, vol. 36, no. 1, 2009, pp. 21–40, http://www.northpointeinc.com/files/publications/Criminal-Justice-Behavior-COMPAS.pdf. According to a 2018 study, the COMPAS algorithm has a similar accuracy to an ‘ensemble’ of humans.

…

‘ambiguous images 211n13’ 23andMe 108–9 profit 109 promises of anonymity 109 sale of data 109 volume of customers 110 52Metro 177 abnormalities 84, 87, 95 acute kidney injuries 104 Acxiom 31 Adele 193 advertising 33 online adverts 33–5 exploitative potential 35 inferences 35 personality traits and 40–1 political 39–43 targeted 41 AF447 (flight) 131–3, 137 Afigbo, Chukwuemeka 2 AI (artificial intelligence) 16–19 algorithms 58, 86 omnipotence 13 threat of 12 see also DeepMind AI Music 192 Air France 131–3 Airbnb, random forests 59 Airbus A330 132–3 algebra 8 algorithmic art 194 algorithmic regulating body 70 algorithms aversion 23 Alhambra 156 Alton Towers 20–1 ALVINN (Autonomous Land Vehicle In a Neural Network) 118–19 Alzheimer’s disease 90–1, 92 Amazon 178 recommendation engine 9 ambiguous images 211n13 American Civil Liberties Union (ACLU) 17 Ancestry.com 110 anchoring effect 73 Anthropometric Laboratory 107–8 antibiotics 111 AOL accounts 2 Apple 47 Face ID system 165–6 arithmetic 8 art 175–95 algorithms 184, 188–9 similarity 187 books 178 films 180–4 popularity 183–4 judging the aesthetic value of 184 machines and 194 meaning of 194 measuring beauty 184–5 music 176–80 piano experiment 188–90 popularity 177, 178, 179 quality 179, 180 quantifying 184–8 social proof 177–8, 179 artifacts, power of 1-2 artificial intelligence (AI) see AI (artificial intelligence) association algorithms 9 asthma 101–2 identifying warning signs 102 preventable deaths 102 Audi slow-moving traffic 136 traffic jam pilot 136 authority of algorithms 16, 198, 199, 201 misuse of 200 automation aircraft 131–3 hidden dangers 133–4 ironies of 133–7 reduction in human ability 134, 137 see also driverless cars Autonomous Emergency Braking system 139 autonomy 129, 130 full 127, 130, 134, 138 autopilot systems A330 132 driverless cars 134 pilot training 134 sloppy 137 Tesla 134, 135, 138 bail comparing algorithms to human judges 59–61 contrasting predictions 60 success of algorithms 60–1 high-risk 
scores 70 Bainbridge, Lisanne 133–4, 135, 138 balance 112 Banksy 147, 185 Baril, David 171–2 Barstow 113 Bartlett, Jamie 44 Barwell, Clive 145–7 Bayes’ theorem 121–4, 225n30 driverless cars 124 red ball experiment 123–4 simultaneous hypotheses 122–3 Bayes, Thomas 123–4 Bayesian inference 99 beauty 184–5 Beck, Andy 82, 95 Bell, Joshua 185–6 Berk, Richard 61–2, 64 bias of judges 70–1, 75 in machines 65–71 societal and cultural 71 biometric measurements 108 blind faith 14–16, 18 Bonin, Pierre-Cédric ‘company baby‘ 131–3 books 178 boost effect 151, 152 Bratton, Bill 148–50, 152 breast cancer aggressive screening 94 detecting abnormalities 84, 87, 95 diagnoses 82–4 mammogram screenings 94, 96 over-diagnosis and over-treatment 94–5 research on corpses 92–3 ‘in situ’ cancer cells 93 screening algorithms for 87 tumours, unwittingly carrying 93 bridges (route to Jones Beach) racist 1 unusual features 1 Brixton fighting 49 looting and violence 49–50 Brooks, Christopher Drew 64, 77 Brown, Joshua 135 browser history see internet browsing history buffer zone 144 Burgess, Ernest W. 
55–6 burglary 150–1 the boost 151, 152 connections with earthquakes 152 the flag 150–1, 152 Caixin Media 45 calculations 8 calculus 8 Caldicott, Dame Fiona 223n48 Cambridge Analytica 39 advertising 42 fake news 42 personality profiles 41–2 techniques 41–2 whistleblowers 42 CAMELYON16 competition 88, 89 cameras 119–20 cancer benign 94 detection 88–9 and the immune system 93 malignant 94 ‘in situ’ 93, 94 uncertainty of tumours 93–4 see also breast cancer cancer diagnoses study 79–80 Car and Driver magazine 130–1 Carnegie 117 Carnegie Mellon University 115 cars 113–40 driverless see driverless cars see also DARPA (US Defence Advanced Research Projects Agency) categories of algorithms association 9 classification 9 filtering 9–10 prioritization 8 Centaur Chess 202 Charts of the Future 148–50 chauffeur mode 139 chess 5-7 Chicago Police Department 158 China 168 citizen scoring system 45–6 breaking trust 46 punishments 46 Sesame Credit 45–6, 168 smallpox inoculation 81 citizen scoring system 45–6 Citroen DS19 116, 116–17 Citymapper 23 classification algorithms 9 Clinical vs Statistical Prediction (Meehl) 21–2 Clinton Foundation 42 Clubcard (Tesco) 26 Cohen’s Kappa 215n12 cold cases 172 Cold War 18 Colgan, Steyve 155 Commodore 64 ix COMPAS algorithm 63, 64 ProPublica analysis accuracy of scores 65 false positives 66 mistakes 65–8 racial groups 65–6 secrecy of 69 CompStat 149 computational statistics 12 computer code 8 computer intelligence 13 see also AI (artificial intelligence) computer science 8 computing power 5 considered thought 72 cookies 34 Cope, David 189, 190–1, 193 cops on the dots 155–6 Corelogic 31 counter-intuition 122 creativity, human 192–3 Creemers, Rogier 46 creepy line 28, 30, 39 crime 141–73 algorithmic regulation 173 boost effect 151, 152 burglary 150–1 cops on the dots 155–6 geographical patterns 142–3 gun 158 hotspots 148, 149, 150–1, 155 HunchLab algorithm 157–8 New York City subway 147–50 predictability of 144 PredPol algorithm 152–7, 158 proximity 
of offenders’ homes 144 recognizable patterns 143–4 retail 170 Strategic Subject List 158 target hardening 154–5 see also facial recognition crime data 143–4 Crimewatch programme 142 criminals buffer zone 144 distance decay 144 knowledge of local geographic area 144 serial offenders 144, 145 customers data profiles 32 inferred data 32–4 insurance data 30–1 shopping habits 28, 29, 31 supermarket data 26–8 superstore data 28–31 cyclists 129 Daimler 115, 130 DARPA (US Defence Advanced Research Projects Agency) driverless cars 113–16 investment in 113 Grand Challenge (2004) 113–14, 117 course 114 diversity of vehicles 114 GPS coordinates 114 problems 114–15 top-scoring vehicle 115 vehicles’ failure to finish 115 Grand Challenge (2005) 115 targeting of military vehicles 113–14 data 25–47 exchange of 25, 26, 44–5 dangers of 45 healthcare 105 insurance 30–1 internet browsing history 36–7, 36–8 internet giants 36 manipulation and 39–44 medical records 102–7 benefits of algorithms 106 DeepMind 104–5 disconnected 102–3 misuse of data 106 privacy 105–7 patterns in 79–81, 108 personal 108 regulation of America 46–7 Europe 46–7 global trend 47 sale of 36–7 Sesame Credit 45–6, 168 shopping habits 28, 29, 31 supermarkets and 26–8 superstores and 28–31 data brokers 31–9 benefits provided by 32 Cambridge Analytica 39–42 data profiles 32 inferred data 32–4, 35 murky practices of 47 online adverts 33–5 rich and detailed datasets 103 Sesame Credit 45–6 unregulated 36 in America 36 dating algorithms 9 Davies, Toby 156, 157 decision trees 56–8 Deep Blue 5-7, 8 deep learning 86 DeepMind access to full medical histories 104–5 consent ignored 105 outrage 104 contract with Royal Free NHS Trust 104 dementia 90–2 Dewes, Andreas 36–7 Dhami, Mandeep 75, 76 diabetic retinopathy 96 Diaconis, Pesri 124 diagnostic machines 98–101, 110–11 differential diagnosis 99 discrimination 71 disease Alzheimer’s disease 90–1, 92 diabetic retinopathy 96 diagnosing 59, 99, 100 disease (continued) hereditary 
causes 108 Hippocrates’s understanding of 80 Huntington’s disease 110 motor neurone disease 100 pre-modern medicine 80 see also breast cancer distance decay 144 DNA (deoxyribonucleic acid) 106, 109 testing 164–5 doctors 81 unique skills of 81–2 Dodds, Peter 176–7 doppelgängers 161–3, 164, 169 Douglas, Neil 162–3 driver-assistance technology 131 driverless cars 113–40 advantages 137 algorithms and 117 Bayes’ red ball analogy 123–4 ALVINN (Autonomous Land Vehicle In a Neural Network) 118–19 autonomy 129, 130 full 127, 130, 134, 138 Bayes’ theorem 121–4 breaking the rules of the road 128 bullying by people 129 cameras and 117–18 conditions for 129 cyclists and 129 dealing with people 128–9 difficulties of building 117–18, 127–8 early technology 116–17 framing of technology 138 inevitability of errors 140 measurement 119, 120 neural networks 117–18 potential issues 116 pre-decided go-zones 130 sci-fi era 116 simulations 136–7 speed and direction 117 support for drivers 139 trolley problem 125–6 Uber 135 Waymo 129–30 driverless technology 131 Dubois, Captain 133, 137 Duggan, Mark 49 Dunn, Edwina 26 early warning systems 18 earthquakes 151–2 eBureau 31 Eckert, Svea 36–7 empathy 81–2 ensembles 58 Eppink, Richard 17, 18 Epstein, Robert 14–15 equations 8 Equivant (formerly Northpointe) 69, 217n38 errors in algorithms 18–19, 61–2, 76, 159–60, 197–9, 200–201 false negatives 62, 87, 88 false positives 62, 66, 87, 88 Eureka Prometheus Project 117 expectant mothers 28–9 expectations 7 Experiments in Musical Intelligence (EMI) 189–91, 193 Face ID (Apple) 165–6 Facebook 2, 9, 36, 40 filtering 10 Likes 39–40 news feeds experiment 42–3 personality scores 39 privacy issues 25 severing ties with data brokers 47 FaceFirst 170, 171 FaceNet (Google) 167, 169 facial recognition accuracy 171 falling 168 increasing 169 algorithms 160–3, 165, 201–2 2D images 166–7 3D model of face 165–6 Face ID (Apple) 165–6 FaceFirst 170 FaceNet (Google) 167, 169 measurements 163 MegaFace 168–9 statistical 
approach 166–7 Tencent YouTu Lab 169 in China 168 cold cases 172 David Baril incident 171–2 differences from DNA testing 164–5 doppelgängers 161–3, 164, 169 gambling addicts 169–70 identical looks 162–3, 164, 165 misidentification 168 neural networks 166–7 NYPD statistics 172 passport officers 161, 164 police databases of facial images 168 resemblance 164, 165 shoplifters 170 pros and cons of technology 170–1 software 160 trade-off 171–3 Youssef Zaghba incident 172 fairness 66–8, 201 tweaking 70 fake news 42 false negatives 62, 87, 88 false positives 62, 66, 87, 88 FBI (Federal Bureau of Investigation) 168 Federal Communications Commission (FCC) 36 Federal Trade Commission 47 feedback loops 156–7 films 180–4 algorithms for 183 edits 182–3 IMDb website 181–2 investment in 180 John Carter (film) 180 novelty and 182 popularity 183–4 predicting success 180–1 Rotten Tomatoes website 181 study 181–2 keywords 181–2 filtering algorithms 9–10 Financial Times 116 fingerprinting 145, 171 Firebird II 116 Firefox 47 Foothill 156 Ford 115, 130 forecasts, decision trees 57–8 free technology 44 Fuchs, Thomas 101 Galton, Francis 107–8 gambling addicts 169–70 GDPR (General Data Protection Regulation) 46 General Motors 116 genetic algorithms 191–2 genetic testing 108, 110 genome, human 108, 110 geographical patterns 142–3 geoprofiling 147 algorithm 144 Germany facial recognition algorithms 161 linking of healthcare records 103 Goldman, William 181, 184 Google 14–15, 36 creepy line 28, 30, 39 data security record 105 FaceNet algorithm 167, 169 high-paying executive jobs 35 see also DeepMind Google Brain 96 Google Chrome plugins 36–7 Google Images 69 Google Maps 120 Google Search 8 Google Translate 38 GPS 3, 13–14, 114 potential errors 120 guardian mode 139 Guerry, André-Michel 143–4 gun crime 158 Hamm, John 99 Hammond, Philip 115 Harkness, Timandra 105–6 Harvard researchers experiment (2013) 88–9 healthcare common goal 111–12 exhibition (1884) 107 linking of medical records 102–3 
sparse and disconnected dataset 103 healthcare data 105 Hinton, Geoffrey 86 Hippocrates 80 Hofstadter, Douglas 189–90, 194 home cooks 30–1 homosexuality 22 hotspots, crime 148, 149, 150–1, 155 Hugo, Christoph von 124–5 human characteristics, study of 107 human genome 108, 110 human intuition 71–4, 77, 122 humans and algorithms opposite skills to 139 prediction 22, 59–61, 62–5 struggle between 20–4 understanding the human mind 6 domination by machines 5-6 vs machines 59–61, 62–4 power of veto 19 PredPol (PREDictive POLicing) 153–4 strengths of 139 weaknesses of 139 Humby, Clive 26, 27, 28 Hume, David 184–5 HunchLab 157–8 Huntington’s disease 110 IBM 97–8 see also Deep Blue Ibrahim, Rahinah 197–8 Idaho Department of Health and Welfare budget tool 16 arbitrary numbers 16–17 bugs and errors 17 Excel spreadsheet 17 legally unconstitutional 17 naive trust 17–18 random results 17 cuts to Medicaid assistance 16–17 Medicaid team 17 secrecy of software 17 Illinois prisons 55, 56 image recognition 11, 84–7, 211n13 inferred data 32–4, 35 personality traits 40 Innocence Project 164 Instagram 36 insurance 30–1 genetic tests for Huntington’s disease 110 life insurance stipulations 109 unavailability for obese patients 106 intelligence tracking prevention 47 internet browsing history 36–8 anonymous 36, 37 de-anonymizing 37–8 personal identifiers 37–8 sale of 36–7 Internet Movie Database (IMDb) 181–2 intuition see human intuition jay-walking 129 Jemaah Islam 198 Jemaah Islamiyah 198 Jennings, Ken 97–8 Jeopardy!

The Data Detective: Ten Easy Rules to Make Sense of Statistics

by

Tim Harford

Published 2 Feb 2021

One approach is that used by a team of investigative journalists at ProPublica, led by Julia Angwin. Angwin’s team wanted to scrutinize a widely used algorithm called COMPAS (Correctional Offender Management Profiling for Alternative Sanctions). COMPAS used the answers to a 137-item questionnaire to assess the risk that a criminal might be rearrested. But did it work? And was it fair? It wasn’t easy to find out. COMPAS is owned by a company, Equivant (formerly Northpointe), that is under no obligation to share the details of how it works. And so Angwin and her team had to judge it by analyzing the results, laboriously pulled together from Broward County in Florida, a state that has strong transparency laws.

…

Statistical Prediction (Meehl), 167 Clinton, Bill, 188–89 Clinton, Hillary, 147 CNN, 158 Cochrane, Archie, 132–33 Cochrane Collaboration, 132–34 Cochrane Library, 133–134 codes of ethics, 180 cognitive reflection test, 41–42 Colbert, Stephen, 274–75, 274n Colbert Report, The, 274–75, 274n commute times, 47–49 comparability of data and forecasting, 252, 254 and income inequality measures, 82 and infant mortality measures, 66–67 and official statistics, 189 and scale of data, 93–95 and value of imagery, 62–63 and visualization of data, 221–23, 228, 230–31, 235 COMPAS (Correctional Offender Management Profiling for Alternative Sanctions), 176–79 complex choices, 105–8 composite measures, 91–92 confidentiality, 208 confirmation bias, 33 conformity, 135–38 Congressional Budget Office (CBO), 186–89, 199, 204, 212 Conservative Party (UK), 146–47 consumer data, 159–64, 175–76 contactless payment data, 49 context of data, 88.

Nexus: A Brief History of Information Networks From the Stone Age to AI

by

Yuval Noah Harari

Published 9 Sep 2024

Starr, “Evidence-Based Sentencing and the Scientific Rationalization of Discrimination,” Stanford Law Review 66, no. 4 (2014): 803–72; Cecelia Klingele, “The Promises and Perils of Evidence-Based Corrections,” Notre Dame Law Review 91, no. 2 (2015): 537–84; Jennifer L. Skeem and Jennifer Eno Louden, “Assessment of Evidence on the Quality of the Correctional Offender Management Profiling for Alternative Sanctions (COMPAS),” Center for Public Policy Research, Dec. 26, 2007, cpb-us-e2.wpmucdn.com/sites.uci.edu/dist/0/1149/files/2013/06/CDCR-Skeem-EnoLouden-COMPASeval-SECONDREVISION-final-Dec-28-07.pdf; Julia Dressel and Hany Farid, “The Accuracy, Fairness, and Limits of Predicting Recidivism,” Science Advances 4, no. 1 (2018), article eaao5580; Julia Angwin et al., “Machine Bias,” ProPublica, May 23, 2016, www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

…

This algorithmic assessment influenced the judge to sentence Loomis to six years in prison—a harsh punishment for the relatively minor offenses he admitted to.27 Loomis appealed to the Wisconsin Supreme Court, arguing that the judge violated his right to due process. Neither the judge nor Loomis understood how the COMPAS algorithm made its evaluation, and when Loomis asked to get a full explanation, the request was denied. The COMPAS algorithm was the private property of the Northpointe company, and the company argued that the algorithm’s methodology was a trade secret.28 Yet without knowing how the algorithm made its decisions, how could Loomis or the judge be sure that it was a reliable tool, free from bias and error? A number of studies have since shown that the COMPAS algorithm might indeed have harbored several problematic biases, probably picked up from the data on which it had been trained.29 In Loomis v.

…

Banks and other institutions are increasingly relying on algorithms to make decisions, precisely because algorithms can take many more data points into account than humans can. But when it comes to providing explanations, this creates a potentially insurmountable obstacle. How can a human mind analyze and evaluate a decision made on the basis of so many data points? We may well think that the Wisconsin Supreme Court should have forced the Northpointe company to reveal how the COMPAS algorithm decided that Eric Loomis was a high-risk person. But if the full data was disclosed, could either Loomis or the court have made sense of it? It’s not just that we need to take numerous data points into account. Perhaps most important, we cannot understand the way the algorithms find patterns in the data and decide on the allocation of points.

Algorithms of Oppression: How Search Engines Reinforce Racism

by

Safiya Umoja Noble

Published 8 Jan 2018

At that meeting, I participated in a working group on artificial-intelligence social inequality, where tremendous concern was raised about deep-machine-learning projects and software applications, including concern about furthering social injustice and structural racism. In attendance was the journalist Julia Angwin, one of the investigators of the breaking story about courtroom sentencing software Northpointe, used for risk assessment by judges to determine the alleged future criminality of defendants.6 She and her colleagues determined that this type of artificial intelligence miserably mispredicted future criminal activity and led to the overincarceration of Black defendants. Conversely, the reporters found it was much more likely to predict that White criminals would not offend again, despite the data showing that this was not at all accurate.

…

See Google; technology monopolies Moore, Hunter, 120–21, 158 Morville, Peter, 87 “Mother” Emanuel African Methodist Episcopal Church, 11, 110 Ms. blog, 155 Nash, Jennifer C., 100–101 National Association for the Advancement of Colored People, 55; NAACP Image Awards, 191n74 National Endowment for the Arts, 183 Negroponte, Nicholas, 187n9 neoliberalism, 1; capitalism, 33, 36, 104, 133; misinformation and misrepresentation, 185; privatized web, 11, 61, 165, 179; technology policy, 32, 64, 91–92, 129, 131, 161 neutrality, expectation of, 1, 6, 18, 25, 56; content prioritization processes, 156 “new capitalism,” 92 Nichols, John, 49, 154 Niesen, Molly, 51 Nissenbaum, Helen, 26 nonconsensual pornography (NCP), 119–22 Northpointe, 27 Obama, Michelle, 6, 9 Off Our Backs, 132 Olson, Hope A., 138, 140–42 Omi, Michael, 80 O’Neil, Cathy, 27 online directories, 25 On Our Backs, 131–32 Open Internet Coalition, 156 Padilla, Melissa, 134 Page, Larry, 37, 38, 40–41, 47 Pasquale, Frank, 28 Peet, Lisa, 134 Peterson, Latoya, 4–5 Pew Internet and American Life Project, 35, 51, 53, 190n68 Pew Research Center, 51 Pinckney, Clementa, 110 police database mug shots, 123–24 political information online, 49; effect of information bias, 52–53 “politics of recognition,” 84–85 politics of technology, 70, 89 pornography: algorithm to suppress pornography, 104; commercial porn, 100–102; Google algorithm to suppress pornography, 104; male gaze and, 58–59; pornification of Black women, 10, 17, 32–33, 35, 49, 59, 102; pornification of Latinas and Asians, 4, 11, 75, 159; pornographic search results for Black women, 99–100.

Show Me the Bodies: How We Let Grenfell Happen

by

Peter Apps

Published 10 Nov 2022

The couple rented in Ealing for a few years, putting money to one side to save for a deposit on a flat. They applied for permanent residency and then citizenship. ‘Because I come from quite a conservative financial mindset, I wanted to be debt-free as soon as I possibly could,’ she said. ‘This was the first debt I had ever taken.’ The couple found a flat in a block, Northpoint in Bromley. ‘We completely fell in love with the flat,’ she recalls. They got the keys in December 2015. ‘That was an extremely happy time for us. Some little girls dream about having a family, I used to dream about having my own house,’ says Ritu. ‘I had saved a bit of money to buy nice furniture.

…

Still in denial about their own responsibility for Grenfell, they insisted it was not their fault. The best they could offer leaseholders was a weak-sounding and often repeated plea to private building owners to ‘do the right thing’. They insisted that – thanks to the waking watches – the buildings were safe to occupy. At Northpoint, as the waking watch remained in place, the cost pressure simply became too much for residents to bear. Ritu’s neighbours decided they would keep watch themselves. She spent Christmas of 2018 wearing a high-vis jacket, walking the empty corridors of her flat, checking for any signs of fire. And Ritu was not alone.

Underground, Overground

by

Andrew Martin

Published 13 Nov 2012

As a result, the purple of the Metropolitan Line ceased to appear on the Tube map west of Baker Street, and the line from there to Paddington was shown as belonging to the Hammersmith & City and the Circle Line only. The operational change this reflects means that today all Metropolitan trains approaching the historical platform at Baker Street from the east are whisked north at the last moment, to proceed along the Metropolitan’s north-pointing ‘country’ branch. There is no longer anything Metropolitan – neither ‘Railway’ nor ‘Line’ – running through the beautiful brick-vaulted station that is so liberally decorated with illustrated panels proclaiming ‘Metropolitan Railway 1863’ and ‘The world’s first Underground Railway’. Then again, most people don’t notice.

…

That serving the District and Piccadilly is on one side of an un-crossable road called Hammersmith Broadway; that serving the Hammersmith & City is on the other side. Whichever exit you emerge from, at whichever station, you are immediately lost. Before moving on to the Metropolitan’s painful encirclement of central London in supposed partnership with the District, there is an emerging north-pointing prong that ought to be noted. In April 1868 a line was constructed north from Baker Street by the Metropolitan & St John’s Wood Railway, a subsidiary of the Met that would be incorporated into the parent in 1872. The line went up to Swiss Cottage, occupying for much of the way a single-bore tunnel that only accommodated one train like a deep-level Tube tunnel, even though the line was built on the cut-and-cover principle associated with vault-like tunnels holding two trains.

System Error: Where Big Tech Went Wrong and How We Can Reboot

by

Rob Reich, Mehran Sahami and Jeremy M. Weinstein

Published 6 Sep 2021

At his sentencing, however, the judge relied on an algorithmically generated risk assessment called COMPAS that indicated that Loomis was highly likely to reoffend. The judge rejected Loomis’s request for probation and gave him a six-year prison term instead. Neither the judge nor the lawyers, and certainly not Loomis, understood how COMPAS works. They received only the output from the algorithm: a risk score. Northpointe, the company that produced the technology and had sold it to the state of Wisconsin, refused to divulge the algorithmic model, treating it as intellectual property. When Loomis’s lawyers sought to appeal his sentence, they demanded an explanation for the risk score he’d received, an explanation that no one could provide.

…

Furthermore, white defendants who would have committed another crime were mislabeled as low risk 70 percent more often than black defendants. The results of the ProPublica investigation led to a great deal of scholarly debate, including about whether ProPublica had employed appropriate statistical measures, using a relevant definition of fairness (which has been disputed by Northpointe and others), or had made claims that were too strong in the face of other mitigating evidence. The debate continues, but the narrative of racially biased algorithmic decision-making has nevertheless taken hold. Subsequent studies have reinforced the concern. In Kentucky, before the systematic introduction of algorithmic decision-making, white and black defendants were offered no-bail release at roughly the same rate.

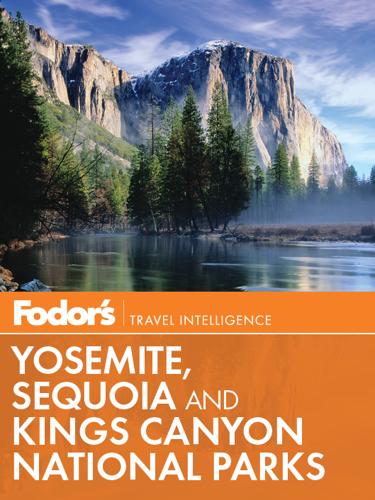

Yosemite, Sequoia & Kings Canyon

by

Fodor's

Published 17 Aug 2010

Regal solitude: To spend a day or two hiking in a subalpine world of your own, pick one of the 11 trailheads at Mineral King. GETTING ORIENTED The two parks comprise 865,952 acres, mostly on the western flank of the Sierra. A map of the adjacent parks looks vaguely like a mitten, with the palm of Sequoia National Park south of the north-pointing, skinny thumb and long fingers of Kings Canyon National Park. Between the western thumb and eastern fingers, north of Sequoia, lies part of Sequoia National Forest, which includes Giant Sequoia National Monument. 1 Giant Forest–Lodgepole Village. The most heavily visited area of Sequoia lies at the base of the "thumb" portion of Kings Canyon National Park and contains major sights such as Giant Forest, General Sherman Tree, Crystal Cave, and Moro Rock. 2 Grant Grove Village–Redwood Canyon.

Coders: The Making of a New Tribe and the Remaking of the World

by

Clive Thompson

Published 26 Mar 2019

In recent years, various AI systems have been rolled out in law enforcement, aimed at helping overloaded judges determine a defendant’s likelihood of reoffending. But when they’ve been analyzed, these systems appear to be riddled with racial biases. One well-known system, COMPAS—made by the firm Northpointe—was studied by the news agency ProPublica, which looked at 7,000 defendants who had been run through COMPAS. ProPublica found that COMPAS was almost twice as likely to label a black defendant as getting a high-risk recidivist score than a white defendant, even when they controlled for these defendants’ prior crimes, age, and gender.

…

Robot (TV show), ref1, ref2, ref3 Mueller, Robert, ref1 Mullenweg, Matt, ref1 munitions law, ref1, ref2 Murphy, Bobby, ref1 Musk, Elon, ref1 My Fair Ladies (IBM recruiting brochure), ref1 MySpace, ref1 Myst (game), ref1 Mythical Man-Month, The (Brooks), ref1 Nakamoto, Satoshi (pseudonym), ref1 National Cancer Institute, ref1 National Health Service hospitals, ransomware attack on, ref1 National Security Agency (NSA) Clipper Chip created by, ref1 cypherpunks and, ref1 munitions law, ref1, ref2 public/private key crypto and, ref1 Neopets, ref1, ref2 Net, The (film), ref1 Netscape, ref1, ref2, ref3 network effects, of scale, ref1 neural nets, ref1 bias in, ref1 black-box training, ref1 coding for, ref1 data gathering for, ref1 deep learning, ref1 LeCun first hypothesizes, in 1950s, ref1 training of, ref1, ref2 Newhouse, Ben, ref1, ref2, ref3 News Feed (Facebook), ref1, ref2, ref3 coding for and launch of, ref1 initial negative reaction to, ref1 measures to fix problems found at, ref1 optimization and, ref1 political partisanship and other negative side effects of, ref1 privacy code released, ref1 scale and, ref1 success of, ref1 viewership on Facebook increased by, ref1 New York Times, ref1 Ng, Andrew, ref1, ref2, ref3 Nightingale, Johnathan, ref1 Noble, Safiya Umoja, ref1, ref2 Noisebridge, ref1 Northpointe, ref1 Norvig, Peter, ref1 “Nosedive” (Black Mirror TV show), ref1 NSA. See National Security Agency (NSA) objective and tangible results, coder’s pride in achieving, ref1 Olson, Ryan, ref1 Once You’re Lucky, Twice You’re Good (Lacy), ref1 on demand services, ref1 O’Neil, Cathy, ref1, ref2 “On First Looking into Chapman’s Homer” (Keats), ref1 OpenAI, ref1, ref2, ref3 open source software, ref1, ref2, ref3 optimization.

Damsel in Distressed: My Life in the Golden Age of Hedge Funds

by

Dominique Mielle

Published 6 Sep 2021

From around September 2000 to 2002, the Dow Jones telecom index dropped 86 percent and the wireless index 89 percent. A telecom calamity. In 2002 alone, WorldCom, Global Crossing, Qwest Communications, XO Communications, and Adelphia all went bankrupt. Companies that were once darlings of the markets—like Covad, Focal Communications, McLeod, Northpoint, and Winstar in the local phone business; 360 Networks in fiber optic, cable, and internet; and nTelos, NextWave, and Pinnacle Holdings in wireless—were no more. Younger readers will not even recognize their names. The telecom crash gave rise not only to an unprecedented number of bankruptcies, but also bankruptcies of unprecedented scale.

Patricia Unterman's San Francisco Food Lover's Pocket Guide

by

Patricia Unterman

and

Ed Anderson

Published 1 Mar 2007

.; Moderate; Credit cards: D, DC, MC, V Whenever you pass this glamorous dining car, you want to stop in because it looks like everyone is having such a good time. The menu has changed very little over the years: perfectly dressed salads, hamburgers with fries, and braised pot roast. GARY DANKO 800 Northpoint (at Hyde); 415-749-2060; www.garydanko.com; Seating nightly 5:30 P.M. to 10:00 P.M.; Expensive; Credit cards: all major Gary Danko’s pure, California-style execution is dazzling: flavors sing of themselves, and his sauces are light yet evocative of the main ingredient on the plate. With its prix fixe structure, Danko rewards diners who order the fanciest dishes from a generous list of choices.

Against the Grain: A Deep History of the Earliest States

by

James C. Scott

Published 21 Aug 2017

Indiana University Uralic and Altaic Series 144, Stephen Halkovic, ed. Bloomington: Research Institute for Inner Asian Studies, Indiana University, 1983. Mann, Charles C. 1491: New Revelations of the Americas Before Columbus. New York: Knopf, 2005. Manning, Richard. Against the Grain: How Agriculture Has Hijacked Civilization. New York: Northpoint, 2004. Marston, John M. “Archaeological Markers of Agricultural Risk Management.” Journal of Archaeological Anthropology 30 (2011): 190–205. Matthews, Roger. The Archaeology of Mesopotamia: Theories and Approaches. Oxford: Routledge, 2003. Mayshar, Joram, Omer Moav, Zvika Neeman, and Luigi Pascali.

Rule of the Robots: How Artificial Intelligence Will Transform Everything

by

Martin Ford

Published 13 Sep 2021

The young woman was nonetheless arrested, and the COMPAS system was applied to her case when she was booked into jail to await a court appearance. It turned out that the algorithm assigned her a significantly higher risk of becoming a repeat offender than a forty-one-year-old white man who already had a prior conviction for armed burglary and had served five years in prison.24 The company that sells the COMPAS system, Northpointe, Inc., disputes the analysis performed by ProPublica, and there continues to be a debate about the extent to which the system is actually biased. It is especially concerning, however, that the company is unwilling to share the computational details of its algorithm because it considers them to be proprietary.

The Knowledge: How to Rebuild Our World From Scratch

by

Lewis Dartnell

Published 15 Apr 2014

Chinese sailors first employed the incredible direction-seeking behavior of natural lodestones (in Middle English meaning “leading stone”) in the eleventh century, and later magnetized iron needles. The compass needle works by turning itself to lie parallel with the lines of the Earth’s magnetic field, and so aligning its length between the poles: it helps to mark the north-pointing end of the needle. Not only will a compass enable you to maintain a constant heading in total absence of any other external references, but if two (or more) prominent landmarks are in sight, you can take a compass bearing to them and so triangulate your position accurately on a map or chart. Although you can always find north or south by a clear night sky, the compass is a fantastic navigational tool when it’s overcast.

The Coming of Neo-Feudalism: A Warning to the Global Middle Class

by

Joel Kotkin

Published 11 May 2020

fbclid=IwAR2Qubw2ENnDLE_G1GHwGwsDaOUtwmBfRZalygyhQmO-Au7xAAd28CLXGwc; “Officials in Beijing worry about Marx-loving students,” Economist, September 27, 2018, https://www.economist.com/china/2018/09/27/officials-in-beijing-worry-about-marx-loving-students. 55 Guy Standing, “A ‘Precariat Charter’ is required to combat the inequalities and insecurities produced by global capitalism,” London School of Economics and Political Science, May 5, 2014, http://blogs.lse.ac.uk/europpblog/2014/05/05/a-precariat-charter-is-required-to-combat-the-inequalities-and-insecurities-produced-by-global-capitalism/; Aaron M. Renn, “Post-Work Won’t Work,” City Journal, August 4, 2017, https://www.city-journal.org/html/post-work-wont-work-15383.html. 56 Wendell Berry, What Are People For? (New York: Northpoint, 1990), 125. CHAPTER 16: THE NEW GATED CITY 1 Richard Florida, “How and Why American Cities Are Coming Back,” City Lab, May 17, 2012, https://www.citylab.com/life/2012/05/how-and-why-american-cities-are-coming-back/2015/; Lauren Nolan, “A Deepening Divide: Income Inequality Grows Spatially in Chicago,” Voorhees Center for Neighborhood and Community Improvement, March 11, 2015, https://voorheescenter.wordpress.com/2015/03/11/a-deepening-divide-income-inequality-grows-spatially-in-chicago/; Aaron M.

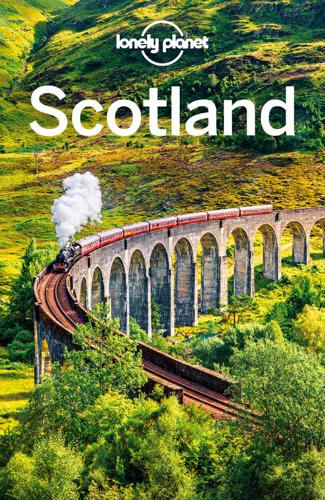

Lonely Planet Scotland

by

Lonely Planet

There's good karma if you are playing golf – Bobby Locke won the Open in 1957 while a guest here. Eating: Tailend (Fish & Chips, £; www.thetailend.co.uk; 130 Market St; takeaway £4-9; 11.30am-10pm) Delicious fresh fish sourced from Arbroath, just up the coast, puts this a class above most chippies. It fries to order and it's worth the wait. The array of exquisite smoked delicacies at the counter will have you planning a picnic or fighting for a table in the licensed cafe out the back. Northpoint Cafe (Cafe, £; 01334-473997; northpoint@dr.com; 24 North St; mains £3-7; 8.30am-5pm Mon-Fri, 9am-5pm Sat, 10am-4pm Sun) The cafe where Prince William famously met his future wife Kate Middleton while they were both students at St Andrews serves good coffee and a broad range of breakfast fare, from porridge topped with banana to toasted bagels, pancake stacks and classic fry-ups.

The Grid: The Fraying Wires Between Americans and Our Energy Future

by

Gretchen Bakke

Published 25 Jul 2016

Following the model provided by the telecommunications industry the utilities would like to remake electricity into a new, more easily graspable commodity while remaking themselves into providers of services and gadgets. If they can manage this double task they will stay alive. If they fail a great many of them will very likely die, as the telecommunications companies Covad, Focal Communications, McLeod, Northpoint, and Winstar died during the deregulation of that industry, as WorldCom died in 2002—at that point the largest bankruptcy in U.S. history. The success of the smart grid, therefore, has very real stakes. For utilities, it could stay their demise. For consumers, it offers a way of keeping something like a big grid and the equal access to affordable electric power that this enables.

Atlantic: Great Sea Battles, Heroic Discoveries, Titanic Storms & a Vast Ocean of a Million Stories

by

Simon Winchester

Published 27 Oct 2009

The original is long gone; but the copies that exist all show the same thing: an Atlantic—here called Mare Glaciale, the icebound sea, with islands such as the Faroes, Iceland, Shetland, and Orkney all in their more or less accurate relative positions—bordered by an almost wholly connected skein of landmasses. There was Norway, of course; then Gronlandia, then Helleland, Markland and Skralingeland (which Nordic scholars suggest—as flagstone land, forest land, and land of the savages—to be portions of Labrador); and then finally, jutting from the southwest of the chart, a slender, north-pointing peninsula—marked simply as Promonterium Vinlandiae, the Peninsula of Vinland. This was the clue that concluded a decades-long search. Ever since the Icelandic sagas had mentioned Vinland, Americans, and Canadians, mainly in the northeast, had been scouring their properties and their neighborhoods for anything that might suggest a onetime Norse settlement—for who would not wish to know that European feet had first been placed on their front garden, or that Nordic sailors had walked first on their own village beach?

Accessory to War: The Unspoken Alliance Between Astrophysics and the Military

by

Neil deGrasse Tyson

and

Avis Lang

Published 10 Sep 2018

Some scholars confidently apply the term “compass” to objects that began to be used by Chinese navigators around AD 500 as sea routes to Japan were established, and the first mention of a south-pointing shipboard needle appears in a Chinese navigational text written in AD 1100. A resident of Amalfi, a southern Italian maritime power in the twelfth century, has traditionally been credited with the invention of the north-pointing mariner’s compass, and a contemporary chronicler described medieval Amalfi itself as famous for showing sailors the paths of the sea and sky. The first Arab text to mention a compass, written in the thirteenth century, calls the instrument by its Italian name. To some historians, the fact that the Chinese referred to south-seeking needles and the Italians to north-seeking ones suggests the likelihood of independent invention.29 Whatever the origins, compasses worked, and the way they worked was well understood in the Mediterranean by 1200, when a French writer described in detail how to rely on a compass to navigate by “the star that never moves”: This is the star that the sailors watch whenever they can, for by it they keep course.

Lonely Planet Scotland

by

Lonely Planet

Balgove Larder (Cafe, £; 01334-898145; www.balgove.com; Strathtyrum; mains £6-9; 9am-5pm) A bright and spacious agricultural shed in a rural setting a mile west of St Andrews houses this farm shop and cafe, serving good coffee, hearty breakfasts, and lunch dishes that use local produce such as roast-beetroot salad (grown on the farm itself), and smoked-haddock chowder (with fish from St Monans). Northpoint Cafe (Cafe, £; 01334-473997; www.facebook.com/northpointcafe; 24 North St; mains £4-8; 8.30am-5pm Mon-Fri, 9am-5pm Sat, 10am-4pm Sun) The cafe where Prince William famously met his future wife, Kate Middleton, while they were both students at St Andrews serves good coffee and a broad range of breakfast fare, from porridge topped with banana to toasted bagels, pancake stacks and classic fry-ups.

Coastal California

by

Lonely Planet

Towns may look like idyllic rural hamlets, but the shops cater to cosmopolitan and expensive tastes. The ‘common’ folk here eat organic, vote Democrat and drive hybrids. Geographically, Marin County is a near mirror image of San Francisco. It’s a south-pointing peninsula that nearly touches the north-pointing tip of the city, and is surrounded by ocean and bay. But Marin is wilder, greener and more mountainous. Redwoods grow on the coast side of the hills, the surf crashes against cliffs, and hiking and cycling trails crisscross the blessed scenery of Point Reyes, Muir Woods and Mt Tamalpais. Nature is what makes Marin County such an excellent day trip or weekend escape from San Francisco.

Coastal California Travel Guide

by

Lonely Planet

Just across the Golden Gate Bridge from San Francisco, the region has a wealthy population that cultivates a laid-back lifestyle. Towns may look like idyllic rural hamlets, but the shops cater to cosmopolitan, expensive tastes. The ‘common’ folk here eat organic, vote Democrat and drive Teslas. Geographically, Marin County is a near mirror image of San Francisco. It’s a south-pointing peninsula that nearly touches the north-pointing tip of the city, and is surrounded by ocean and bay. But Marin is wilder, greener and more mountainous. Redwoods grow on the coast side of the hills, surf crashes against cliffs, and hiking and cycling trails crisscross blessedly scenic Point Reyes, Muir Woods and Mt Tamalpais. Nature is what makes Marin County such an excellent day trip or weekend escape from San Francisco.

Northern California Travel Guide

by

Lonely Planet