artificial general intelligence

description: theoretical class of AI able to perform any intelligence-based task a human can


Advances in Artificial General Intelligence: Concepts, Architectures and Algorithms: Proceedings of the Agi Workshop 2006

by

Ben Goertzel

and

Pei Wang

Published 1 Jan 2007

Advances in Artificial General Intelligence: Concepts, Architectures and Algorithms. B. Goertzel and P. Wang (Eds.). IOS Press, 2007. © 2007 The authors and IOS Press. All rights reserved.

Introduction: Aspects of Artificial General Intelligence
Pei WANG and Ben GOERTZEL

This book contains materials that come out of the Artificial General Intelligence Research Institute (AGIRI) Workshop, held on May 20-21, 2006 in Washington DC. The theme of the workshop is “Transitioning from Narrow AI to Artificial General Intelligence.” In this introductory chapter, we will clarify the notion of “Artificial General Intelligence”, briefly survey the past and present situation of the field, analyze and refute some common objections and doubts regarding this area of research, and discuss what we believe needs to be addressed by the field as a whole in the near future.

…

The next major step in this direction was the May 2006 AGIRI Workshop, of which this volume is essentially a proceedings. The term AGI, artificial general intelligence, was introduced as a modern successor to the earlier strong AI.

Artificial General Intelligence

What is artificial general intelligence? The AGIRI website lists several features, describing machines

• with human-level, and even superhuman, intelligence.
• that generalize their knowledge across different domains.
• that reflect on themselves.
• and that create fundamental innovations and insights.

Even strong AI wouldn’t push for this much, and this general, an intelligence. Can there be such an artificial general intelligence? I think there can be, but that it can’t be done with a brain in a vat, with humans providing input and utilizing computational output.

…

This is the situation that led to the organization of the 2006 AGIRI (Artificial General Intelligence Research Institute) workshop; and to the decision to pull together a book from contributions by the speakers at the conference. The themes of the book and the contents of the chapters are discussed in the Introduction by myself and Pei Wang; so in this Preface I will restrict myself to a few brief and general comments. As it happens, this is the second edited volume concerned with Artificial General Intelligence (AGI) that I have co-edited. The first was entitled simply Artificial General Intelligence; it appeared in 2006 under the Springer imprimatur, but in fact most of the material in it was written in 2002 and 2003.

The Myth of Artificial Intelligence: Why Computers Can't Think the Way We Do

by

Erik J. Larson

Published 5 Apr 2021

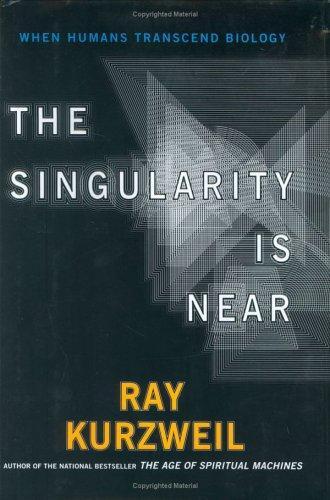

The notion of the prediction of radical conceptual innovation is itself conceptually incoherent.6 In other words, to suggest that we are on a “path” to artificial general intelligence whose arrival can be predicted presupposes that there is no conceptual innovation standing in the way—a view that even AI scientists convinced of the coming of artificial general intelligence and who are willing to offer predictions, like Ray Kurzweil, would not assent to. We all know, at least, that for any putative artificial general intelligence system to arrive at an as yet unknown facility for understanding natural language, there must be an invention or discovery of a commonsense, generalizing component.

…

The idea that we can predict the arrival of AI typically sneaks in a premise, to varying degrees acknowledged, that successes on narrow AI systems like playing games will scale up to general intelligence, and so the predictive line from artificial intelligence to artificial general intelligence can be drawn with some confidence. This is a bad assumption, both for encouraging progress in the field toward artificial general intelligence, and for the logic of the argument for prediction. Predictions about scientific discoveries are perhaps best understood as indulgences of mythology; indeed, only in the realm of the mythical can certainty about the arrival of artificial general intelligence abide, untrammeled by Popper’s or MacIntyre’s or anyone else’s doubts. Mythology about AI is not all bad.

…

An AI system can implement a syllogism, for instance, and also a planning algorithm (rules of the form: {A, B, C, …}→G, where A, B, and C are actions to be taken and G is the desired goal). There have been no major breakthroughs toward artificial general intelligence using such methods, but even modern AI scientists like Stuart Russell continue to insist that symbolic logic will be an important component of any eventual artificial general intelligence system—for intelligence is, among other things, about reasoning and planning. Aristotle thus kicked off formal studies of inference thousands of years ago. A few decades ago, he also helped kick off work on AI.
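The two kinds of rule mentioned above can be made concrete with a minimal Python sketch. It is an illustration under our own assumptions, not code from Larson’s book or from any real AI system: the syllogism is the classic “All X are Y; a is an X; so a is a Y” pattern, and a planning rule {A, B, C, …}→G is assumed to be nothing more than a set of action names mapped to a goal name. The names `PlanRule`, `syllogism`, and `find_plan` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PlanRule:
    """Hypothetical encoding of a rule {A, B, C, ...} -> G:
    taking every action in `actions` is assumed to achieve `goal`."""
    actions: frozenset[str]
    goal: str


def syllogism(major: tuple[str, str], minor: tuple[str, str]) -> tuple[str, str] | None:
    """Categorical syllogism (Barbara form).
    major = (X, Y) means "All X are Y"; minor = (a, X) means "a is an X".
    Returns (a, Y), i.e. "a is a Y", when the middle term X matches."""
    x, y = major
    individual, category = minor
    if category == x:
        return (individual, y)
    return None  # middle terms differ, so nothing follows


def find_plan(rules: list[PlanRule], goal: str, available: set[str]) -> list[str] | None:
    """Return the actions of the first rule that concludes `goal` and whose
    actions are all available; None if no such rule exists."""
    for rule in rules:
        if rule.goal == goal and rule.actions <= available:
            return sorted(rule.actions)  # sorted only to give stable output
    return None


if __name__ == "__main__":
    print(syllogism(("man", "mortal"), ("Socrates", "man")))
    rules = [PlanRule(frozenset({"boil water", "add tea", "steep"}), "cup of tea")]
    print(find_plan(rules, "cup of tea", {"boil water", "add tea", "steep", "add milk"}))
```

Run as a script, this prints `('Socrates', 'mortal')` and `['add tea', 'boil water', 'steep']`. Because the rule is a set, action order is ignored; a real planner would also track preconditions, effects, and ordering, which this sketch deliberately leaves out.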

The Economic Singularity: Artificial Intelligence and the Death of Capitalism

by

Calum Chace

Published 17 Jul 2016

There is no reason to suppose that humans have attained anywhere near the maximum possible level of intelligence, and it seems highly probable that we will eventually create machines that are more intelligent than us in all respects – assuming we don't blow ourselves up first. We don't yet know whether those machines will be conscious, let alone whether they will be more conscious than us – if that is even a meaningful question.

Artificial General Intelligence (AGI) and Superintelligence

As we noted in chapter 1, the term for a machine which equals or exceeds human intelligence in all respects is artificial general intelligence, or AGI. The day when the first such machine is built will be a momentous one, as the arrival of superintelligence will not be far beyond it. The likelihood of an intelligence explosion is commonly referred to as the technological singularity.

…

The fact that Watson is an amalgam – some would say a kludge – of numerous different techniques does not in itself mark it out as different and perpetually inferior to human intelligence. It is nowhere near an artificial general intelligence which is human-level or beyond in all respects. It is not conscious. It does not even know that it won the Jeopardy match. But it may prove to be an early step in the direction of artificial general intelligence. In January 2016, an AI system called AlphaGo developed by Google's DeepMind beat Fan Hui, the European champion of Go, a board game. This was hailed as a major step forward: the game of chess has more possible moves (35^80) than there are atoms in the visible universe, but Go has even more – 250^150.
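The exponents in this passage are game-tree estimates of the form b^d, where b is a typical number of legal moves per position (about 35 in chess, about 250 in Go) and d a typical game length in plies (about 80 and 150). The short Python sketch below compares their orders of magnitude by working in log10 instead of building the huge integers. It assumes the commonly cited figure of roughly 10^80 atoms in the observable universe; `game_tree_log10` and `ATOMS_LOG10` are illustrative names, not from the book.

```python
import math

# Game-tree estimates b**d quoted in the text: (branching factor, game length in plies).
GAMES = {
    "chess": (35, 80),
    "go": (250, 150),
}

# Assumption: commonly cited order-of-magnitude estimate (~10**80) for atoms
# in the observable universe, stored as its base-10 exponent.
ATOMS_LOG10 = 80


def game_tree_log10(branching: int, depth: int) -> float:
    """Return log10(branching ** depth) without constructing the big number."""
    return depth * math.log10(branching)


if __name__ == "__main__":
    for name, (b, d) in GAMES.items():
        exponent = game_tree_log10(b, d)
        print(f"{name}: {b}^{d} ~ 10^{exponent:.1f} (atoms: ~10^{ATOMS_LOG10})")
```

This prints roughly 10^123.5 for chess and 10^359.7 for Go, so both exceed the atom estimate, and Go’s tree is larger than chess’s by more than two hundred orders of magnitude.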

…

When you reach a singularity, the normal rules break down, and the future becomes even harder to predict than usual. In recent years, the term has been applied to the impact of technology on human affairs.[iv]

Superintelligence and the technological singularity

The technological singularity is most commonly defined as what happens when the first artificial general intelligence (AGI) is created – a machine which can perform any intellectual task that an adult human can. It continues to improve its capabilities and becomes a superintelligence, much smarter than any human. It then introduces change to this planet on a scale and at a speed which un-augmented humans cannot comprehend.

Surviving AI: The Promise and Peril of Artificial Intelligence

by

Calum Chace

Published 28 Jul 2015

Whether intelligence resides in the machine or in the software is analogous to the question of whether it resides in the neurons in your brain or in the electrochemical signals that they transmit and receive. Fortunately we don’t need to answer that question here.

ANI and AGI

We do need to discriminate between two very different types of artificial intelligence: artificial narrow intelligence (ANI) and artificial general intelligence (AGI (4)), which are also known as weak AI and strong AI, and as ordinary AI and full AI. The easiest way to do this is to say that artificial general intelligence, or AGI, is an AI which can carry out any cognitive function that a human can. We have long had computers which can add up much better than any human, and computers which can play chess better than the best human chess grandmaster.

…

As we saw in the introduction to this book, nobody suggested thirty years ago that we would have powerful AIs in our pockets in the form of telephones, even though now that it has happened it seems a natural and logical development.

PART TWO: AGI Artificial General Intelligence

CHAPTER 4 CAN WE BUILD AN AGI?

4.1 – Is it possible in principle?

The three biggest questions about artificial general intelligence (AGI) are: Can we build one? If so, when? Will it be safe? The first of these questions is the closest to having an answer, and that answer is “probably, as long as we don’t go extinct first”. The reason for this is that we already have proof that it is possible for a general intelligence to be developed using very common materials.

…

TABLE OF CONTENTS
TITLE PAGE
INTRODUCTION: SURVIVING AI
PART ONE: ANI (ARTIFICIAL NARROW INTELLIGENCE)
CHAPTER 1
CHAPTER 2
CHAPTER 3
PART TWO: AGI (ARTIFICIAL GENERAL INTELLIGENCE)
CHAPTER 4
CHAPTER 5
PART THREE: ASI (ARTIFICIAL SUPERINTELLIGENCE)
CHAPTER 6
CHAPTER 7
PART FOUR: FAI (FRIENDLY ARTIFICIAL INTELLIGENCE)
CHAPTER 8
CHAPTER 9
ACKNOWLEDGEMENTS
ENDNOTES

COMMENTS ON SURVIVING AI

A sober and easy-to-read review of the risks and opportunities that humanity will face from AI.
Jaan Tallinn, co-founder Skype, co-founder Centre for the Study of Existential Risk (CSER), co-founder Future of Life Institute (FLI)

Understanding AI – its promise and its dangers – is emerging as one of the great challenges of coming decades and this is an invaluable guide to anyone who’s interested, confused, excited or scared.

The Big Nine: How the Tech Titans and Their Thinking Machines Could Warp Humanity

by

Amy Webb

Published 5 Mar 2019

With the G-MAFIA, federal government, and GAIA taking active roles in the transition from artificial narrow intelligence to artificial general intelligence, we feel comfortably nudged.

2049: The Rolling Stones Are Dead (But They’re Making New Music)

By the 2030s, researchers working within the G-MAFIA published an exciting paper, both because of what it revealed about AI and because of how the work was completed. Working from the same set of standards and supported with ample funds (and patience) by the federal government, researchers collaborated on advancing AI. As a result, the first system to reach artificial general intelligence was developed. The system had passed the Contributing Team Member Test.

…

We will also take a deep dive into the unique situations faced by America’s Big Nine members and by Baidu, Alibaba, and Tencent in China. In Part II, you’ll see detailed, plausible futures over the next 50 years as AI advances. The three scenarios you’ll read range from optimistic to pragmatic and catastrophic, and they will reveal both opportunity and risk as we advance from artificial narrow intelligence to artificial general intelligence to artificial superintelligence. These scenarios are intense—they are the result of data-driven models, and they will give you a visceral glimpse at how AI might evolve and how our lives will change as a result. In Part III, I will offer tactical and strategic solutions to all the problems identified in the scenarios along with a concrete plan to reboot the present.

…

They started making wild, bold predictions about AI, saying that within ten years (meaning by 1967) computers would

• beat all the top-ranked grandmasters to become the world’s chess champion,
• discover and prove an important new mathematical theorem, and
• write the kind of music that even the harshest critics would still value.26

Meantime, Minsky made predictions about a generally intelligent machine that could do much more than take dictation, play chess, or write music. He argued that within his lifetime, machines would achieve artificial general intelligence: that is, computers would be capable of complex thought, language expression, and making choices.27 The Dartmouth workshop researchers wrote papers and books. They sat for television, radio, newspaper, and magazine interviews. But the science was difficult to explain, and so oftentimes explanations were garbled and quotes were taken out of context.

Army of None: Autonomous Weapons and the Future of War

by

Paul Scharre

Published 23 Apr 2018

Patti Domm, “False Rumor of Explosion at White House Causes Stocks to Briefly Plunge; AP Confirms Its Twitter Feed Was Hacked,” April 23, 2013, http://www.cnbc.com/id/100646197. 185 deep neural networks to understand text: Xiang Zhang and Yann LeCun, “Text Understanding from Scratch,” April 4, 2016, https://arxiv.org/pdf/1502.01710v5.pdf. 185 Associated Press Twitter account was hacked: Domm, “False Rumor of Explosion at White House Causes Stocks to Briefly Plunge; AP Confirms Its Twitter Feed Was Hacked.” 186 design deep neural networks that aren’t vulnerable: “Deep neural networks are easily fooled.” 186 “counterintuitive, weird” vulnerability: Jeff Clune, interview, September 28, 2016. 186 “[T]he sheer magnitude, millions or billions”: JASON, “Perspectives on Research in Artificial Intelligence and Artificial General Intelligence Relevant to DoD,” 28–29. 186 “the very nature of [deep neural networks]”: Ibid, 28. 186 “As deep learning gets even more powerful”: Jeff Clune, interview, September 28, 2016. 186 “super complicated and big and weird”: Ibid. 187 “sobering message . . . tragic extremely quickly”: Ibid. 187 “[I]t is not clear that the existing AI paradigm”: JASON, “Perspectives on Research in Artificial Intelligence and Artificial General Intelligence Relevant to DoD,” Ibid, 27. 188 “nonintuitive characteristics”: Szegedy et al., “Intriguing Properties of Neural Networks.” 188 we don’t really understand how it happens: For a readable explanation of this broader problem, see David Berreby, “Artificial Intelligence Is Already Weirdly Inhuman,” Nautilus, August 6, 2015, http://nautil.us/issue/27/dark-matter/artificial-intelligence-is-already-weirdly-inhuman. 12 Failing Deadly: The Risk of Autonomous Weapons 189 “I think that we’re being overly optimistic”: John Borrie, interview, April 12, 2016. 189 “If you’re going to turn these things loose”: John Hawley, interview, December 5, 2016. 189 “[E]ven with our improved knowledge”: Perrow, Normal Accidents, 354. 
191 “robo-cannon rampage”: Noah Shachtman, “Inside the Robo-Cannon Rampage (Updated),” WIRED, October 19, 2007, https://www.wired.com/2007/10/inside-the-robo/. 191 bad luck, not deliberate targeting: “ ‘Robotic Rampage’ Unlikely Reason for Deaths,” New Scientist, accessed June 12, 2017, https://www.newscientist.com/article/dn12812-robotic-rampage-unlikely-reason-for-deaths/. 191 35 mm rounds into a neighboring gun position: “Robot Cannon Kills 9, Wounds 14,” WIRED, accessed June 12, 2017, https://www.wired.com/2007/10/robot-cannon-ki/. 191 “The machine doesn’t know it’s making a mistake”: John Hawley, interview, December 5, 2016. 193 “incidents of mass lethality”: John Borrie, interview, April 12, 2016. 193 “If you put someone else”: John Hawley, interview, December 5, 2016. 194 “I don’t have a lot of good answers for that”: Peter Galluch, interview, July 15, 2016. 13 Bot vs.

…

A800 Mobile Autonomous Robotic System (MARS), 114 AAAI (Association for the Advancement of Artificial Intelligence), 243 AACUS (Autonomous Aerial Cargo/Utility System) helicopter, 17 ABM (Anti-Ballistic Missile) Treaty (1972), 301 accidents, see failures accountability gap, 258–63 acoustic homing seeker, 39 acoustic shot detection system, 113–14 active seekers, 41 active sensors, 85 adaptive malware, 226 advanced artificial intelligence; see also artificial general intelligence aligning human goals with, 238–41 arguments against regarding as threat, 241–44 building safety into, 238–41 dangers of, 232–33 drives for resource acquisition, 237–38 future of, 247–48 in literature and film, 233–36 militarized, 244–45 psychological dimensions, 233–36 vulnerability to hacking, 246–47 “advanced chess,” 321–22 Advanced Medium-Range Air-to-Air Missile (AMRAAM), 41, 43 Advanced Research Projects Agency (ARPA), 76–77 adversarial actors, 177 adversarial (fooling) images, 180–87, 181f, 183f, 185f, 253, 384n Aegis combat system, 162–67 achieving high reliability, 170–72 automation philosophy, 165–67 communications issues, 304 and fully autonomous systems, 194 human supervision, 193, 325–26 Patriot system vs., 165–66, 171–72 simulated threat test, 167–69 testing and training, 176, 177 and USS Vincennes incident, 169–70 Aegis Training and Readiness Center, 163 aerial bombing raids, 275–76, 278, 341–42 Afghanistan War (2001– ), 2–4 distinguishing soldiers from civilians, 253 drones in, 14, 25, 209 electromagnetic environment, 15 goatherder incident, 290–92 moral decisions in, 271 runaway gun incident, 191 AGI, see advanced artificial intelligence; artificial general intelligence AGM-88 high-speed antiradiation missile, 141 AI (artificial intelligence), 5–6, 86–87; see also advanced artificial intelligence; artificial general intelligence AI FOOM, 233 AIM-120 Advanced Medium-Range Air-to-Air Missile, 41 Air Force, U.S. 
cultural resistance to robotic weapons, 61 future of robotic aircraft, 23–25 Global Hawk drone, 17 nuclear weapons security lapse, 174 remotely piloted aircraft, 16 X-47 drone, 60–61 Air France Flight 447 crash, 158–59 Alexander, Keith, 216, 217 algorithms life-and-death decisions by, 287–90 for stock trading, see automated stock trading Ali Al Salem Air Base (Kuwait), 138–39 Alphabet, 125 AlphaGo, 81–82, 125–27, 150, 242 AlphaGo Zero, 127 AlphaZero, 410 al-Qaeda, 22, 253 “always/never” dilemma, 175 Amazon, 205 AMRAAM (Advanced Medium-Range Air-to-Air Missile), 41, 43 Anderson, Kenneth, 255, 269–70, 286, 295 anthropocentric bias, 236, 237, 241, 278 anthropomorphizing of machines, 278 Anti-Ballistic Missile (ABM) Treaty (1972), 301 antipersonnel autonomous weapons, 71, 355–56, 403n antipersonnel mines, 268, 342; see also land mines anti-radiation missiles, 139, 141, 144 anti-ship missiles, 62, 302 Anti-submarine warfare Continuous Trail Unmanned Vessel (ACTUV), 78–79 anti-vehicle mines, 342 Apollo 13 disaster, 153–54 appropriate human involvement, 347–48, 358 appropriate human judgment, 91, 347, 358 approval of autonomous weapons, see authorization of autonomous weapons Argo amphibious ground combat robot, 114 Arkhipov, Vasili, 311, 318 Arkin, Ron, 280–85, 295, 346 armed drones, see drones Arms and Influence (Schelling), 305, 341 arms control, 331–45 antipersonnel weapons, 355–56 ban of fully autonomous weapons, 352–55 debates over restriction/banning of autonomous weapons, 266–69 general principles on human judgment’s role in war, 357–59 inherent problems with, 284, 346–53 legal status of treaties, 340 limited vs. complete bans, 342–43 motivations for, 345 preemptive bans, 343–44 “rules of the road” for autonomous weapons, 356–57 successful/unsuccessful treaties, 332–44, 333t–339t types of weapons bans, 332f unnecessary suffering standards, 257–58 verification regimes, 344–45 arms race, 7–8, 117–19 Armstrong, Stuart, 238, 240–42 Army, U.S.

…

cultural resistance to robotic weapons, 61 Gray Eagle drone, 17 overcoming resistance to killing, 279 Patriot Vigilance Project, 171–72 Shadow drone, 209 ARPA (Advanced Research Projects Agency), 76–77 Article 36, 118 artificial general intelligence (AGI); See also advanced artificial intelligence and context, 238–39 defined, 231 destructive potential, 232–33, 244–45 ethical issues, 98–99 in literature and film, 233–36 narrow AI vs., 98–99, 231 timetable for creation of, 232, 247 as unattainable, 242 artificial intelligence (AI), 5–6, 86–87; see also advanced artificial intelligence; artificial general intelligence “Artificial Intelligence, War, and Crisis Stability” (Horowitz), 302, 312 Artificial Intelligence for Humans, Volume 3 (Heaton), 132 artificial superintelligence (ASI), 233 Art of War, The (Sun Tzu), 229 Asaro, Peter, 265, 285, 287–90 Asimov, Isaac, 26–27, 134 Assad, Bashar al-, 7, 331 Association for the Advancement of Artificial Intelligence (AAAI), 243 Atari, 124, 127, 247–48 Atlas ICBM, 307 atomic bombs, see nuclear weapons ATR (automatic target recognition), 76, 84–88 attack decision to, 269–70 defined, 269–70 human judgment and, 358 atypical events, 146, 176–78 Australia, 342–43 authorization of autonomous weapons, 89–101 DoD policy, 89–90 ethical questions, 90–93 and future of lethal autonomy, 96–99 information technology and revolution in warfare, 93–96 past as guide to future, 99–101 Auto-GCAS (automatic ground collision avoidance system), 28 automated machines, 31f, 32–33 automated (algorithmic) stock trading, 200–201, 203–4, 206, 210, 244, 387n automated systems, 31 automated weapons first “smart” weapons, 38–40 precision-guided munitions, 39–41 automatic machines, 31f automatic systems, 30–31, 110 automatic target recognition (ATR), 76, 84–88 automatic weapons, 37–38 Gatling gun as predecessor to, 35–36 machine guns, 37–38 runaway gun, 190–91 automation (generally) Aegis vs.

Superintelligence: Paths, Dangers, Strategies

by

Nick Bostrom

Published 3 Jun 2014

The first two were polls taken at academic conferences: PT-AI, participants of the conference Philosophy and Theory of AI in Thessaloniki 2011 (respondents were asked in November 2012), with a response rate of 43 out of 88; and AGI, participants of the conferences Artificial General Intelligence and Impacts and Risks of Artificial General Intelligence, both in Oxford, December 2012 (response rate: 72/111). The EETN poll sampled the members of the Greek Association for Artificial Intelligence, a professional organization of published researchers in the field, in April 2013 (response rate: 26/250). The TOP100 poll elicited the opinions among the 100 top authors in artificial intelligence as measured by a citation index, in May 2013 (response rate: 29/100).

…

(The different lines in the plot correspond to different data sets, which yield slightly different estimates.6)

Great expectations

Machines matching humans in general intelligence (that is, possessing common sense and an effective ability to learn, reason, and plan to meet complex information-processing challenges across a wide range of natural and abstract domains) have been expected since the invention of computers in the 1940s. At that time, the advent of such machines was often placed some twenty years into the future.7 Since then, the expected arrival date has been receding at a rate of one year per year; so that today, futurists who concern themselves with the possibility of artificial general intelligence still often believe that intelligent machines are a couple of decades away.8 Two decades is a sweet spot for prognosticators of radical change: near enough to be attention-grabbing and relevant, yet far enough to make it possible to suppose that a string of breakthroughs, currently only vaguely imaginable, might by then have occurred.

…

A more relevant distinction for our purposes is that between systems that have a narrow range of cognitive capability (whether they be called “AI” or not) and systems that have more generally applicable problem-solving capacities. Essentially all the systems currently in use are of the former type: narrow. However, many of them contain components that might also play a role in future artificial general intelligence or be of service in its development—components such as classifiers, search algorithms, planners, solvers, and representational frameworks. One high-stakes and extremely competitive environment in which AI systems operate today is the global financial market. Automated stock-trading systems are widely used by major investing houses.

Architects of Intelligence

by

Martin Ford

Published 16 Nov 2018

MARTIN FORD: You’ve noted the limitations in current narrow or specialized AI technology. Let’s talk about the prospects for AGI, which promises to someday solve these problems. Can you explain exactly what Artificial General Intelligence is? What does AGI really mean, and what are the main hurdles we need to overcome before we can achieve AGI?

STUART J. RUSSELL: Artificial General Intelligence is a recently coined term, and it really is just a reminder of our real goals in AI: a general-purpose intelligence much like our own. In that sense, AGI is actually what we’ve always called artificial intelligence.

…

The McKinsey Global Institute is a leader in conducting research into this area, and this conversation includes many important insights into the nature of the unfolding workplace disruption. The second question I directed at everyone concerns the path toward human-level AI, or what is typically called Artificial General Intelligence (AGI). From the very beginning, AGI has been the holy grail of the field of artificial intelligence. I wanted to know what each person thought about the prospect for a true thinking machine, the hurdles that would need to be surmounted and the timeframe for when it might be achieved. Everyone had important insights, but I found three conversations to be especially interesting: Demis Hassabis discussed efforts underway at DeepMind, which is the largest and best funded initiative geared specifically toward AGI.

…

However, it is also one of the most difficult challenges facing the field. A breakthrough that allowed machines to efficiently learn in a truly unsupervised way would likely be considered one of the biggest events in AI so far, and an important waypoint on the road to human-level AI. ARTIFICIAL GENERAL INTELLIGENCE (AGI) refers to a true thinking machine. AGI is typically considered to be more or less synonymous with the terms HUMAN-LEVEL AI or STRONG AI. You’ve likely seen several examples of AGI, but they have all been in the realm of science fiction. HAL from 2001: A Space Odyssey, the Enterprise’s main computer (or Mr.

Our Final Invention: Artificial Intelligence and the End of the Human Era

by

James Barrat

Published 30 Sep 2013

My informal survey of about two hundred computer scientists at a recent AGI conference confirmed what I’d expected. The annual AGI Conferences, organized by Goertzel, are three-day meet-ups for people actively working on artificial general intelligence, or like me who are just deeply interested. They present papers, demo software, and compete for bragging rights. I attended one generously hosted by Google at their headquarters in Mountain View, California, often called the Googleplex. I asked the attendees when artificial general intelligence would be achieved, and gave them just four choices: by 2030, by 2050, by 2100, or not at all? The breakdown was this: 42 percent anticipated AGI would be achieved by 2030; 25 percent by 2050; 20 percent by 2100; 10 percent after 2100, and 2 percent never.

…

Andrew Rubin, Google’s Senior Vice President of Mobile: Fried, Ina, “Android Chief Says Your Phone Should Not Be Your Assistant,” All Things D, October 19, 2011, http://allthingsd.com/20111019/android-chief-says-your-phone-should-not-be-your-assistant/ (accessed November 13, 2011). It may be that we need a scientific breakthrough: Goertzel, Ben, “Editor’s Blog Report on the Fourth Conference on Artificial General Intelligence,” H+ Magazine, September 1, 2011, http://hplusmagazine.com/2011/09/01/report-on-the-fourth-conference-on-artificial-general-intelligence/ (accessed November 22, 2011). LIDA scores like a human: Biever, Celeste, “Bot shows signs of consciousness,” New Scientist, April 1, 2011, http://www.newscientist.com/article/mg21028063.400-bot-shows-signs-of-consciousness.html (accessed June 1, 2011).

…

Aboujaoude, Elias accidents AI and, see risks of artificial intelligence nuclear power plant Adaptive AI affinity analysis agent-based financial modeling “Age of Robots, The” (Moravec) Age of Spiritual Machines, The: When Computers Exceed Human Intelligence (Kurzweil) AGI, see artificial general intelligence AI, see artificial intelligence AI-Box Experiment airplane disasters Alexander, Hugh Alexander, Keith Allen, Paul Allen, Robbie Allen, Woody AM (Automatic Mathematician) Amazon Anissimov, Michael anthropomorphism apoptotic systems Apple iPad iPhone Siri Arecibo message Aristotle artificial general intelligence (AGI; human-level AI): body needed for definition of emerging from financial markets first-mover advantage in jump to ASI from; see also intelligence explosion by mind-uploading by reverse engineering human brain time and funds required to develop Turing test for artificial intelligence (AI): black box tools in definition of drives in, see drives as dual use technology emotional qualities in as entertainment examples of explosive, see intelligence explosion friendly, see Friendly AI funding for jump to AGI from Joy on risks of, see risks of artificial intelligence Singularity and, see Singularity tight coupling in utility function of virtual environments for artificial neural networks (ANNs) artificial superintelligence (ASI) anthropomorphizing gradualist view of dealing with jump from AGI to; see also intelligence explosion morality of nanotechnology and runaway Artilect War, The (de Garis) ASI, see artificial superintelligence Asilomar Guidelines ASIMO Asimov, Isaac: Three Laws of Robotics of Zeroth Law of Association for the Advancement of Artificial Intelligence (AAAI) asteroids Atkins, Brian and Sabine Automated Insights availability bias Banks, David L.

Thinking Machines: The Inside Story of Artificial Intelligence and Our Race to Build the Future

by

Luke Dormehl

Published 10 Aug 2016

The Difference between Narrow and Wide

A lifetime of sci-fi movies and books has ingrained in us the expectation that there will be some Singularity-style ‘tipping point’ at which Artificial General Intelligence will take place. Devices will get gradually smarter and smarter until, somewhere in a secret research lab deep in Silicon Valley, a message pops up on Mark Zuckerberg’s or Sergey Brin’s computer monitor, saying that AGI has been achieved. As Ernest Hemingway once wrote about bankruptcy, Artificial General Intelligence will take place ‘gradually, then suddenly’. This is the narrative played out in films like James Cameron’s seminal Terminator 2: Judgment Day.

…

‘Unfortunately, the chatbots of today can only resort to trickery to hopefully fool a human into thinking they are sentient,’ one recent entrant in the Loebner Prize told me. ‘And it is highly unlikely without a yet-undiscovered novel approach to simulating an AI that any chatbot technology employed today could ever fool an experienced chatbot creator into believing they possess [artificial] general intelligence.’ Turing wasn’t particularly concerned with the metaphysical question of whether a machine can actually think. In his famous 1950 essay, ‘Computing Machinery and Intelligence’, he described it as ‘too meaningless to deserve discussion’. Instead he was interested in getting machines to perform activities that would be considered intelligent if they were carried out by a human.

…

Manipulating human leaders could meanwhile refer to the handing-over of important tasks to the AI assistants that will come to run our lives, while the development of AI weapons has been a goal since virtually the field’s earliest days. What he and Musk were specifically pointing towards was something called Artificial General Intelligence, or AGI. So far, all of the applications of Artificial Intelligence described in this book have come under the broad umbrella heading of ‘Narrow AI’ or ‘Weak AI’. This has nothing to do with how robust the technology is. As we saw in the early chapters, today’s deep learning neural networks are orders of magnitude less brittle than the symbol-crunching Artificial Intelligence that made up Good Old-Fashioned AI.

Supremacy: AI, ChatGPT, and the Race That Will Change the World

by

Parmy Olson

At one point Ben Goertzel, a singularity believer and AI scientist with long hippy hair, emailed Legg and several other scientists seeking ideas for a book title. It needed to describe artificial intelligence with human capabilities. Legg emailed him back, suggesting a phrase that would become a focal point for Hassabis and, eventually, a handful of the world’s largest tech companies: “artificial general intelligence.” For years, people like Hassabis, Legg, and other scientists exploring AI had used terms like strong AI or proper AI to refer to future software that displayed the same kind of intelligence as humans. But using the word general drove home an important point: the human brain was special because of all the different things it could do, from calculating numbers to peeling an orange to writing a poem.

…

In the 1990s and early 2000s, researchers managed to apply machine learning techniques to narrow tasks like recognizing faces or language, but by the time Hassabis was finishing his PhD in 2009, hardly anyone believed that machines could have general intelligence. They’d be laughed out of the room. It was a fringe theory. Fortunately, Goertzel was on the fringe, and while “artificial general intelligence,” or AGI, wasn’t snappy, he liked it enough that he slapped the term on his book and helped turn it into a common expression that would go on to help fuel hype about the field. Language and terminology would end up playing an enormous role in the development of AI, driving interest to sometimes maddening effect.

…

He knew that Hassabis wasn’t as worried about the apocalyptic risks of AI as he was, so he put pressure on the company to hire a team of people that would study all the different ways they could design AI to keep it aligned with human values and prevent it from going off the rails. DeepMind was about to get another investor with even deeper pockets who also wanted to steer it in a safe direction. Back in Silicon Valley, rumors were swirling of Peter Thiel’s involvement in a promising but secretive new start-up in London, UK, that was trying to build artificial general intelligence. Some of the region’s other technology billionaires were starting to hear about it, and one of them was Elon Musk. In 2012, two years after cofounding DeepMind, Hassabis was mingling at an exclusive conference in California that Thiel had organized when he bumped into Musk. “We hit it off straight away,” Hassabis says.

When Computers Can Think: The Artificial Intelligence Singularity

by

Anthony Berglas

,

William Black

,

Samantha Thalind

,

Max Scratchmann

and

Michelle Estes

Published 28 Feb 2015

Acknowledgements 4. Overview 2. Part I: Could Computers Ever Think? 1. People Thinking About Computers 1. The Question 2. Vitalism 3. Science vs. vitalism 4. The vital mind 5. Computers cannot think now 6. Diminishing returns 7. AI in the background 8. Robots leave factories 9. Intelligent tasks 10. Artificial General Intelligence (AGI) 11. Existence proof 12. Simulating neurons, feathers 13. Moore's law 14. Definition of intelligence 15. Turing Test 16. Robotic vs cognitive intelligence 17. Development of intelligence 18. Four year old child 19. Recursive self-improvement 20. Busy Child 21. AI foom 2. Computers Thinking About People 1.

…

AI programs often surprise their developers with what they can (and cannot) do. Kasparov stated that Deep Blue had produced some very creative chess moves even though it used a relatively simple brute-force strategy. Certainly Deep Blue was a much better chess player than its creators.

Artificial General Intelligence (AGI)

It is certainly the case that computers are becoming ever more intelligent and capable of addressing a widening variety of difficult problems. This book argues that it is only a matter of time before they achieve general, human level intelligence. This would mean that they could reason not only about the tasks at hand but also about the world in general, including their own thoughts.

…

At some point computers will have basic human-level intelligence for everyday tasks but will not yet be intelligent enough to program themselves. These machines will be very intelligent in some ways, yet quite limited in others. It is unclear how long this intermediate period will last; it could be months or many decades. Such a machine is often referred to as an Artificial General Intelligence, or AGI, ‘general’ meaning general-purpose, not restricted in the normal way that programs are. Artificial intelligence techniques such as genetic algorithms are already being used to help create artificial intelligence software, as is discussed in Part II. This process is likely to continue, with better tools producing better machines that produce better tools.

Genius Makers: The Mavericks Who Brought A. I. To Google, Facebook, and the World

by

Cade Metz

Published 15 Mar 2021

See Project Maven Allen Institute for Artificial Intelligence, 272–74 Alphabet, 186, 216, 301 AlphaGo in China, 214–17, 223–24 in Korea, 169–78, 198, 216 Altman, Sam, 161–65, 282–83, 287–88, 292–95, 298–99 ALVINN project, 43–44 Amazon contest for developing robots for warehouse picking, 278–79 facial recognition technology (Amazon Rekognition), 236–38 Android smartphones and speech recognition, 77–79 Angelova, Anelia, 136–37 ANNA microchip, 52–53 antitrust concerns, 255 Aravind Eye Hospital, 179–80, 184 artificial general intelligence (AGI), 100, 109–10, 143, 289–90, 295, 299–300, 309–10 artificial intelligence (AI). See also intelligence ability to remove flagged content, 253 AI winter, 34–35, 288 AlphaGo competition as a milestone event, 176–78, 198 artificial general intelligence (AGI), 100, 109–10, 143, 289–90, 295, 299–300, 309–10 the black-box problem, 184–85 British government funding of, 34–35 China’s plan to become the world leader in AI by 2030, 224–25 content moderation system, 231 Dartmouth Summer Research Conference (1956), 21–22 early predictions about, 288 Elon Musk’s concerns about, 152–55, 156, 158–60, 244, 245, 289 ethical AI team at Google, 237–38 “Fake News Challenge,” 256–57 Future of Life Institute, 157–60, 244, 291–92 games as the starting point for, 111–12 GANs (generative adversarial networks), 205–06, 259–60 government investment in, 224–25 importance of human judgment, 257–58 as an industry buzzword, 140–41 major contributors, 141–42, 307–08, 321–26 possibilities regarding, 9–11 practical applications of, 113–14 pushback against the media hype regarding, 270–71 robots using dreaming to generate pictures and spoken words, 200 Rosewood Hotel meeting, 160–63 the Singularity Summit, 107–09, 325–26 superintelligence, 105–06, 153, 156–60, 311 symbolic AI, 25–26 timeline of events, 317–20 tribes, distinct philosophical, 192 unpredictability of, 10 use of AI technology by bad actors, 243 AT&T, 52–53 Australian Centre for Robotic Vision, 278 
autonomous weapons, 240, 242, 244, 308 backpropagation ability to handle “exclusive-or” questions, 38–39 criticism of, 38 family tree identification, 42 Geoff Hinton’s work with, 41 Baidu auction for acquiring DNNresearch, 5–9, 11, 218–19 as competition for Facebook and Google, 132, 140 interest in neural networks and deep learning, 4–5, 9, 218–20 key players, 324 PaddlePaddle, 225 translation research, 149–50 Ballmer, Steve, 192–93 Baumgartner, Felix, 133–34 Bay Area Vision Meeting, 124–25 Bell Labs, 47, 52–53 Bengio, Yoshua, 57, 162, 198–200, 205–06, 238, 284, 305–08 BERT universal language model, 273–74 bias Black in AI, 233 of deep learning technology, 10–11 facial recognition systems, 231–32, 234–36, 308 of training data, 231–32 Billionaires’ Dinner, 154 Black in AI, 233 Bloomberg Businessweek, 132 Bloomberg News, 130, 237 the Boltzmann Machine, 28–30, 39–40, 41 Bostrom, Nick, 153, 155 Bourdev, Lubomir, 121, 124–26 Boyton, Ed, 287–88 the brain innate machinery, 269–70 interface between computers and, 291–92 mapping and simulating, 288 the neocortex’s biological algorithm, 82 understanding how the brain works, 31–32 using artificial intelligence to understand, 22–23 Breakout (game), 111–12, 113–14 Breakthrough Prize, 288 Brin, Sergey building a machine to win at Go, 170–71 and DeepMind, 301 at Google, 216 Project Maven meetings, 241 Brockett, Chris, 54–56 Brockman, Greg, 160–64 Bronx Science, 17, 21 Buolamwini, Joy, 234–38 Buxton, Bill, 190–91 Cambridge Analytica, 251–52 Canadian Institute for Advanced Research, 307 capture the flag, training a machine to play, 295–96 Carnegie Mellon University, 40–41, 43, 137, 195, 208 the Cat Paper, 88, 91 Chauffeur project, 137–38, 142 Chen, Peter, 283 China ability to develop self-driving vehicles, 226–27 development of deep learning research within, 220, 222 Google’s presence in, 215–17, 220–26 government censorship, 215–17 plan to become the world leader in AI by 2030, 224–25, 226–27 promotion of TensorFlow within, 220–22, 225 use of facial recognition technology, 308 Wuzhen AlphaGo match, 214–17, 223–24 Clarifai, 230–32, 235, 239–40, 249–50, 325 Clarke, Edmund, 195 cloud computing, 221–22, 245, 298 computer chips.

…

He had already landed Hinton, Sutskever, and Krizhevsky from the University of Toronto. Now, in the last days of December 2013, he was flying to London in pursuit of DeepMind. Founded around the same time as Google Brain, DeepMind was a start-up dedicated to an outrageously lofty goal. It aimed to build what it called “artificial general intelligence”—AGI—technology that could do anything the human brain could do, only better. That endgame was still years, decades, or perhaps even centuries away, but the founders of this tiny company were confident it would one day be achieved, and like Andrew Ng and other optimistic researchers, they believed that many of the ideas brewing at labs like the one at the University of Toronto were a strong starting point.

…

Hassabis, Legg, and Suleyman would each stamp a unique point of view on a company that looked toward the horizons of artificial intelligence but also aimed to solve problems in the nearer term, while openly raising concerns over the dangers of this technology in both the present and the future. Their stated aim—contained in the first line of their business plan—was artificial general intelligence. But at the same time, they told anyone who would listen, including potential investors, that this research could be dangerous. They said they would never share their technology with the military, and in an echo of Legg’s thesis, they warned that superintelligence could become an existential threat.

What We Owe the Future: A Million-Year View

by

William MacAskill

Published 31 Aug 2022

nuclear winter, 129 postcatastrophe recovery of, 132–134 slavery in agricultural civilisations, 47 suffering of farmed animals, 208–211 technological development feedback loop, 153 AI governance, 225 AI safety, 244 air pollution, 25, 25(fig.), 141, 227, 261 alcohol use and abuse, values and, 67, 78 alignment problem of AI, 87 AlphaFold 2, 81 AlphaGo, 80 AlphaZero, 80–81 al-Qaeda: bioweapons programme, 112–113 al-Zawahiri, Ayman, 112 Ambrosia start-up, 85 Animal Rights Militia, 240–241 animal welfare becoming vegetarian, 231–232 political activism, 72–73 the significance of values, 53 suffering of farmed animals, 208–211, 213 wellbeing of animals in the wild, 211–213 animals, evolution of, 56–57 anthrax, 109–110 anti-eutopia, 215–220 Apple iPhone, 198 Arab slavery, 47 “Are Ideas Getting Harder to Find?” 151 Armageddon (film), 106 Arrhenius, Svante, 42 artificial general intelligence (AGI) averting civilisational stagnation, 156 longterm importance of, 80–83 predicting the arrival of, 89–91 prioritising threats to improve on, 228 the pursuit of immortality, 83–86 reducing future uncertainty, 228–229 surpassing human abilities, 86–88 values lock-in, 92–95 artificial intelligence (AI) addressing neglected problems, 231 AI safety, 244 alignment problem, 87 artificial general intelligence, 80–83 defining, 80 future threats and benefits, 6 missing moments of plasticity, 43 prioritising future solutions, 228–229 uncertainty over the future, 224–226 value lock-in, 79 arts and literature preserving and projecting, 22–23 the value of non-wellbeing goods, 214–215 asteroids, collision with, 105–107, 113 Atari, 82–83 Atlantis, 12 Australia: effects of all-out nuclear war, 131 average view of wellbeing, 177–179, 179(fig.)

…

See value lock-in lock-in paradox, 101–102 Long Peace, 114 long reflection, 98–99 longtermism arguments for and against, 4–7, 257–261 concerns for future generations, 10–12 contingency of moral norms, 71–72 empowering future generations, 9 expedition into uncharted terrain, 6–7 longterm consequences of small actions, 173–175 perspective on civilisational stagnation, 159–163 population ethics, 168–171 the size of the future, 19 understanding the implications of, 229–230 values changes, 53–55 lottery winners, 203 Lustig, Richard, 203 lying, negative effects of, 241 Lyons, Oren, 11 Macaulay, Zachary, 69 MacFarquhar, Larissa, 168 machine learning artificial general intelligence development, 80–81 predicting AGI completion, 90–91 See also artificial general intelligence; artificial intelligence mammals evolution of, 4, 13(fig.) lifespan, 13, 13(fig.) megafauna, 29–30 Mao Zedong, 218–219 Marlowe, Frank, 206–207 Mars rovers, 189 mathematics Islamic Golden Age, 143 noncontingency, 32–33 Mauritania: abolition of slavery, 69–70 McKibben, Bill, 43 medicine: expected value theory in decisionmaking, 38 megafauna, 29–30 megatherium, 29 Mercy for Animals, 72–73 Metaculus forecasting platform, 113, 116 metaphors of humanity, history, and longtermism, 6–7 Middle Ages: history of civilisational stagnation, 157 migration.

…

When thinking about lock-in, the key technology is artificial intelligence.[35] Writing gave ideas the power to influence society for thousands of years; artificial intelligence could give them influence that lasts millions. I’ll discuss when this might occur later; for now let’s focus on why advanced artificial intelligence would be of such great longterm importance.

Artificial General Intelligence

Artificial intelligence (AI) is a branch of computer science that aims to design machines that can mimic or replicate human intelligence. Because of the success of machine learning as a paradigm, we’ve made enormous progress in AI over the last ten years. Machine learning is a method of creating useful algorithms without explicitly programming them; instead, it relies on learning from data, such as images, the results of computer games, or patterns of mouse clicks.

Smarter Than Us: The Rise of Machine Intelligence

by

Stuart Armstrong

Published 1 Feb 2014

See, for instance, Bill Hibbard, “Super-Intelligent Machines,” ACM SIGGRAPH Computer Graphics 35, no. 1 (2001): 13–15, http://www.siggraph.org/publications/newsletter/issues/v35/v35n1.pdf; Ben Goertzel and Joel Pitt, “Nine Ways to Bias Open-Source AGI Toward Friendliness,” Journal of Evolution and Technology 22, no. 1 (2012): 116–131, http://jetpress.org/v22/goertzel-pitt.htm. 4. Ben Goertzel, “CogPrime: An Integrative Architecture for Embodied Artificial General Intelligence,” OpenCog Foundation, October 2, 2012, accessed December 31, 2012, http://wiki.opencog.org/w/CogPrime_Overview. Chapter 10 A Summary There are no convincing reasons to assume computers will remain unable to accomplish anything that humans can. Once computers achieve something at a human level, they typically achieve it at a much higher level soon thereafter.

…

Amnon Eden et al., The Frontiers Collection (Berlin: Springer, 2012); Stuart Armstrong, Anders Sandberg, and Nick Bostrom, “Thinking Inside the Box: Controlling and Using an Oracle AI,” Minds and Machines 22, no. 4 (2012): 299–324, doi:10.1007/s11023-012-9282-2. 2. Stephen M. Omohundro, “The Basic AI Drives,” in Artificial General Intelligence 2008: Proceedings of the First AGI Conference, Frontiers in Artificial Intelligence and Applications 171 (Amsterdam: IOS, 2008), 483–492. 3. Roman V. Yampolskiy, “Leakproofing the Singularity: Artificial Intelligence Confinement Problem,” Journal of Consciousness Studies 19, nos. 1–2 (2012): 194–214, http://www.ingentaconnect.com/content/imp/jcs/2012/00000019/F0020001/art00014. 4.

…

Journal of Consciousness Studies 17, nos. 9–10 (2010): 7–65. http://www.ingentaconnect.com/content/imp/jcs/2010/00000017/f0020009/art00001. Eden, Amnon, Johnny Søraker, James H. Moor, and Eric Steinhart, eds. Singularity Hypotheses: A Scientific and Philosophical Assessment. The Frontiers Collection. Berlin: Springer, 2012. Goertzel, Ben. “CogPrime: An Integrative Architecture for Embodied Artificial General Intelligence.” OpenCog Foundation. October 2, 2012. Accessed December 31, 2012. http://wiki.opencog.org/w/CogPrime_Overview. Goertzel, Ben, and Joel Pitt. “Nine Ways to Bias Open-Source AGI Toward Friendliness.” Journal of Evolution and Technology 22, no. 1 (2012): 116–131. http://jetpress.org/v22/goertzel-pitt.htm.

Rule of the Robots: How Artificial Intelligence Will Transform Everything

by

Martin Ford

Published 13 Sep 2021

OpenAI will be able to leverage massive computational resources hosted by Microsoft’s Azure service—something that is essential given its focus on building ever larger neural networks. Only cloud computing can deliver compute power on the scale that OpenAI requires for its research. Microsoft, in turn, will gain access to practical innovations that are spawned by OpenAI’s ongoing quest for artificial general intelligence. This will likely result in applications and capabilities that can be integrated into Azure’s cloud services. Perhaps just as importantly, the Azure brand will benefit from an association with one of the world’s leading AI research organizations and better position Microsoft to compete with Google, which enjoys a strong reputation for AI leadership, in part because of its ownership of DeepMind.[14] This synergy extends far beyond this single example.

…

Many of the startup companies and university researchers working in this area believe, like Covariant, that a strategy founded on deep neural networks and reinforcement learning is the best way to fuel progress toward more dexterous robots. One notable exception is Vicarious, a small AI company based in the San Francisco Bay Area. Founded in 2010—two years before the 2012 ImageNet competition brought deep learning to the forefront—Vicarious’s long-term objective is to achieve human-level or artificial general intelligence. In other words, the company is, in a sense, competing directly with higher-profile and far better funded initiatives like those at DeepMind and OpenAI. We’ll delve into the paths being forged by those two companies and the general quest for human-level AI in Chapter 5. One of Vicarious’s major objectives has been to build applications that are more flexible—or as AI researchers would say, less “brittle”—than typical deep learning systems.

…

The manipulative work performed by doctors and nurses presents an extraordinary challenge for artificial intelligence because it requires extreme dexterity combined with problem solving and interpersonal skills, as well as the ability to handle an unpredictable environment where every situation, and every patient, is unique. As far as physical healthcare robots are concerned, the productivity scaling effect that we have seen in factories or warehouses likely lies in the distant future and will require not just vastly improved robotic dexterity, but quite possibly artificial general intelligence or something very close to it. Given the limitations of physical robots, it seems likely that any truly significant near-term AI impact on healthcare will emerge in activities that require no moving parts. In other words, artificial intelligence will make its mark in the processing of information and in purely intellectual endeavors, such as diagnosis or the development of treatment plans.

Possible Minds: Twenty-Five Ways of Looking at AI

by

John Brockman

Published 19 Feb 2019

He is the co-author (with Peter Norvig) of Artificial Intelligence: A Modern Approach. Computer scientist Stuart Russell, along with Elon Musk, Stephen Hawking, Max Tegmark, and numerous others, has insisted that attention be paid to the potential dangers in creating an intelligence on the superhuman (or even the human) level—an AGI, or artificial general intelligence, whose programmed purposes may not necessarily align with our own. His early work was on understanding the notion of “bounded optimality” as a formal definition of intelligence that you can work on. He developed the technique of rational metareasoning, “which is, roughly speaking, that you do the computations that you expect to improve the quality of your ultimate decision as quickly as possible.”

…

Chapter 8 LET’S ASPIRE TO MORE THAN MAKING OURSELVES OBSOLETE MAX TEGMARK Max Tegmark is an MIT physicist and AI researcher, president of the Future of Life Institute, scientific director of the Foundational Questions Institute, and the author of Our Mathematical Universe and Life 3.0: Being Human in the Age of Artificial Intelligence. I was introduced to Max Tegmark some years ago by his MIT colleague Alan Guth, the father of inflation theory. A distinguished theoretical physicist and cosmologist himself, Max’s principal concern nowadays is the looming existential risk posed by the creation of an AGI (artificial general intelligence—that is, one that matches human intelligence). Four years ago, Max co-founded, with Jaan Tallinn and others, the Future of Life Institute (FLI), which bills itself as “an outreach organization working to ensure that tomorrow’s most powerful technologies are beneficial for humanity.” While on a book tour in London, he was in the midst of planning for FLI, and he admits to being driven to tears in a tube station after a trip to the London Science Museum, with its exhibitions spanning the gamut of humanity’s technological achievements.

…

This suggests that we’ve seen just the tip of the intelligence iceberg; there’s an amazing potential to unlock the full intelligence latent in nature and use it to help humanity flourish—or flounder. Others, including some of the authors in this volume, dismiss the building of an AGI (artificial general intelligence—an entity able to accomplish any cognitive task at least as well as humans) not because they consider it physically impossible but because they deem it too difficult for humans to pull off in less than a century. Among professional AI researchers, both types of dismissal have become minority views because of recent breakthroughs.

The Alignment Problem: Machine Learning and Human Values

by

Brian Christian

Published 5 Oct 2020

0–1 loss function, 354n47 3CosAdd algorithm, 316, 397n13 Abbeel, Pieter, 257, 258–59, 267–68, 297 Ackley, Dave, 171–72 actor-critic architecture, 138 actualism vs. possibilism, 234–40 effective altruism and, 237–38 imitation and, 235, 239–40, 379n71 Professor Procrastinate problem, 236–37, 379n61 actuarial models, 92–93 addiction, 135, 153, 205–08, 374n65 See also drug use AdSense, 343n72 adversarial examples, 279–80, 387n8 affine transformations, 383n16 African Americans. See racial bias Against Prediction (Harcourt), 78–79 age bias, 32, 396n7 AGI. See artificial general intelligence Agüera y Arcas, Blaise, 247 AI Now Institute, 396n9 AI safety artificial general intelligence delay risks and, 310 corrigibility, 295–302, 392–93n51 field growth, 12, 249–50, 263 gridworlds for, 292–93, 294, 295, 390n29 human-machine cooperation and, 268–69 irreversibility and, 291, 293 progress in, 313–14 reward optimization and, 368n56 uncertainty and, 291–92 See also value alignment AIXI, 206–07, 263 Alciné, Jacky, 25, 29, 50 ALE.

…

See child development inference, 251–53, 269, 323–24, 385n39, 398nn29–30 See also inverse reinforcement learning information theory, 34–35, 188, 197–98, 260–61 Innocent IX (Pope), 303 Institute for Ophthalmic Research, 287 intelligence artificial general intelligence and, 209 reinforcement learning and, 144, 149, 150–51 See also artificial general intelligence interest. See curiosity interface design, 269 interference, 292 interpretability, 113–17 See also transparency interventions medical predictive models and, 84, 86, 352n12 risk-assessment models and, 80–81, 317–18, 351nn87, 90 intrinsic motivation addiction and, 205–8, 374n65 boredom and, 188, 201, 202, 203–04 knowledge-seeking agents, 206–07, 209–10, 374n73 novelty and, 189–94, 207, 370–71nn29–30, 32, 35 reinforcement learning and, 186–89, 370n12 sole dependence on, 200–03, 373n58 surprise and, 195–200, 207–08, 372nn49–50, 373nn53–54, 58 inverse reinforcement learning (IRL), 253–68 aspiration and, 386–87n55 assumptions on, 324 cooperative (CIRL), 267–68, 385nn40, 43–44 demonstration learning for, 256–61, 323–24, 383nn22–23, 398n30 feedback learning and, 262, 263–66, 384–85n37 gait and, 253–55 as ill-posed problem, 255–56 inference and, 251–53, 385n39 maximum-entropy, 260–61 inverse reward design (IRD), 301–02 irreversibility, 290–91, 292, 293, 320, 391n39, 397n22 Irving, Geoffrey, 344n86 Irwin, Robert, 326 Isaac, William, 75–77, 349n76 Jackson, Shirley Ann, 9 Jaeger, Robert, 184 Jain, Anil, 31 James, William, 121, 122, 124 Jefferson, Geoffrey, 329 Jefferson, Thomas, 278 Jim Crow laws, 344n83 Jobs, Steve, 98 Johns Hopkins University, 196–97 Jorgeson, Kevin, 220–21 Jurafsky, Dan, 46 Kabat-Zinn, Jon, 321 Kaelbling, Leslie Pack, 266, 371n30 Kage, Earl, 28 Kahn, Gregory, 288 Kalai, Adam, 6–7, 38, 41–42, 48, 316 Kálmán, Rudolf Emil, 383n15 Kant, Immanuel, 37 Kasparov, Garry, 205, 235, 242 Kaufman, Dan, 87–88 Kellogg, Donald, 214–15, 375n7 Kellogg, Luella, 214–15, 375n7 Kellogg, Winthrop, 214–15, 375n7 Kelsey, Frances Oldham, 315 Kerr, Steven, 163, 164, 168 Kim, Been, 112–17 kinesthetic teaching, 261 Kleinberg, Jon, 67, 69, 70, 73–74 Klopf, Harry, 127, 129, 130, 133, 138, 150 knowledge-seeking agents, 206–07, 209–10, 374n73 Knuth, Donald, 311, 395n2 Ko, Justin, 104, 356n55 Kodak, 28–29 Krakovna, Victoria, 292–93, 295 Krizhevsky, Alex, 21, 23–25, 285, 339n20 l0-norm, 354n47 Labeled Faces in the Wild (LFW), 31–32, 340n44 Lab for Analytical Sciences, North Carolina State University, 88 labor recruiting, 22 Landecker, Will, 103–04, 355n54 language models.

…

Contemporary state-of-the-art reinforcement-learning systems really are general—at least in the domain of board and video games—in a way that Deep Blue was not. DQN could play dozens of Atari games with equal felicity. AlphaZero is just as adept at chess as it is at shogi and Go. What’s more, artificial general intelligence (AGI) of the kind that can learn to operate fluidly in the real world may indeed require the sorts of intrinsic-motivation architectures that can make it “bored” of a game it’s played too much. At the other side of the spectrum from boredom is addiction—not a disengagement but its dark reverse, a pathological degree of repetition or perseverance.
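One way to make the idea of an agent becoming “bored” concrete is an intrinsic reward that shrinks the more often the agent visits a state. The snippet below is a minimal sketch only. It is not the mechanism used by DQN, AlphaZero, or any system named in the passage. It assumes a simple count-based novelty bonus of beta / sqrt(n), which is one common choice in the exploration literature, added to the game score. The names `CountBasedNovelty`, `bonus`, and `shaped_reward` are hypothetical.

```python
import math
from collections import defaultdict


class CountBasedNovelty:
    """Hypothetical count-based novelty bonus: beta / sqrt(n) on the n-th visit to a state."""

    def __init__(self, beta: float = 1.0) -> None:
        self.beta = beta
        self.counts = defaultdict(int)  # number of visits recorded per state

    def bonus(self, state) -> float:
        # Record this visit, then return a bonus that decays as the state becomes familiar.
        self.counts[state] += 1
        return self.beta / math.sqrt(self.counts[state])


def shaped_reward(extrinsic: float, novelty: CountBasedNovelty, state) -> float:
    # Assumed additive mix: game score (extrinsic) plus novelty bonus (intrinsic).
    return extrinsic + novelty.bonus(state)


if __name__ == "__main__":
    novelty = CountBasedNovelty(beta=1.0)
    # Revisiting the same state: the intrinsic reward falls from 1.0 toward 0,
    # a crude stand-in for the agent getting "bored" of a game it has played too much.
    for visit in range(1, 5):
        print(visit, round(novelty.bonus("level-1"), 3))
```

Running it prints bonuses of 1.0, 0.707, 0.577 and 0.5 for four visits to the same state. The decay rate and the additive mix are illustrative assumptions; real intrinsic-motivation architectures differ widely in how they measure novelty or surprise.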

The Road to Conscious Machines

by

Michael Wooldridge

Published 2 Nov 2018

But remember: it isn’t real! 11. http://tinyurl.com/y7nbo58p. 12. Unfortunately, the terminology in the literature is imprecise and inconsistent. Most people seem to use ‘artificial general intelligence’ to refer to the goal of producing general-purpose human-level intelligence in machines, without being concerned with philosophical questions such as whether they are self-aware. In this sense, artificial general intelligence is roughly the equivalent of Searle’s weak AI. However, just to confuse things, sometimes the term is used to mean something much more like Searle’s strong AI. In this book, I use it to mean something like weak AI, and I will just call it ‘General AI’. 13. http://tinyurl.com/y76xdfd9

…

For this reason, although strong AI is an important and fascinating part of the AI story, it is largely irrelevant to contemporary AI research. Go to a contemporary AI conference, and you will hear almost nothing about it – except possibly late at night, in the bar. A lesser goal is to build machines that have general-purpose human-level intelligence. Nowadays, this is usually referred to as Artificial General Intelligence (AGI) or just General AI. AGI roughly equates to having a computer that has the full range of intellectual capabilities that a person has – this would include the ability to converse in natural language (cf. the Turing test), solve problems, reason, perceive its environment and so on, at or above the same level as a typical person.

…

agent-based interface The idea of having a computer interface mediated by an AI-powered software agent. The software agent works with us on a task, as an active assistant, rather than passively waiting to be told what to do, as is the case with regular computer applications. AGI see Artificial General Intelligence AI winter The period immediately following the publication of the Lighthill Report in the early 1970s, which was extremely critical of AI. Characterized by funding cuts to AI research, and considerable scepticism about the field as a whole. Followed by the era of knowledge-based AI. AlexNet A breakthrough image recognition system, which in 2012 demonstrated dramatic improvements in image recognition.

Empire of AI: Dreams and Nightmares in Sam Altman's OpenAI

by

Karen Hao

Published 19 May 2025

They maintained that they had carefully consulted lawyers in making the decision to fire Altman and had acted in accordance with their delineated responsibilities. OpenAI was not like a normal company, its board not like a normal board. It had a unique structure that Altman had designed himself, giving the board broad authority to act in the best interest not of OpenAI’s shareholders but of its mission: to ensure that AGI, or artificial general intelligence, benefits humanity. Altman had long touted the board’s ability to fire him as its most important governance mechanism. Toner underscored the point: “If this action destroys the company, it could in fact be consistent with the mission.” The leadership relayed her words back to employees in real time: Toner didn’t care if she destroyed the company.

…

From the beginning, OpenAI had presented itself as a bold experiment in answering this question. It was founded by a group including Elon Musk and Sam Altman, with other billionaire backers like Peter Thiel, to be more than just a research lab or a company. The founders asserted a radical commitment to develop so-called artificial general intelligence, what they described as the most powerful form of AI anyone had ever seen, not for the financial gains of shareholders but for the benefit of humanity. To that end, Musk and Altman had set it up as a nonprofit and pledged $1 billion for its operation. It would not work on commercial products; instead it would be dedicated fully to research, driven by only the purest intentions of ushering in a form of AGI that would unlock global utopia, and not its opposite.

…

OpenAI had grown competitive, secretive, and insular, even fearful of the outside world under the intoxicating power of controlling such a paramount technology. Gone were notions of transparency and democracy, of self-sacrifice and collaboration. OpenAI executives had a singular obsession: to be the first to reach artificial general intelligence, to make it in their own image. Over the next four years, OpenAI became everything that it said it would not be. It turned into a nonprofit in name only, aggressively commercializing products like ChatGPT and seeking unheard-of valuations. It grew even more secretive, not only cutting off access to its own research but shifting norms across the industry to bar a significant share of AI development from public scrutiny.

Succeeding With AI: How to Make AI Work for Your Business

by

Veljko Krunic

Published 29 Mar 2020

Available from: https://www.hhs.gov/hipaa/for-professionals/security/laws-regulations/index.html June Life, Inc. The do-it-all oven. [Cited 2019 Jul 15.] Available from: https://juneoven.com/ Hubbard DW. How to measure anything: Finding the value of intangibles in business. 2nd ed. Hoboken, NJ: Wiley; 2010. Wikimedia Foundation. Artificial general intelligence. Wikipedia. [Cited 2018 Jun 13.] Available from: https://en.wikipedia.org/w/index.php?title=Artificial_general_intelligence Shani G, Gunawardana A. Evaluating recommendation systems. In: Ricci F, Rokach L, Shapira B, Kantor PB, editors. Recommender systems handbook. New York: Springer; 2011. p. 257–297. Konstan JA, McNee SM, Ziegler , Torres R, Kapoor N, Riedl JT.

…

Imagine that you’re a CEO

Suppose you’re running a company that’s making $30 billion a year, and you’re in a business that’s associated with AI. Let’s go a step further and assume that there’s a 1% chance that someone in the next 10 years might invent something approaching a strong, human-level AI—so-called Artificial General Intelligence (AGI) [76]. If the search for AGI fails, there may still be an autonomous vehicle [38] as the consolation prize. Finally, you know that your competitors are investing heavily in AI. Will you invest substantial money in AI and hire accomplished researchers to help you advance the frontiers of AI knowledge?

…

AI will make actuarial mistakes that an average human, uninformed about AI, will see as malicious. Juries, whether in court or in the court of public opinion, are made up of humans.

WARNING: There’s no way to know if AI will ever develop common sense. It may not for quite a while; maybe not even until we get strong AI/Artificial General Intelligence [76].

Accounting for AI’s actuarial view is a part of your problem domain and part of why understanding your domain is crucial. It’s difficult to account for the differences between the actuarial view AI takes and human social expectations. Accounting for those differences is not an engineering problem, nor is it something that you should pass on to the engineering team to solve.

The Age of AI: And Our Human Future

by

Henry A Kissinger

,

Eric Schmidt

and

Daniel Huttenlocher

Published 2 Nov 2021

Some primates have brains similar in size to or even larger than human brains, but they do not exhibit anything approaching human acumen. Likely, development will yield AI “savants”—programs capable of dramatically exceeding human performance in specific areas, such as advanced scientific fields.

THE DREAM OF ARTIFICIAL GENERAL INTELLIGENCE

Some developers are pushing the frontiers of machine-learning techniques to create what has been dubbed artificial general intelligence (AGI). Like AI, AGI has no precise definition. However, it is generally understood to mean AI capable of completing any intellectual task humans are capable of—in contrast to today’s “narrow” AI, which is developed to complete a specific task.

…

This notion also communicated the sense of possibility engendered by disrupting the established monopoly on information, which was largely in the hands of the church. Now the partial end of the postulated superiority of human reason, together with the proliferation of machines that can match or surpass human intelligence, promises transformations potentially more profound than even those of the Enlightenment. Even if advances in AI do not produce artificial general intelligence (AGI)—that is, software capable of human-level performance of any intellectual task and capable of relating tasks and concepts to others across disciplines—the advent of AI will alter humanity’s concept of reality and therefore of itself. We are progressing toward great achievements, but those achievements should prompt philosophical reflection.

…

In a world where an intelligence beyond one’s comprehension or control draws conclusions that are useful but alien, is it foolish to defer to its judgments? Spurred by this logic, a re-enchantment of the world may ensue, in which AIs are relied upon for oracular pronouncements to which some humans defer without question. Especially in the case of AGI (artificial general intelligence), individuals may perceive godlike intelligence—a superhuman way of knowing the world and intuiting its structures and possibilities. But deference would erode the scope and scale of human reason and thus would likely elicit backlash. Just as some opt out of social media, limit screen time for children, and reject genetically modified foods, so, too, will some attempt to opt out of the “AI world” or limit their exposure to AI systems in order to preserve space for their reason.

The Optimist: Sam Altman, OpenAI, and the Race to Invent the Future

by

Keach Hagey

Published 19 May 2025

Louis, 27 rent strikes, 27 slum clearance, 26, 34 tax credits, 22, 34–35, 53 universal basic income (UBI) and, 12–14, 194, 205, 256 AGI (artificial general intelligence), see AI (artificial intelligence) “agile” method of software development, 127 aging, the fight against, see immortality AI (artificial intelligence) belief we’re living in a simulation created by, 17 competitive arms race in, 9, 166, 211, 233, 267, 270, 312–14 exacerbating the problem of affordable housing, 254–56, 302 existential risk from, 4–6, 141, 144–45, 167–68, 177, 190, 300 game theory, 166, 285 generative AI, 1, 3, 9, 219, 221, 270 the goal of artificial general intelligence (AGI), 3, 5–10, 12–14, 133, 146–47, 170, 181, 189–90, 192, 198–200, 208, 211, 222–26, 230, 233, 237, 245, 287, 304–5 “godfathers of AI,” 188, 312 large carbon footprint of, 252 “Manhattan Project” for AI, 8, 145, 147, 219 national security implications of AI, 166, 267, 285 neural network–based AI, 147, 164, 176, 179–82, 190–93, 219, 221, 243, 314 toward the singularity, 140–42, 144–45, 168, 199–200, 305 “Sky,” an AI voice, 307–8 the three tribes of humans involved in AI (research, safety, and policy), 208–9, 276 “weights” in, 181 “wrappers” around, 278–79 see also AI research/training; AI safety; chatbots; OpenAI AI Doomer Industrial Complex, 299 AI Dungeon (video game), 247–48, 254–55 “AI Principles,” 5 AI research/training, 5, 143–45, 167–71, 177–88, 207–9, 305–6 backpropagation and, 179, 181 bias encoded in AI, 252 chess, 67, 151, 145–46, 191, 215–16 “deep learning,” 146–47 dialog as method of alignment, 265 diffusion model trained by adding digital “noise,” 262–63 “few shot” learning, 244 Go (game), 191–92, 216–17 going from “agent” to “transformer,” 218–21, 265 going from “training” to “alignment,” 8, 264–66, 276, 284, 305–6 ImageNet competition, 182, 184 the issue of Books1 and Books2, 244 large language models (LLMs), 16–17, 218, 221, 244–46, 252–53, 270–71, 275 learning means AIs have souls, 179 machine translation, 168, 219, 245 neural networks, 147, 164, 176, 179–82, 190–93, 219, 221, 243, 314 parse trees, 174 passing the bar exam, 3, 272 reinforcement learning, 147, 185, 191–93, 264, 265, 284 Rubik’s cube–solving robot hand, 218 sentiment neuron, 218 “sequence to sequence” learning, 178 software engineers and AI researchers, 191, 194 Test of Time Award, 305 the “Turing test,” 173–74 “value-lock,” 252 “zero-shot” responses, 220–21 see also video games AI safety, 2, 4–5, 8–9, 165–67 Asilomar AI Principles, 208, 211, 213, 233–34 Deployment Safety Board (DSB) at OpenAI, 279–80, 287 Elon Musk as an AI doomer, 2, 5, 163, 167–72, 214–15, 273–74 existential risk from AI, 4–6, 141, 144–45, 167–68, 177, 190, 300 how AI could go wrong, 7–8, 143–44, 147, 154 moral balancing act between progress and safety, 135 national security implications of AI, 166, 267, 285 parable of the paperclip-making AI, 5, 143–44, 164 pedophilia and, 254 Puerto Rico conference on AI safety (2015), 167–70, 207, 211 the Stochastic Parrot critique of AI, 252–53 AI Superpowers (Lee), 267 AIDS crisis, 33, 43, 49 AIM (AOL Instant Messenger), 16, 50, 76, 162 Airbnb, 4, 123, 139, 150, 152, 158, 263, 274, 292 Akin Gump Strauss Hauer & Feld law firm, 300 Alberta, Tim, 297 Alcor Life Extension Foundation, Scottsdale, AZ, 141 Alexander, Scott, 143, 165–66 “AlexNet” (Hinton), 178, 182, 266 “Algernon,” 140–41, see Yudkowsky, Eliezer Alinsky, Saul, 22, 40 Alito, Samuel, Jr., 52 Allen & Company investment bank, 117, 229, 263, 266 Allston Trading, 157 Alphabet, 194, 271 AlphaGo, 192, 217 Alt Capital, 311 Altman, Annie (Sam’s sister), 15, 36, 40–43, 134, 201–2, 226–28, 248–50, 281–82, 295 allegations against Sam, 261–62 as an escort and on OnlyFans, 249, 261–62, 281 after Jerry Altman’s death, 311–12 living on the Big Island of Hawaii, 227, 250, 311–12 Altman, Birdie, 23–25 Altman family, 15–16 Alt Capital, 311 American Millinery Company, 31 during Covid, 248–50 family therapy sessions, 249 living in Atlanta, 23 moving to Clayton outside St.

…

When OpenAI launched its uncannily humanlike chatbot, ChatGPT (the GPT stands for generative pre-trained transformer), the previous November, it was an instant smash, reaching 100 million users in less than three months, the fastest-growing app in the world to date.4 When OpenAI, only a few months later, unveiled a more formidable successor, GPT-4—it could pass the bar exam and ace the AP biology test—the dizzying rate of progress suggested that the company’s audacious mission to safely create the world’s first artificial general intelligence, or AGI, might indeed be within reach. Even the most determined AI skeptics—including one Stanford computer science professor who memorably dismissed the original ChatGPT to me as a “dancing dog”—felt their doubts soften. For a few giddy months, as every company in America frantically spun up AI task forces and tried to estimate the productivity gains that AI would bring, it felt as if we were all collectively stepping into a science fiction short story, and Altman was the author.

…

(He was in favor of the former but not so much the latter.)23 Back in 2000, Yudkowsky came to speak at Goertzel’s company (which would go bankrupt within a year). Legg points to the talk as the moment when he started to take the idea of superintelligence seriously, going beyond the caricatures in the movies.24 Goertzel and Legg began referring to the concept as “artificial general intelligence.” Legg went on to get his own PhD, writing a dissertation on “Machine Super Intelligence” that noted the technology could become an existential threat, and then moved into a postdoctoral fellowship at University College London’s Gatsby Computational Neuroscience Unit, a lab that encompassed neuroscience, machine learning, and AI.

The Transhumanist Reader

by

Max More

and

Natasha Vita-More

Published 4 Mar 2013

Shapiro, Lee Silver, Gregory Stock, Natasha Vita-More, Roy Walford, and Michael West. See http://www.extropy.org/summitkeynotes.htm. Statement for Extropy Institute Vital Progress Summit February 18, 2004. Index accelerating change adaptability aesthetics ageless AGI, see artificial general intelligence aging alchemy alterity anti-aging Aristotle Armstrong, Rachel artifact artificial general intelligence artificial intelligence artificial life Ascott, Roy atheism atom atomic augmentation authoritarian autonomous self (agent) autonomy avatar Bacon, Francis Bailey, Ronald Bainbridge, William Beloff, Laura Berger, Ted Benford, Gregory Beyond Therapy: Biotechnology and the Pursuit of Happiness bias bioart bioconservative biocultural capital bioethics biofeedback biopolitics biotechnology Blackford, Russell Blue Brain body alternative body biological body biopolitic computer interaction and body cyborg body modification morphological freedom posthuman body prosthetic body regenerated simulated transformative transhuman body wearable, see Hybronaut Bostrom, Nick brain–computer interface brain–machine interface (BMI) brain preservation Brin, David Broderick, Damien Caplan, Arthur Chalmers, David Chislenko, Alexander “Sasha,” Church, George Clark, Andy Clarke, Arthur C.

…

Nanorobotics Nanorobotics Revolution by the 2020s Conclusions 7 Life Expansion Media Living Matter Degeneration/Regeneration Transmutation Dialectics of Desirability and Viability Cybernetics Human-machine Interfaces and the Prosthetic Body Life Expansion 8 The Hybronaut Affair Techno-Organic Environment The Umwelt Bubble Network and the Hybronaut The Appendix-tail Conclusion 9 Transavatars Avatars and Simulation Avatar Censuses Secondary and Posthumous Avatars Conclusion 10 Alternative Biologies Biology as Technology The Rise of Machines Complexity The Science of Complexity Synthetic Biology – Complex Embodied Technology Top-Down Synthetic Biology Bottom-Up Synthetic Biology Protocells Artificial Biology From Proposition to Reality Future Venice Artificial Biology and Human Enhancement Part III Human Enhancement: The Cognitive Sphere 11 Re-Inventing Ourselves I. Introduction: Where the Rubber Meets the Road II. What’s in an Interface? III. New Systemic Wholes IV. Incorporation Versus Use V. Extended Cognition VI. Profound Embodiment VII. Enhancement or Subjugation? VIII. Conclusions 12 Artificial General Intelligence and the Future of Humanity The Top Priority for Mankind AGI and the Transformation of Individual and Collective Experience AGI and the Global Brain What is a Mind that We Might Build One? Why So Little Work on AGI? Why the “AGI Sputnik” Will Change Things Dramatically and Launch a New Phase of the Intelligence Explosion The Risks and Rewards of Advanced AGI 13 Intelligent Information Filters and Enhanced Reality Preface Text Translation and Its Consequences Enhanced Multimedia Structure of Enhanced Reality Historical Observations Truth vs.

…

Ben Goertzel, PhD, is an AGI Researcher, Novamente LLC, Chief Scientist, Aidyia Holdings, and Vice Chair, Humanity+. He authored The Hidden Pattern: A Patternist Philosophy of Mind (Brown Walker Press, 2006); A Cosmist Manifesto: Practical Philosophy for the Posthuman Age (Humanity+ Press, 2010); and co-edited with Cassio Pennachin Artificial General Intelligence (Springer, 2007). Robin Hanson, PhD, is Associate Professor of Economics, George Mason University. He authored “Meet the New Conflict, Same as the Old Conflict” (Journal of Consciousness Studies 19, 2012); “Enhancing our Truth Orientation” (Human Enhancement, Oxford University Press, 2009); and “Insider Trading and Prediction Markets” (Journal of Law, Economics, and Policy 4, 2008).

The Fourth Age: Smart Robots, Conscious Computers, and the Future of Humanity

by

Byron Reese