The Digital Doctor: Hope, Hype, and Harm at the Dawn of Medicine’s Computer Age

by Robert Wachter · 7 Apr 2015 · 309pp · 114,984 words

, physician offices, and pharmacies. Everyone will learn from Wachter’s intelligent assessment and become a believer that, despite today’s glitches and frustrations, the future computer age will make medicine much better for us all.” —Ezekiel J. Emanuel, MD, PhD Vice Provost for Global Initiatives and Chair, Departments of Medical Ethics and

…

. For those of us whose formative years were spent rummaging through shoeboxes, how could we help but greet healthcare’s reluctant, subsidized entry into the computer age with unalloyed enthusiasm? Yet once we clinicians started using computers to actually deliver care, it dawned on us that something was deeply wrong. Why were

…

and Tecco decided to launch the organization while sitting in Harvard Business School’s Rock Hall. Chapter 26 The Productivity Paradox You can see the computer age everywhere but in the productivity statistics. —Nobel Prize–winning MIT economist Robert Solow, writing in 1987 Between the time David Blumenthal stepped down as national

…

. Dougherty, “Google Buys Lift Labs in Further Biotech Push,” New York Times, September 10, 2014. Chapter 26: The Productivity Paradox 243 “You can see the computer age” R. M. Solow, review of Manufacturing Matters: The Myth of the Post-Industrial Economy, by S. Cohen and J. Zysman, New York Times Book Review

Gambling Man

by Lionel Barber · 3 Oct 2024 · 424pp · 123,730 words

becoming smaller and more affordable: pocket calculators, video games and, crucially, personal computers. The arrival of NEC’s PC-8001 in 1979 inaugurated the personal-computer age in Japan. Alongside miniaturization, Japan was moving rapidly towards digitization. Most consumer products such as washing machines came equipped with ‘micon’, the microcomputers or microcontrollers

…

news veteran not easily impressed. But when Masa, still only 24, presented him with a business card marked ‘CEO’ and started talking about the new computer age, Kawashima took note. After several exchanges over lunch and visits to the SoftBank office, Kawashima suggested that his colleagues at Asahi Weekly write a profile

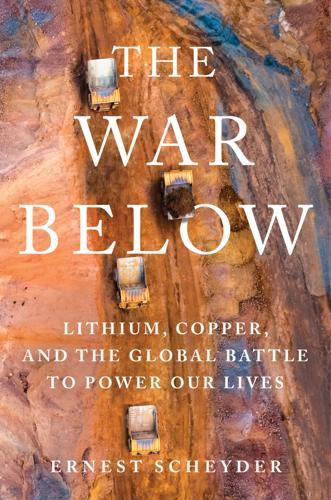

The War Below: Lithium, Copper, and the Global Battle to Power Our Lives

by Ernest Scheyder · 30 Jan 2024 · 355pp · 133,726 words

1983, General Motors and Japan’s Sumitomo Special Metals each announced the invention of neodymium-iron-boron magnets, a monumental breakthrough just as the personal computing age was dawning.44 All this growth did not go unnoticed by Wall Street or by China. In 1977, the Union Oil Company of California, known

The Paul Street Boys

by Ferenc Molnár · 14 Jun 1927 · 204pp · 53,592 words

-grandfathers. Perhaps the publishers felt that a novel written in 1907 in a somewhat dated 1927 translation would not appeal to the teenagers of our computer age. The sad truth is that some of the juvenile classics I re-read had lost their old magic, at least for me. But not this

Trade Your Way to Financial Freedom

by Van K. Tharp · 1 Jan 1998

1750 (that is, in about 250 years). The next doubling took only about 150 years to the turn of the century. The onset of the computer age reduced the doubling time to about 5 years. And, with today’s computers offering electronic bulletin boards, DVDs, fiber optics, the Internet, and so on

Asset and Risk Management: Risk Oriented Finance

by Louis Esch, Robert Kieffer and Thierry Lopez · 28 Nov 2005 · 416pp · 39,022 words

.0039 + 0.0271 − 0.0794 − 0.0907 = −0.1391 12 750 2 3 An argument that no longer makes sense with the advent of the computer age. See, for example, the portfolio return shown below. 38 Asset and Risk Management Table 3.1 Classic and logarithmic returns Rt Rt∗ 0.0039 0

Working Effectively With Legacy Code

by Michael Feathers · 14 Jul 2004 · 508pp · 120,339 words

the affected things will affect, and so on. This type of reasoning is nothing new; people have been doing it since the dawn of the computer age. Programmers sit down and reason about their programs for many reasons. The funny thing is, we don’t talk about it much. We just assume

Kindle Fire: The Missing Manual

by Peter Meyers · 9 Feb 2012

. The Secret of Grisly Manor ($0.99). This app is one of the best examples of what some predicted would happen to novels in the Computer Age: They’d turn into audience-piloted explorations of richly illustrated worlds. Behind each door, and within each room, users could inspect objects, chew on clues

On Intelligence

by Jeff Hawkins and Sandra Blakeslee · 1 Jan 2004 · 246pp · 81,625 words

, but it was the best metaphor they had. Somehow information entered the brain and the brain-machine determined how the body should react. During the computer age, the brain has been viewed as a particular type of machine, the programmable computer. And as we saw in chapter 1, AI researchers have stuck

Speaking Code: Coding as Aesthetic and Political Expression

by Geoff Cox and Alex McLean · 9 Nov 2012

137 Bogost, Ian. Unit Operations: An Approach to Videogame Criticism. Cambridge, MA: MIT Press, 2004. Bolter, Jay David. Turing’s Man: Western Culture in the Computer Age. Chapel Hill: University of North Carolina Press, 1984. Bolter, Jay, and Richard Grusin. Remediation: Understanding New Media. Cambridge, MA: MIT Press, 1999. Borrelli, Loretta. “The

In Pursuit of the Traveling Salesman: Mathematics at the Limits of Computation

by William J. Cook · 1 Jan 2011 · 245pp · 12,162 words

@War: The Rise of the Military-Internet Complex

by Shane Harris · 14 Sep 2014 · 340pp · 96,149 words

The New Division of Labor: How Computers Are Creating the Next Job Market

by Frank Levy and Richard J. Murnane · 11 Apr 2004 · 187pp · 55,801 words

The Nature of Technology

by W. Brian Arthur · 6 Aug 2009 · 297pp · 77,362 words

Memory Machines: The Evolution of Hypertext

by Belinda Barnet · 14 Jul 2013 · 193pp · 19,478 words

The Fugitive Game: Online With Kevin Mitnick

by Jonathan Littman · 1 Jan 1996

Who Gets What — and Why: The New Economics of Matchmaking and Market Design

by Alvin E. Roth · 1 Jun 2015 · 282pp · 80,907 words

Obliquity: Why Our Goals Are Best Achieved Indirectly

by John Kay · 30 Apr 2010 · 237pp · 50,758 words

How to Predict the Unpredictable

by William Poundstone · 267pp · 71,941 words

Gray Day: My Undercover Mission to Expose America's First Cyber Spy

by Eric O'Neill · 1 Mar 2019 · 299pp · 88,375 words

The Intelligent Asset Allocator: How to Build Your Portfolio to Maximize Returns and Minimize Risk

by William J. Bernstein · 12 Oct 2000

The Future of the Brain: Essays by the World's Leading Neuroscientists

by Gary Marcus and Jeremy Freeman · 1 Nov 2014 · 336pp · 93,672 words

The Man Who Invented the Computer

by Jane Smiley · 18 Oct 2010 · 253pp · 80,074 words

The Computer Boys Take Over: Computers, Programmers, and the Politics of Technical Expertise

by Nathan L. Ensmenger · 31 Jul 2010 · 429pp · 114,726 words

A Demon of Our Own Design: Markets, Hedge Funds, and the Perils of Financial Innovation

by Richard Bookstaber · 5 Apr 2007 · 289pp · 113,211 words

Snakes in Suits: When Psychopaths Go to Work

by Dr. Paul Babiak and Dr. Robert Hare · 7 May 2007 · 345pp · 100,135 words

Predictive Analytics: The Power to Predict Who Will Click, Buy, Lie, or Die

by Eric Siegel · 19 Feb 2013 · 502pp · 107,657 words

Prediction Machines: The Simple Economics of Artificial Intelligence

by Ajay Agrawal, Joshua Gans and Avi Goldfarb · 16 Apr 2018 · 345pp · 75,660 words

Surviving AI: The Promise and Peril of Artificial Intelligence

by Calum Chace · 28 Jul 2015 · 144pp · 43,356 words

The Knowledge Illusion

by Steven Sloman · 10 Feb 2017 · 313pp · 91,098 words

Eat People: And Other Unapologetic Rules for Game-Changing Entrepreneurs

by Andy Kessler · 1 Feb 2011 · 272pp · 64,626 words

I, Warbot: The Dawn of Artificially Intelligent Conflict

by Kenneth Payne · 16 Jun 2021 · 339pp · 92,785 words

Final Jeopardy: Man vs. Machine and the Quest to Know Everything

by Stephen Baker · 17 Feb 2011 · 238pp · 77,730 words

Kill It With Fire: Manage Aging Computer Systems

by Marianne Bellotti · 17 Mar 2021 · 232pp · 71,237 words

Microchip: An Idea, Its Genesis, and the Revolution It Created

by Jeffrey Zygmont · 15 Mar 2003

Bitcoin: The Future of Money?

by Dominic Frisby · 1 Nov 2014 · 233pp · 66,446 words

Red Rover: Inside the Story of Robotic Space Exploration, From Genesis to the Mars Rover Curiosity

by Roger Wiens · 12 Mar 2013 · 265pp · 79,896 words

The Entrepreneurial State: Debunking Public vs. Private Sector Myths

by Mariana Mazzucato · 1 Jan 2011 · 382pp · 92,138 words

Intertwingled: The Work and Influence of Ted Nelson (History of Computing)

by Douglas R. Dechow · 2 Jul 2015 · 223pp · 52,808 words

Culture and Prosperity: The Truth About Markets - Why Some Nations Are Rich but Most Remain Poor

by John Kay · 24 May 2004 · 436pp · 76 words

Becoming Steve Jobs: The Evolution of a Reckless Upstart Into a Visionary Leader

by Brent Schlender and Rick Tetzeli · 24 Mar 2015 · 464pp · 155,696 words

Hit Refresh: The Quest to Rediscover Microsoft's Soul and Imagine a Better Future for Everyone

by Satya Nadella, Greg Shaw and Jill Tracie Nichols · 25 Sep 2017 · 391pp · 71,600 words

Richard Dawkins: How a Scientist Changed the Way We Think

by Alan Grafen; Mark Ridley · 1 Jan 2006 · 286pp · 90,530 words

The People's Republic of Walmart: How the World's Biggest Corporations Are Laying the Foundation for Socialism

by Leigh Phillips and Michal Rozworski · 5 Mar 2019 · 202pp · 62,901 words

Debtor Nation: The History of America in Red Ink (Politics and Society in Modern America)

by Louis Hyman · 3 Jan 2011

Where Wizards Stay Up Late: The Origins of the Internet

by Katie Hafner and Matthew Lyon · 1 Jan 1996 · 352pp · 96,532 words

What the Dormouse Said: How the Sixties Counterculture Shaped the Personal Computer Industry

by John Markoff · 1 Jan 2005 · 394pp · 108,215 words

Digital Accounting: The Effects of the Internet and Erp on Accounting

by Ashutosh Deshmukh · 13 Dec 2005

The Future of Ideas: The Fate of the Commons in a Connected World

by Lawrence Lessig · 14 Jul 2001 · 494pp · 142,285 words

Raw Data Is an Oxymoron

by Lisa Gitelman · 25 Jan 2013

Climbing Mount Improbable

by Richard Dawkins and Lalla Ward · 1 Jan 1996 · 309pp · 101,190 words

Billionaire, Nerd, Savior, King: Bill Gates and His Quest to Shape Our World

by Anupreeta Das · 12 Aug 2024 · 315pp · 115,894 words

Microserfs

by Douglas Coupland · 14 Feb 1995

Rule of the Robots: How Artificial Intelligence Will Transform Everything

by Martin Ford · 13 Sep 2021 · 288pp · 86,995 words

Life After Google: The Fall of Big Data and the Rise of the Blockchain Economy

by George Gilder · 16 Jul 2018 · 332pp · 93,672 words

Shape: The Hidden Geometry of Information, Biology, Strategy, Democracy, and Everything Else

by Jordan Ellenberg · 14 May 2021 · 665pp · 159,350 words

Artificial Intelligence: A Modern Approach

by Stuart Russell and Peter Norvig · 14 Jul 2019 · 2,466pp · 668,761 words

The Crash Detectives: Investigating the World's Most Mysterious Air Disasters

by Christine Negroni · 26 Sep 2016 · 269pp · 74,955 words

Broad Band: The Untold Story of the Women Who Made the Internet

by Claire L. Evans · 6 Mar 2018 · 371pp · 93,570 words

Surveillance Valley: The Rise of the Military-Digital Complex

by Yasha Levine · 6 Feb 2018 · 474pp · 130,575 words

Capitalism Without Capital: The Rise of the Intangible Economy

by Jonathan Haskel and Stian Westlake · 7 Nov 2017 · 346pp · 89,180 words

Commodore: A Company on the Edge

by Brian Bagnall · 13 Sep 2005 · 781pp · 226,928 words

Track Changes

by Matthew G. Kirschenbaum · 1 May 2016 · 519pp · 142,646 words

Circle of Greed: The Spectacular Rise and Fall of the Lawyer Who Brought Corporate America to Its Knees

by Patrick Dillon and Carl M. Cannon · 2 Mar 2010 · 613pp · 181,605 words

Turing's Cathedral

by George Dyson · 6 Mar 2012

The Googlization of Everything:

by Siva Vaidhyanathan · 1 Jan 2010 · 281pp · 95,852 words

From Airline Reservations to Sonic the Hedgehog: A History of the Software Industry

by Martin Campbell-Kelly · 15 Jan 2003

Rise of the Rocket Girls: The Women Who Propelled Us, From Missiles to the Moon to Mars

by Nathalia Holt · 4 Apr 2016 · 288pp · 92,175 words

Code: The Hidden Language of Computer Hardware and Software

by Charles Petzold · 28 Sep 1999 · 566pp · 122,184 words

Humble Pi: A Comedy of Maths Errors

by Matt Parker · 7 Mar 2019

The Rights of the People

by David K. Shipler · 18 Apr 2011 · 495pp · 154,046 words

Insanely Great: The Life and Times of Macintosh, the Computer That Changed Everything

by Steven Levy · 2 Feb 1994 · 244pp · 66,599 words

Data-Ism: The Revolution Transforming Decision Making, Consumer Behavior, and Almost Everything Else

by Steve Lohr · 10 Mar 2015 · 239pp · 70,206 words

Being You: A New Science of Consciousness

by Anil Seth · 29 Aug 2021 · 418pp · 102,597 words

Dark Territory: The Secret History of Cyber War

by Fred Kaplan · 1 Mar 2016 · 383pp · 105,021 words

Talk to Me: How Voice Computing Will Transform the Way We Live, Work, and Think

by James Vlahos · 1 Mar 2019 · 392pp · 108,745 words

User Friendly: How the Hidden Rules of Design Are Changing the Way We Live, Work & Play

by Cliff Kuang and Robert Fabricant · 7 Nov 2019

Whole Earth: The Many Lives of Stewart Brand

by John Markoff · 22 Mar 2022 · 573pp · 142,376 words

The Chip: How Two Americans Invented the Microchip and Launched a Revolution

by T. R. Reid · 18 Dec 2007 · 293pp · 91,110 words

The Friendly Orange Glow: The Untold Story of the PLATO System and the Dawn of Cyberculture

by Brian Dear · 14 Jun 2017 · 708pp · 223,211 words

Dealers of Lightning

by Michael A. Hiltzik · 27 Apr 2000 · 559pp · 157,112 words

Stealth

by Peter Westwick · 22 Nov 2019 · 474pp · 87,687 words

Empire of the Sum: The Rise and Reign of the Pocket Calculator

by Keith Houston · 22 Aug 2023 · 405pp · 105,395 words

The Secret War Between Downloading and Uploading: Tales of the Computer as Culture Machine

by Peter Lunenfeld · 31 Mar 2011 · 239pp · 56,531 words

The Dream Machine: J.C.R. Licklider and the Revolution That Made Computing Personal

by M. Mitchell Waldrop · 14 Apr 2001

Tools for Thought: The History and Future of Mind-Expanding Technology

by Howard Rheingold · 14 May 2000 · 352pp · 120,202 words

Cogs and Monsters: What Economics Is, and What It Should Be

by Diane Coyle · 11 Oct 2021 · 305pp · 75,697 words

The Man Behind the Microchip: Robert Noyce and the Invention of Silicon Valley

by Leslie Berlin · 9 Jun 2005

Irrational Exuberance: With a New Preface by the Author

by Robert J. Shiller · 15 Feb 2000 · 319pp · 106,772 words

The Charisma Machine: The Life, Death, and Legacy of One Laptop Per Child

by Morgan G. Ames · 19 Nov 2019 · 426pp · 117,775 words

If Then: How Simulmatics Corporation Invented the Future

by Jill Lepore · 14 Sep 2020 · 467pp · 149,632 words

Habeas Data: Privacy vs. The Rise of Surveillance Tech

by Cyrus Farivar · 7 May 2018 · 397pp · 110,222 words

When Einstein Walked With Gödel: Excursions to the Edge of Thought

by Jim Holt · 14 May 2018 · 436pp · 127,642 words

What to Think About Machines That Think: Today's Leading Thinkers on the Age of Machine Intelligence

by John Brockman · 5 Oct 2015 · 481pp · 125,946 words

Why Things Bite Back: Technology and the Revenge of Unintended Consequences

by Edward Tenner · 1 Sep 1997

Humans Are Underrated: What High Achievers Know That Brilliant Machines Never Will

by Geoff Colvin · 3 Aug 2015 · 271pp · 77,448 words

The Future of the Professions: How Technology Will Transform the Work of Human Experts

by Richard Susskind and Daniel Susskind · 24 Aug 2015 · 742pp · 137,937 words

The Runaway Species: How Human Creativity Remakes the World

by David Eagleman and Anthony Brandt · 30 Sep 2017 · 345pp · 84,847 words

Lost at Sea

by Jon Ronson · 1 Oct 2012 · 375pp · 106,536 words

Deep Thinking: Where Machine Intelligence Ends and Human Creativity Begins

by Garry Kasparov · 1 May 2017 · 331pp · 104,366 words

Profiting Without Producing: How Finance Exploits Us All

by Costas Lapavitsas · 14 Aug 2013 · 554pp · 158,687 words

Big Data: A Revolution That Will Transform How We Live, Work, and Think

by Viktor Mayer-Schönberger and Kenneth Cukier · 5 Mar 2013 · 304pp · 82,395 words

In the Age of the Smart Machine

by Shoshana Zuboff · 14 Apr 1988

Fun Inc.

by Tom Chatfield · 13 Dec 2011 · 266pp · 67,272 words

Machine, Platform, Crowd: Harnessing Our Digital Future

by Andrew McAfee and Erik Brynjolfsson · 26 Jun 2017 · 472pp · 117,093 words

The Trouble With Billionaires

by Linda McQuaig · 1 May 2013 · 261pp · 81,802 words

The Inevitable: Understanding the 12 Technological Forces That Will Shape Our Future

by Kevin Kelly · 6 Jun 2016 · 371pp · 108,317 words

From Gutenberg to Google: electronic representations of literary texts

by Peter L. Shillingsburg · 15 Jan 2006 · 224pp · 12,941 words

GDP: A Brief but Affectionate History

by Diane Coyle · 23 Feb 2014 · 159pp · 45,073 words

Alex's Adventures in Numberland

by Alex Bellos · 3 Apr 2011 · 437pp · 132,041 words

Troublemakers: Silicon Valley's Coming of Age

by Leslie Berlin · 7 Nov 2017 · 615pp · 168,775 words

Live Work Work Work Die: A Journey Into the Savage Heart of Silicon Valley

by Corey Pein · 23 Apr 2018 · 282pp · 81,873 words

Steve Jobs

by Walter Isaacson · 23 Oct 2011 · 915pp · 232,883 words

Rainbow Six

by Tom Clancy · 2 Jan 1998

Darwin Among the Machines

by George Dyson · 28 Mar 2012 · 463pp · 118,936 words

Fortune's Formula: The Untold Story of the Scientific Betting System That Beat the Casinos and Wall Street

by William Poundstone · 18 Sep 2006 · 389pp · 109,207 words

Darwin's Dangerous Idea: Evolution and the Meanings of Life

by Daniel C. Dennett · 15 Jan 1995 · 846pp · 232,630 words

Open Standards and the Digital Age: History, Ideology, and Networks (Cambridge Studies in the Emergence of Global Enterprise)

by Andrew L. Russell · 27 Apr 2014 · 675pp · 141,667 words

Crypto: How the Code Rebels Beat the Government Saving Privacy in the Digital Age

by Steven Levy · 15 Jan 2002 · 468pp · 137,055 words

Smart Cities: Big Data, Civic Hackers, and the Quest for a New Utopia

by Anthony M. Townsend · 29 Sep 2013 · 464pp · 127,283 words

Social Life of Information

by John Seely Brown and Paul Duguid · 2 Feb 2000 · 791pp · 85,159 words

Complexity: A Guided Tour

by Melanie Mitchell · 31 Mar 2009 · 524pp · 120,182 words

Digital Apollo: Human and Machine in Spaceflight

by David A. Mindell · 3 Apr 2008 · 377pp · 21,687 words

The End of Work

by Jeremy Rifkin · 28 Dec 1994 · 372pp · 152 words

The Road Ahead

by Bill Gates, Nathan Myhrvold and Peter Rinearson · 15 Nov 1995 · 317pp · 101,074 words

Model Thinker: What You Need to Know to Make Data Work for You

by Scott E. Page · 27 Nov 2018 · 543pp · 153,550 words

Genius Makers: The Mavericks Who Brought A. I. To Google, Facebook, and the World

by Cade Metz · 15 Mar 2021 · 414pp · 109,622 words

Shady Characters: The Secret Life of Punctuation, Symbols, and Other Typographical Marks

by Keith Houston · 23 Sep 2013

The Perfect Weapon: War, Sabotage, and Fear in the Cyber Age

by David E. Sanger · 18 Jun 2018 · 394pp · 117,982 words

12 Bytes: How We Got Here. Where We Might Go Next

by Jeanette Winterson · 15 Mar 2021 · 256pp · 73,068 words

How the World Ran Out of Everything

by Peter S. Goodman · 11 Jun 2024 · 528pp · 127,605 words

Artificial Intelligence: A Guide for Thinking Humans

by Melanie Mitchell · 14 Oct 2019 · 350pp · 98,077 words

The Cultural Logic of Computation

by David Golumbia · 31 Mar 2009 · 268pp · 109,447 words

Hacking Capitalism

by Johan Söderberg

The Book of Why: The New Science of Cause and Effect

by Judea Pearl and Dana Mackenzie · 1 Mar 2018

We Are Electric: Inside the 200-Year Hunt for Our Body's Bioelectric Code, and What the Future Holds

by Sally Adee · 27 Feb 2023 · 329pp · 101,233 words

Kingdom of Characters: The Language Revolution That Made China Modern

by Jing Tsu · 18 Jan 2022 · 408pp · 105,715 words

A People’s History of Computing in the United States

by Joy Lisi Rankin

From Counterculture to Cyberculture: Stewart Brand, the Whole Earth Network, and the Rise of Digital Utopianism

by Fred Turner · 31 Aug 2006 · 339pp · 57,031 words

The Rise and Fall of American Growth: The U.S. Standard of Living Since the Civil War (The Princeton Economic History of the Western World)

by Robert J. Gordon · 12 Jan 2016 · 1,104pp · 302,176 words

Nexus: A Brief History of Information Networks From the Stone Age to AI

by Yuval Noah Harari · 9 Sep 2024 · 566pp · 169,013 words

The Codebreakers: The Comprehensive History of Secret Communication From Ancient Times to the Internet

by David Kahn · 1 Feb 1963 · 1,799pp · 532,462 words

The Digital Divide: Arguments for and Against Facebook, Google, Texting, and the Age of Social Networking

by Mark Bauerlein · 7 Sep 2011 · 407pp · 103,501 words

The Long Good Buy: Analysing Cycles in Markets

by Peter Oppenheimer · 3 May 2020 · 333pp · 76,990 words

Hackers: Heroes of the Computer Revolution - 25th Anniversary Edition

by Steven Levy · 18 May 2010 · 598pp · 183,531 words

The Signal and the Noise: Why So Many Predictions Fail-But Some Don't

by Nate Silver · 31 Aug 2012 · 829pp · 186,976 words

Boom: Bubbles and the End of Stagnation

by Byrne Hobart and Tobias Huber · 29 Oct 2024 · 292pp · 106,826 words

How to Create a Mind: The Secret of Human Thought Revealed

by Ray Kurzweil · 13 Nov 2012 · 372pp · 101,174 words

Average Is Over: Powering America Beyond the Age of the Great Stagnation

by Tyler Cowen · 11 Sep 2013 · 291pp · 81,703 words

The Flat White Economy

by Douglas McWilliams · 15 Feb 2015 · 193pp · 47,808 words

Protocol: how control exists after decentralization

by Alexander R. Galloway · 1 Apr 2004 · 287pp · 86,919 words

The Cobweb

by Neal Stephenson and J. Frederick George · 31 May 2005 · 514pp · 153,274 words

Zucked: Waking Up to the Facebook Catastrophe

by Roger McNamee · 1 Jan 2019 · 382pp · 105,819 words

Computer: A History of the Information Machine

by Martin Campbell-Kelly and Nathan Ensmenger · 29 Jul 2013 · 528pp · 146,459 words

Trees on Mars: Our Obsession With the Future

by Hal Niedzviecki · 15 Mar 2015 · 343pp · 102,846 words

21 Lessons for the 21st Century

by Yuval Noah Harari · 29 Aug 2018 · 389pp · 119,487 words

The Music of the Primes

by Marcus Du Sautoy · 26 Apr 2004 · 434pp · 135,226 words

The Death of Money: The Coming Collapse of the International Monetary System

by James Rickards · 7 Apr 2014 · 466pp · 127,728 words

How Not to Network a Nation: The Uneasy History of the Soviet Internet (Information Policy)

by Benjamin Peters · 2 Jun 2016 · 518pp · 107,836 words

The Net Delusion: The Dark Side of Internet Freedom

by Evgeny Morozov · 16 Nov 2010 · 538pp · 141,822 words

Red Plenty

by Francis Spufford · 1 Jan 2007 · 544pp · 168,076 words

The Long Boom: A Vision for the Coming Age of Prosperity

by Peter Schwartz, Peter Leyden and Joel Hyatt · 18 Oct 2000 · 353pp · 355 words

Surfaces and Essences

by Douglas Hofstadter and Emmanuel Sander · 10 Sep 2012 · 1,079pp · 321,718 words

From Bacteria to Bach and Back: The Evolution of Minds

by Daniel C. Dennett · 7 Feb 2017 · 573pp · 157,767 words

To Be a Machine: Adventures Among Cyborgs, Utopians, Hackers, and the Futurists Solving the Modest Problem of Death

by Mark O'Connell · 28 Feb 2017 · 252pp · 79,452 words

The Truth Machine: The Blockchain and the Future of Everything

by Paul Vigna and Michael J. Casey · 27 Feb 2018 · 348pp · 97,277 words

The Wealth of Humans: Work, Power, and Status in the Twenty-First Century

by Ryan Avent · 20 Sep 2016 · 323pp · 90,868 words

Utopia Is Creepy: And Other Provocations

by Nicholas Carr · 5 Sep 2016 · 391pp · 105,382 words

Wonderland: How Play Made the Modern World

by Steven Johnson · 15 Nov 2016 · 322pp · 88,197 words

Jihad vs. McWorld: Terrorism's Challenge to Democracy

by Benjamin Barber · 20 Apr 2010 · 454pp · 139,350 words

An Optimist's Tour of the Future

by Mark Stevenson · 4 Dec 2010 · 379pp · 108,129 words

Who Owns the Future?

by Jaron Lanier · 6 May 2013 · 510pp · 120,048 words

The Formula: How Algorithms Solve All Our Problems-And Create More

by Luke Dormehl · 4 Nov 2014 · 268pp · 75,850 words

Heart of the Machine: Our Future in a World of Artificial Emotional Intelligence

by Richard Yonck · 7 Mar 2017 · 360pp · 100,991 words

The Innovators: How a Group of Inventors, Hackers, Geniuses and Geeks Created the Digital Revolution

by Walter Isaacson · 6 Oct 2014 · 720pp · 197,129 words

Rise of the Robots: Technology and the Threat of a Jobless Future

by Martin Ford · 4 May 2015 · 484pp · 104,873 words

Stocks for the Long Run 5/E: the Definitive Guide to Financial Market Returns & Long-Term Investment Strategies

by Jeremy Siegel · 7 Jan 2014 · 517pp · 139,477 words

The History and Uncertain Future of Handwriting

by Anne Trubek · 5 Sep 2016

The Big Score

by Michael S. Malone · 20 Jul 2021

50 Future Ideas You Really Need to Know

by Richard Watson · 5 Nov 2013 · 219pp · 63,495 words

Finance and the Good Society

by Robert J. Shiller · 1 Jan 2012 · 288pp · 16,556 words

The Age of Spiritual Machines: When Computers Exceed Human Intelligence

by Ray Kurzweil · 31 Dec 1998 · 696pp · 143,736 words

World Without Mind: The Existential Threat of Big Tech

by Franklin Foer · 31 Aug 2017 · 281pp · 71,242 words

Future Politics: Living Together in a World Transformed by Tech

by Jamie Susskind · 3 Sep 2018 · 533pp

The Man Who Solved the Market: How Jim Simons Launched the Quant Revolution

by Gregory Zuckerman · 5 Nov 2019 · 407pp · 104,622 words

AIQ: How People and Machines Are Smarter Together

by Nick Polson and James Scott · 14 May 2018 · 301pp · 85,126 words

A World Without Email: Reimagining Work in an Age of Communication Overload

by Cal Newport · 2 Mar 2021 · 350pp · 90,898 words

Palo Alto: A History of California, Capitalism, and the World

by Malcolm Harris · 14 Feb 2023 · 864pp · 272,918 words

Concepts, Techniques, and Models of Computer Programming

by Peter Van Roy and Seif Haridi · 15 Feb 2004 · 931pp · 79,142 words

The Greatest Capitalist Who Ever Lived: Tom Watson Jr. And the Epic Story of How IBM Created the Digital Age

by Ralph Watson McElvenny and Marc Wortman · 14 Oct 2023 · 567pp · 171,072 words

The Jasons: The Secret History of Science's Postwar Elite

by Ann Finkbeiner · 26 Mar 2007

The Age of Entitlement: America Since the Sixties

by Christopher Caldwell · 21 Jan 2020 · 450pp · 113,173 words

The Great Divergence: America's Growing Inequality Crisis and What We Can Do About It

by Timothy Noah · 23 Apr 2012 · 309pp · 91,581 words

Power and Progress: Our Thousand-Year Struggle Over Technology and Prosperity

by Daron Acemoglu and Simon Johnson · 15 May 2023 · 619pp · 177,548 words

IBM and the Holocaust

by Edwin Black · 30 Jun 2001 · 735pp · 214,791 words

A Mind at Play: How Claude Shannon Invented the Information Age

by Jimmy Soni and Rob Goodman · 17 Jul 2017 · 415pp · 114,840 words

The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies

by Erik Brynjolfsson and Andrew McAfee · 20 Jan 2014 · 339pp · 88,732 words

Making It Happen: Fred Goodwin, RBS and the Men Who Blew Up the British Economy

by Iain Martin · 11 Sep 2013 · 387pp · 119,244 words

Inside the Robot Kingdom: Japan, Mechatronics and the Coming Robotopia

by Frederik L. Schodt · 31 Mar 1988 · 361pp · 83,886 words

Paper: A World History

by Mark Kurlansky · 3 Apr 2016 · 485pp · 126,597 words

Skyfaring: A Journey With a Pilot

by Mark Vanhoenacker · 1 Jun 2015 · 319pp · 105,949 words

Machines of Loving Grace: The Quest for Common Ground Between Humans and Robots

by John Markoff · 24 Aug 2015 · 413pp · 119,587 words

Operation Chaos: The Vietnam Deserters Who Fought the CIA, the Brainwashers, and Themselves

by Matthew Sweet · 13 Feb 2018 · 493pp · 136,235 words

Scale: The Universal Laws of Growth, Innovation, Sustainability, and the Pace of Life in Organisms, Cities, Economies, and Companies

by Geoffrey West · 15 May 2017 · 578pp · 168,350 words

Paper Knowledge: Toward a Media History of Documents

by Lisa Gitelman · 26 Mar 2014

Keeping Up With the Quants: Your Guide to Understanding and Using Analytics

by Thomas H. Davenport and Jinho Kim · 10 Jun 2013 · 204pp · 58,565 words

Cheap: The High Cost of Discount Culture

by Ellen Ruppel Shell · 2 Jul 2009 · 387pp · 110,820 words

Speculative Everything: Design, Fiction, and Social Dreaming

by Anthony Dunne and Fiona Raby · 22 Nov 2013 · 165pp · 45,397 words

Homo Deus: A Brief History of Tomorrow

by Yuval Noah Harari · 1 Mar 2015 · 479pp · 144,453 words

The Weightless World: Strategies for Managing the Digital Economy

by Diane Coyle · 29 Oct 1998 · 49,604 words

How the Mind Works

by Steven Pinker · 1 Jan 1997 · 913pp · 265,787 words

The Fourth Age: Smart Robots, Conscious Computers, and the Future of Humanity

by Byron Reese · 23 Apr 2018 · 294pp · 96,661 words

Deep Medicine: How Artificial Intelligence Can Make Healthcare Human Again

by Eric Topol · 1 Jan 2019 · 424pp · 114,905 words

The Elements of Statistical Learning (Springer Series in Statistics)

by Trevor Hastie, Robert Tibshirani and Jerome Friedman · 25 Aug 2009 · 764pp · 261,694 words

The Internet Is Not the Answer

by Andrew Keen · 5 Jan 2015 · 361pp · 81,068 words

Life Inc.: How the World Became a Corporation and How to Take It Back

by Douglas Rushkoff · 1 Jun 2009 · 422pp · 131,666 words

The Code: Silicon Valley and the Remaking of America

by Margaret O'Mara · 8 Jul 2019

A World Without Work: Technology, Automation, and How We Should Respond

by Daniel Susskind · 14 Jan 2020 · 419pp · 109,241 words

One Day in August: Ian Fleming, Enigma, and the Deadly Raid on Dieppe

by David O’Keefe · 5 Nov 2020 · 1,243pp · 167,097 words

Your Computer Is on Fire

by Thomas S. Mullaney, Benjamin Peters, Mar Hicks and Kavita Philip · 9 Mar 2021 · 661pp · 156,009 words

The Myth of Capitalism: Monopolies and the Death of Competition

by Jonathan Tepper · 20 Nov 2018 · 417pp · 97,577 words

The Age of AI: And Our Human Future

by Henry A. Kissinger, Eric Schmidt and Daniel Huttenlocher · 2 Nov 2021 · 194pp · 57,434 words

Digital Barbarism: A Writer's Manifesto

by Mark Helprin · 19 Apr 2009 · 272pp · 83,378 words

The Year's Best Science Fiction: Twenty-Sixth Annual Collection

by Gardner Dozois · 23 Jun 2009 · 1,263pp · 371,402 words

The Master and His Emissary: The Divided Brain and the Making of the Western World

by Iain McGilchrist · 8 Oct 2012

Exponential: How Accelerating Technology Is Leaving Us Behind and What to Do About It

by Azeem Azhar · 6 Sep 2021 · 447pp · 111,991 words

A Place for Everything: The Curious History of Alphabetical Order

by Judith Flanders · 6 Feb 2020 · 404pp · 110,942 words

The Capitalist Manifesto

by Johan Norberg · 14 Jun 2023 · 295pp · 87,204 words

Statistics in a Nutshell

by Sarah Boslaugh · 10 Nov 2012

How to Fix the Future: Staying Human in the Digital Age

by Andrew Keen · 1 Mar 2018 · 308pp · 85,880 words

Evil Geniuses: The Unmaking of America: A Recent History

by Kurt Andersen · 14 Sep 2020 · 486pp · 150,849 words

The Secret Life of Bletchley Park: The WWII Codebreaking Centre and the Men and Women Who Worked There

by Sinclair McKay · 24 May 2010 · 351pp · 107,966 words

The Last Tycoons: The Secret History of Lazard Frères & Co.

by William D. Cohan · 25 Dec 2015 · 1,009pp · 329,520 words

The Meritocracy Myth

by Stephen J. McNamee · 17 Jul 2013 · 440pp · 108,137 words

Future Files: A Brief History of the Next 50 Years

by Richard Watson · 1 Jan 2008

Ghost Wars: The Secret History of the CIA, Afghanistan, and Bin Laden, from the Soviet Invasion to September 10, 2001

by Steve Coll · 23 Feb 2004 · 956pp · 288,981 words

Utopia for Realists: The Case for a Universal Basic Income, Open Borders, and a 15-Hour Workweek

by Rutger Bregman · 13 Sep 2014 · 235pp · 62,862 words

The Evolution of Everything: How New Ideas Emerge

by Matt Ridley · 395pp · 116,675 words

The Information: A History, a Theory, a Flood

by James Gleick · 1 Mar 2011 · 855pp · 178,507 words

Outliers

by Malcolm Gladwell · 29 May 2017 · 230pp · 71,320 words

Selfie: How We Became So Self-Obsessed and What It's Doing to Us

by Will Storr · 14 Jun 2017 · 431pp · 129,071 words

The Rise of the Network Society

by Manuel Castells · 31 Aug 1996 · 843pp · 223,858 words

Give People Money

by Annie Lowrey · 10 Jul 2018 · 242pp · 73,728 words

PostgreSQL: Introduction and Concepts

by Bruce Momjian · 15 Jan 2001 · 490pp · 40,083 words

Bad Money: Reckless Finance, Failed Politics, and the Global Crisis of American Capitalism

by Kevin Phillips · 31 Mar 2008 · 422pp · 113,830 words

Global Catastrophic Risks

by Nick Bostrom and Milan M. Cirkovic · 2 Jul 2008

Rebel Ideas: The Power of Diverse Thinking

by Matthew Syed · 9 Sep 2019 · 280pp · 76,638 words

The Technology Trap: Capital, Labor, and Power in the Age of Automation

by Carl Benedikt Frey · 17 Jun 2019 · 626pp · 167,836 words

What Would the Great Economists Do?: How Twelve Brilliant Minds Would Solve Today's Biggest Problems

by Linda Yueh · 4 Jun 2018 · 453pp · 117,893 words

The AI Economy: Work, Wealth and Welfare in the Robot Age

by Roger Bootle · 4 Sep 2019 · 374pp · 111,284 words

Astounding: John W. Campbell, Isaac Asimov, Robert A. Heinlein, L. Ron Hubbard, and the Golden Age of Science Fiction

by Alec Nevala-Lee · 22 Oct 2018 · 622pp · 169,014 words

The Dawn of Innovation: The First American Industrial Revolution

by Charles R. Morris · 1 Jan 2012 · 456pp · 123,534 words

Shocks, Crises, and False Alarms: How to Assess True Macroeconomic Risk

by Philipp Carlsson-Szlezak and Paul Swartz · 8 Jul 2024 · 259pp · 89,637 words

The Psychology of Money: Timeless Lessons on Wealth, Greed, and Happiness

by Morgan Housel · 7 Sep 2020 · 209pp · 53,175 words

Head, Hand, Heart: Why Intelligence Is Over-Rewarded, Manual Workers Matter, and Caregivers Deserve More Respect

by David Goodhart · 7 Sep 2020 · 463pp · 115,103 words

The Better Angels of Our Nature: Why Violence Has Declined

by Steven Pinker · 24 Sep 2012 · 1,351pp · 385,579 words

The New Village Green: Living Light, Living Local, Living Large

by Stephen Morris · 1 Sep 2007 · 289pp · 112,697 words

Decoding Organization: Bletchley Park, Codebreaking and Organization Studies

by Christopher Grey · 22 Mar 2012

Mapping Mars: Science, Imagination and the Birth of a World

by Oliver Morton · 15 Feb 2003 · 409pp · 129,423 words

The Great Economists: How Their Ideas Can Help Us Today

by Linda Yueh · 15 Mar 2018 · 374pp · 113,126 words

The Man Who Knew Infinity: A Life of the Genius Ramanujan

by Robert Kanigel · 25 Apr 2016

Material World: A Substantial Story of Our Past and Future

by Ed Conway · 15 Jun 2023 · 515pp · 152,128 words

The Meritocracy Trap: How America's Foundational Myth Feeds Inequality, Dismantles the Middle Class, and Devours the Elite

by Daniel Markovits · 14 Sep 2019 · 976pp · 235,576 words

Don't Be Evil: How Big Tech Betrayed Its Founding Principles--and All of Us

by Rana Foroohar · 5 Nov 2019 · 380pp · 109,724 words

A History of Modern Britain

by Andrew Marr · 2 Jul 2009 · 872pp · 259,208 words

Thinking in Numbers

by Daniel Tammet · 15 Aug 2012 · 212pp · 68,754 words

The Age of Stagnation: Why Perpetual Growth Is Unattainable and the Global Economy Is in Peril

by Satyajit Das · 9 Feb 2016 · 327pp · 90,542 words

Fully Automated Luxury Communism

by Aaron Bastani · 10 Jun 2019 · 280pp · 74,559 words

The Social Life of Money

by Nigel Dodd · 14 May 2014 · 700pp · 201,953 words

Overbooked: The Exploding Business of Travel and Tourism

by Elizabeth Becker · 16 Apr 2013 · 570pp · 158,139 words

Rust: The Longest War

by Jonathan Waldman · 10 Mar 2015 · 347pp · 112,727 words

The Sirens' Call: How Attention Became the World's Most Endangered Resource

by Chris Hayes · 28 Jan 2025 · 359pp · 100,761 words

The Clock Mirage: Our Myth of Measured Time

by Joseph Mazur · 20 Apr 2020 · 283pp · 85,906 words

Innovation and Its Enemies

by Calestous Juma · 20 Mar 2017

Automation and the Future of Work

by Aaron Benanav · 3 Nov 2020 · 175pp · 45,815 words

Blood in the Machine: The Origins of the Rebellion Against Big Tech

by Brian Merchant · 25 Sep 2023 · 524pp · 154,652 words

How the Railways Will Fix the Future: Rediscovering the Essential Brilliance of the Iron Road

by Gareth Dennis · 12 Nov 2024 · 261pp · 76,645 words

The Glass Half-Empty: Debunking the Myth of Progress in the Twenty-First Century

by Rodrigo Aguilera · 10 Mar 2020 · 356pp · 106,161 words

Germany

by Andrea Schulte-Peevers · 17 Oct 2010

Why Geography Matters: Three Challenges Facing America: Climate Change, the Rise of China, and Global Terrorism

by Harm J. de Blij · 15 Nov 2007 · 481pp · 121,300 words

Longevity: To the Limits and Beyond (Research and Perspectives in Longevity)

by Jean-Marie Robine, James W. Vaupel, Bernard Jeune and Michel Allard · 2 Jan 1997

Buy Now, Pay Later: The Extraordinary Story of Afterpay

by Jonathan Shapiro and James Eyers · 2 Aug 2021 · 444pp · 124,631 words

The Great Divide: Unequal Societies and What We Can Do About Them

by Joseph E. Stiglitz · 15 Mar 2015 · 409pp · 125,611 words

The Uninhabitable Earth: Life After Warming

by David Wallace-Wells · 19 Feb 2019 · 343pp · 101,563 words

AC/DC: The Savage Tale of the First Standards War

by Tom McNichol · 31 Aug 2006

The Great Shark Hunt: Strange Tales From a Strange Time

by Hunter S. Thompson · 6 Nov 2003 · 893pp · 282,706 words

The Price of Time: The Real Story of Interest

by Edward Chancellor · 15 Aug 2022 · 829pp · 187,394 words

The Wealth and Poverty of Nations: Why Some Are So Rich and Some So Poor

by David S. Landes · 14 Sep 1999 · 1,060pp · 265,296 words

The Bookshop: A History of the American Bookstore

by Evan Friss · 5 Aug 2024 · 493pp · 120,793 words

Capitalism: Money, Morals and Markets

by John Plender · 27 Jul 2015 · 355pp · 92,571 words

The Retreat of Western Liberalism

by Edward Luce · 20 Apr 2017 · 223pp · 58,732 words

Zbig: The Life of Zbigniew Brzezinski, America's Great Power Prophet

by Edward Luce · 13 May 2025 · 612pp · 235,188 words

The Rough Guide to Jamaica

by Polly Thomas, Laura Henzell, Rob Coates and Adam Vaitilingam

Headache and Backache

by Ole Larsen · 24 Apr 2011 · 89pp · 31,802 words

The Story of Work: A New History of Humankind

by Jan Lucassen · 26 Jul 2021 · 869pp · 239,167 words

Fall: The Mysterious Life and Death of Robert Maxwell, Britain's Most Notorious Media Baron

by John Preston · 9 Feb 2021 · 374pp · 110,238 words

Revenge of the Tipping Point: Overstories, Superspreaders, and the Rise of Social Engineering

by Malcolm Gladwell · 1 Oct 2024 · 283pp · 85,644 words

The Reckoning: Financial Accountability and the Rise and Fall of Nations

by Jacob Soll · 28 Apr 2014 · 382pp · 105,166 words

The Most Powerful Idea in the World: A Story of Steam, Industry, and Invention

by William Rosen · 31 May 2010 · 420pp · 124,202 words

Everything That Makes Us Human: Case Notes of a Children's Brain Surgeon

by Jay Jayamohan · 20 Feb 2020 · 260pp · 80,230 words

Going Loco

by Tom Smith · 15 Mar 2011 · 277pp · 88,736 words

War of Shadows: Codebreakers, Spies, and the Secret Struggle to Drive the Nazis From the Middle East

by Gershom Gorenberg · 19 Jan 2021 · 555pp · 163,712 words

The Red and the Blue: The 1990s and the Birth of Political Tribalism

by Steve Kornacki · 1 Oct 2018 · 589pp · 167,680 words

Fire and Steam: A New History of the Railways in Britain

by Christian Wolmar · 1 Mar 2009 · 493pp · 145,326 words

Last Trains: Dr Beeching and the Death of Rural England

by Charles Loft · 27 Mar 2013 · 383pp · 98,179 words

The Boundless Sea: A Human History of the Oceans

by David Abulafia · 2 Oct 2019 · 1,993pp · 478,072 words

Transcending the Cold War: Summits, Statecraft, and the Dissolution of Bipolarity in Europe, 1970–1990

by Kristina Spohr and David Reynolds · 24 Aug 2016 · 627pp · 127,613 words

A Man and His Ship: America's Greatest Naval Architect and His Quest to Build the S.S. United States

by Steven Ujifusa · 9 Jul 2012 · 650pp · 155,108 words

Let Them Eat Junk: How Capitalism Creates Hunger and Obesity

by Robert Albritton · 31 Mar 2009 · 273pp · 93,419 words