Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions That Shape Social Media

by

Tarleton Gillespie

Published 25 Jun 2018

Personal interview, member of content policy team, Facebook

In May 2017, the Guardian published a trove of documents it dubbed “The Facebook Files.” These documents instructed, over many PowerPoint slides and in unsettling detail, exactly what content moderators working on Facebook’s behalf should remove, approve, or escalate to Facebook for further review. The documents offer a bizarre and disheartening glimpse into a process that Facebook and other social media platforms generally keep under wraps, a mundane look at what actually doing the work of content moderation requires. As a whole, the documents are a bit difficult to stomach. Unlike the clean principles articulated in Facebook’s community standards, they are a messy and disturbing hodgepodge of parameters, decision trees, and rules of thumb for how to implement those standards in the face of real content.

…

(In the original, the images on the first page are outlined in red, those on the second in green, to emphasize which are to be rejected and which are to be kept.) But the most important revelation of these leaked documents is no single detail within them; it is the fact that they had to be leaked (along with Facebook’s content moderation guidelines leaked to SZ in 2016, or Facebook’s content moderation guidelines leaked to Gawker in 2012).3 These documents were never meant to be seen by the public. They instruct and manage the thousands of “crowdworkers” responsible for the first line of human review of Facebook pages, posts, and photos that have been either flagged by users or identified algorithmically as possibly violating the site’s guidelines.

…

Other sites, modeled after blogging tools and searchable archives, subscribed to an “information wants to be free” ethos that was shared by designers and participants alike.14 In fact, in the early days of a platform, it was not unusual for there to be no one in an official position to handle content moderation. Often content moderation at a platform was handled by user support or community relations teams, generally more focused on offering users technical assistance; as a part of the legal team’s operations, responding to harassment or illegal activity while also maintaining compliance with technical standards and privacy obligations; or as a side task of the team tasked with removing spam.

Photo: Facebook User Operations team members at the main campus in Menlo Park, California, May 2012. Photo by Robyn Beck, in the AFP collection. © Robyn Beck/Getty Images. Used with permission.

Character Limit: How Elon Musk Destroyed Twitter

by

Kate Conger

and

Ryan Mac

Published 17 Sep 2024

It felt like whiplash to Roth, flailing as they reacted to Musk’s chaotic proclamation that Twitter was failing to protect free speech. But it was also an opportunity for Roth to make dramatic repairs to the content moderation system he’d grappled with for a decade. The rules were patchwork, and the tools he had to enforce them were weak. Over the course of the pandemic and Trump’s final days in office, Roth had become convinced that Twitter needed a more sensible approach to content moderation, and while he had done his best to make the right decisions, he had only an inbox full of death threats to show for it.

* * *

Having set Roth on the path to write up the rules for Project Saturn, Agrawal turned to Segal and began working on Prism, his plan to slash Twitter’s budgets.

…

Coleman’s idea was that Twitter could do the same, letting users fix misinformation on the platform faster than its content moderators could. In January 2021, he began rolling out Birdwatch, which quickly became popular with Twitter’s leadership. Alongside Dorsey’s nascent investment in Bluesky, Birdwatch showed that Twitter was opening its gates to users, letting them have more control over their experiences on social media. Since he’d become a darling of the C-suite based on Birdwatch’s success, Coleman seemed like a natural partner for Roth as he redesigned Twitter’s content moderation strategy. While Roth and Coleman tried to reimagine what Saturn would look like, Agrawal and Sullivan focused on rallying the troops.

Digital Empires: The Global Battle to Regulate Technology

by

Anu Bradford

Published 25 Sep 2023

The video was posted to awaken the international community to the horrors of the unfolding war, with the hope of galvanizing a global condemnation of the repressive Syrian regime. But as these examples reveal, drawing lines between permissible and impermissible speech in ways that are socially acceptable is exceedingly difficult. Yet, despite the delicate nature of content moderation, many government regulators have largely abdicated these types of decisions to the platforms themselves. Beyond its flawed outcomes, content moderation can be disconcerting in its methods as well. For example, alongside their reliance on algorithms, all platforms use human moderators who apply so-called community guidelines to decide what content stays up and what is removed.

…

But as revealed by the German newspaper Sueddeutsche Zeitung in a 2018 story,10 there is a massive human toll borne by these moderators who work on the frontlines “cleaning” the internet. In return for meager pay and few employment protections, content moderators are exposed to a constant stream of graphic violence and cruelty. The story reported that a single Facebook moderator in Germany, for instance, was expected to handle 1,300 reports a day.11 A 2014 article published in Wired documented the work of Facebook’s content moderators in the Philippines, who clean the platform of illegal content while being paid $1–4 per hour for their work. These moderators are exposed hour after hour to the worst possible content posted to these internet platforms.

…

One Google moderator estimated having to filter through 15,000 images a day, including images of child pornography, beheadings, and animal abuse.12 In 2020, Facebook (now Meta) settled a lawsuit brought by over 10,000 of its content moderators, agreeing to pay $52 million in mental health compensation.13 Content moderators pay an enormous psychological price in helping to keep the platforms safer and more civil for users around the world, but their plight also lays bare the distance between the highly compensated and powerful tech executives in Silicon Valley and the behind-the-scenes labor employed to scour the internet for harmful content.

Extremely Hardcore: Inside Elon Musk's Twitter

by

Zoë Schiffer

Published 13 Feb 2024

All any advertiser wanted was consistency and a basic transaction: to pay money and hope that an ad pushing a product, service, or brand might show up next to a benign tweet from LeBron James. Even the off chance that an ad might appear near anything resembling hate speech was an advertiser’s nightmare. “Advertisers play an underappreciated role in content moderation,” says Evelyn Douek, a professor and speech regulation expert. “So much of the content moderation discourse has always been a highfalutin discussion on free speech, on safety versus voice. But content moderation is a product, and brand safety has always been a key driver in terms of how these platforms create value.” All this sounded a lot like censorship to Musk. Once he owned Twitter, he planned to roll out a subscription product.

…

The attributes that made Musk good at tweeting—a combination of recklessness and shamelessness—made him exceedingly bad at running Twitter. His impulsiveness did not play well with advertisers. His thirst for speed alarmed regulators. And his opposition to content moderation alienated regular users. Six months after the deal closed, Twitter had lost two thirds of its value and found itself in hot water with lawmakers in the United States and Europe. As Twitter’s business went into free fall, Musk’s reputation took a hit. To many, he’d become more edgelord, less visionary. “Content moderation is really hard and apparently harder than rocket science,” noted Evelyn Douek, an assistant professor at Stanford Law School, who studies online speech regulation.

…

Anyone who worked at Twitter could have told him these goals were in direct conflict. Users might disagree about the type of content moderation they wanted to see, but few wanted no moderation whatsoever, which could devolve into a harassment-laden hellscape. More importantly, Twitter was hamstrung by outside forces. As long as Twitter was a company—even a private company—it was beholden to app stores, regulators, and (in all likelihood) advertisers. Yoel Roth’s trust and safety team knew this intimately. For years, they’d been working to balance content moderation with free speech. If Twitter failed to enforce its policies, it would get a call from Apple, hinting that the company’s next app release might be held up if it didn’t take stronger action.

System Error: Where Big Tech Went Wrong and How We Can Reboot

by

Rob Reich

,

Mehran Sahami

and

Jeremy M. Weinstein

Published 6 Sep 2021

The number of human content moderators needed to tackle the volume of information on these platforms is enormous. In 2017, YouTube CEO Susan Wojcicki announced that the company would hire 10,000 content moderators in the coming year. The following year, Mark Zuckerberg wrote that “the team responsible for enforcing these [community standards] policies is made up of around 30,000 people. . . . they review more than two million pieces of content every day.” Indeed, the big tech platforms should be credited for taking an aggressive stance toward content moderation and hiring armies of content moderators. But being a content moderator comes with a price.

…

Selena Scola, a onetime content moderator at Facebook, sued the company in 2018, claiming that the stress of the job had caused her to develop post-traumatic stress disorder (PTSD). Her suit reported that her job involved viewing “distressing videos and photographs of rapes, suicides, beheadings and other killings.” The suit was joined by several other former content moderators who had had similar experiences, claiming that “Facebook had failed to provide them with a safe workspace.” In May 2020, Facebook agreed to settle the case with its former and current content moderators to the tune of $52 million.

…

The inability of AI alone to solve the problem was on full display at the outset of the COVID-19 pandemic. YouTube, Twitter, and Facebook wanted to limit the number of workers, including content moderators, coming into the office. Concerns for user privacy posed obstacles to accessing data from home computers and networks. Company guidelines often require that content moderators do the job only in secure locations in corporate offices. Twitter acknowledged the impact this greater reliance on AI for content moderation would have on its platform, writing in a March 2020 post that it was “increasing our use of machine learning and automation to take a wide range of actions on potentially abusive and manipulative content.”

Your Computer Is on Fire

by

Thomas S. Mullaney

,

Benjamin Peters

,

Mar Hicks

and

Kavita Philip

Published 9 Mar 2021

I use the term “elite content reviewers” to denote the class (and often racial) difference between “commercial content moderators” (in the sense used by Sarah T. Roberts) and the reviewers I study on the other end, at police agencies, NCMEC, and Facebook and Microsoft’s child safety teams. Sarah T. Roberts’s important work on commercial content moderators has documented the intense precarity and psychological burden of CCM as outsourced, contractual labor. See Sarah T. Roberts, “Digital Detritus: ‘Error’ and the Logic of Opacity in Social Media Content Moderation,” First Monday 23, no. 3 (2018), http://dx.doi.org/10.5210/fm.v23i3.8283.

…

19. See Roberts, “Your AI Is a Human,” this volume, on initial stages of content moderation flagging.
20. Adrian Chen, “The Laborers Who Keep Dick Pics and Beheadings Out of Your Facebook Feed,” Wired (October 2014), https://www.wired.com/2014/10/content-moderation/.
21. Cf. Roberts, “Your AI Is a Human,” this volume; Sarah T. Roberts, “Digital Refuse: Canadian Garbage, Commercial Content Moderation and the Global Circulation of Social Media’s Waste,” Wi: Journal of Mobile Media 10, no. 1 (2016): 1–18, http://wi.mobilities.ca/digitalrefuse/.
22. Michael C. Seto et al., “Production and Active Trading of Child Sexual Exploitation Images Depicting Identified Victims.

…

What I discovered, through subsequent research into the state of computing (including various aspects of AI, such as machine vision and algorithmic intervention), the state of the social media industry, and the state of its outsourced, globalized low-wage and low-status commercial content moderation (CCM) workforce10 was that, indeed, computers did not do that work of evaluation, adjudication, or gatekeeping of online UGC, at least hardly by themselves. In fact, it has been largely down to humans to undertake this critical role of brand protection and legal compliance on the part of social media firms, often as contractors or other kinds of third-party employees (think “lean workforce” here again). While I have taken up the issue of what constitutes this commercial, industrialized practice of online content moderation as a central point of my research agenda over the past decade and in many contexts,11 what remained a possibly equally fascinating and powerful open question around commercial content moderation was why I had jumped to the conclusion in the first place that AI was behind any content evaluation that may have been going on.

Internet for the People: The Fight for Our Digital Future

by

Ben Tarnoff

Published 13 Jun 2022

It’s true that these damages can be softened somewhat, and that larger firms can soften them more easily than smaller ones. But here too the market sets limits: Facebook can only spend so much on content moderation before its shareholders revolt. More importantly, its addiction to engagement, and the symbiosis with the Right that this addiction has engendered, is the very basis of its business model—which creates the problems that content moderation is supposed to address. The comparison that comes to mind is the tragicomedy of coal companies embracing carbon capture: it would be easier to simply stop burning coal. Online malls are not inequality machines purely on account of their effects, however.

…

Another way to think of these interventions is as investments in care. The scholar Lindsay Bartkowski argues that content moderation is best understood as a form of care work and, like other forms of care work, is systematically under-valued. Certain kinds of caring go entirely unpaid—mothers bearing and raising children, for instance—while others are performed for menial pay, as in the case of the home health aides and nursing home staff who care for the elderly. Similarly, content moderation is typically a low-wage job, despite being as essential to the reproduction of our online social worlds as other kinds of care are to the reproduction of our offline social worlds.

…

Google is especially reliant on contingent labor; the company has more temps, vendors, and contractors (TVCs) than full-time employees.
131, They also perform … Gray and Suri, Ghost Work.
132, This shadow workforce is just … A 2016 report found that average earnings for direct employees in Silicon Valley were $113,300, while white-collar contract workers made $53,200 and blue-collar contract workers made $19,900. The same study found that contract workers receive few benefits: 31 percent of blue-collar contract workers had no health insurance at all. See Silicon Valley Rising, “Tech’s Invisible Workforce,” March 2016. Traumatizing working conditions for content moderators: Sarah T. Roberts, Behind the Screen: Content Moderation in the Shadows of Social Media (New Haven, CT: Yale University Press, 2019).
132, Predatory inclusion, argues … Predatory inclusion: Tressie McMillan Cottom, “Where Platform Capitalism and Racial Capitalism Meet: The Sociology of Race and Racism in the Digital Society,” Sociology of Race and Ethnicity 6, no. 4 (2020): 441–49.

Empire of AI: Dreams and Nightmares in Sam Altman's OpenAI

by

Karen Hao

Published 19 May 2025

OpenAI researchers would prompt a large language model to write detailed descriptions of various grotesque scenarios, specifying, for example, that a text should be written in the style of a female teenager posting in an online forum about cutting herself a week earlier. In that sense, the work did differ from traditional content moderation. Where content moderators for Meta reviewed actual user-generated posts to determine whether they should stay on Facebook, Okinyi and his team were annotating content to train OpenAI’s content-moderation filter in order to prevent the company’s models from producing those kinds of outputs in the first place. To cover enough breadth, some of the examples were at least partly dreamed up by the company’s own software to imagine the worst of the worst

…

numbering just over: Erin Woo and Stephanie Palazzolo, “OpenAI Overhauls Content Moderation Efforts as Elections Loom,” The Information, December 18, 2023, theinformation.com/articles/openai-overhauls-content-moderation-efforts-as-elections-loom.
The severe shortage: “Behind the Scenes Scaling ChatGPT—Evan Morikawa at LeadDev West Coast 2023,” posted October 26, 2023, by LeadDev, YouTube, 27 min., 12 sec., youtu.be/PeKMEXUrlq4.
In an attempt to leverage: OpenAI, “Using GPT-4 for Content Moderation,” OpenAI (blog), August 15, 2023, openai.com/index/using-gpt-4-for-content-moderation.

…

Okinyi’s managers at Sama gave him an assessment they called a resiliency screening. He read some unsettling passages of text and was told to categorize them based on a set of instructions. When he passed with flying colors, he was given a choice to join a new team to do work he considered to be similar to content moderation. He had never done content moderation before, but the texts in the assessment seemed manageable enough. Not only would it be absurd to turn down a job in the middle of the pandemic, but he was thinking about his future. He was living in Pipeline, a chaotic, slum-like neighborhood in southeast Nairobi, jammed with tenements and twenty-four-hour street vendors, buzzing with the restless energy of twentysomethings jostling their way to something better.

Code Dependent: Living in the Shadow of AI

by

Madhumita Murgia

Published 20 Mar 2024

Together, they have potentially global implications for the employment conditions of a hidden army of tens of thousands of workers employed to perform outsourced digital work for large technology companies. The content-moderation work was distinct from the labelling tasks that Ian, Benja and their colleagues had been doing. Sama’s chief executive, Wendy Gonzalez, told me she believed content moderation was ‘important work’, but ‘quite, quite challenging’, adding that that type of work had only ever been 2 per cent of Sama’s business.8 Sama was, at its heart, a data-labelling outfit, she said. In early 2023, as it faced multiple lawsuits, Sama exited the content-moderation business and this entire office was shut down. The closure of the Meta content hub was sparked by Daniel Motaung, a twenty-seven-year-old employee of Sama who had worked in this very building.

…

She observed, first-hand, that the most lucrative parts of Silicon Valley products – AI recommendation engines, such as Instagram and TikTok’s main feeds or X’s For You tab that grab our attention – are often built on the shoulders of the most vulnerable, including poor youth, women and migrant labourers whose right to live and work in a country is dependent on their job. Without the labour of outsourced content moderators, these feeds would be simply unusable, too poisonous for our society to consume as greedily as we do. It wasn’t just the nature of the content itself that was a problem – it was their working conditions, this reimagining of a human worker as an automaton, simultaneously training AI to do their own jobs. Content moderators were expected to process hundreds of pieces of content every day, irrespective of the nature of their toxicity. They had to watch details in the videos to determine intent and context and zoom into unpleasant imagery of wounds or body parts, to classify their nature.

…

Gray and Siddharth Suri, Ghost Work: How to Stop Silicon Valley from Building a New Global Underclass (Houghton Mifflin Harcourt Publishing, 2019).
5 Sama, ‘Sama by the Numbers’, February 11, 2022, https://www.sama.com/blog/building-an-ethical-supply-chain/.
6 Sama, ‘Environmental & Social Impact Report’, June 14, 2022, https://etjdg74ic5h.exactdn.com/wp-content/uploads/2023/07/Impact-Report-2023-2.pdf.
7 Ayenat Mersie, ‘Court Rules Meta Can Be Sued in Kenya over Alleged Unlawful Redundancies’, Reuters, April 20, 2023, https://www.reuters.com/technology/court-rules-meta-can-be-sued-kenya-over-alleged-unlawful-redundancies-2023-04-20/.
8 David Pilling and Madhumita Murgia, ‘“You Can’t Unsee It”: The Content Moderators Taking on Facebook’, Financial Times, May 18, 2023, https://www.ft.com/content/afeb56f2-9ba5-4103-890d-91291aea4caa.
9 Billy Perrigo, ‘Inside Facebook’s African Sweatshop’, Time, February 17, 2022, https://time.com/6147458/facebook-africa-content-moderation-employee-treatment/.
10 Milagros Miceli and Julian Posada, ‘The Data-Production Dispositif’, CSCW 2022. Forthcoming in the Proceedings of the ACM on Human-Computer Interaction, May 24, 2022, 1–37.
11 Dave Lee, ‘Why Big Tech Pays Poor Kenyans to Teach Self-Driving Cars’, BBC News, November 3, 2018, https://www.bbc.co.uk/news/technology-46055595.

Ghost Work: How to Stop Silicon Valley From Building a New Global Underclass

by

Mary L. Gray

and

Siddharth Suri

Published 6 May 2019

The Brothers Reuther and the Story of the UAW. Boston: Houghton Mifflin, 1976.
Roberts, Sarah T. Behind the Screen: Content Moderation in the Shadows of Social Media. New Haven, CT: Yale University Press, forthcoming.
———. “Digital Detritus: ‘Error’ and the Logic of Opacity in Social Media Content Moderation.” First Monday 23, no. 3 (March 1, 2018). http://firstmonday.org/ojs/index.php/fm/article/view/8283.
———. “Social Media’s Silent Filter.” The Atlantic, March 8, 2017. https://www.theatlantic.com/technology/archive/2017/03/commercial-content-moderation/518796/.
Roediger, David R. The Wages of Whiteness: Race and the Making of the American Working Class.

…

(For example, if a person wants to find an expensive wedding gift, would they enter “fine china” or “fancy dinnerware”?) Kala’s children also help when she picks up tasks identifying “adult content,” a common job that information studies scholar Sarah T. Roberts refers to as “commercial content moderation.”25 This kind of content moderation requires someone like Kala in the loop precisely because words, as plain as they may seem, can mean many different things depending on who is reading and writing them. Artificial intelligence can learn and model some human deliberation like that between Kala and her sons, but it must be constantly updated to account for new slang or unexpected word use.

…

It’s time for social media companies that benefit greatly from this volunteer work to pitch in to the commons and pay for the diligence of co-moderators willing to take on the trolls. This could look like, following Amara’s example, the threading together of flash team members invested in content moderation as community work and, as the need arises, infusing those communities with the software upgrades, equipment, and pay to keep the commons of content moderation well nourished. There is no easy, free alternative, unless everyone decides to delete their social media accounts.

FIX 5: RÉSUMÉ 2.0 AND PORTABLE REPUTATION SYSTEMS

Since requesters can seamlessly enter and exit the market, independent workers are often at a disadvantage when it comes to getting a rating or recommendation after they finish a task.

Battle for the Bird: Jack Dorsey, Elon Musk, and the $44 Billion Fight for Twitter's Soul

by

Kurt Wagner

Published 20 Feb 2024

they wanted to haul Dorsey back to Washington: Kurt Wagner, “Twitter Allows Links to N.Y. Post Story, Backtracking Again,” Bloomberg, October 16, 2020, https://www.bloomberg.com/news/articles/2020-10-16/twitter-allows-links-to-n-y-post-story-backtracking-again?sref=dZ65CIng.
“Content moderation is incredibly… along the way”: Vijaya Gadde (@vijaya), “I’m grateful for everyone who has provided feedback and insights over the past day. Content moderation is incredibly difficult, especially in the critical context of an election. We are trying to act responsibly & quickly to prevent harms, but we’re still learning along the way.” Twitter, October 15, 2020, 7:06 p.m., https://twitter.com/vijaya/status/1316923560175296514.

…

Twitter, October 28, 2022, 5:09 a.m., https://twitter.com/elonmusk/status/1585966869122457600.
he would investigate whether it was being shadow-banned: Elon Musk (@elonmusk), “I will be digging in more today.” Twitter, October 28, 2022, 4:41 a.m., https://twitter.com/elonmusk/status/1585959864454459393.
pledged to form a “content moderation council”: Elon Musk (@elonmusk), “Twitter will be forming a content moderation council with widely diverse viewpoints. No major content decisions or account reinstatements will happen before that council convenes.” Twitter, October 28, 2022, 11:18 a.m., https://twitter.com/elonmusk/status/1586059953311137792.
“anyone suspended for minor & dubious reasons will be freed from Twitter jail”: Elon Musk (@elonmusk), “Anyone suspended for minor & dubious reasons will be freed from Twitter jail.”

…

A ABC, 199 Adeshola, TJ, 87, 103 advertising, 277 civil rights groups and, 238, 240 direct response, 119–20, 152 on Facebook, 120, 152, 234, 238 advertising on Twitter, 2, 5, 10–11, 33, 47–50, 53, 59, 72, 86–87, 108, 111, 119–20, 147, 152–53, 157, 164, 187, 188, 199, 218, 228, 258, 272, 276, 279, 289 Musk and, 232–36, 238–40, 243, 245–46, 248–52, 254, 264, 267, 284–85 Affleck, Ben, 57 Africa, 86–89, 91, 97, 103, 107–8, 139, 140 Agrawal, Parag, 5–6, 87, 88, 126, 143–44 executives fired by, 193, 199, 201 Mudge and, 207 Musk and, 148, 158, 159, 161–65, 180–81, 190, 191, 195, 199, 200, 202, 210, 220 Musk’s firing of, 221 as Twitter CEO, 143–48, 151–53, 157, 159, 161, 165, 180–84, 187, 190–93 and Twitter’s lawsuit against Musk, 202 Ahrendts, Angela, 24 Airbnb, 101 Ai Weiwei, 20 Al Adham, Mo, 82–83, 119 Alexander, Ali, 73, 284 Alibaba, 102 Allen & Company, 37 annual retreat of, 198–200 All-In, 190–91, 282 Al-Mahmoud, Mansoor Bin Ebrahim, 277 Alphabet, 4, 148 Amazon, 3, 24, 33, 37, 50, 62, 106 Alexa, 81 Web Services, 24 Amnesty International, 59 Andreessen, Marc, 205–6 Andreessen Horowitz, 186–87, 205–6, 217 Anti-Defamation League, 232, 238 antisemitism, 56, 232, 268, 284–85 AOL, 14, 24 Apple, 3, 24, 37, 50, 91, 233, 254, 285 App Store, 32–33 iPhone, 21, 152 iTunes, 16 Apprentice, The, 44 Arab Spring, 4, 10, 38 Ardern, Jacinda, 85 Argentina, 99 Armstrong, Tim, 24 artificial intelligence, 287 AT&T, 99 Athenahealth, 99 Atlantic, 209 Axel Springer SE, 160 B Babylon Bee, 153–54, 179, 271 Bain, Adam, 23, 25, 37, 40, 48 Baker, Jim, 124 Bankman-Fried, Sam, 174, 185 Baron Cohen, Sacha, 171 Barstool Sports, 138 Barton, Joe, 76–77 Bates, Tony, 24 Benioff, Marc, 35–36, 38, 158–59 Benson, Guy, 71, 72 Berland, Leslie, 65–67, 73, 195, 205, 213–14, 218, 236, 239, 280 Berlin, Erik, 240–42 Beykpour, Kayvon, 34, 81–83, 87, 119–21, 141, 152, 205, 224 firing of, 193, 199, 201, 224 Bezos, Jeff, 24, 50, 81, 199 Biden, Hunter, 123–25, 180–82, 220, 270 Biden, Joe, 123–24, 126–27, 206, 248, 270, 
286 Bieber, Justin, 163 Biles, Simone, 92, 153 Bilton, Nick, 20 Birchall, Jared, 159, 161, 189, 217, 260 Bitcoin, 5, 88, 137–43, 147, 206, 209 Blackburn, Jeff, 37 Blackburn, Marsha, 72 Black Lives Matter, 4, 34, 46 Blair, Tony, 253 blockchain, 88, 139, 144, 176, 185 Blogger, 15, 19 Bloomberg, 33, 100, 206 Bluesky, 88, 136–37, 141, 142, 144, 288–89 Boko Haram, 92 Boring Company, 171, 176, 186, 217, 231, 281 Borrman, Brandon, 125 Box, 277 Brady, Tom, 95 Brand, Dalana, 103 Bravo, Orlando, 185 Brazil, 223 Bridgewater Associates, 85 Brown, Michael, 46, 85 Buffett, Warren, 198 Burisma, 123, 270 Burke, Steve, 37 Bush, George W., 99, 253 Bush, Jeb, 45 Bush, Jonathan, 99 Business Insider, 193 ByteDance, 80 C Café Milano, 71–73 Calacanis, Jason, 174, 186, 190, 209, 217, 234, 282 Cape Coast Castle, 87 Carell, Steve, 9 Carlson, Tucker, 284 Carter, Flora, 138 CBS, 33, 162 CDC, 112, 113 celebrities, 10, 25, 57–59, 74, 92, 138, 141, 229, 250 Chancery Daily, 202–3 Chappelle, Dave, 273–74 Charlottesville white supremacist rally, 51, 59, 75 Charter Communications, 202 Chastain, Jessica, 57, 58 Chen, Jon, 225, 259 China, 196, 220 Christchurch mosque shootings, 85 Christie, Ron, 73 Cisco, 24 Citadel, 160 civil rights groups, 238, 240 Clinton, Hillary, 20, 34, 41, 42, 47–48, 50, 72, 86, 124 Clubhouse, 119 CNBC, 100, 224 CNN, 51, 72, 275 Coby, Gary, 48–49 Coca-Cola, 47 Code Conference, 47 Cohn, Jesse, 95, 99–101, 104–9, 143, 147, 156 Color of Change, 238 Comcast, 37, 198–99 Compaq Computer, 168 Compton, Kyle Wagner, 202 Congress, U.S., 83, 127, 134 Dorsey’s testimony before, 76–78, 125, 137–38, 140 conservatives, Republicans, 71–77, 84, 114, 125, 181, 196, 248, 269, 270, 284 conspiracy theories, 75, 233, 248 Consumer Price Index, 186 Conway, Kellyanne, 116, 272 Cook, Tim, 50 Cooper, Bradley, 25 Cornet, Manu, 215, 227–28 Costolo, Dick, 9–12, 20, 21, 53, 105, 181 Covid-19 pandemic, 106–8, 110–13, 115, 117–20, 124, 141, 142, 146, 147, 152, 153, 193, 196, 230, 232, 245, 267, 284 Cramer, Jim, 
100 Crawford, Esther, 230–31, 242, 288 Crowell, Colin, 52, 84 Cruz, Ted, 45, 125 cryptocurrencies, 144, 172, 174, 176, 185 Bitcoin, 5, 88, 137–43, 147, 206, 209 Dogecoin, 176 Cue, Eddy, 37 Culbertson, Lauren, 84 Currie, Peter, 23, 25 Curtis, Jamie Lee, 57 D Daily Mail, 224 Dalio, Ray, 85 Daly, Carson, 31 DARPA, 206 Davis, Dantley, 119 Davis, Steve, 176, 217, 231, 237–38, 281 Deadmau5, 138 Defense Department, 206 Delaware Court of Chancery, 200–210 Dell, 101 Democrats, 73, 77, 83, 84, 92, 114, 127, 196, 248 DeSantis, Ron, 173 Dictator, The, 171 Dillon, Seth, 154 Diplo, 142 Disney, 36–38, 96, 177, 199, 285 Dispatch Management Services, 13–14 DNet, 14 Dogecoin, 176 Döpfner, Mathias, 160 Dorsey, Jack, 21–25, 223, 288–89 Africa trip and plans of, 86–89, 91, 97, 103, 107–8, 139, 140 Analyst Day and, 118–22 Bitcoin as interest of, 5, 88, 137–43, 147, 209 Bluesky service of, 88, 136–37, 141, 142, 144, 288–89 at Café Milano dinner, 71–73 celebrities and, 25, 74, 138, 141 childhood of, 12–13, 66, 110 as coder, 13, 16, 139 as college student, 13 in congressional hearings, 76–78, 125, 137–38, 140 conservative outreach of, 71–74 Covid-19 pandemic and, 110, 112 early internet use of, 13 Elliott Management and, 96, 99–109, 118, 121, 143, 145–47, 156, 157, 228, 273, 289 first tech job of, 13 forty-second birthday of, 79 Gadde and, 53, 54, 84, 129–30, 172–73, 180–82 as introvert, 12, 15, 26, 66, 101 Kidd and, 13–15 lifestyle of, 26, 64–65, 79–80 Loomer and, 77, 140 management style of, 19, 31–32, 85–86, 109, 142, 182 meditation practice of, 26, 64, 66, 79, 86, 90 Miami trip of, 138–41 move to New York, 13–14 move to San Francisco, 14 Mudge and, 206–7 Musk and, 1–3, 68, 92–94, 103–4, 109, 142, 148, 155–57, 159, 161, 165, 172–73, 176, 179, 180–82, 186, 194, 209–10, 244–45, 263, 288–89 and Musk’s acquisition of Twitter, 1–3, 5, 176, 179, 180–82, 186, 210, 244–45, 288, 289 at Odeo, 15–17 political views of, 73 Rogen and, 58–59 as Square CEO, 12, 21–23, 25, 26–27, 74, 86–88, 96, 97, 100, 
101, 105, 139, 141, 145, 147 STAT.US idea of, 14 Storytime and, 110, 182 on Today show, 31, 32 travels and meetings in 2019, 85 Trump and, 46–47, 49–50, 54, 73, 84 “Trust” email of, 40–41 Twitter acquisition bids and, 35–41 Twitter Blue and, 228 Twitter board’s relationship with, 3, 101, 109, 145, 176, 182, 194 as Twitter CEO, 4, 9–12, 18–25, 26–29, 31–32, 35, 37–38, 40, 45, 54, 76, 79, 80, 96, 97, 100–109, 110, 118, 126, 135, 141, 143–45, 147–48, 153, 169, 182, 261 as Twitter chairman, 19, 20, 147 Twitter content moderation policies and, 54, 56, 58–61, 71–72, 112, 125, 129, 130, 135–37, 140, 155–57, 181–82, 268, 272–73, 288 Twitter Files and, 272 and Twitter lawsuit against Musk, 205 Twitter resignation of, 141–48, 151, 155, 156, 161, 273 at Twitter retreats, 28–30, 64–68, 85–86, 90–94 and Twitter’s banning of Trump, 130, 132, 133, 135–37, 147 Twitter’s transition from idea to company as viewed by, 1–2 Twitter three-year business plan and, 119–21 Dorsey, Marcia, 27, 64, 66, 73, 76, 90, 135 Dorsey, Tim, 66, 73, 90, 135 Dot Collector, 85 Dotcom, Kim, 267, 277 Duke, David, 51 Duncan, Jeff, 77 Durban, Egon, 101–6, 108, 157, 158 Duysak, Bahtiyar, 61–62 Dweck, Carol, 28 E eBay, 99–100, 169 Edgett, Sean, 117, 221 Ehrenpreis, Ira, 162 elections 2016 presidential, 34, 41, 42–52, 61–63, 77, 86, 114, 124, 125 2020 presidential, 73, 87, 111, 113–16, 118, 123–29, 131, 152, 270, 284 2022 midterm, 238, 243, 248, 254 Eli Lilly, 253–54 Elliott Management, 95–109, 118, 121, 126, 143, 145–47, 156, 157, 158, 228, 273, 289 Ellison, Larry, 163, 175, 177, 185, 186, 205, 209 ElonJet, 266, 275 email, 136 engineers, 217–18, 224–26, 243, 247, 261–65 Erdogan, Recep Tayyip, 277 ESPN, 38 Ethiopia, 87–88 Ezekwesili, Obiageli “Oby,” 92 F Facebook, 23, 28, 29, 32, 33, 36, 50, 57, 75, 77, 78, 80, 81, 85, 91, 106, 112, 119, 137, 158, 177, 277 advertising on, 120, 152, 234, 238 revenues of, 4, 98, 148 size of, 10 stock of, 98 Trump and, 49, 51–52, 134, 135 Falck, Bruce, 67, 90, 119–21, 152–53, 205 
firing of, 193, 199, 201 FBI, 270 Federal Trade Commission, 177, 207, 208, 258–60, 281 Ferguson protests, 45–46, 85 Fidelity, 279 Fiorina, Carly, 45 Flores, Mayra, 196, 197 Floyd, George, 117 Fogarty, Marianne, 259 Ford Motor Company, 238, 239 Fortune, 35 Fox, Martha Lane, 98, 158, 161 Fox & Friends, 116 Fox News, 71, 73, 116, 271, 284 free speech, 2, 5, 46, 47, 53–54, 59, 60, 154–57, 173, 196, 238–40, 246, 268, 269, 271, 275, 278, 284, 288 Fridman, Lex, 277, 279 Friedberg, David, 282 FriendFeed, 158 Frohnhoefer, Eric, 261–62 FTC, 177, 207, 208, 258–60, 281 FTX, 174, 185 Fuentes, Nick, 284 G Gadde, Vijaya, 37, 46, 53, 54, 60, 84, 117, 128–29, 132, 172–73, 192, 199 Biden laptop story and, 124, 125, 180–82, 220 Musk and, 180–81, 220–21 Musk’s firing of, 221–23 Trump ban and, 129–30, 135, 220 Gates, Bill, 95 Gates, Melinda, 153 G-Eazy, 138 General Electric, 198–99, 239 Ghana, 87, 99 Ginsburg, Ruth Bader, 201 Giuliani, Rudy, 123, 124 Gizmodo, 74–75 Glass, Noah, 17–18 Goldman, Jason, 5, 289 Goldman Sachs, 30, 37, 99, 104, 105, 178 Goodell, Roger, 33, 235, 240 Google, 3, 4, 9, 15, 24, 25, 37, 50, 99, 106, 120, 136, 137, 148, 158, 177, 234 GoPro, 24 Gracias, Antonio, 217, 226, 237, 260 Graham, Donald, 199 Graham, Lindsey, 125, 134 Graham, Paul, 277 Greene, Marjorie Taylor, 232–33 Greenfield, Ben, 79–80 Griffin, Ken, 160 Grimes (musician), 274–75 Grimes, Michael, 185, 188, 209 Gross, Andy, 257 Grutman, David, 138, 139 H hackers, 13, 206, 207, 258, 267 Hamas, 286 Hannity, Sean, 73 Harris, Kamala, 153 Harvey, Del, 52–54, 60, 67, 112, 117, 129, 132, 133 Hatching Twitter (Bilton), 20 hate speech, 38, 71, 114, 232, 233, 238, 245 Hawkins, Tracy, 231 Hayes, Julianna, 225, 259 Health and Human Services Department, 153 Ho, Ed, 60 Hoffman, Reid, 185 Horizon Media, 234 House Energy and Commerce Committee, 137 Huffington, Arianna, 45 Hulu, 24, 36 Hurricane Harvey, 92 I IBM, 285 Iger, Bob, 36, 38–39 Instagram, 29, 33, 39, 57, 80, 82, 98, 141, 275, 277, 278, 286, 287 International Space 
Station, 10, 91, 142 IPG, 238 iPhone, 21, 152 Iraq, 20 Isaacson, Walter, 151, 159, 214, 227, 281 ISIS, 11 Israel, 286 iTunes, 16 J James, LeBron, 34 Jamil, Jameela, 92 January 6 United States Capitol attack, 127–29, 131–33, 134–35, 197, 284 Jassy, Andy, 24, 25 Jay-Z, 1, 74, 204 Jeremy’s, 16 Jobs, Laurene Powell, 103 Jobs, Steve, 16, 103 Johnson, Peggy, 37 Jolly, David, 61 Jones, Alex, 75–77 JP Morgan, 178 Justice Department, 177, 206 K Kaiden, Robert, 237 Kardashian, Kim, 184 Kennedy, John F., 184 Kennedy, Trenton, 125 Kessler, Jason, 75 Kidd, Greg, 13–15 Kieran, Damien, 259, 281 Kilar, Jason, 24 Killian, Joseph, 280–81 Kim Jong-un, 54–55, 63 King, Gayle, 162, 173–74, 209 King, Stephen, 229 Kirk, Charlie, 73 Kissner, Lea, 259, 281 KKR, 201 Koenigsberg, Bill, 234–35 Kordestani, Omid, 24, 25, 37, 39, 74, 95, 97–99, 104, 108 Kraft, Robert, 204 Krishnan, Sriram, 217 Ku Klux Klan, 51 Kushner, Jared, 277 Kutcher, Ashton, 10 L Labor Department, 244 Lady Gaga, 91 Lamar, Kendrick, 210 Lauer, Matt, 31 Lawdragon, 202 Legend, John, 92 Leib, Ben, 261, 262 Lemkau, Gregg, 99, 101–2, 104, 105 Levchin, Max, 169 Levie, Aaron, 277 Levine, Rachel, 153–54 LinkedIn, 35, 37, 185 Lively, Blake, 184 Lonsdale, Joe, 160, 173 Loomer, Laura, 77, 140 López Obrador, Andrés Manuel, 134 Lord, Sierra, 87 Lucasfilm, 38 M Macron, Emmanuel, 85, 134–35, 266, 267 Mad Money, 100 Maheu, JP, 67, 235, 236, 239 Mars, 169–71, 183, 196, 200, 256, 271, 274 Massachusetts Institute of Technology, 14 Mastodon, 248, 275, 277 Maxwell, Ghislaine, 253 Mayer, Kevin, 36 Mayweather, Floyd, 138 Mbappé, Kylian, 277 McCain, John, 42 McConnell, Mitch, 116 McCormick, Kathaleen, 203, 204, 207–9 McGowan, Rose, 56–59, 71 McKelvey, Jim, 21 Mckesson, DeRay, 46 Merkel, Angela, 134 Merrill, Marc, 174 Messi, Lionel, 277 Messinger, Adam, 34 Meta, 3, 91 Met Gala, 184–86, 191 MeToo movement, 4 Miami, FL, 138–41, 190 Microsoft, 24, 35, 37, 136, 233 Middle East, 4, 10, 38 midterm elections of 2022, 238, 243, 248, 254 Milano, Alyssa, 57 
Mindset (Dweck), 28 Minneapolis protests, 117–18 Monroe, Marilyn, 184 Montano, Mike, 87 Morgan Stanley, 107, 174, 185 Mudge, 206–9, 222 Mujica, Maryam, 43 Murdoch, Rupert, 198 Musk, Andrew, 217, 226, 262 Musk, Elon, 50, 67–68, 103–4, 142, 166–71, 206 advisors of, 216–17, 224, 226, 282 Agrawal and, 148, 158, 159, 161–65, 180–81, 190, 191, 195, 199, 200, 202, 210, 220 Agrawal fired by, 221 at Allen & Company retreat, 198–200 Boring Company of, 171, 176, 186, 217, 231, 281 Calacanis and, 174, 186, 190, 209, 217, 234, 282 at Chappelle’s show, 273–74 childhood of, 166–68 children of, 171, 214, 216, 222, 234, 274, 283 college years of, 168 Dorsey and, 1–3, 68, 92–94, 103–4, 109, 142, 148, 155–57, 159, 161, 165, 172–73, 176, 179, 180–82, 186, 194, 209–10, 244–45, 263, 288–89 Dorsey and Twitter acquisition of, 1–3, 5, 176, 179, 180–82, 186, 210, 244–45, 288, 289 Ellison and, 175, 177, 185, 186, 205, 209 engineers and, 217–18, 247, 261–65 Gadde and, 180–81, 220–21 Gadde fired by, 221–23 journalists suspended by, 275, 276, 278 at Met Gala, 184–86, 191 move to Canada, 168 net worth of, 151, 171, 186, 189, 191, 192 Neuralink company of, 171 “pedo guy” tweet of, 93, 162, 172, 204 political views of, 196, 197, 217, 248 private plane of, 266–67, 275–76 Riley and, 154, 157, 179 Roth and, 222–23, 271–72, 287 sense of humor of, 162, 171–72, 182, 194, 213, 247–48 sexual harassment allegations against, 193–95 sink stunt of, 213, 218, 220, 251, 280, 282, 283 SpaceX company of, 2, 142, 151, 169–72, 186, 193, 200, 217, 226, 257, 260, 264, 266, 278, 281–82, 288 stalker and, 274–75 Taylor and, 158–59, 164–65 Tesla company of, see Tesla text messages of, 208–10 Trump reinstatement and, 234–35, 238, 239, 267–69, 278 Twitter acquired by, 1–6, 171–79, 180–97, 210, 213–15, 217, 221, 222, 224, 241, 244–45, 269, 271, 275, 277, 279, 287–89 Twitter acquisition as idea for, 154, 157, 164, 165 Twitter acquisition termination attempt, 5, 189–91, 195, 199–210, 213 Twitter advertising and, 232–36, 
238–40, 243, 245–46, 248–52, 254, 264, 267, 284–85 Twitter all-hands meeting held by, 255–56, 259, 260, 262 Twitter Blue and, 218–19, 228–30, 249–55, 260, 285 Twitter board of directors and, 103–4, 109, 156–65, 173, 175, 177–79, 186, 200, 210, 221, 281 Twitter content moderation and, 154–57, 160, 196, 222–23, 225, 233, 238–40, 243–46, 249, 266–72, 275–78, 284, 288 Twitter criticized by, 5, 164, 172–73, 175, 181, 190, 192, 195, 244 in Twitter due diligence meeting, 187–88 Twitter employees fired by, 262–63 Twitter employees’ view of, 162–63, 183, 193–97, 219, 241, 244–45, 264 Twitter Files and, 269–72 Twitter investment of, 151, 155, 157–61, 168, 173, 174, 179, 195, 199 Twitter lawsuit against, 200–210, 213, 221, 222, 269, 280 Twitter layoffs under, 196, 197, 216, 224–27, 231, 236–37, 240–45, 247, 254, 258–60, 264 Twitter office spaces turned into hotel rooms by, 280–81 Twitter #OneTeam appearance of, 92–94 Twitter plans and vision of, 174–75, 194–97, 217–18, 256–57, 269, 283–84, 287 in Twitter Q&A session, 194–97 Twitter remote workers and, 196, 197, 216, 226, 252, 255, 257, 260, 264 Twitter rent payments not paid by, 280 Twitter resignation poll conducted by, 278–79 Twitter senior leaders ousted by, 221 Twitter servers cut by, 280 Twitter under ownership of, 213–31, 232–46, 247–65, 266–82, 283–89 as Twitter user, 93–94, 103–4, 148, 151, 154–55, 159, 162–64, 171, 172, 181, 188–91, 194, 195, 215, 219, 220, 223, 225, 233–36, 240, 244, 245, 247–49, 255, 261–62, 267–68, 271–72, 274, 277, 278, 281, 284–87 work habits and expectations of, 227, 230, 257, 258, 260, 263, 265 at World Cup, 276–78 X.com company of, 169, 186, 283 Zip2 company of, 168–69, 227 Zuckerberg and, 287 Musk, Errol, 167 Musk, James, 217, 226, 262 Musk, Justine, 168, 169 Musk, Kimbal, 163, 168, 281 Musk, Maye, 166–68, 184, 185, 234 Musk, X, 214, 216, 222, 234, 274, 283 Myanmar, 78–79 N NAACP, 238 Nadella, Satya, 37 NASA, 10, 90–91 Navaroli, Anika, 131 Nawfal, Mario, 267 NBA, 33, 34, 235 NBC, 33, 53, 239 
NBCUniversal, 37, 198–99 Netflix, 36 Neuralink, 171 Nevo, Vivi, 25 New England Patriots, 204 Newman, Omarosa Manigault, 59 Newsom, Gavin, 111, 114 Newsweek, 261 Newton, Casey, 81 New Yorker, 56, 99 New York Post, 123–24, 126, 183, 270 New York Stock Exchange, 1, 10, 27, 178 New York Times, 20, 51, 56, 75, 79, 83, 85, 100, 202, 203, 275, 286 NFL, 30, 33–35, 40, 62, 63, 80, 204, 235 Nigeria, 87, 92 Norquist, Grover, 71 North Korea, 54–55, 63 Noto, Anthony, 30, 33, 34, 37, 39, 40, 80 Novak, Kim, 45 NPR, 100 O Obama, Barack, 10, 43 Observer, 203 Ocasio-Cortez, Alexandria, 185 Odeo, 15–18 Okonjo-Iweala, Ngozi, 87 Olbermann, Keith, 275 Omnicom Group, 254 Oracle, 175 Owens, Candace, 73 Owens, Rick, 85 P Pacini, Kathleen, 226 Page, Larry, 50 Palantir, 160 Paltrow, Gwyneth, 79 Paris Fashion Week, 85, 142, 210 Paul, Logan, 138 PayPal, 169, 186, 206, 216, 257 Pelosi, Nancy, 233 Pelosi, Paul, 233 Pence, Mike, 84, 128 Perica, Adrian, 37 Periscope, 34, 59 Perry, Katy, 163 Personette, Sarah, 236 Perverted Justice, 53 Philadelphia Eagles, 286 Pichai, Sundar, 137 Pichette, Patrick, 99, 101, 104, 108, 146 Pixar, 38 podcasts, 15–17 Podesta, John, 124 Politico, 286 Portnoy, Dave, 138–39 presidential election of 2016, 34, 41, 42–52, 61–63, 77, 86, 114, 124, 125 presidential election of 2020, 73, 87, 111, 113–16, 118, 123–29, 131, 152, 270, 284 Principles (Dalio), 85 Pringle, Lauren, 202–3 Project Veritas, 205 Putin, Vladimir, 188 Pyin Oo Lwin, 78 Q QAnon, 197 Qatar, 276–77 Qatar Investment Authority, 277 Quip, 158 R racism, 38, 46, 54, 56, 71, 80, 136, 232, 233, 245, 284–85 Raiyah bint Al-Hussein, Princess, 154 Read, Mark, 233–35, 238, 240 Reddit, 134 Republicans, conservatives, 71–77, 84, 114, 125, 181, 196, 248, 269, 270, 284 Rezaei, Behnam, 247, 260 Rice, Kathleen, 137–38 Riley, Talulah, 154, 157, 179 Riot Games, 174 Roberts, Brian, 37 Rock, Jay, 65 Roetter, Alex, 28 Rogan, Joe, 160, 172, 181, 209 Rogen, Seth, 58–59 Rohingya people, 78 Roth, Yoel, 113–17, 124, 129, 132, 239, 244, 
246, 247, 249, 251, 254, 259 Musk and, 222–23, 271–72, 287 resignation of, 258, 260 sexual consent tweet of, 271–72, 287 Rubin, Rick, 25, 142 Rubio, Marco, 45 Ruffalo, Mark, 57 Russia, 51–52, 124, 186, 188 S Sabet, Bijan, 19 Sacks, David, 186, 190, 206, 216–17, 224, 237, 282 Salesforce, 35–39, 98, 145, 158, 177 Salon, 271 Salt Bae, 277 Sandberg, Sheryl, 23, 50, 77–78 Sandy Hook Elementary School shooting, 75 SAP, 99 Saturday Night Live, 9 Savitt, Bill, 201–2 Scarborough, Joe, 83 Scavino, Dan, 84 Schlapp, Mercedes, 71–73 Second City, 9 Securities and Exchange Commission, 93, 155, 160, 172, 173, 177, 186, 195, 199 Segal, Ned, 67, 96, 97, 102, 108, 121, 187, 200, 221 Sequoia Capital, 186 Shevat, Amir, 197, 227, 242 Silver Lake, 101–6, 108, 109, 118, 157 Singer, Paul, 99 Snapchat, 80, 82, 98, 134, 141 Snowden, Edward, 277 social networking protocol, 136–37 SoFi, 80 Solomon, Sasha, 262 Sotheby’s, 201 South Africa, 86, 87 South by Southwest, 18 Spaceballs, 171 SpaceX, 2, 142, 151, 169–72, 186, 193, 200, 217, 226, 257, 260, 264, 266, 278, 281–82, 288 Spark Capital, 19 Spencer Stuart, 23–24 Spicer, Sean, 48–49, 51 Spiegel, Evan, 80 Spiro, Alex, 172–73, 189, 204, 207–8, 217, 235, 259–60 Squad, 230 Square, 21–22 Bitcoin and, 139, 141, 147 Dorsey as CEO of, 12, 21–23, 25, 26–27, 74, 86–88, 96, 97, 100, 101, 105, 139, 141, 145, 147 Stalin, Joseph, 148 Stanton, Katie, 28 Starbucks, 47 Starlink, 220 State Department, 20 STAT.US, 14 Steinberg, Marc, 99 stocks, 98 Tesla, 185, 186, 206, 257, 279 Twitter, 27, 28, 30, 35, 37, 39, 86, 87, 96, 98, 102, 109, 122, 143, 151, 155, 157–62, 173, 178, 199–200, 237 Stone, Biz, 17, 20 Stone, Roger, 72 Stop the Steal rally, 73, 284 Sullivan, Jay, 184 Sun Valley, ID, 198–200 Super Bowl, 286 Sweeney, Jack, 275 Swift, Taylor, 10, 163 Systrom, Kevin, 29 T Taibbi, Matt, 269 Taylor, Bret, 98, 145, 146, 157–59, 164–65, 200, 205, 221 Teigen, Chrissy, 57, 58, 92 Terrell, Alphonzo, 242 Tesla, 2, 50, 67, 93–94, 158, 163, 169–75, 177, 182, 217, 219, 220, 
224, 226, 227, 234, 239, 256, 257, 264, 266, 278, 281–82, 288 imposter account and, 253 stock of, 185, 186, 206, 257, 279 Thiel, Peter, 169 Threads, 286–87 Tidal, 1, 74 TikTok, 39–40, 80–81, 256 Timberlake, Justin, 95 Time Warner, 202 Today, 31, 32 Toff, Jason, 28 transgender people, 153–54, 162 Trump, Donald, 65, 71–73, 75, 92, 217, 277 Biden laptop story and, 123–24 Covid-19 pandemic and, 110, 111 Dorsey and, 46–47, 49–50, 54, 73, 84 Facebook and, 49, 51–52, 134, 135 Minneapolis protests and, 117–18 in presidential election of 2016, 34, 41, 42–52, 61–63 in presidential election of 2020, 73, 111, 114–16, 118, 123–29, 131, 152, 284 tweets of, 4, 41, 42–49, 51, 52, 54–55, 57, 59, 62–63, 75–77, 80, 83–87, 92, 111, 113–18, 123–24, 127–33, 219, 235, 248, 284 Twitter account deactivated by employee, 61–62, 223 Twitter offices visited by, 42–43, 46 Twitter’s banning of, 5, 129–33, 134–37, 147, 180, 192, 200, 220, 225, 270, 272–73 Twitter’s reinstatement of, 234–35, 238, 239, 267–69, 278 Trump Tower, 43, 50 Truth Social, 277 Turner, Sylvester, 92 Turning Point USA, 73 Twitter advertising on, 2, 5, 10–11, 33, 47–50, 53, 59, 72, 86–87, 108, 111, 119–20, 147, 152–53, 157, 164, 187, 188, 199, 218, 228, 258, 272, 276, 279, 289 advertising on, and Musk, 232–36, 238–40, 243, 245–46, 248–52, 254, 264, 267, 284–85 Agrawal as CEO of, 143–48, 151–53, 157, 159, 161, 165, 180–84, 187, 190–93 algorithms of, 28, 32, 63, 78, 80, 144, 218 alternatives to, 286–87, 289 Babylon Bee and, 154, 179, 271 Biden laptop story and, 124–25, 180–82, 220, 270 Big Sur event of, 85–86, 143 blue check verification on, 58, 162, 219, 229–30, 249–51, 253–55, 285–86 Bluesky offshoot of, 88, 136–37, 141, 142, 144, 288–89 Blue subscription service of, 152, 218–19, 228–30, 249–55, 260, 285 board of directors of, 3–5, 11–12, 19–21, 23–25, 27, 37–40, 74, 89, 96–98, 100–104, 106, 108, 109, 110, 120, 126, 143–47, 156–65, 173, 175–79, 182, 183, 186, 194, 200, 210, 221, 281, 288 bot and spam accounts and, 38, 71, 84, 
93–94, 103, 114, 172–73, 187–91, 199–201, 204, 205, 207–8, 223, 229, 250, 252, 254, 267, 278 celebrity users of, 57–59, 229, 250 character limit imposed by, 1, 17, 28, 31 conservatives’ accusation of bias from, 71–77, 125, 181, 269, 270, 284 content moderation policies of, 2, 38, 46, 52–55, 56–63, 71–72, 75, 77, 112–13, 128–32, 135–37, 140, 144, 180–82, 191–92, 220, 273, 288 content moderation policies of, and Musk, 154–57, 160, 196, 222–23, 225, 233, 238–40, 243–46, 249, 266–72, 275–78, 284, 288 corporate culture of, 5, 45–46, 72, 162, 182, 194, 230–31, 252, 262, 283 cost-cutting at, 39, 96, 175, 187, 192–93, 204, 216, 231, 237–38, 257, 260, 280–81, 288 Costolo as CEO of, 9–12, 20, 21, 105 Covid-19 and, 106–8, 110–13, 115, 118–20, 141, 142, 146, 147, 152, 153, 193, 196, 230, 245, 267, 284 disappearing post features on (Scribbles; Fleets), 82–83, 119, 141–42 Dorsey as CEO of, 4, 9–12, 18–25, 26–29, 31–32, 35, 37–38, 40, 45, 54, 76, 79, 80, 96, 97, 100–109, 110, 118, 126, 135, 141, 143–45, 147–48, 153, 169, 182, 261 Dorsey as chairman of, 19, 20, 147 Dorsey’s Africa plans and, 86–89, 91, 97, 107–8 Dorsey’s management style and, 19, 31–32, 85–86, 109, 142, 182 Dorsey’s resignation from, 141–48, 151, 155, 156, 161, 273 Dorsey’s “Trust” email on, 40–41 doxing and, 57, 275 Elliott Management and, 95–109, 118, 121, 126, 143, 145–47, 156, 157, 158, 228, 273, 289 emoji hashtag feature on, 47–49 engineers at, 217–18, 224–26, 243, 247, 261–65 founding and launch of, 1, 17–18 growth of, 1, 17–19, 62–63, 66, 80, 147 hacking of, 206, 207, 258 hiring at, 120–21, 153, 193, 201, 205 impersonator accounts and, 253–55, 260 important accounts at, 223, 229 influence of, 3–4, 10, 50, 147 Influence Council of, 239, 240, 248 investor Analyst Days of, 118–22, 137, 152 investors in, 1, 2, 10–11, 19, 28, 95–109, 118–22, 160, 162, 179, 183, 188, 199 layoffs at, 27, 28, 35, 37, 39, 40, 65, 96, 153, 175, 187, 192, 196, 197, 216, 224–27, 231, 236–37, 240–45, 247, 254, 258–60, 264 links to other 
social media sites on, 277–78 live content on, 32–36, 62, 80, 119 “lonely birds” problem of, 219 misinformation flagging by, 112–18, 127, 222, 232, 238, 243, 267, 284 Mudge’s whistleblower complaint against, 206–9, 222 Musk as user of, 93–94, 103–4, 148, 151, 154–55, 159, 162–64, 171, 172, 181, 188–91, 194, 195, 215, 219, 220, 223, 225, 233–36, 240, 244, 245, 247–49, 255, 261–62, 267–68, 271–72, 274, 277, 278, 281, 284–87 Musk as viewed by employees at, 162–63, 183, 193–97, 219, 241, 244–45, 264 Musk’s acquisition of, 1–6, 171–79, 180–97, 210, 213–15, 217, 221, 222, 224, 241, 244–45, 269, 271, 275, 277, 279, 287–89 Musk’s acquisition as possibility, 154, 157, 164, 165 Musk’s acquisition termination attempt, 5, 189–91, 195, 199–210, 213 Musk’s all-hands meeting at, 255–56, 259, 260, 262 Musk’s conversion of office spaces into hotel rooms at, 280–81 Musk’s criticisms of, 5, 164, 172–73, 175, 181, 190, 192, 195, 244 Musk’s due diligence meeting at, 187–88 Musk’s firing of employees at, 262–63 Musk’s investment in, 151, 155, 157–61, 168, 173, 174, 179, 195, 199 Musk’s nonpayment of bills of, 280 Musk’s ownership of, 213–31, 232–46, 247–65, 266–82, 283–89 Musk’s plans and vision for, 174–75, 194–97, 217–18, 256–57, 269, 283–84, 287 Musk’s Q&A session at, 194–97 Musk’s removal of servers at, 280 Musk’s resignation poll on, 278–79 Musk’s sink entrance at, 213, 218, 220, 251, 280, 282, 283 Musk’s suspensions of journalists on, 275, 276, 278 Musk sued by, 200–210, 213, 221, 222, 269, 280 Musk’s work expectations and, 227, 230, 257, 258, 260, 263, 265 as news source, 3, 4, 32–34, 41, 62, 283, 285–87 office space reductions at, 231 #OneTeam events of, 64–68, 74, 90–94, 96, 106, 143, 144 Periscope app of, 34, 59 presidential election of 2016 and, 34, 46–50, 77, 86, 114, 124, 125 privacy programs of, 207, 258–60, 281 as private company, 2, 3, 5, 161–62, 164–65, 173, 179, 237 product organization of, 80–83, 105 Project Saturn at, 191–92, 220 as public company, 1, 10, 109, 121, 
161–62, 164–65, 176, 179, 289 rebranded as X, 283–88 remote work and, 107, 121, 128, 174, 196, 197, 216, 226, 252, 255, 257, 260, 264 resignations of leaders at, 28–30, 258–61, 263 retreats for executives of, 28–30, 64–68, 74, 85–86, 90–94, 96, 106, 143, 144, 182 revenues of, 10, 23, 39, 86, 96–98, 108, 109, 111, 118–21, 147, 152–53, 162, 178, 204, 208, 228, 251, 279, 285 sale possibility and offers, 30, 35–41, 65, 177 shadow-banning by, 75–77, 220, 225, 270 Silver Lake and, 101–6, 108, 109, 118 size of, 4, 10, 50 Spaces feature of, 119, 242, 249, 251, 267 spike in offensive tweets and, 232–33, 238, 245 stock of, 27, 28, 30, 35, 37, 39, 86, 87, 96, 98, 102, 109, 122, 143, 151, 155, 157–62, 173, 178, 199–200, 237 succession plan at, 108, 126 Tea Time meetings of, 29, 30, 40 three-year plan of, 119–21, 147, 152, 192, 193 topic following as feature on, 83, 119 Trump banned by, 5, 129–33, 134–37, 147, 180, 192, 200, 220, 225, 270, 272–73 Trump reinstated by, 234–35, 238, 239, 267–69, 278 Trump’s account deactivated by employee at, 61–62, 223 Trump’s use of, 4, 41, 42–49, 51, 52, 54–55, 57, 59, 62–63, 75–77, 80, 83–87, 92, 111, 113–18, 123–24, 127–33, 219, 235, 248, 284 Trump’s visit to offices of, 42–43, 46 Trust and Safety team of, 52, 60, 61, 67, 112–15, 117, 124, 128, 131, 132, 192, 217, 222, 223, 239, 243–44, 246, 247, 254, 271 tweet view counts on, 219–20 Twitter Files dump and, 269–72 user base of, 1, 3, 10, 17, 18, 23, 28, 31, 53, 65, 82, 96, 98, 108, 109, 111, 118–21, 147, 152, 165, 178, 179, 187, 190, 191, 195, 196, 199–201, 204, 207, 208, 248, 276 Vine app of, 28, 35, 39–40, 81, 218, 256 World Cup and, 47, 48, 276–77, 279 Twitter, Inc. v.

The Internet of Garbage

by

Sarah Jeong

Published 14 Jul 2015

ON MODERN-DAY SOCIAL MEDIA CONTENT MODERATION

I will acknowledge that there is a very good reason why the debate focuses on content over behavior. It’s because most social media platforms in this era focus on content over behavior. Abuse reports are often examined in isolation. In an article for WIRED in 2014, Adrian Chen wrote about the day-to-day job of a social media content moderator in the Philippines, blasting through each report so quickly that Chen, looking over the moderator’s shoulder, barely had time to register what the photo was of. Present-day content moderation, often the realm of U.S.

…

All rights reserved.
Cover Design: Uyen Cao
Edited by Jennifer Eum and Annabel Lau

CONTENTS
I. THE INTERNET IS GARBAGE
  INTRODUCTION
  A THEORY OF GARBAGE
II. ON HARASSMENT
  HARASSMENT ON THE NEWS
  IS HARASSMENT GENDERED?
  ON DOXING
  A TAXONOMY OF HARASSMENT
  ON MODERN-DAY SOCIAL MEDIA CONTENT MODERATION
III. LESSONS FROM COPYRIGHT LAW
  THE INTERSECTION OF COPYRIGHT AND HARASSMENT
  HOW THE DMCA TAUGHT US ALL THE WRONG LESSONS
  TURNING HATE CRIMES INTO COPYRIGHT CRIMES
IV. A DIFFERENT KIND OF FREE SPEECH
  STUCK ON FREE SPEECH
  THE MARKETPLACE OF IDEAS
  SOCIAL MEDIA IS A MONOPOLY
  AGORAS AND RESPONSIBILITIES
V.

…

But most importantly, even if the focus on creating bright-line rules specific to harassment-as-content never shifts, looking at harassing behavior as the real harm is helpful. Bright-line rules should be crafted to best address the behavior, even if the rule itself applies to content.

Beyond Deletion

The odd thing about the new era of major social media content moderation is that it focuses almost exclusively on deletion and banning (respectively, the removal of content and the removal of users). Moderation isn’t just a matter of deleting and banning, although those are certainly options. Here is a range of options for post hoc content management, some of which are informed by James Grimmelmann’s article “The Virtues of Moderation,” which outlines a useful taxonomy for online communities and moderation:

• Deletion: self-explanatory

Likewar: The Weaponization of Social Media

by

Peter Warren Singer

and

Emerson T. Brooking

Published 15 Mar 2018

There Were More Than You Think,” Now I Know, December 10, 2012, http://nowiknow.com/remember-all-those-aol-cds-there-were-more-than-you-think/.
245 special screen names: Lisa Margonelli, “Inside AOL’s ‘Cyber-Sweatshop,’” Wired, October 1, 1999, https://www.wired.com/1999/10/volunteers/.
245 three-month training process: Jim Hu, “Former AOL Volunteers File Labor Suit,” CNET, January 2, 2002, https://www.cnet.com/news/former-aol-volunteers-file-labor-suit/.
245 minimum of four hours: Ibid.
245 14,000 volunteers: Margonelli, “Inside AOL’s ‘Cyber-Sweatshop.’”
245 “cyber-sweatshop”: Ibid.
245 $15 million: Lauren Kirchner, “AOL Settled with Unpaid ‘Volunteers’ for $15 Million,” Columbia Journalism Review, February 20, 2011, http://archives.cjr.org/the_news_frontier/aol_settled_with_unpaid_volunt.php.
245 a thousand graphic images: Olivia Solon, “Underpaid and Overburdened: The Life of a Facebook Moderator,” The Guardian, May 25, 2017, https://www.theguardian.com/news/2017/may/25/facebook-moderator-underpaid-overburdened-extreme-content.
246 a million pieces of content: Buni and Chemaly, “The Secret Rules of the Internet.”
246 a 74-year-old grandfather: Olivia Solon, “Facebook Killing Video Puts Moderation Policies Under the Microscope, Again,” The Guardian, April 17, 2017, https://www.theguardian.com/technology/2017/apr/17/facebook-live-murder-crime-policy.
246 an estimated 150,000 workers: Benjamin Powers, “The Human Cost of Monitoring the Internet,” Rolling Stone, September 9, 2017, https://www.rollingstone.com/culture/features/the-human-cost-of-monitoring-the-internet-w496279.
246 India and the Philippines: Adrian Chen, “The Laborers Who Keep Dick Pics and Beheadings out of Your Facebook Feed,” Wired, October 23, 2014, https://www.wired.com/2014/10/content-moderation/.
246 bright young college graduates: Sarah T. Roberts, “Behind the Screen: The People and Politics of Commercial Content Moderation” (presentation at re:publica 2016, Berlin, May 2, 2016), transcript available at Open Transcripts, http://opentranscripts.org/transcript/politics-of-commercial-content-moderation/.
247 reduced libido: Brad Stone, “Policing the Web’s Lurid Precincts,” New York Times, July 28, 2010, http://www.nytimes.com/2010/07/19/technology/19screen.html.
247 regular psychological counseling: Abby Ohlheiser, “The Work of Monitoring Violence Online Can Cause Real Trauma.

…

The rise and fall of AOL’s digital serfs foreshadowed how all big internet companies would come to handle content moderation. If the internet of the mid-1990s had been too vast for paid employees to patrol, it was a mission impossible for the internet of the 2010s and beyond. Especially when social media startups were taking off, it was entirely plausible that there might have been more languages spoken on a platform than total employees at the company. But as companies begrudgingly accepted more and more content moderation responsibility, the job still needed to get done. Their solution was to split the chore into two parts.

…

The first part was crowdsourced to users (not just volunteers but everyone), who were invited to flag content they didn’t like and prompted to explain why. The second part was outsourced to full-time content moderators, usually contractors based overseas, who could wade through as many as a thousand graphic images and videos each day. Beyond establishing ever-evolving guidelines and reviewing the most difficult cases in-house, the companies were able to keep their direct involvement in content moderation to a minimum. It was a tidy system tacked onto a clever business model. In essence, social media companies relied on their users to produce content; they sold advertising on that content and relied on other users to see that content in order to turn a profit.

Elon Musk

by

Walter Isaacson

Published 11 Sep 2023

Maybe he would tell them, either out of calculation or his compulsion to be brutally honest, what he really thought: that they were wrong to kick off Trump, that their content moderation policies crossed the line into unjustifiable censorship, that the staff had been infected by the woke-mind virus, that people should show up to work in person, and that the company was way overstaffed. The ensuing explosion might not scuttle the deal, but it could shake up the chessboard. Musk didn’t do that. Instead, he was rather conciliatory on these hot-button issues. Leslie Berland, the chief marketing officer of Twitter, began with the issue of content moderation. Instead of simply invoking his mantra about the goodness of free speech, Musk went deeper and made a distinction between what people should be allowed to post and what Twitter should cause to be amplified and spread.

…

“He seemed like someone who isn’t afraid of offending people,” Musk says, which for him is a more unalloyed compliment than it would be for most people. He invited Taibbi to spend time at Twitter headquarters rummaging through the old files, emails, and Slack messages of the company’s employees who had wrestled with content-moderating issues. Thus was launched what became known as “the Twitter Files,” which could and should have been a healthy linen-airing and transparency exercise, suitable for judicious reflection about media bias and the complexities of content moderation, except that it got caught in the vortex that these days sends people scurrying into their tribal bunkers on talk shows and social media. Musk helped stoke the reaction with excited arm-waving as he heralded the forthcoming Twitter threads with popcorn and fireworks emojis.

…

He also explained why he wanted to “open the aperture” of what speech was permissible on Twitter and avoid permanent bans of people, even those with fringe ideas. On talk radio and cable TV, there were separate information sources for progressives and conservatives. By pushing away right-wingers, the content moderators at Twitter, more than 90 percent of whom, he believed, were progressive Democrats, might be creating a similar Balkanization of social media. “We want to prevent a world in which people split off into their own echo chambers on social media, like going to Parler or Truth Social,” he said. “We want to have one place where people with different viewpoints can interact.”

An Ugly Truth: Inside Facebook's Battle for Domination

by

Sheera Frenkel

and

Cecilia Kang

Published 12 Jul 2021

A few months after the riots and the internet shutdown, he discovered why the platform might be so slow to respond to problems on the ground, or even to read the comments under posts. A member of Facebook’s PR team had solicited advice from the group on a journalist’s query regarding how Facebook handled content moderation for a country like Myanmar, in which more than one hundred languages are spoken. Many in the group had been asking the same thing, but had never been given answers either on how many content moderators Facebook had or on how many languages they spoke. Whatever Schissler and the others had assumed, they were stunned when a Facebook employee in the chat typed, “There is one Burmese-speaking rep on community operations,” and named the manager of the team.

…

One of the members of the Kenosha Guard who had administrative privileges to the page removed it.8 At the next day’s Q&A, Zuckerberg said there had been “an operational mistake” involving content moderators who hadn’t been trained to refer these types of posts to specialized teams. He added that upon the second review, those responsible for dangerous organizations realized that the page violated their policies, and “we took it down.” Zuckerberg’s explanation didn’t sit well with employees, who believed he was scapegoating content moderators, the lowest-paid contract workers on Facebook’s totem pole. And when an engineer posted on a Tribe board that Zuckerberg wasn’t being honest about who had actually removed the page, many felt they had been misled.

…

The First Amendment was meant to protect society. And ad targeting that prioritized clicks and salacious content and data mining of users was antithetical to the ideals of a healthy society. The dangers present in Facebook’s algorithms were “being co-opted and twisted by politicians and pundits howling about censorship and miscasting content moderation as the demise of free speech online,” in the words of Renée DiResta, a disinformation researcher at Stanford’s Internet Observatory. “There is no right to algorithmic amplification. In fact, that’s the very problem that needs fixing.”10 It was a complicated issue, but to some, at least, the solution was simple.

Facebook: The Inside Story

by

Steven Levy

Published 25 Feb 2020

had only been viewed live: VP and Deputy General Counsel of Facebook Chris Sonderby posted “Update on New Zealand,” Facebook Newsroom, March 18, 2019. came from academics: There have been several deep studies of content moderators and policy by academics, notably Sarah T. Roberts, Behind the Screen: Content Moderation in the Shadows of Social Media (Yale University Press, 2019); Tarleton Gillespie, Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions That Shape Social Media (Yale University Press, 2018); and Kate Klonick, “The New Governors: The People, Rules, and Processes Governing Online Speech,” Harvard Law Review, April 10, 2018.

…

Since 2012, when Facebook started centers in Manila and India, it has used outsourcing companies to hire and employ the workers. They can’t attend all-hands meetings and they don’t get Facebook swag. Facebook is not the only company to use content moderators: Google, Twitter, and even dating apps like Tinder have the need to monitor what happens on their platforms. But Facebook uses the most. While a global workforce of content moderators was slowly building to tens of thousands, it was at first a largely silent phenomenon. The first awareness came from academics. Sarah T. Roberts, then a graduate student, assumed, like most people, that artificial intelligence did the job, until a computer-science classmate explained how primitive AI was back then.

…

Meanwhile, it’s left to the 15,000 or so content moderators to actually determine what stuff crosses the line, forty seconds at a time. In Phoenix I asked the moderators I was interviewing whether they felt that artificial intelligence could ever do their jobs. The room burst out in laughter.

The most difficult calls that Facebook has to make are the ones where following the rule book creates an outcome that seems just plain wrong. For some of these, moderators “elevate” the situation to full-time employees, and sometimes off-site to the people who sit in the Content Moderation meetings. The toughest ones are sometimes elevated to Everest, to the worktables of Sandberg and Zuckerberg.

Reset

by

Ronald J. Deibert

Published 14 Aug 2020

It was a “bug,” said Guy Rosen: Associated Press. (2020, March 17). Facebook bug wrongly deleted authentic coronavirus news. Retrieved from https://www.ctvnews.ca/health/coronavirus/facebook-bug-wrongly-deleted-authentic-coronavirus-news-1.4857517; Human social media content moderators have extremely stressful jobs, given the volume of potentially offensive and harmful posts: Roberts, S. T. (2019). Behind the screen: Content moderation in the shadows of social media. Yale University Press; Kaye, D. A. (2019). Speech police: The global struggle to govern the internet. Columbia Global Reports; Jeong, S. (2015). The internet of garbage. Forbes Media.

…

Retrieved from https://www.theverge.com/2020/3/16/21182726/coronavirus-covid-19-facebook-google-twitter-youtube-joint-effort-misinformation-fraud “Platforms should be forced to earn the kudos they are getting”: Douek, E. (2020, March 25). COVID-19 and social media content moderation. Retrieved from https://www.lawfareblog.com/covid-19-and-social-media-content-moderation Mandatory or poorly constructed measures could be perverted as an instrument of authoritarian control: Lim, G., & Donovan, J. (2020, April 3). Republicans want Twitter to ban Chinese Communist Party accounts. That’s a dangerous idea. Retrieved from https://slate.com/technology/2020/04/republicans-want-twitter-to-ban-chinese-communist-party-accounts-thats-dangerous.html; Lim, G. (2020).

…

At first, Facebook announced that it would remove posts containing misinformation about the virus, while YouTube, Twitter, and Reddit claimed that inaccurate information about health was not a violation of their terms-of-service policies, leaving the algorithms to surface content that grabbed attention, stoked fear, and fuelled conspiracy theorizing. After sending human content moderators into self-isolation, Facebook turned to artificial intelligence tools instead, with unfortunate results. The machine-based moderation system swept through, but mistakenly removed links to even official government-related health information.92 Gradually, each of the platforms introduced measures to point users to credible health information, flag unverified information, and remove disinformation — but in an uncoordinated fashion and with questionable results.93 The platforms’ intrinsic bias towards speed and volume of posts made the measures inherently ineffective, allowing swarms of false information to circulate unimpeded.

Superbloom: How Technologies of Connection Tear Us Apart

by

Nicholas Carr

Published 28 Jan 2025

One woman in the field compared herself and her colleagues to the “sin eaters” of the seventeenth century, desperate paupers who would be given a little money to eat bread at funerals in order to assume the sins of the deceased.24 Social media firms have gone to great lengths to keep the grim modern ritual of content moderation hidden from the public. The work conflicts with the upbeat image they like to promote. It also grates against their political ideals. Like other internet entrepreneurs, the founders of the major social networks lean toward libertarianism. They’re free-speech absolutists who celebrate radical “openness” and “transparency” and find any form of censorship repellent, especially when it occurs on the net. Given the choice, most of them would not have gotten into the content-moderation business at all. But as executives beholden to shareholders, they had no choice.

…

(New York: NYU Stern Center for Business and Human Rights, 2020). 20. Adrian Chen, “Inside Facebook’s Outsourced Anti-Porn and Gore Brigade, Where ‘Camel Toes’ Are More Offensive than ‘Crushed Heads,’ ” Gawker, February 16, 2012. The manual itself, oDesk, Abuse Standards 6.2: Operation Manual for Live Content Moderators, is archived at https://perma.cc/2JQF-AWMY. 21. Alex Hern, “FamilyOFive: YouTube Bans ‘Pranksters’ after Child Abuse Conviction,” Guardian, July 19, 2018. 22. Interview on Good Morning America, ABC News, April 28, 2017. 23. Lauren Weber and Deepa Seetharaman, “The Worst Job in Technology: Keeping Facebook Clean,” Wall Street Journal, December 28, 2017. 24. Sarah T. Roberts, Behind the Screen: Content Moderation in the Shadows of Social Media (New Haven, CT: Yale University Press, 2019), 162–165. 25. Klonick, “New Governors.” 26. Quoted in Miguel Helft, “Facebook’s Mean Streets,” New York Times, December 13, 2010. 27. John Milton, Areopagitica, in The Essential Prose of John Milton (New York: Modern Library, 2013), 209. 28. The Life and Selected Writings of Thomas Jefferson (New York: Modern Library, 2004), 299. 29. Abrams et al. v United States, 250 U.S. 616 (1919). 30. Michael J.

…

After Google bought YouTube in October of 2006, the search giant immediately faced difficult decisions about disturbing videos posted to the site, often by anonymous users. In December, two videos of Saddam Hussein’s execution appeared. One showed his hanging; the other, his corpse. Though both seemed to violate a company policy prohibiting graphic violence, YouTube’s content-moderation team, led by one of Google’s top corporate lawyers, decided to allow the video of the hanging, deeming it of historical importance, even as it removed the video of the body. Not long after, a mysterious, grainy video of a man being severely beaten by several other men was uploaded to the site.

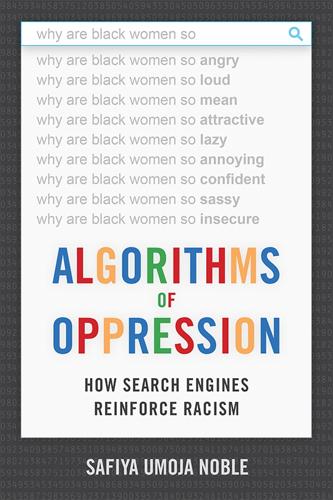

Algorithms of Oppression: How Search Engines Reinforce Racism

by

Safiya Umoja Noble

Published 8 Jan 2018

Commercial platforms such as Facebook and YouTube go to great lengths to monitor uploaded user content by hiring web content screeners, who at their own peril screen illicit content that can potentially harm the public.78 The expectation of such filtering suggests that such sites vet content on the Internet on the basis of some objective criteria that indicate that some content is in fact quite harmful to the public. New research conducted by Sarah T. Roberts in the Department of Information Studies at UCLA shows the ways that, in fact, commercial content moderation (CCM, a term she coined) is a very active part of determining what is allowed to surface on Google, Yahoo!, and other commercial text, video, image, and audio engines.79 Her work on video content moderation elucidates the ways that commercial digital media platforms currently outsource or in-source image and video content filtering to comply with their terms of use agreements. What is alarming about Roberts’s work is that it reveals the processes by which content is already being screened and assessed according to a continuum of values that largely reflect U.S.

…