Machine Learning Design Patterns: Solutions to Common Challenges in Data Preparation, Model Building, and MLOps

by

Valliappa Lakshmanan

,

Sara Robinson

and

Michael Munn

Published 31 Oct 2020

To solve this with transfer learning, we’ll need to find a model that has already been trained on a large dataset to do image classification. We’ll then remove the last layer from that model, freeze the weights of that model, and continue training using our 400 x-ray images. We’d ideally find a model trained on a dataset with similar images to our x-rays, like images taken in a lab or another controlled condition. However, we can still utilize transfer learning if the datasets are different, so long as the prediction task is the same. In this case we’re doing image classification. You can use transfer learning for many prediction tasks in addition to image classification, so long as there is an existing pre-trained model that matches the task you’d like to perform on your dataset.
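The freeze-and-retrain recipe described above can be sketched in a few lines using only the Python standard library. This is an illustration under stated assumptions, not the authors' code: `frozen_features` is a hypothetical stand-in for a pre-trained network with its last layer removed, the toy inputs are made-up numbers rather than x-ray images, and the new final layer is a simple logistic-regression head.

```python
import math

# Hypothetical stand-in for a pre-trained model with its last layer removed.
# These weights are "frozen": the training loop below never changes them.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def frozen_features(x):
    """Map a raw input to features using the fixed, pre-trained weights (ReLU units)."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(inputs, labels, epochs=500, lr=0.5):
    """Train only the new final layer on top of the frozen features."""
    head_w = [0.0] * len(FROZEN_WEIGHTS)
    head_b = 0.0
    for _ in range(epochs):
        for x, y in zip(inputs, labels):
            f = frozen_features(x)
            p = sigmoid(sum(w * v for w, v in zip(head_w, f)) + head_b)
            err = p - y  # gradient of the log loss with respect to the logit
            head_w = [w - lr * err * v for w, v in zip(head_w, f)]
            head_b -= lr * err
    return head_w, head_b


def predict(head_w, head_b, x):
    """Probability of the positive class for one input."""
    f = frozen_features(x)
    return sigmoid(sum(w * v for w, v in zip(head_w, f)) + head_b)


# Made-up toy data standing in for the small labeled dataset (1 = positive class).
toy_inputs = [[2.0, 0.0], [3.0, 1.0], [0.0, 2.0], [1.0, 3.0]]
toy_labels = [1, 1, 0, 0]

frozen_before = [row[:] for row in FROZEN_WEIGHTS]
head_w, head_b = train_head(toy_inputs, toy_labels)
```

In a real project the frozen part would be a published image model loaded through a deep learning library; the point here is only that the training loop touches the new head and nothing else.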

…

We recommend using Adam and ReLU; in our experience, the potential for improvements in performance by making different choices in these sorts of things tends to be minor.

ML model architectures

If you are doing image classification, we recommend that you use an off-the-shelf model like ResNet or whatever the latest hotness is at the time you are reading this. Leave the design of new image classification or text classification models to researchers who specialize in this problem.

Model layers

You won’t find convolutional neural networks or recurrent neural networks in this book. They are doubly disqualified: first, for being a building block and second, for being something you can use off-the-shelf.

…

For example, use a model that has been pre-trained on photographs if you are going to use it for photograph classification and a model that has been pre-trained on remotely sensed imagery if you are going to use it to classify satellite images. By similar task, we’re referring to the problem being solved. To do transfer learning for image classification, for example, it is better to start with a model that has been trained for image classification, rather than object detection. Continuing with the example, let’s say we’re building a binary classifier to determine whether an image of an x-ray contains a broken bone. We only have 200 images of each class: broken and not broken. This isn’t enough to train a high-quality model from scratch, but it is sufficient for transfer learning.

Hands-On Machine Learning With Scikit-Learn and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems

by

Aurélien Géron

Published 13 Mar 2017

These popular activation functions and their derivatives are represented in Figure 10-8.

Figure 10-8. Activation functions and their derivatives

An MLP is often used for classification, with each output corresponding to a different binary class (e.g., spam/ham, urgent/not-urgent, and so on). When the classes are exclusive (e.g., classes 0 through 9 for digit image classification), the output layer is typically modified by replacing the individual activation functions by a shared softmax function (see Figure 10-9). The softmax function was introduced in Chapter 3. The output of each neuron corresponds to the estimated probability of the corresponding class. Note that the signal flows only in one direction (from the inputs to the outputs), so this architecture is an example of a feedforward neural network (FNN).
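As a minimal sketch of the shared softmax output described above, the function below turns raw output-layer scores into probabilities for mutually exclusive classes. The scores are made-up numbers, and subtracting the maximum is a common numerical-stability trick that is not mentioned in the excerpt.

```python
import math


def softmax(logits):
    """Convert raw output-layer scores into probabilities that sum to 1."""
    # Subtracting the maximum keeps exp() from overflowing; the result is
    # unchanged because softmax is invariant to adding a constant to all scores.
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Illustrative scores for three mutually exclusive classes (made-up numbers).
probs = softmax([2.0, 1.0, 0.1])
predicted_class = probs.index(max(probs))
```

The predicted class is simply the index with the highest probability, which is also the index with the highest raw score.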

…

In summary, for many problems you can start with just one or two hidden layers and it will work just fine (e.g., you can easily reach above 97% accuracy on the MNIST dataset using just one hidden layer with a few hundred neurons, and above 98% accuracy using two hidden layers with the same total amount of neurons, in roughly the same amount of training time). For more complex problems, you can gradually ramp up the number of hidden layers, until you start overfitting the training set. Very complex tasks, such as large image classification or speech recognition, typically require networks with dozens of layers (or even hundreds, but not fully connected ones, as we will see in Chapter 13), and they need a huge amount of training data. However, you will rarely have to train such networks from scratch: it is much more common to reuse parts of a pretrained state-of-the-art network that performs a similar task.

…

The vanishing gradients problem was strongly reduced, to the point that they could use saturating activation functions such as the tanh and even the logistic activation function. The networks were also much less sensitive to the weight initialization. They were able to use much larger learning rates, significantly speeding up the learning process. Specifically, they note that “Applied to a state-of-the-art image classification model, Batch Normalization achieves the same accuracy with 14 times fewer training steps, and beats the original model by a significant margin. […] Using an ensemble of batch-normalized networks, we improve upon the best published result on ImageNet classification: reaching 4.9% top-5 validation error (and 4.8% test error), exceeding the accuracy of human raters.”

AIQ: How People and Machines Are Smarter Together

by

Nick Polson

and

James Scott

Published 14 May 2018


Google debuted its first such car in 2009. But you’ll learn in chapter 3 that one of the main ideas behind how these cars work was discovered by a Presbyterian minister in the 1750s—and that this idea was used by a team of mathematicians over 50 years ago to solve one of the Cold War’s biggest blockbuster mysteries. Or take image classification, like the software that automatically tags your friends in Facebook photos. Algorithms for image processing have gotten radically better over the last five years. But in chapter 2, you’ll learn that the key ideas here date to 1805—and that these ideas were used a century ago, by a little-known astronomer named Henrietta Leavitt, to help answer one of the deepest scientific questions that humans have ever posed: How big is the universe?

…

You may recall the story of Makoto Koike, who built a cucumber sorter that exploited the pattern-recognition capabilities of AI. There the input was an image, the output was a decision to sort the cucumber into one of nine different classes, and the pattern was the relationship between the cucumber’s visual features and its class. In AI, that’s called “image classification,” and it’s used everywhere—by toilets in Beijing; by Facebook, to identify your friends in untagged photos; and by CERN, the huge physics lab in Geneva, to detect collisions between subatomic particles in images from high-energy physics experiments. But the input doesn’t have to be an image.

Four Battlegrounds

by

Paul Scharre

Published 18 Jan 2023

If the distribution of the real-world data is shifted (changed) from the training data, then the system may not be robust—that is, it may not be capable enough to handle that change without a degradation in performance. A computer vision system trained on video or images taken during sunny days may not perform as well during cloudy days. These problems are common in image classification systems, and have resulted in increased scrutiny of high-consequence applications, such as law enforcement use of facial recognition systems. In some settings, these failures can have fatal consequences. Problems with computer vision and image classification have contributed to self-driving cars and Tesla cars on autopilot striking pedestrians, semitrailers, concrete barriers, fire trucks, and parked cars, leading to several fatal accidents.
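The sunny-versus-cloudy failure described above can be illustrated with a toy example. This is a sketch under heavy assumptions: each "image" is reduced to a single made-up brightness reading, the classifier is a bare threshold, and cloud cover is modeled as a fixed drop in brightness.

```python
def fit_threshold(values, labels):
    """Pick the midpoint between the two class means as the decision threshold."""
    pos = [v for v, y in zip(values, labels) if y == 1]
    neg = [v for v, y in zip(values, labels) if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def accuracy(threshold, values, labels):
    """Fraction of readings classified correctly by the threshold rule."""
    correct = sum((v > threshold) == bool(y) for v, y in zip(values, labels))
    return correct / len(labels)


# Made-up brightness readings, one per image; label 1 = object present.
sunny_values = [0.9, 0.8, 0.85, 0.5, 0.4, 0.45]
sunny_labels = [1, 1, 1, 0, 0, 0]

# Same scenes under cloud: every reading is darker by a fixed amount (assumed shift).
cloudy_values = [v - 0.3 for v in sunny_values]
cloudy_labels = sunny_labels

threshold = fit_threshold(sunny_values, sunny_labels)
sunny_acc = accuracy(threshold, sunny_values, sunny_labels)
cloudy_acc = accuracy(threshold, cloudy_values, cloudy_labels)
```

The threshold learned on sunny data separates the sunny readings perfectly, but once every reading is shifted darker, all the positive examples fall below it and accuracy drops to chance.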

…


Yet some of these ties have come under fire for being too close to Chinese surveillance applications, human rights abuses, and military use. Pan proudly outlined Microsoft Research Asia’s contributions to basic AI research, which are impressive. In 2016, a research team out of the Beijing office was the first to surpass human-level performance in image classification. The team’s 2015 paper on “deep residual learning” was the most-cited AI paper from 2014 to 2019. Over the past twenty years, the Beijing research lab has published 5,000 papers in top-tier journals or conferences. “That’s, on average, every working day we publish one paper,” Pan said. Yet it was the handful of papers reportedly coauthored with PLA scientists that had landed Microsoft in hot water with U.S. legislators.

Rage Inside the Machine: The Prejudice of Algorithms, and How to Stop the Internet Making Bigots of Us All

by

Robert Elliott Smith

Published 26 Jun 2019

Similar stories include the almost comical demonstration of a ‘racist’ soap dispenser, which refused to squirt cleanser onto dark-skinned hands,3 and the troubling 2015 Guardian report that Google’s image classification system had labelled a dark-skinned couple as ‘gorillas’.4 Google quickly reported that they had taken action to prevent this offensive categorization of people as animals from happening again. It wasn’t clear what Google had done to correct it until three years later, when Wired magazine tested Google’s image classification with pictures of 40,000 animals. They found that while other animals received the correct labels, none of the images of great apes did. Wired asked Google what had happened, and they confirmed that they had fixed the racist image classification error by simply removing their algorithm’s ability to classify any image as being of an ‘ape’, ‘gorilla’, ‘chimpanzee’, and so on.5 Why was the response to this algorithm’s error the prevention of any image being labelled as being of apes, even when that classification was correct?

…

The great body of human text from the past contains biases about things like gender, race and religion. Since many of those ideas are about simplified features of people leading to their categorization, it seems natural that algorithms that explicitly search for simplifying features by which to categorize things are liable to tune into these biases. Moreover, just like today’s advanced image classification algorithms, most advanced algorithms for understanding and generating human language are based on incomprehensible, deep-learning neural networks, such that biases are lost in a sea of parameters and maths. As with image processing, these algorithms have no understanding of what they are doing; they are merely processing numerical features, in a manner that has little to do with the way humans think or communicate.

Machine, Platform, Crowd: Harnessing Our Digital Future

by

Andrew McAfee

and

Erik Brynjolfsson

Published 26 Jun 2017

One of Logic Theorist’s proofs, in fact, was so much more elegant than the one in the book that Russell himself “responded with delight” to it. Simon announced that he and his colleagues had “invented a thinking machine.” Other challenges, however, proved much less amenable to a rule-based approach. Decades of research in speech recognition, image classification, language translation, and other domains yielded unimpressive results. The best of these systems achieved much worse than human-level performance, and the worst were memorably bad. According to a 1979 collection of anecdotes, for example, researchers gave their English-to-Russian translation utility the phrase “The spirit is willing, but the flesh is weak.”

…

The definitions of “enough” and “strong enough” here change over time, depending on feedback, as does the importance, called the “weight,” that a neuron gives to each of its inputs. Out of this strange, complex, constantly unfolding process come memories, skills, System 1 and System 2, flashes of insight and cognitive biases, and all the other work of the mind. The Perceptron didn’t try to do much of this work. It was built just to do simple image classification. It had 400 light-detecting photocells randomly connected (to simulate the brain’s messiness) to a single layer of artificial neurons. An early demonstration of this “neural network,” together with Rosenblatt’s confident predictions, led the New York Times to write in 1958 that it was “the embryo of an electronic computer that [the Navy] expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence.”
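The idea of weights that change in response to feedback can be sketched with the classic perceptron learning rule. This is an illustration only, not a model of Rosenblatt's hardware: the four-"photocell" images and their labels are made up, and a single artificial neuron stands in for the whole layer.

```python
def step(z):
    """Fire (1) when the weighted input is strong enough, otherwise stay silent (0)."""
    return 1 if z >= 0 else 0


def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Adjust weights only when the neuron's output disagrees with the label."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            pred = step(sum(w * v for w, v in zip(weights, x)) + bias)
            error = y - pred  # feedback signal: +1, 0, or -1
            weights = [w + lr * error * v for w, v in zip(weights, x)]
            bias += lr * error
    return weights, bias


def classify(weights, bias, x):
    return step(sum(w * v for w, v in zip(weights, x)) + bias)


# Made-up 4-"photocell" images: label 1 when the left half is lit.
images = [[1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1], [0, 0, 0, 1]]
labels = [1, 1, 0, 0]
weights, bias = train_perceptron(images, labels)
```

On this toy data the rule settles after a few passes, with positive weights on the left-hand cells and negative weights on the right-hand ones.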

…

The phenomenon of “big data” (the recent explosion of digital text, pictures, sounds, videos, sensor readings, and so on) has been almost as important to machine learning as Moore’s law. Just as a young child needs to hear a lot of words and sentences in order to learn language, machine learning systems need to be exposed to many examples in order to improve in speech recognition, image classification, and other tasks. We now have an effectively endless supply of such data, with more generated all the time. The kinds of systems built by Hinton, LeCun, Ng, and others have the highly desirable property that their performance improves as they see more and more examples. This happy phenomenon led Hinton to say, a bit modestly, “Retrospectively, [success with machine learning] was just a question of the amount of data and the amount of computations.”

The Alignment Problem: Machine Learning and Human Values

by

Brian Christian

Published 5 Oct 2020

It’s a beautiful, if painstaking, prescription for artistic practice: anyone who can tell good art from bad can be a creator. All you need is good taste, random variations, and plenty of time. Techniques like these not only open up a vast space of aesthetic possibility but have important diagnostic uses as well. For example, Mordvintsev, Olah, and Tyka used their start-from-static technique to have an image classification system “generate” images that would maximally resemble all of its different categories. “In some cases,” they write, “this reveals that the neural net isn’t quite looking for the thing we thought it was.” For example, pictures that maximized the “dumbbell” categorization included surreal, flesh-colored, disembodied arms.

…

If deep networks could look at tens of thousands of raw pixels and figure out whether they were a bagel, a banjo, or a butterfly, maybe they could do whatever feature-construction was needed to render an Atari screen intelligible. He recalls, “The group said, Hey, we have these convolutional networks. They’ve been phenomenal at doing image classification. Um, what if we replace your feature-construction mechanism, which is still a bit of a kludge, by just a convolutional neural network?” Bellemare, again, wasn’t buying it. “I was actually a disbeliever for a very long time. . . . The idea of doing perceptual RL was very, very strange. And, you know, there was a healthy dose of skepticism as to what you could do with neural networks.”

…

See Zeiler et al., “Deconvolutional Networks,” and Zeiler, Taylor, and Fergus, “Adaptive Deconvolutional Networks for Mid and High Level Feature Learning.” 66. By 2014, nearly all of the groups competing on the ImageNet benchmark were using these techniques and insights. See Simonyan and Zisserman, “Very Deep Convolutional Networks for Large-Scale Image Recognition”; Howard, “Some Improvements on Deep Convolutional Neural Network Based Image Classification”; and Simonyan, Vedaldi, and Zisserman, “Deep Inside Convolutional Networks.” In 2018 and 2019, there was some internal controversy within Clarifai over whether its image-recognition software would be used for military applications; see Metz, “Is Ethical A.I. Even Possible?” 67. Inspiration for their approach included Erhan et al., “Visualizing Higher-Layer Features of a Deep Network,” along with other prior and contemporaneous research; see Olah, “Feature Visualization” for a more complete history and bibliography.

The Road to Conscious Machines

by

Michael Wooldridge

Published 2 Nov 2018

If you look at the ‘frisbee’ category, then you’ll see that really the only thing they feature in common is, well, frisbees. In some images, of course, the frisbees are being thrown from one person to another, but in some, the frisbee is on a table, with nobody in view. They are all different – except that they all feature frisbees. The eureka moment for image classification came in 2012, when Geoff Hinton and two colleagues, Alex Krizhevsky and Ilya Sutskever, demonstrated a system called AlexNet, a neural net that dramatically improved performance in an international image recognition competition.10 The final ingredient required to make deep learning work was raw computer-processing power.

…

Figure 17 Panda or gibbon? A neural network correctly classifies image (a) as a panda. However, it is possible to adjust this image in a way that is not detectable to a human so that the same neural network classifies the resulting image (b) as a gibbon. While we might not be overly concerned if our image classification program incorrectly classified our collection of animal images, adversarial machine learning has thrown up much more disturbing examples. For example, it turns out that road signs can be altered in such a way that, while a human has no difficulty interpreting them, they are completely misunderstood by the neural nets in a driverless car.
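The panda-to-gibbon flip can be illustrated on a toy linear classifier. The excerpt does not say how the adversarial image was produced, so this sketch assumes one common approach: nudging every pixel by a small amount in the direction that lowers the classifier's score (the sign of the gradient, which for a linear model is the sign of each weight). The weights and the eight-pixel "image" are made-up numbers.

```python
def score(weights, bias, x):
    """Linear score: positive means 'panda', otherwise 'gibbon'."""
    return sum(w * v for w, v in zip(weights, x)) + bias


def label(weights, bias, x):
    return "panda" if score(weights, bias, x) > 0 else "gibbon"


def sign(v):
    return (v > 0) - (v < 0)


def perturb(weights, x, epsilon):
    """Shift every pixel by at most epsilon in the direction that lowers the score."""
    return [v - epsilon * sign(w) for w, v in zip(weights, x)]


# Hypothetical toy classifier and "image" (8 pixels with values in [0, 1]).
weights = [0.5, -0.5, 0.5, -0.5, 0.5, -0.5, 0.5, -0.5]
bias = 0.0
image = [0.55, 0.50, 0.55, 0.50, 0.55, 0.50, 0.55, 0.50]

adversarial = perturb(weights, image, epsilon=0.05)
max_change = max(abs(a - b) for a, b in zip(adversarial, image))
```

No pixel moves by more than 0.05, yet the label changes, because many small changes that all push the score the same way add up.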

…

A A* 77 À la recherche du temps perdu (Proust) 205–8 accountability 257 Advanced Research Projects Agency (ARPA) 87–8 adversarial machine learning 190 AF (Artificial Flight) parable 127–9, 243 agent-based AI 136–49 agent-based interfaces 147, 149 ‘Agents That Reduce Work and Information Overload’ (Maes) 147–8 AGI (Artificial General Intelligence) 41 AI – difficulty of 24–8 – ethical 246–62, 284, 285 – future of 7–8 – General 42, 53, 116, 119–20 – Golden Age of 47–88 – history of 5–7 – meaning of 2–4 – narrow 42 – origin of name 51–2 – strong 36–8, 41, 309–14 – symbolic 42–3, 44 – varieties of 36–8 – weak 36–8 AI winter 87–8 AI-complete problems 84 ‘Alchemy and AI’ (Dreyfus) 85 AlexNet 187 algorithmic bias 287–9, 292–3 alienation 274–7 allocative harm 287–8 AlphaFold 214 AlphaGo 196–9 AlphaGo Zero 199 AlphaZero 199–200 Alvey programme 100 Amazon 275–6 Apple Watch 218 Argo AI 232 arithmetic 24–6 Arkin, Ron 284 ARPA (Advanced Research Projects Agency) 87–8 Artificial Flight (AF) parable 127–9, 243 Artificial General Intelligence (AGI) 41 artificial intelligence see AI artificial languages 56 Asilomar principles 254–6 Asimov, Isaac 244–6 Atari 2600 games console 192–6, 327–8 augmented reality 296–7 automated diagnosis 220–1 automated translation 204–8 automation 265, 267–72 autonomous drones 282–4 Autonomous Vehicle Disengagement Reports 231 autonomous vehicles see driverless cars autonomous weapons 281–7 autonomy levels 227–8 Autopilot 228–9 B backprop/backpropagation 182–3 backward chaining 94 Bayes nets 158 Bayes’ Theorem 155–8, 365–7 Bayesian networks 158 behavioural AI 132–7 beliefs 108–10 bias 172 black holes 213–14 Blade Runner 38 Blocks World 57–63, 126–7 blood diseases 94–8 board games 26, 75–6 Boole, George 107 brains 43, 306, 330–1 see also electronic brains branching factors 73 Breakout (video game) 193–5 Brooks, Rodney 125–9, 132, 134, 243 bugs 258 C Campaign to Stop Killer Robots 286 CaptionBot 201–4 Cardiogram 215 cars 27–8, 155, 223–35 certainty factors 
97 ceteris paribus preferences 262 chain reactions 242–3 chatbots 36 checkers 75–7 chess 163–4, 199 Chinese room 311–14 choice under uncertainty 152–3 combinatorial explosion 74, 80–1 common values and norms 260 common-sense reasoning 121–3 see also reasoning COMPAS 280 complexity barrier 77–85 comprehension 38–41 computational complexity 77–85 computational effort 129 computers – decision making 23–4 – early developments 20 – as electronic brains 20–4 – intelligence 21–2 – programming 21–2 – reliability 23 – speed of 23 – tasks for 24–8 – unsolved problems 28 ‘Computing Machinery and Intelligence’ (Turing) 32 confirmation bias 295 conscious machines 327–30 consciousness 305–10, 314–17, 331–4 consensus reality 296–8 consequentialist theories 249 contradictions 122–3 conventional warfare 286 credit assignment problem 173, 196 Criado Perez, Caroline 291–2 crime 277–81 Cruise Automation 232 curse of dimensionality 172 cutlery 261 Cybernetics (Wiener) 29 Cyc 114–21, 208 D DARPA (Defense Advanced Research Projects Agency) 87–8, 225–6 Dartmouth summer school 1955 50–2 decidable problems 78–9 decision problems 15–19 deduction 106 deep learning 168, 184–90, 208 DeepBlue 163–4 DeepFakes 297–8 DeepMind 167–8, 190–200, 220–1, 327–8 Defense Advanced Research Projects Agency (DARPA) 87–8, 225–6 dementia 219 DENDRAL 98 Dennett, Daniel 319–25 depth-first search 74–5 design stance 320–1 desktop computers 145 diagnosis 220–1 disengagements 231 diversity 290–3 ‘divide and conquer’ assumption 53–6, 128 Do-Much-More 35–6 dot-com bubble 148–9 Dreyfus, Hubert 85–6, 311 driverless cars 27–8, 155, 223–35 drones 282–4 Dunbar, Robin 317–19 Dunbar’s number 318 E ECAI (European Conference on AI) 209–10 electronic brains 20–4 see also computers ELIZA 32–4, 36, 63 employment 264–77 ENIAC 20 Entscheidungsproblem 15–19 epiphenomenalism 316 error correction procedures 180 ethical AI 246–62, 284, 285 European Conference on AI (ECAI) 209–10 evolutionary development 331–3 evolutionary theory 316 
exclusive OR (XOR) 180 expected utility 153 expert systems 89–94, 123 see also Cyc; DENDRAL; MYCIN; R1/XCON eye scans 220–1 F Facebook 237 facial recognition 27 fake AI 298–301 fake news 293–8 fake pictures of people 214 Fantasia 261 feature extraction 171–2 feedback 172–3 Ferranti Mark 1 20 Fifth Generation Computer Systems Project 113–14 first-order logic 107 Ford 232 forward chaining 94 Frey, Carl 268–70 ‘The Future of Employment’ (Frey & Osborne) 268–70 G game theory 161–2 game-playing 26 Gangs Matrix 280 gender stereotypes 292–3 General AI 41, 53, 116, 119–20 General Motors 232 Genghis robot 134–6 gig economy 275 globalization 267 Go 73–4, 196–9 Golden Age of AI 47–88 Google 167, 231, 256–7 Google Glass 296–7 Google Translate 205–8, 292–3 GPUs (Graphics Processing Units) 187–8 gradient descent 183 Grand Challenges 2004/5 225–6 graphical user interfaces (GUI) 144–5 Graphics Processing Units (GPUs) 187–8 GUI (graphical user interfaces) 144–5 H hard problem of consciousness 314–17 hard problems 84, 86–7 Harm Assessment Risk Tool (HART) 277–80 Hawking, Stephen 238 healthcare 215–23 Herschel, John 304–6 Herzberg, Elaine 230 heuristic search 75–7, 164 heuristics 91 higher-order intentional reasoning 323–4, 328 high-level programming languages 144 Hilbert, David 15–16 Hinton, Geoff 185–6, 221 HOMER 141–3, 146 homunculus problem 315 human brain 43, 306, 330–1 human intuition 311 human judgement 222 human rights 277–81 human-level intelligence 28–36, 241–3 ‘humans are special’ argument 310–11 I image classification 186–7 image-captioning 200–4 ImageNet 186–7 Imitation Game 30 In Search of Lost Time (Proust) 205–8 incentives 261 indistinguishability 30–1, 37, 38 Industrial Revolutions 265–7 inference engines 92–4 insurance 219–20 intelligence 21–2, 127–8, 200 – human-level 28–36, 241–3 ‘Intelligence Without Representation’ (Brooks) 129 Intelligent Knowledge-Based Systems 100 intentional reasoning 323–4, 328 intentional stance 321–7 intentional systems 321–2 internal 
mental phenomena 306–7 Internet chatbots 36 intuition 311 inverse reinforcement learning 262 Invisible Women (Criado Perez) 291–2 J Japan 113–14 judgement 222 K Kasparov, Garry 163 knowledge bases 92–4 knowledge elicitation problem 123 knowledge graph 120–1 Knowledge Navigator 146–7 knowledge representation 91, 104, 129–30, 208 knowledge-based AI 89–123, 208 Kurzweil, Ray 239–40 L Lee Sedol 197–8 leisure 272 Lenat, Doug 114–21 lethal autonomous weapons 281–7 Lighthill Report 87–8 LISP 49, 99 Loebner Prize Competition 34–6 logic 104–7, 121–2 logic programming 111–14 logic-based AI 107–11, 130–2 M Mac computers 144–6 McCarthy, John 49–52, 107–8, 326–7 machine learning (ML) 27, 54–5, 168–74, 209–10, 287–9 machines with mental states 326–7 Macintosh computers 144–6 magnetic resonance imaging (MRI) 306 male-orientation 290–3 Manchester Baby computer 20, 24–6, 143–4 Manhattan Project 51 Marx, Karl 274–6 maximizing expected utility 154 Mercedes 231 Mickey Mouse 261 microprocessors 267–8, 271–2 military drones 282–4 mind modelling 42 mind-body problem 314–17 see also consciousness minimax search 76 mining industry 234 Minsky, Marvin 34, 52, 180 ML (machine learning) 27, 54–5, 168–74, 209–10, 287–9 Montezuma’s Revenge (video game) 195–6 Moore’s law 240 Moorfields Eye Hospital 220–1 moral agency 257–8 Moral Machines 251–3 MRI (magnetic resonance imaging) 306 multi-agent systems 160–2 multi-layer perceptrons 177, 180, 182 Musk, Elon 238 MYCIN 94–8, 217 N Nagel, Thomas 307–10 narrow AI 42 Nash, John Forbes Jr 50–1, 161 Nash equilibrium 161–2 natural languages 56 negative feedback 173 neural nets/neural networks 44, 168, 173–90, 369–72 neurons 174 Newell, Alan 52–3 norms 260 NP-complete problems 81–5, 164–5 nuclear energy 242–3 nuclear fusion 305 O ontological engineering 117 Osborne, Michael 268–70 P P vs NP problem 83 paperclips 261 Papert, Seymour 180 Parallel Distributed Processing (PDP) 182–4 Pepper 299 perception 54 perceptron models 174–81, 183 Perceptrons (Minsky & 
Papert) 180–1, 210 personal healthcare management 217–20 perverse instantiation 260–1 Phaedrus 315 physical stance 319–20 Plato 315 police 277–80 Pratt, Vaughan 117–19 preference relations 151 preferences 150–2, 154 privacy 219 problem solving and planning 55–6, 66–77, 128 programming 21–2 programming languages 144 PROLOG 112–14, 363–4 PROMETHEUS 224–5 protein folding 214 Proust, Marcel 205–8 Q qualia 306–7 QuickSort 26 R R1/XCON 98–9 radiology 215, 221 railway networks 259 RAND Corporation 51 rational decision making 150–5 reasoning 55–6, 121–3, 128–30, 137, 315–16, 323–4, 328 regulation of AI 243 reinforcement learning 172–3, 193, 195, 262 representation harm 288 responsibility 257–8 rewards 172–3, 196 robots – as autonomous weapons 284–5 – Baye’s theorem 157 – beliefs 108–10 – fake 299–300 – indistinguishability 38 – intentional stance 326–7 – SHAKEY 63–6 – Sophia 299–300 – Three Laws of Robotics 244–6 – trivial tasks 61 – vacuum cleaning 132–6 Rosenblatt, Frank 174–81 rules 91–2, 104, 359–62 Russia 261 Rutherford, Ernest (1st Baron Rutherford of Nelson) 242 S Sally-Anne tests 328–9, 330 Samuel, Arthur 75–7 SAT solvers 164–5 Saudi Arabia 299–300 scripts 100–2 search 26, 68–77, 164, 199 search trees 70–1 Searle, John 311–14 self-awareness 41, 305 see also consciousness semantic nets 102 sensors 54 SHAKEY the robot 63–6 SHRDLU 56–63 Simon, Herb 52–3, 86 the Singularity 239–43 The Singularity is Near (Kurzweil) 239 Siri 149, 298 Smith, Matt 201–4 smoking 173 social brain 317–19 see also brains social media 293–6 social reasoning 323, 324–5 social welfare 249 software agents 143–9 software bugs 258 Sophia 299–300 sorting 26 spoken word translation 27 STANLEY 226 STRIPS 65 strong AI 36–8, 41, 309–14 subsumption architecture 132–6 subsumption hierarchy 134 sun 304 supervised learning 169 syllogisms 105, 106 symbolic AI 42–3, 44, 181 synapses 174 Szilard, Leo 242 T tablet computers 146 team-building problem 78–81, 83 Terminator narrative of AI 237–9 Tesla 228–9 text 
recognition 169–71 Theory of Mind (ToM) 330 Three Laws of Robotics 244–6 TIMIT 292 ToM (Theory of Mind) 330 ToMnet 330 TouringMachines 139–41 Towers of Hanoi 67–72 training data 169–72, 288–9, 292 translation 204–8 transparency 258 travelling salesman problem 82–3 Trolley Problem 246–53 Trump, Donald 294 Turing, Alan 14–15, 17–19, 20, 24–6, 77–8 Turing Machines 18–19, 21 Turing test 29–38 U Uber 168, 230 uncertainty 97–8, 155–8 undecidable problems 19, 78 understanding 201–4, 312–14 unemployment 264–77 unintended consequences 263 universal basic income 272–3 Universal Turing Machines 18, 19 Upanishads 315 Urban Challenge 2007 226–7 utilitarianism 249 utilities 151–4 utopians 271 V vacuum cleaning robots 132–6 values and norms 260 video games 192–6, 327–8 virtue ethics 250 Von Neumann and Morgenstern model 150–5 Von Neumann architecture 20 W warfare 285–6 WARPLAN 113 Waymo 231, 232–3 weak AI 36–8 weapons 281–7 wearable technology 217–20 web search 148–9 Weizenbaum, Joseph 32–4 Winograd schemas 39–40 working memory 92 X XOR (exclusive OR) 180 Z Z3 computer 19–20

Mastering Machine Learning With Scikit-Learn

by

Gavin Hackeling

Published 31 Oct 2014

ISBN 978-1-78398-836-5 www.packtpub.com Cover image by Amy-Lee Winfield (abjure@outlook.com)

Credits. Author: Gavin Hackeling. Reviewers: Fahad Arshad, Sarah Guido, Mikhail Korobov, Aman Madaan. Acquisition Editor: Meeta Rajani. Content Development Editor: Neeshma Ramakrishnan. Technical Editor: Faisal Siddiqui. Copy Editors: Roshni Banerjee, Adithi Shetty. Project Coordinator: Danuta Jones. Proofreaders: Simran Bhogal, Tarsonia Sanghera, Lindsey Thomas. Indexer: Monica Ajmera Mehta. Graphics: Sheetal Aute, Ronak Dhruv, Disha Haria. Production Coordinator: Kyle Albuquerque. Cover Work: Kyle Albuquerque.

About the Author. Gavin Hackeling develops machine learning services for large-scale documents and image classification at an advertising network in New York. He received his Master's degree from New York University's Interactive Telecommunications Program, and his Bachelor's degree from the University of North Carolina. To Hallie, for her support, and Zipper, without whose contributions this book would have been completed in half the time.

…

While K-Means learns from experience without supervision, its performance is still measurable; you learned to use distortion and the silhouette coefficient to evaluate clusters. We applied K-Means to two different problems. First, we used K-Means for image quantization, a compression technique that represents a range of colors with a single color. We also used K-Means to learn features in a semi-supervised image classification problem. In the next chapter, we will discuss another unsupervised learning task called dimensionality reduction. Like the semi-supervised feature representations we created to classify images of cats and dogs, dimensionality reduction can be used to reduce the dimensions of a set of explanatory variables while retaining as much information as possible
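The image quantization idea in this excerpt can be illustrated with a minimal sketch. It is not the book's code: the excerpt's source uses scikit-learn, whereas this version is plain Python so it runs without dependencies. The function names, the tiny six-pixel "image", and the fixed starting centroids are all assumptions made for illustration. It also computes distortion, read here as the sum of squared distances from each pixel to its nearest centroid; the silhouette coefficient is not shown.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two RGB tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, initial_centroids, iterations=10):
    """Plain K-Means: assign each point to its nearest centroid, then recompute means."""
    centroids = list(initial_centroids)
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: squared_distance(p, centroids[i]))
            clusters[nearest].append(p)
        for i, members in enumerate(clusters):
            if members:  # leave a centroid where it is if its cluster is empty
                centroids[i] = tuple(sum(c) / len(members) for c in zip(*members))
    return centroids


def quantize(points, centroids):
    """Replace every pixel with the nearest palette color."""
    return [min(centroids, key=lambda c: squared_distance(p, c)) for p in points]


def distortion(points, centroids):
    """Sum of squared distances from each point to its nearest centroid."""
    return sum(min(squared_distance(p, c) for c in centroids) for p in points)


# Hypothetical image: three reddish and three bluish pixels.
pixels = [(250, 10, 10), (240, 20, 15), (245, 5, 20),
          (10, 10, 250), (20, 15, 240), (5, 20, 245)]

# Assumption: start from one reddish and one bluish pixel instead of a random draw.
palette = kmeans(pixels, initial_centroids=[pixels[0], pixels[3]])
compressed = quantize(pixels, palette)
print(len(set(pixels)), "colors reduced to", len(set(compressed)))
print("distortion:", distortion(pixels, palette))
```

Each range of similar colors collapses onto one palette entry, which is where the compression comes from. A real implementation would normally use random or k-means++ initialization and several restarts.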

AI in Museums: Reflections, Perspectives and Applications

by

Sonja Thiel

and

Johannes C. Bernhardt

Published 31 Dec 2023

In what follows, a few suggestions are given on how to approach the field. A multidimensional approach seems useful for assessing what artificial intelligence might mean in museums. One strategy may be to narrow the term to technological definitions, for instance, the analysis of processes or tasks such as natural language processing, image classification, or chatbot technologies for suitability to or actual use in museums. A related strategy would be to start with existing algorithms, models, and solutions such as kmeans, tSne, UMAP, Pixplot, Huggingface, or GPT and to analyse what results, as well as to achieve added value by applying them to museum collections or processes.

…

Some projects from the archive and library sector are particularly noteworthy in this context (AI4LAM 2023; Staatsbibliothek zu Berlin 2023, Klindworth/Rosemann 2022; Jaillant 2022) and are producing transferable solutions for indexing and processing collections with the aid of AI technologies, for example, through text recognition or image classification. Other approaches help to make museum content richer and more accessible by providing a new experience. Solutions that support chatbot interaction open up collections in greater depth by means of new contexts or connections (for instance, High-Steskal and Gustke in this volume).

…

Artists were among the first to raise public attention by exposing flaws in AI datasets. In their ImageNet Roulette, Paglen and Crawford (2019) allowed people to upload a selfie and then classified it based on ImageNet (Deng/Dong/Socher et al. 2009), one of the largest image databases with image classifications. The resulting classifications revealed offensive or derogatory statements, such as classifying an image of a pregnant person as ‘lazy’. A large part of the ImageNet dataset was subsequently removed from public access. Prabu and Birhane (2020) investigated ImageNet further and still found many harmful representations of women.

Prediction Machines: The Simple Economics of Artificial Intelligence

by

Ajay Agrawal

,

Joshua Gans

and

Avi Goldfarb

Published 16 Apr 2018

As Princeton professor and computer scientist Olga Russakovsky notes, “2012 was really the year when there was a massive breakthrough in accuracy, but it was also a proof of concept for deep learning models, which had been around for decades.”8 Rapid improvements in the algorithms continued, and a team beat the human benchmark in the competition for the first time in 2015. By 2017, the vast majority of the thirty-eight teams did better than the human benchmark, and the best team had fewer than half as many mistakes. Machines could identify these types of images better than people.9

[Figure 3-1: Image classification error over time]

The Consequences of Cheap Prediction

The current generation of AI is a long way from the intelligent machines of science fiction. Prediction does not get us HAL from 2001: A Space Odyssey, Skynet from The Terminator, or C3PO from Star Wars. If modern AI is just prediction, then why is there so much fuss?

…

P., 93–94 Duke University Teradata Center, 35 earthquakes, 59–60 eBay, 199 economics, 3 of AI, 8–9 of cost reductions, 9–11 data collection and, 49–50 on externalities, 116–117 New Economy and, 10–11 economies of scale, 49–50, 215–217 Edelman, Ben, 196–197 education, income inequality and, 214 electricity, cost of light and, 11 emergency braking, automatic, 111–112 error, tolerance for, 184–186 ethical dilemmas, 116 Etzioni, Oren, 220 Europe, privacy regulation in, 219–220 exceptions, prediction by, 67–68 Executive Office of the US President, 222–223 experience, 191–193 experimentation, 88, 99–100 AI tool development and, 159–160 expert prediction, 55–58 externalities, 116–117 Facebook, 176, 190, 195–196, 215, 217 facial recognition, 190, 219–220 Federal Aviation Administration, 185 Federal Trade Commission, 195 feedback data, 43, 46 in decision making, 74–76, 134–138 experience and, 191–193 risks with, 204–205 financial crisis of 2008, 36–37 flexibility, 36 Forbes, Silke, 168–169 Ford, 123–134, 164 Frankston, Bob, 141, 164 fraud detection, 24–25, 27, 84–88, 91 Frey, Carl, 149 fulfillment industry, 105, 143–145 Furman, Jason, 213 Gates, Bill, 163, 210, 213, 221 gender discrimination, 196–198 Gildert, Suzanne, 145 Glozman, Ron, 53–54 Goizueta, Robert, 43 Goldin, Claudia, 214 Goldman Sachs, 125 Google, 7–8, 43, 50, 187, 215, 223 advertising, 176, 195–196, 198–199 AI-first strategy at, 179–180 AI tool development at, 160 anti-spam team sting, 202–203 bias in ads and, 195–196 China, 219 Inbox, 185, 187 market share of, 216–217 Now, 106 privacy policy, 190 search engine optimization and, 64 search tool, 19 translation service, 25–26 video content algorithm, 200 Waymo, 95 Waze and, 89–90 Grammarly, 96 Greece, ancient, 23 Griliches, Zvi, 159 Grove, Andy, 155 hackers, 200 Hacking, Ian, 40 Hammer, Michael, 123–134 Harford, Tim, 192–193 Harvard Business School cases, 141 Hawking, Stephen, 8, 210–211, 221 Hawkins, Jeff, 39 health insurance, 28 heart disease, diagnosing, 
44–45, 47–49 Heifets, Abraham, 135, 136 Hemingway, Ernest, 25–26 heuristics, 55 Hinton, Geoffrey, 145 hiring, 58, 98 ZipRecruiter and, 93–94, 100 Hoffman, Mitchell, 58 homogeneity, data, 201–202 hotel industry, 63–64 Houston Astros, 161 Howe, Kathryn, 14 human resource (HR) management, 172–173 IBM’s Watson, 146 identity verification, 201, 219–220 iFlytek, 26–27 if-then logic, 91, 104–109 image classification, 28–29 ImageNet, 7 ImageNet Challenge, 28–29 imitation of algorithms, 202–204 income inequality, 19, 212–214 independent variables, 45 inequality, 19, 212–214 initial public offerings (IPOs), 9–10, 125 innovation, 169–170, 171, 218–219 innovator’s dilemma, 181–182 input data, 43 in decision making, 74–76, 134–138 identifying required, 139 Integrate.ai, 14 Intel, 15, 215 intelligence churn prediction and, 32–36 human, 39 prediction as, 2–3, 29, 31–41 internet advertising, 175–176 browsers, 9–10 delivery time uncertainty and commerce via, 157–158 development of the commercial, 9–10 inventory management, 28, 105 Iowa, hybrid corn adoption in, 158–160, 181 iPhone, 129–130, 155 iRobot, 104 James, Bill, 56 Jelinek, Frederick, 108 jobs, 19.

Your Computer Is on Fire

by

Thomas S. Mullaney

,

Benjamin Peters

,

Mar Hicks

and

Kavita Philip

Published 9 Mar 2021

Digital vigilantism based on facial recognition is made banal, even playful,12 in the proliferation of everyday software rooted in recognition, from automated photo album tagging of friends to Google’s Arts and Culture app matching one’s face with a portrait from (Western) art history. Recognition of one’s likeness is comfortable, pleasurable. It is made easy through popular encounters with image-classification algorithms. Recognition thrills. Self-recognition offers joy; recognition of the other, however—that which is not the self or an object of admiration—elicits a spike of repulsion and malice. Recognition is pleasure for this conjoined moment: the marking and apprehension of lovable selves and reprehensible others.

…

Seeing Like an Image-Recognition Algorithm The image forensics software used by NCMEC searches through an in-house database of known images of child pornography to see if the new image might be similar, or even identical, to an image that has already gone through that system. If two images are exactly identical, they will have the same hash value. Hash matching is a simple form of image classification conducted at many of the agencies that are involved in child pornography detection. However, as one expert explained to me, hash matching techniques have historically prioritized detecting offenders over victims. I interviewed Special Agent Jim Cole, of US Homeland Security, to better understand law enforcement’s use of hash sets.
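The exact-match case described above ("if two images are exactly identical, they will have the same hash value") can be sketched in a few lines. This is only an illustration under stated assumptions: the excerpt does not name the hash function, so SHA-256 from Python's standard `hashlib` is a stand-in, and `build_hash_set` and `is_known` are hypothetical helper names. The sketch covers exact matches only. Detecting merely similar images requires different techniques, which are not shown.

```python
import hashlib


def image_hash(image_bytes: bytes) -> str:
    """Hex digest of the raw image bytes (SHA-256 is an assumed choice)."""
    return hashlib.sha256(image_bytes).hexdigest()


def build_hash_set(known_images):
    """Precompute the hashes of every image already in the database."""
    return {image_hash(data) for data in known_images}


def is_known(image_bytes: bytes, hash_set) -> bool:
    """True only if a byte-for-byte identical image was seen before."""
    return image_hash(image_bytes) in hash_set


# Hypothetical stand-ins for image file contents.
known = [b"\x89PNG-first-image", b"\x89PNG-second-image"]
hash_set = build_hash_set(known)

print(is_known(b"\x89PNG-first-image", hash_set))   # identical bytes
print(is_known(b"\x89PNG-first-imagE", hash_set))   # one byte changed
```

Because a cryptographic hash changes completely when a single byte changes, a set lookup like this finds exact copies quickly but misses any re-encoded, resized, or cropped version of the same picture.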

…

Similarly, Safiya Umoja Noble discusses the gulf between the imagined perfection and objectivity of many technologies (from facial recognition to predictive policing) and their inaccurate and biased realities. Mitali Thakor uses the complex relationship between human experts and software, in the case of high-stakes image classification, to outline the gulf between the imagined future in which all labor is automated and the complex sociotechnical realities ahead of us. And there are many other examples between these covers—because tracing such gulfs is one of the foundations of the work in Your Computer Is on Fire. These gulfs matter for a variety of reasons.

Artificial Intelligence: A Modern Approach

by

Stuart Russell

and

Peter Norvig

Published 14 Jul 2019

The primary reason for this success seems to be that the features that are being used by CNN classifiers are learned from data, not hand-crafted by a researcher; this ensures that the features are actually useful for classification. Progress in image classification has been rapid because of the availability of large, challenging data sets such as ImageNet; because of competitions based on these data sets that are fair and open; and because of the widespread dissemination of successful models. The winners of competitions publish the code and often the pretrained parameters of their models, making it easy for others to fiddle with successful architectures and try to make them better.

27.4.2 Why convolutional neural networks classify images well

Image classification is best understood by looking at data sets, but ImageNet is much too large to look at in detail.

…

The resulting regions, called superpixels, provide a significant reduction in computational complexity for various algorithms, as the number of superpixels may be in the hundreds, compared to millions of raw pixels. Exploiting high-level knowledge of objects is the subject of the next section, and actually detecting the objects in images is the subject of Section 27.5.

27.4 Classifying Images

Image classification applies to two main cases. In one, the images are of objects, taken from a given taxonomy of classes, and there’s not much else of significance in the picture—for example, a catalog of clothing or furniture images, where the background doesn’t matter, and the output of the classifier is “cashmere sweater” or “desk chair.”

…

In the other case, each image shows a scene containing multiple objects. So in grassland you might see a giraffe and a lion, and in the living room you might see a couch and lamp, but you don’t expect a giraffe or a submarine in a living room. We now have methods for large-scale image classification that can accurately output “grassland” or “living room.” Modern systems classify images using appearance (i.e., color and texture, as opposed to geometry). There are two difficulties. First, different instances of the same class could look different—some cats are black and others are orange.

Applied Artificial Intelligence: A Handbook for Business Leaders

by

Mariya Yao

,

Adelyn Zhou

and

Marlene Jia

Published 1 Jun 2018

Deep Learning Deep learning is a subfield of machine learning that builds algorithms by using multi-layered artificial neural networks, which are mathematical structures loosely inspired by how biological neurons fire. Neural networks were invented in the 1950s, but recent advances in computational power and algorithm design—as well as the growth of big data—have enabled deep learning algorithms to approach human-level performance in tasks such as speech recognition and image classification. Deep learning, in combination with reinforcement learning, enabled Google DeepMind’s AlphaGo to defeat human world champions of Go in 2016, a feat that many experts had considered to be computationally impossible. Much media attention has been focused on deep learning, and an increasing number of sophisticated technology companies have successfully implemented deep learning for enterprise-scale products.

Deep Medicine: How Artificial Intelligence Can Make Healthcare Human Again

by

Eric Topol

Published 1 Jan 2019

Wang, D., et al., Deep Learning for Identifying Metastatic Breast Cancer. arXiv, 2016. 47. Yu, K. H., et al., “Predicting Non–Small Cell Lung Cancer Prognosis by Fully Automated Microscopic Pathology Image Features.” Nat Commun, 2016. 7: p. 12474. 48. Hou, L., et al., Patch-Based Convolutional Neural Network for Whole Slide Tissue Image Classification. arXiv, 2016. 49. Liu, Y., et al., Detecting Cancer Metastases on Gigapixel Pathology Images. arXiv, 2017. 50. Cruz-Roa, A., et al., “Accurate and Reproducible Invasive Breast Cancer Detection in Whole-Slide Images: A Deep Learning Approach for Quantifying Tumor Extent.” Sci Rep, 2017. 7: p. 46450. 51.

…

Cell, 2018. 173(3): pp. 546–548. 68. Ounkomol, C., et al., “Label-Free Prediction of Three-Dimensional Fluorescence Images from Transmitted-Light Microscopy.” Nat Methods, 2018. 69. Sullivan, D. P., et al., “Deep Learning Is Combined with Massive-Scale Citizen Science to Improve Large-Scale Image Classification.” Nat Biotechnol, 2018. 36(9): pp. 820–828. 70. Ota, S., et al., “Ghost Cytometry.” Science, 2018. 360(6394): pp. 1246–1251. 71. Nitta, N., et al., “Intelligent Image-Activated Cell Sorting.” Cell, 2018. 175(1): pp. 266–276 e13. 72. Weigert, M., et al., Content-Aware Image Restoration: Pushing the Limits of Fluorescence Microscopy, bioRxiv. 2017; Yang, S.

Succeeding With AI: How to Make AI Work for Your Business

by

Veljko Krunic

Published 29 Mar 2020

(For the job to count as a solution to this exercise, it must be a job that’s so unrelated to the software development team that’s building the AI, that the person hired for the job is unlikely to ever meet that team.) Question 3: Suppose you’re using an AI algorithm in the context of a medical facility— let’s say a radiology department of a large hospital. You’re lucky to have on the team the best AI expert in the field of image classification, who has you covered on the AI side. While you’re confident that expert will be able to develop an AI algorithm to classify medical images as either normal or abnormal, that expert has never worked in a healthcare setting before. What other considerations do you need to address to develop a working AI product applicable to healthcare?

…

The “Creating new jobs with AI” sidebar in section 2.5.3 gives one such example when using AI to monitor pets while owners are at work. Question 3: Suppose you’re using an AI algorithm in the context of a medical facility—let’s say a radiology department of a large hospital. You’re lucky to have on the team the best AI expert in the field of image classification, who has you covered on the AI side. While you’re confident that expert will be able to develop an AI algorithm to classify medical images as either normal or abnormal, that expert has never worked in a healthcare setting before. What other considerations do you need to address to develop a working AI product applicable to healthcare?

The Elements of Statistical Learning (Springer Series in Statistics)

by

Trevor Hastie

,

Robert Tibshirani

and

Jerome Friedman

Published 25 Aug 2009

The second set of methods generalize Fisher’s linear discriminant analysis (LDA). The generalizations include flexible discriminant analysis which facilitates construction of nonlinear boundaries in a manner very similar to the support vector machines, penalized discriminant analysis for problems such as signal and image classification where the large number of features are highly correlated, and mixture discriminant analysis for irregularly shaped classes.

12.2 The Support Vector Classifier

In Chapter 4 we discussed a technique for constructing an optimal separating hyperplane between two perfectly separated classes. We review this and generalize to the nonseparable case, where the classes may not be separable by a linear boundary.

…

In the four-dimensional sphere example mentioned above and examined in Hastie and Tibshirani (1996a), four of the eigenvalues θℓ turn out to be large (having eigenvectors nearly spanning the interesting subspace), and the remaining six are near zero. Operationally, we project the data into the leading four-dimensional subspace, and then carry out nearest neighbor classification. In the satellite image classification example in Section 13.3.2, the technique labeled DANN in Figure 13.8 used 5-nearest-neighbors in a globally reduced subspace. There are also connections of this technique with the sliced inverse regression proposal of Duan and Li (1991). These authors use similar ideas in the regression setting, but do global rather than local computations.

…

Notably Ho (1995) introduced the term “random forest,” and used a consensus of trees grown in random subspaces of the features. The idea of using stochastic perturbation and averaging to avoid overfitting was introduced by Kleinberg (1990), and later in Kleinberg (1996). Amit and Geman (1997) used randomized trees grown on image features for image classification problems. Breiman (1996a) introduced bagging, a precursor to his version of random forests. Dietterich (2000b) also proposed an improvement on bagging using additional randomization. His approach was to rank the top 20 candidate splits at each node, and then select from the list at random.
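The randomization attributed to Dietterich (2000b) above, ranking the top 20 candidate splits at a node and then picking one at random, can be sketched as follows. This is a minimal illustration and not the published implementation. The representation of a candidate split as a `(description, score)` pair, the function name `choose_split`, and the convention that a higher score means a better split are all assumptions.

```python
import random


def choose_split(candidate_splits, top_n=20, rng=None):
    """Rank candidate splits by score and pick one of the best `top_n` at random.

    `candidate_splits` is a list of (description, score) pairs; this layout is
    a hypothetical choice for the sketch, with higher scores meaning better splits.
    """
    rng = rng or random.Random()
    ranked = sorted(candidate_splits, key=lambda split: split[1], reverse=True)
    return rng.choice(ranked[:top_n])


# Hypothetical candidates: 100 (feature, threshold) splits with made-up scores.
candidates = [(("feature_%d" % i, 0.5), float(i)) for i in range(100)]

rng = random.Random(0)
picks = [choose_split(candidates, top_n=20, rng=rng) for _ in range(5)]
for description, score in picks:
    print(description, score)
```

Growing several trees this way gives each tree a different but still reasonable split at every node, which is the source of the diversity that averaging then exploits.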

The Ethical Algorithm: The Science of Socially Aware Algorithm Design

by

Michael Kearns

and

Aaron Roth

Published 3 Oct 2019

The technical name for the algorithmic framework we have been describing is a generative adversarial network (GAN), and the approach we’ve outlined above indeed seems to be highly effective: GANs are an important component of the collection of techniques known as deep learning, which has resulted in qualitative improvements in machine learning for image classification, speech recognition, automatic natural language translation, and many other fundamental problems. (The Turing Award, widely considered the Nobel Prize of computer science, was recently awarded to Yoshua Bengio, Geoffrey Hinton, and Yann LeCun for their pioneering contributions to deep learning.)

Designing Search: UX Strategies for Ecommerce Success

by

Greg Nudelman

and

Pabini Gabriel-Petit

Published 8 May 2011

IEEE MultiMedia, Volume 11, Issue 1, January–March 2004. Lam, Heidi and Patrick Baudisch. “Summary Thumbnails: Readable Overviews for Small Screen Web Browsers.” Proceedings of the SIGCHI Conference on Human Factors in Computing Systems CHI 2005, Portland, OR, USA, 2005. Maekawa, Takuya, Takahiro Hara, and Shojiro Nishio. “Image Classification for Mobile Web Browsing.” Proceedings of WWW 2006, Edinburgh, Scotland, 2006. Miller, Andrew D. and W. Keith Edwards. “Give and Take: A Study of Consumer Photo-Sharing Culture and Practice.” Proceedings of the SIGCHI Conference on Human Factors in Computing Systems CHI 2007, San Jose, CA, USA 2007.

12 Bytes: How We Got Here. Where We Might Go Next

by

Jeanette Winterson

Published 15 Mar 2021

Supermarket check-out jobs and secretarial positions were pushed out to 85% women. Driving jobs pinged into the boxes of a 75% Black male audience. Houses for sale – 75% white. Macho visuals were aimed at guys. Caring, cuddly, nature visuals circulated to women. The study concluded: ‘Facebook has an automated image classification mechanism used to steer different ads towards different subsets of the user population.’ Facebook, of course, as usual, responded to the study as it responds to every criticism: Facebook is making ‘important changes’. * * * OK … thanks. * * * The real problem the research exposes is how algorithms (Facebook’s or not) reinforce and amplify existing bias.

The Autistic Brain: Thinking Across the Spectrum

by

Temple Grandin

and

Richard Panek

Published 15 Feb 2013

Hazlett et al., “Teasing Apart the Heterogeneity of Autism: Same Behavior, Different Brains in Toddlers with Fragile X Syndrome and Autism,” Journal of Neurodevelopmental Disorders 1, no. 1 (March 2009): 81–90. a study her group conducted: Grace Lai et al., “Speech Stimulation During Functional MR Imaging as a Potential Indicator of Autism,” Radiology 260, no. 2 (August 2011): 521–30. a major study: Jeffrey S. Anderson et al., “Functional Connectivity Magnetic Resonance Imaging Classification of Autism,” Brain 134 (December 2011): 3742–54. A 2011 MRI study: A. Elnakib et al., “Autism Diagnostics by Centerline-Based Shape Analysis of the Corpus Callosum,” IEEE International Symposium on Biomedical Imaging: From Nano to Macro (March 30, 2011): 1843–46. another MRI study from 2011: Lucina Q.

Outnumbered: From Facebook and Google to Fake News and Filter-Bubbles – the Algorithms That Control Our Lives

by

David Sumpter

Published 18 Jun 2018

It is often a far-off dream that drives our desire to find things out about the world. It isn’t money per se that motivates the people at DeepMind or Tesla. There is also a genuine feeling of excitement among the researchers. At the turn of the millennium, the idea of general AI seemed to be dead. When neural networks solved the image-classification problem in 2012, it felt like a change was finally coming. The truth behind current algorithms is often much simpler and more banal than the term ‘artificial intelligence’ implies. When I looked at the algorithms that try to classify us, I found they were statistical representations of more or less the same things we already know about ourselves.

Know Thyself

by

Stephen M Fleming

Published 27 Apr 2021

Representations play an important role in the general idea that the brain performs computations. If something inside my head can represent a house cat, then I can also do things like figure out that it is related to a lion, and that both belong to a larger family of animals known as cats.6 It is likely no coincidence that the way successful artificial image-classification networks are wired is similar to the hierarchical organization of the human brain. Lower layers contain neurons that handle only small parts of the image and keep track of features such as the orientation of lines or the difference between light and shade. Higher layers contain neurons that process the entire image and represent things about the object in the image (such as whether it contains features typical of a cat or a dog).

A Hacker's Mind: How the Powerful Bend Society's Rules, and How to Bend Them Back

by

Bruce Schneier

Published 7 Feb 2023

That, in a nutshell, is the explainability problem. Modern AI systems are essentially black boxes. Data goes in at one end, and an answer comes out the other. It can be impossible to understand how the system reached its conclusion, even if you are the system’s designer and can examine the code. Researchers don’t know precisely how an AI image-classification system differentiates turtles from rifles, let alone why one of them mistook one for the other. In 2016, the AI program AlphaGo won a five-game match against one of the world’s best Go players, Lee Sedol—something that shocked both the AI and the Go-playing worlds. AlphaGo’s most famous move was in game two: move thirty-seven.

The Future of the Brain: Essays by the World's Leading Neuroscientists

by

Gary Marcus

and

Jeremy Freeman

Published 1 Nov 2014

But as Steven Pinker and I showed, the details were rarely correct empirically; more than that, nobody was ever able to turn a neural network into a functioning system for understanding language. Today neural networks have finally found a valuable home—in machine learning, especially in speech recognition and image classification, due in part to innovative work by researchers such as Geoff Hinton and Yann LeCun. But the utility of neural networks as models of mind and brain remains marginal, useful, perhaps, in aspects of low-level perception but of limited utility in explaining more complex, higher-level cognition.

Driverless: Intelligent Cars and the Road Ahead

by

Hod Lipson

and

Melba Kurman

Published 22 Sep 2016

This is particularly true for multilayer convolutional networks that have an array organization that closely aligns with the graphics application for which GPUs were originally designed. In most computing applications, speed is of the essence; in a neural network, speed is critical. The speed at which the neural network calculates its answer to an image-classification problem is fractions of a second faster on a GPU than it is on a CPU. But the training of a neural network can be hundreds of times faster, because training requires millions of backprop iterations. Researchers who used GPUs to run their convolutional neural networks began seeing an improvement factor of almost ten compared to their colleagues who were using ordinary desktops.

Artificial Intelligence: A Guide for Thinking Humans

by

Melanie Mitchell

Published 14 Oct 2019

The ConvNet here is similar to the ones I described in chapter 4, except that this ConvNet doesn’t output object classifications; instead, the activations of its final layer are given as input to the decoder network. The decoder network “decodes” these activations to output a sentence. To encode the image, the authors used a ConvNet that had been trained for image classification on ImageNet, the huge image data set that I described in chapter 5. The task here is to train the decoder network to generate an appropriate caption for an input image. How does this system learn to produce reasonable captions? Recall that for language translation, the training data consists of pairs of sentences, in which the first sentence in a pair is in the source language and the second is a human translator’s translation into the target language.

Artificial Whiteness

by

Yarden Katz

FIGURE 3.4 Performance of Google’s Show and Tell. Reproduced from company paper; see text. There have been other critiques of existing artificial vision systems on similarly narrow, but still informative grounds. For instance, computer scientists showed that the perceptual space of deep network–based image classification systems differs wildly from that of people. Such systems are trained on the generic image data sets (mentioned above) and assign labels to images, usually with a confidence score. With a simple procedure, it is possible to get these systems to assign high-confidence labels to images that look like noise or abstract patterns.33 The procedure starts with a pool of random images and iteratively mutates them—randomly perturbing each image’s pixels—and then selects only those images that are most confidently classified as the label of interest.
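The procedure described at the end of this excerpt (start from random images, perturb pixels at random, keep the images the classifier is most confident about) can be sketched as a small evolutionary loop. This is an illustrative toy, not the cited study's code. `toy_confidence` is a hypothetical stand-in for a real classifier's confidence in the label of interest, images are flat lists of pixel values in [0, 1], and carrying the best images over unchanged into the next generation is an assumption of this sketch.

```python
import random


def toy_confidence(image):
    """Hypothetical classifier confidence: here simply the mean pixel value."""
    return sum(image) / len(image)


def mutate(image, rng, rate=0.1):
    """Randomly perturb pixels: each pixel is resampled with probability `rate`."""
    return [rng.random() if rng.random() < rate else px for px in image]


def evolve(confidence, pool_size=20, image_size=16, generations=50, keep=5, seed=0):
    """Iteratively mutate a pool of random images, keeping the most confident ones."""
    rng = random.Random(seed)
    pool = [[rng.random() for _ in range(image_size)] for _ in range(pool_size)]
    for _ in range(generations):
        # Select only the images most confidently classified as the target label.
        survivors = sorted(pool, key=confidence, reverse=True)[:keep]
        # Refill the pool with mutated copies of the survivors.
        pool = survivors + [mutate(rng.choice(survivors), rng)
                            for _ in range(pool_size - keep)]
    return max(pool, key=confidence)


best = evolve(toy_confidence)
print("confidence of evolved image:", round(toy_confidence(best), 3))
```

Nothing in the loop asks whether the image looks like anything to a person. It only chases the confidence score, which is why the images it produces can look like noise or abstract patterns while still receiving high-confidence labels.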

Practical Doomsday: A User's Guide to the End of the World

by

Michal Zalewski

Published 11 Jan 2022

It wasn’t until the late 2000s that AI research made a comeback, aided with vastly superior computing resources and significant refinements to the architecture of neural networks and to deep learning algorithms. But the field focused on humble, utilitarian goals: building systems custom-tailored to perform highly specialized tasks, such as voice recognition, image classification, or the translation of text. Such architectures, although quite successful, still require quite a few quantum leaps to get anywhere close to AGI, and tellingly, the desire to build a digital “brain in a jar” is not an immediate goal for any serious corporate or academic research right now.

Doing Data Science: Straight Talk From the Frontline

by

Cathy O'Neil

and

Rachel Schutt

Published 8 Oct 2013

If you want to be more systematic, you can also do this using Python or R. Other parsing tools you might want to look into include:

lynx and lynx --dump: Good if you pine for the 1970s. Oh wait, 1992. Whatever.
Beautiful Soup: Robust but kind of slow.
Mechanize (or here): Super cool as well, but it doesn’t parse JavaScript.
PostScript: Image classification.

Thought Experiment: Image Recognition

How do you determine if an image is a landscape or a headshot? Start with collecting data. You either need to get someone to label these things, which is a lot of work, or you can grab lots of pictures from flickr and ask for photos that have already been tagged.

The Equality Machine: Harnessing Digital Technology for a Brighter, More Inclusive Future

by

Orly Lobel

Published 17 Oct 2022

Today’s students of computer science continue to study from journals and courses that show the image of a Playboy model as the object of imaging. It was only in 2018 that the prestigious journal Nature, along with other established journals such as Scientific American, announced that they would no longer consider articles using the Lena image. That year, TensorFlow, a leading image classification software, used a photograph of pioneering computer scientist and U.S. Navy Rear Admiral Grace Hopper as a test image. Another article on advances in compressed sensing used a photo of model Fabio Lanzoni—as in long-haired, shirtless, just-first-name Fabio of 1990s romance novel cover fame—with an eye toward flipping the gender of the objectified test image.

What We Owe the Future: A Million-Year View

by

William MacAskill

Published 31 Aug 2022

Chen et al. 2020; Dosovitskiy et al. 2021), and video (Wang et al. 2021). The highest-profile AI achievements in real-time strategy games were DeepMind’s AlphaStar defeat of human grandmasters in the game StarCraft II and the OpenAI Five’s defeat of human world champions in Dota 2 (OpenAI et al. 2019; Vinyals et al. 2019). Early successes in image classification (see, e.g., Krizhevsky et al. 2012) are widely seen as having been key for demonstrating the potential of deep learning. See also the following: speech recognition, Abdel-Hamid et al. (2014); Ravanelli et al. (2019); music, Briot et al. (2020); Choi et al. (2018); Magenta (n.d.); visual art, Gatys et al. (2016); Lecoutre et al. (2017).

The Technology Trap: Capital, Labor, and Power in the Age of Automation

by

Carl Benedikt Frey

Published 17 Jun 2019

According to Cisco, worldwide internet traffic will increase nearly threefold over the next five years, reaching 3.3 zettabytes per year by 2021.⁸ To put this number in perspective, researchers at the University of California, Berkeley estimate that the information contained in all books worldwide is around 480 terabytes, while a text transcript of all the words ever spoken by humans would amount to some five exabytes.⁹ Data can justly be regarded as the new oil. As big data gets bigger, algorithms get better. When we expose them to more examples, they improve their performance in translation, speech recognition, image classification, and many other tasks. For example, an ever-larger corpus of digitalized human-translated text means that we are able to better judge the accuracy of algorithmic translators in reproducing observed human translations. Every United Nations report, which is always translated by humans into six languages, gives machine translators more examples to learn from.¹⁰ And as the supply of data expands, computers do better.

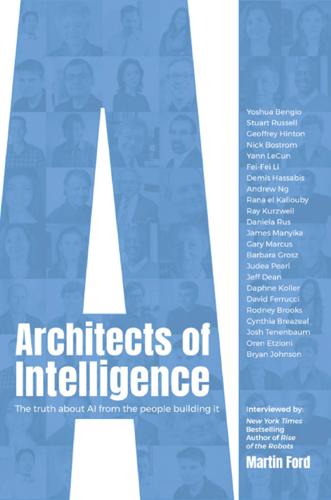

Architects of Intelligence

by

Martin Ford

Published 16 Nov 2018

This could be a new project or application that yields new insights into a societal challenge or proposes a radical solution or leads to the development of a breakthrough technology. This could be in healthcare, climate science, humanitarian crises or in discovering new materials. This is another area that my colleagues and I are researching where it’s clear that AI techniques from image classification to natural language processing and object identification can make a big contribution in many of these domains. Having said all of that, if you say AI is good for business, good for economic growth, and helps tackle societal moonshots, then the big question is—what about work? I think this is a much more mixed and complicated story.