The Ethical Algorithm: The Science of Socially Aware Algorithm Design

by

Michael Kearns

and

Aaron Roth

Published 3 Oct 2019

In this way we turn playing backgammon into a machine learning problem, with the requisite data being provided by simulated self-play. This simple but brilliant idea was first successfully applied by Gerry Tesauro of IBM Research in 1992, whose self-trained TD-Gammon program achieved a level of play comparable to the best humans in the world. (The “TD” stands for “temporal difference,” a technical term referring to the complication that in games such as backgammon, feedback is delayed: you do not receive feedback about whether each individual move was good or bad, only whether you won the entire game or not.) In the intervening decades, simulated self-play has proven to be a powerful algorithmic technique for designing champion-level programs for a variety of games, including quite recently for Atari video games, and for the notoriously difficult and ancient game of Go.
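The core loop of learning from simulated self-play can be sketched on a game far smaller than backgammon. This is a hypothetical toy, not TD-Gammon. TD-Gammon trained a neural network, whereas this sketch keeps a table of values for a made-up subtraction game: players take 1 to 3 stones, and whoever takes the last stone wins. One table chooses moves for both sides, and each position it visits is nudged toward the value of its best successor. The names `self_play_train` and `best_backup` are illustrative assumptions, as are the learning rate, the exploration rate, and the seed.

```rust
// Toy self-play learner for a hypothetical subtraction game: players alternately
// take 1 to 3 stones from a pile, and whoever takes the last stone wins.
const MAX_PILE: usize = 12;
const MAX_TAKE: usize = 3;

/// Minimal xorshift64 generator so the sketch needs no external crates.
struct XorShift(u64);

impl XorShift {
    fn next(&mut self) -> u64 {
        let mut x = self.0;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.0 = x;
        x
    }

    /// Roughly uniform integer in 0..n (modulo bias is acceptable for a toy).
    fn below(&mut self, n: usize) -> usize {
        (self.next() % n as u64) as usize
    }
}

/// Best move and its backed-up value from the mover's point of view.
/// Negamax convention: my value after taking `take` is minus the opponent's value.
fn best_backup(v: &[f64], pile: usize) -> (usize, f64) {
    let mut best = (1, f64::NEG_INFINITY);
    for take in 1..=MAX_TAKE.min(pile) {
        let score = -v[pile - take];
        if score > best.1 {
            best = (take, score);
        }
    }
    best
}

/// One value table plays both sides (the self-play part); every position it
/// reaches is nudged toward the value of its best successor.
fn self_play_train(episodes: usize, alpha: f64, epsilon: f64, seed: u64) -> Vec<f64> {
    let mut v = vec![0.0; MAX_PILE + 1];
    v[0] = -1.0; // facing an empty pile: the opponent took the last stone
    let mut rng = XorShift(seed);
    for _ in 0..episodes {
        let mut pile = 1 + rng.below(MAX_PILE);
        while pile > 0 {
            let (greedy, target) = best_backup(&v, pile);
            v[pile] += alpha * (target - v[pile]);
            // Epsilon-greedy: occasionally play a random legal move to keep exploring.
            let explore = (rng.below(1_000) as f64) < epsilon * 1_000.0;
            let take = if explore { 1 + rng.below(MAX_TAKE.min(pile)) } else { greedy };
            pile -= take;
        }
    }
    v
}

fn main() {
    let v = self_play_train(5_000, 0.5, 0.2, 0x9E37_79B9_7F4A_7C15);
    for pile in 1..=MAX_PILE {
        let (take, _) = best_backup(&v, pile);
        println!("pile {:2}: value {:+.2}, greedy move: take {}", pile, v[pile], take);
    }
}
```

Because the same table plays both sides, every game yields training data for both players. After training, the greedy move should leave the opponent a multiple of four stones, which is the known winning strategy for this game.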

…

It might seem natural that internal self-play is an effective design principle if your goal is to actually create a good game-playing program. A more surprising recent development is the use of self-play in algorithms whose outward goal has nothing to do with games at all. Consider the challenge of designing a computer program that can generate realistic but synthetic images of cats. (We’ll return shortly to the question of why one might be interested in this goal, short of simply being a cat fanatic.)

…

A rather different and more recent use of game theory is for the internal design of algorithms, rather than for managing the preferences of an external population of users. In these settings, there is no actual human game, such as commuting, that the algorithm is helping to solve. Rather, a game is played in the “mind” of the algorithm, for its own purposes. An early example of this idea is self-play in machine learning for board games. Consider the problem of designing the best backgammon-playing computer program that you can. One approach would be to think very hard about backgammon strategy, probabilities, and the like, and hand-code rules dictating which move to make in any given board configuration.

Rust Programming by Example

by

Guillaume Gomez

and

Antoni Boucher

Published 11 Jan 2018

{
    #[name="window"]
    gtk::Window {
        title: "Rusic",
        gtk::Box {
            orientation: Vertical,
            #[name="toolbar"]
            gtk::Toolbar {
                // …
                gtk::ToolButton {
                    icon_widget: &new_icon("gtk-media-previous"),
                    clicked => playlist@PreviousSong,
                },
                // …
                gtk::ToolButton {
                    icon_widget: &new_icon("gtk-media-next"),
                    clicked => playlist@NextSong,
                },
            },
            // …
        },
        delete_event(_, _) => (Quit, Inhibit(false)),
    }
}

We'll handle these messages in the Playlist::update() method:

fn update(&mut self, event: Msg) {
    match event {
        AddSong(path) => self.add(&path),
        LoadSong(path) => self.load(&path),
        NextSong => self.next(),
        PauseSong => (),
        PlaySong => self.play(),
        PreviousSong => self.previous(),
        RemoveSong => self.remove_selection(),
        SaveSong(path) => self.save(&path),
        // To be listened by App.
        SongStarted(_) => (),
        StopSong => self.stop(),
    }
}

This requires some new methods:

fn next(&mut self) {
    let selection = self.treeview.get_selection();
    let next_iter = if let Some((_, iter)) = selection.get_selected() {
        if !self.model.model.iter_next(&iter) {
            return;
        }
        Some(iter)
    } else {
        self.model.model.get_iter_first()
    };
    if let Some(ref iter) = next_iter {
        selection.select_iter(iter);
        self.play();
    }
}

fn previous(&mut self) {
    let selection = self.treeview.get_selection();
    let previous_iter = if let Some((_, iter)) = selection.get_selected() {
        if !

…

Then, we update the stopped field of the application state because the click handler for the play button will use it to decide whether we want to play or resume the music. We also call set_playing() to indicate to the player thread whether it needs to continue playing the song or not. This method is defined as follows:

fn set_playing(&self, playing: bool) {
    *self.event_loop.playing.lock().unwrap() = playing;
    let (ref lock, ref condition_variable) = *self.event_loop.condition_variable;
    let mut started = lock.lock().unwrap();
    *started = playing;
    if playing {
        condition_variable.notify_one();
    }
}

It sets the playing variable and then notifies the player thread to wake it up if playing is true.
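The wake-up pattern behind set_playing() can be tried with the standard library alone. The sketch below is a simplification under stated assumptions: it folds the book's separate `playing` flag and its condition-variable pair into a single `Mutex<bool>` paired with a `Condvar`, and `PlayGate` and `wait_until_playing` are hypothetical names, not code from the book. The paused player thread sleeps in `Condvar::wait_while`, which re-checks the flag under the lock, so a spurious wake-up or a notification sent before the thread starts waiting does no harm.

```rust
use std::sync::{Arc, Condvar, Mutex};
use std::thread;
use std::time::Duration;

/// Hypothetical shared state: a "should be playing" flag behind a mutex,
/// paired with a condition variable the paused thread can sleep on.
type PlayGate = Arc<(Mutex<bool>, Condvar)>;

/// Mirrors the idea of set_playing(): update the flag under the lock and
/// wake the player thread only when playback should resume.
fn set_playing(gate: &PlayGate, playing: bool) {
    let (lock, condvar) = &**gate;
    let mut started = lock.lock().unwrap();
    *started = playing;
    if playing {
        condvar.notify_one();
    }
}

/// Blocks the calling (player) thread until the flag becomes true.
/// `wait_while` re-checks the condition after every wake-up.
fn wait_until_playing(gate: &PlayGate) {
    let (lock, condvar) = &**gate;
    let guard = lock.lock().unwrap();
    let _guard = condvar.wait_while(guard, |playing| !*playing).unwrap();
}

fn main() {
    let gate: PlayGate = Arc::new((Mutex::new(false), Condvar::new()));
    let player_gate = Arc::clone(&gate);
    let player = thread::spawn(move || {
        wait_until_playing(&player_gate);
        println!("player thread: resuming playback");
    });
    thread::sleep(Duration::from_millis(50)); // the player thread stays paused meanwhile
    set_playing(&gate, true);
    player.join().unwrap();
}
```

Waiting on a condition variable, rather than polling the flag in a loop, lets the paused thread consume no CPU until it is notified.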

…

We first need to create a new method in the Playlist:

pub fn next(&self) -> bool {
    let selection = self.treeview.get_selection();
    let next_iter = if let Some((_, iter)) = selection.get_selected() {
        if !self.model.iter_next(&iter) {
            return false;
        }
        Some(iter)
    } else {
        self.model.get_iter_first()
    };
    if let Some(ref iter) = next_iter {
        selection.select_iter(iter);
        self.play();
    }
    next_iter.is_some()
}

We start by getting the selection. Then we check whether an item is selected: in this case, we try to get the item after the selection. Otherwise, we get the first item on the list. Then, if we were able to get an item, we select it and start playing the song. We return whether we changed the selection or not.
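The control flow of next() can be exercised without GTK. In this sketch a hypothetical `Playlist` keeps the selection as an `Option<usize>` index into a `Vec<String>`, standing in for the tree view's selection and `TreeIter`, and `play()` merely records the index. It illustrates the logic described in the text; it is not the book's implementation.

```rust
/// Hypothetical, GTK-free stand-in for the playlist: song paths, the index of
/// the selected row, and the index of the song currently playing.
struct Playlist {
    songs: Vec<String>,
    selected: Option<usize>,
    now_playing: Option<usize>,
}

impl Playlist {
    fn new(songs: &[&str]) -> Self {
        Playlist {
            songs: songs.iter().map(|s| s.to_string()).collect(),
            selected: None,
            now_playing: None,
        }
    }

    /// Same control flow as next() in the text: advance an existing selection,
    /// otherwise fall back to the first row; return whether anything changed.
    fn next(&mut self) -> bool {
        let next_index = match self.selected {
            // Like iter_next() failing on the last row: leave everything as is.
            Some(i) if i + 1 >= self.songs.len() => return false,
            Some(i) => Some(i + 1),
            // Like get_iter_first(), which finds nothing in an empty list.
            None if self.songs.is_empty() => None,
            None => Some(0),
        };
        if let Some(i) = next_index {
            self.selected = Some(i);
            self.play();
        }
        next_index.is_some()
    }

    /// Placeholder for starting playback of the selected song.
    fn play(&mut self) {
        self.now_playing = self.selected;
    }
}

fn main() {
    let mut playlist = Playlist::new(&["intro.mp3", "outro.mp3"]);
    while playlist.next() {
        let i = playlist.now_playing.unwrap();
        println!("playing {}", playlist.songs[i]);
    }
}
```

The `while playlist.next()` loop in `main` relies on the returned bool: it stops as soon as the selection can no longer advance.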

The Ages of Globalization

by

Jeffrey D. Sachs

Published 2 Jun 2020

Then, to make matters even more dramatic, AlphaGo was decisively defeated by a next-generation AI system that learned Go from scratch in self-play over a few hours. Once again, hundreds of years of expert study and competition could be surpassed in a few hours of learning through self-play. The advent of learning through self-play, sometimes called “tabula rasa” or blank-slate learning, is mind-boggling. In tabula-rasa learning, the AI system is trained to play against itself, for example in millions of games of chess, with the weights of the neural networks updated depending on the wins and losses in self-play. Starting from no information whatsoever other than the rules of chess, the AI system plays against itself in millions of chess games and uses the results to update the neural-network weights in order to learn chess-playing skills.

…

With the vast increases in computational capacity and speed of computers represented by Moore’s law, artificial intelligence systems are now being built with hundreds of layers of digital neurons and very high-dimensional digital inputs and outputs. With sufficiently large “training sets” of data or ingenious designs of self-play described below, neural networks are achieving superhuman skills on a rapidly expanding array of challenges, from board games like Chess and Go, to interpersonal games such as poker, to sophisticated language operations such as real-time translation, and to professional medical skills such as complex diagnostics.

…

Remarkably, in just four hours of self-play, an advanced computer AI system developed by the company DeepMind learned all of the skills needed to handily defeat the world’s best human chess players as well as the previous AI world-champion chess player!3 A few hours of blank-slate learning bested 600 years of learning of chess play by all of the chess experts in history.

Technological Advances and the End of Poverty

In 2006, I published a book titled The End of Poverty in which I suggested that the end of extreme poverty was within the reach of our generation, indeed by 2025, if we made increased global efforts to help the poor.4 I had in mind special efforts to bolster health, education, and infrastructure for the world’s poorest people, notably in sub-Saharan Africa and South Asia, home to most of the world’s extreme poverty.

Four Battlegrounds

by

Paul Scharre

Published 18 Jan 2023

For DeepMind’s next version, AlphaZero, three different versions of the same algorithm were trained to reach superhuman performance through self-play in chess (44 million self-play games), go (21 million self-play games), and the Japanese strategy game shogi (24 million self-play games). For each type of game, 5,000 AI-specialized computer chips were used to generate the simulated games, allowing compute to effectively act as a substitute for real-world data. Strategy games are a special case since they can be perfectly simulated, while the complexity of the real world oftentimes cannot, but synthetic data can help augment datasets when real-world data may be limited.

…

AlphaGo then refined its performance to superhuman levels through self-play, a form of training on synthetic data in which the computer plays against itself. An updated version, AlphaGo Zero, released the following year, reached superhuman performance without any human training data at all, playing 4.9 million games against itself. AlphaGo Zero was able to entirely replace human-generated data with synthetic data. (This also had the benefit of allowing the algorithm to learn to play go without adopting any biases from human players.) A subsequent version of AlphaGo Zero was trained on 29 million games of self-play. For DeepMind’s next version, AlphaZero, three different versions of the same algorithm were trained to reach superhuman performance through self-play in chess (44 million self-play games), go (21 million self-play games), and the Japanese strategy game shogi (24 million self-play games).

…

“It splits its bets into three, four, five different sizes,” Daniel McAulay (who lost to Libratus) told Wired magazine. “No human has the ability to do that.” Chess grandmasters have pored over the moves of the chess-playing AI agent AlphaZero to analyze its style. AlphaZero learned to play chess entirely through self-play without any data from human games and has adopted a unique playing style. AlphaZero focuses its energies on attacking the opponent’s king, resulting in “ferocious, unexpected attacks,” according to experts who have studied its play. AlphaZero is willing to sacrifice material for positional advantage and strongly favors optionality—moves that give it more options in the future.

The Deep Learning Revolution (The MIT Press)

by

Terrence J. Sejnowski

Published 27 Sep 2018

Watson Research Center in Yorktown Heights, New York, Gerald Tesauro worked with me when he was at the Center for Complex Systems Research at the University of Illinois in Urbana-Champaign on the problem of teaching a neural network to play backgammon (figure 10.1).2 Our approach used expert supervision to train networks with backprop to evaluate game positions and possible moves. The flaw in this approach was that the program could never get better than our experts, who were not at world-championship level. But, with self-play, it might be possible to do better. The problem with self-play at that time was that the only learning signal was win or lose at the end of the game. But when one side won, which of the many moves were responsible? This is called the “temporal credit assignment problem.” A learning algorithm that can solve this temporal credit assignment problem was invented in 1988 by Richard Sutton,3 who had been working closely with Andrew Barto, his doctoral advisor, at the University of Massachusetts at Amherst, on difficult problems in reinforcement learning, a branch of machine learning inspired by associative learning in animal experiments (figure 10.2).
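The temporal credit assignment problem can be seen in miniature with tabular TD(0), the simplest member of the temporal-difference family. This sketch uses the five-state random walk that Sutton and Barto's textbook uses to illustrate TD learning, not Tesauro's backgammon network: only the final step can earn a reward, yet each update passes a little of that outcome back to the state that preceded it. The step size, episode count, seed, and function names are assumptions chosen for illustration.

```rust
/// Minimal xorshift64 generator so the sketch needs no external crates.
struct XorShift(u64);

impl XorShift {
    fn next(&mut self) -> u64 {
        let mut x = self.0;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.0 = x;
        x
    }

    /// Fair coin flip taken from the top bit.
    fn coin(&mut self) -> bool {
        self.next() >> 63 == 1
    }
}

/// Five-state random walk: states 1..=5 (A..E) are non-terminal, 0 and 6 are
/// terminal. Only stepping off the right end (into 6) pays reward 1, so no
/// earlier step gets direct feedback: credit assignment in miniature.
fn td0_random_walk(episodes: usize, alpha: f64, seed: u64) -> [f64; 7] {
    let mut v = [0.5; 7];
    v[0] = 0.0; // terminal states keep value 0
    v[6] = 0.0;
    let mut rng = XorShift(seed);
    for _ in 0..episodes {
        let mut s = 3; // every episode starts in the middle state C
        while s != 0 && s != 6 {
            let next = if rng.coin() { s + 1 } else { s - 1 };
            let reward = if next == 6 { 1.0 } else { 0.0 };
            // TD(0): move V(s) toward the one-step target r + V(s'), so the
            // final reward leaks back to earlier states over many episodes.
            v[s] += alpha * (reward + v[next] - v[s]);
            s = next;
        }
    }
    v
}

fn main() {
    let v = td0_random_walk(20_000, 0.005, 0x2545_F491_4F6C_DD1D);
    for (i, name) in ["A", "B", "C", "D", "E"].iter().enumerate() {
        let s = i + 1;
        println!("V({}) = {:.3}   (exact {:.3})", name, v[s], s as f64 / 6.0);
    }
}
```

With a small constant step size the estimates hover near the exact values 1/6 through 5/6; they never settle exactly, because each update follows a single sampled transition.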

…

Robertie won most of the games but was surprised to lose several well-played ones and declared it the best backgammon program he had ever played. Some of TD-Gammon’s unusual moves he had never seen before; on closer examination, these proved to be improvements on human play overall. Robertie returned when the program had reached 1.5 million self-played games and was astonished when TD-Gammon played him to a draw. It had gotten so much better that he felt it had achieved human-championship level. One backgammon expert, Kit Woolsey, found that TD-Gammon’s positional judgment on whether to play “safe” (low risk/reward) or play “bold” (high risk/reward) was at that time better than that of any human he had seen.

…

Hassabis, “Mastering the Game of Go Without Human Knowledge,” Nature 550 (2017): 354–359. 36. David Silver, Thomas Hubert, Julian Schrittwieser, Ioannis Antonoglou, Matthew Lai, Arthur Guez, Marc Lanctot, Laurent Sifre, Dharshan Kumaran, Thore Graepel, Timothy Lillicrap, Karen Simonyan, Demis Hassabis, “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm,” arXiv:1712.01815 (2017). 37. Howard Gardner, Frames of Mind: The Theory of Multiple Intelligences, 3rd ed. (New York: Basic Books, 2011). 38. J. R. Flynn, “Massive IQ Gains in 14 Nations: What IQ Tests Really Measure,” Psychological Bulletin 101, no. 2 (1987): 171–191.

Artificial Intelligence: A Guide for Thinking Humans

by

Melanie Mitchell

Published 14 Oct 2019

This was the first of several times IBM saw its stock price increase after a demonstration of a game-playing program beating humans; as a more recent example, IBM’s stock price similarly rose after the widely viewed TV broadcasts in which its Watson program won in the game show Jeopardy! While Samuel’s checkers player was an important milestone in AI history, I made this historical digression primarily to introduce three all-important concepts that it illustrates: the game tree, the evaluation function, and learning by self-play.

Deep Blue

Although Samuel’s “tricky but beatable” checkers program was remarkable, especially for its time, it hardly challenged people’s idea of themselves as uniquely intelligent. Even if a machine could win against human checkers champions (as one finally did in 1994¹³), mastering the game of checkers was never seen as a proxy for general intelligence.

…

In the popular media, it was Deep Blue versus Kasparov all over again, with an endless supply of think pieces on what AlphaGo’s triumph meant for the future of humanity. But this was even more significant than Deep Blue’s win: AI had surmounted an even greater challenge than chess and had done so in a much more impressive fashion. Unlike Deep Blue, AlphaGo acquired its abilities by reinforcement learning via self-play. Demis Hassabis noted that “the thing that separates out top Go players [is] their intuition” and that “what we’ve done with AlphaGo is to introduce with neural networks this aspect of intuition, if you want to call it that.”26

How AlphaGo Works

There have been several different versions of AlphaGo, so to keep them straight, DeepMind started naming them after the human Go champions the programs had defeated—AlphaGo Fan and AlphaGo Lee—which to me evoked the image of the skulls of vanquished enemies in the collection of a digital Viking.

…

It’s certainly true that the deep Q-learning method used in AlphaGo can be used to learn other tasks, but the system itself would have to be wholly retrained; it would have to start essentially from scratch in learning a new skill. This brings us back to the “easy things are hard” paradox of AI. AlphaGo was a great achievement for AI; learning largely via self-play, it was able to definitively defeat one of the world’s best human players in a game that is considered a paragon of intellectual prowess. But AlphaGo does not exhibit human-level intelligence as we generally define it, or even arguably any real intelligence. For humans, a crucial part of intelligence is, rather than being able to learn any particular skill, being able to learn to think and to then apply our thinking flexibly to whatever situations or challenges we encounter.

Human Compatible: Artificial Intelligence and the Problem of Control

by

Stuart Russell

Published 7 Oct 2019

The program learned essentially from scratch, by playing against itself and observing the rewards of winning and losing.60 In 1992, Gerry Tesauro applied the same idea to the game of backgammon, achieving world-champion-level play after 1,500,000 games.61 Beginning in 2016, DeepMind’s AlphaGo and its descendants used reinforcement learning and self-play to defeat the best human players at Go, chess, and shogi. Reinforcement learning algorithms can also learn how to select actions based on raw perceptual input. For example, DeepMind’s DQN system learned to play forty-nine different Atari video games entirely from scratch—including Pong, Freeway, and Space Invaders.62 It used only the screen pixels as input and the game score as a reward signal.

…

Indeed, its behavior might be identical to that of a machine that just wants to give its opponent a really exciting game. So, saying that AlphaGo “has the purpose of winning” is an oversimplification. A better description would be that AlphaGo is the result of an imperfect training process—reinforcement learning with self-play—for which winning was the reward. The training process is imperfect in the sense that it cannot produce a perfect Go player: AlphaGo learns an evaluation function for Go positions that is good but not perfect, and it combines that with a lookahead search that is good but not perfect. The upshot of all this is that discussions beginning with “suppose that robot R has purpose P” are fine for gaining some intuition about how things might unfold, but they cannot lead to theorems about real machines.
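The interplay described here, an imperfect evaluation function combined with a limited lookahead search, can be sketched with depth-limited negamax (a compact formulation of minimax). The game is a hypothetical toy (take 1 to 3 stones; whoever takes the last stone wins), and both evaluation functions are invented for illustration. AlphaGo's actual search is Monte Carlo tree search guided by neural networks, which this sketch does not attempt to reproduce.

```rust
/// Hypothetical toy game for illustration: players alternately take 1 to 3
/// stones, and whoever takes the last stone wins.
const MAX_TAKE: u32 = 3;

/// Depth-limited negamax over the game tree. Returns the value for the player
/// to move: exact (+1 win, -1 loss) when the search reaches the end of the
/// game, otherwise whatever the evaluation function guesses at the cutoff.
fn negamax(pile: u32, depth: u32, eval: &dyn Fn(u32) -> f64) -> f64 {
    if pile == 0 {
        return -1.0; // the opponent just took the last stone
    }
    if depth == 0 {
        return eval(pile); // lookahead budget exhausted: trust the evaluation
    }
    (1..=MAX_TAKE.min(pile))
        .map(|take| -negamax(pile - take, depth - 1, eval))
        .fold(f64::NEG_INFINITY, f64::max)
}

/// Move chosen by a `depth`-ply lookahead (depth must be at least 1).
fn best_move(pile: u32, depth: u32, eval: &dyn Fn(u32) -> f64) -> u32 {
    let mut best = (1, f64::NEG_INFINITY);
    for take in 1..=MAX_TAKE.min(pile) {
        let score = -negamax(pile - take, depth - 1, eval);
        if score > best.1 {
            best = (take, score);
        }
    }
    best.0
}

fn main() {
    // An evaluation function with no knowledge, and one that knows the game.
    let clueless = |_pile: u32| -> f64 { 0.0 };
    let informed = |pile: u32| -> f64 { if pile % 4 == 0 { -1.0 } else { 1.0 } };
    println!("deep search, clueless eval:    take {}", best_move(7, 7, &clueless));
    println!("shallow search, clueless eval: take {}", best_move(7, 1, &clueless));
    println!("shallow search, informed eval: take {}", best_move(7, 1, &informed));
}
```

A full-depth search finds the winning move from seven stones (take 3, leaving four) with no knowledge at all; a one-ply search finds it only when the evaluation function already knows which positions are good. Real games are far too large for exhaustive search, which is why the quality of a learned evaluation function matters.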

…

The numbers go down as restrictions are imposed on how many units or groups of units can be moved at once. 48. On human–machine competition in StarCraft: Tom Simonite, “DeepMind beats pros at StarCraft in another triumph for bots,” Wired, January 25, 2019. 49. AlphaZero is described by David Silver et al., “Mastering chess and shogi by self-play with a general reinforcement learning algorithm,” arXiv:1712.01815 (2017). 50. Optimal paths in graphs are found using the A* algorithm and its many descendants: Peter Hart, Nils Nilsson, and Bertram Raphael, “A formal basis for the heuristic determination of minimum cost paths,” IEEE Transactions on Systems Science and Cybernetics SSC-4 (1968): 100–107. 51.

The Alignment Problem: Machine Learning and Human Values

by

Brian Christian

Published 5 Oct 2020

No sooner had AlphaGo reached the pinnacle of the game of Go, however, than it was, in 2017, summarily dethroned, by an even stronger program called AlphaGo Zero.86 The biggest difference between the original AlphaGo and AlphaGo Zero was in how much human data the latter had been fed to imitate: zero. From a completely random initialization, tabula rasa, it simply learned by playing against itself, again and again and again and again. Incredibly, after just thirty-six hours of self-play, it was as good as the original AlphaGo, which had beaten Lee Sedol. After seventy-two hours, the DeepMind team set up a match between the two, using the exact same two-hour time controls and the exact version of the original AlphaGo system that had beaten Lee. AlphaGo Zero, which consumed a tenth of the power of the original system, and which seventy-two hours earlier had never played a single game, won the hundred-game series—100 games to 0.

…

Fürnkranz and Kubat, Machines That Learn to Play Games. 84. There are, of course, many subtle differences between the architecture and training procedure for Deep Blue and those for AlphaGo. For more details on AlphaGo, see Silver et al., “Mastering the Game of Go with Deep Neural Networks and Tree Search.” 85. AlphaGo’s value network was derived from self-play, but its policy network was imitative, trained through supervised learning on a database of human expert games. Roughly speaking, it was conventional in the moves it considered, but thought for itself when deciding which of them was best. See Silver et al., “Mastering the Game of Go with Deep Neural Networks and Tree Search.” 86.

…

In 2018, AlphaGo Zero was further refined into an even stronger program—and a more general one, capable of record-breaking strength in not just Go but chess and shogi—called AlphaZero. For more detail about AlphaZero, see Silver et al., “A General Reinforcement Learning Algorithm That Masters Chess, Shogi, and Go Through Self-Play.” In 2019, a subsequent iteration of the system called MuZero matched this level of performance with less computation and less advance knowledge of the rules of the game, while proving flexible enough to excel at not just board games but Atari games as well; see Schrittwieser et al., “Mastering Atari, Go, Chess and Shogi by Planning with a Learned Model.” 87.

Artificial Intelligence: A Modern Approach

by

Stuart Russell

and

Peter Norvig

Published 14 Jul 2019

But this year, it became like a god of Go.” ALPHAGO benefited from studying hundreds of thousands of past games by human Go players, and from the distilled knowledge of expert Go players that worked on the team. A followup program, ALPHAZERO, used no input from humans (except for the rules of the game), and was able to learn through self-play alone to defeat all opponents, human and machine, at Go, chess, and shogi (Silver et al., 2018). Meanwhile, human champions have been beaten by AI systems at games as diverse as Jeopardy! (Ferrucci et al., 2010), poker (Bowling et al., 2015; Moravčík et al., 2017; Brown and Sandholm, 2019), and the video games Dota 2 (Fernandez and Mahlmann, 2018), StarCraft II (Vinyals et al., 2019), and Quake III (Jaderberg et al., 2019).

…

For some simple games, that happens to be the same answer as “what is the best move if both players play well?,” but for most games it is not. To get useful information from the playout we need a playout policy that biases the moves towards good ones. For Go and other games, playout policies have been successfully learned from self-play by using neural networks. Sometimes game-specific heuristics are used, such as “consider capture moves” in chess or “take the corner square” in Othello. Given a playout policy, we next need to decide two things: from what positions do we start the playouts, and how many playouts do we allocate to each position?

…

There is a theoretical argument that C should be √2, but in practice, game programmers try multiple values for C and choose the one that performs best. (Some programs use slightly different formulas; for example, ALPHAZERO adds in a term for move probability, which is calculated by a neural network trained from past self-play.) With C = 1.4, the 60/79 node in Figure 6.10 has the highest UCB1 score, but with C = 1.5, it would be the 2/11 node. Figure 6.11 shows the complete UCT MCTS algorithm. When the iterations terminate, the move with the highest number of playouts is returned. You might think that it would be better to return the node with the highest average utility, but the idea is that a node with 65/100 wins is better than one with 2/3 wins, because the latter has a lot of uncertainty.
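The UCB1 comparison can be checked numerically. The sketch computes U/N + C·sqrt(ln N(parent) / N) for each child. The 60/79 and 2/11 statistics come from the text; the third child (1/10) and the parent total of 100 playouts are assumptions made for illustration, chosen so that the children's playouts add up. With these assumptions, the selected child switches from 60/79 at C = 1.4 to 2/11 at C = 1.5. `most_played` implements the final rule of returning the move with the most playouts.

```rust
/// UCB1 score of one child: average utility U/N plus an exploration bonus
/// C * sqrt(ln N(parent) / N).
fn ucb1(wins: f64, playouts: f64, parent_playouts: f64, c: f64) -> f64 {
    wins / playouts + c * (parent_playouts.ln() / playouts).sqrt()
}

/// Selection step: index of the child with the highest UCB1 score.
/// Each child is a (wins, playouts) pair.
fn select_child(children: &[(f64, f64)], parent_playouts: f64, c: f64) -> usize {
    let score = |&(w, n): &(f64, f64)| ucb1(w, n, parent_playouts, c);
    let mut best = 0;
    for (i, child) in children.iter().enumerate() {
        if score(child) > score(&children[best]) {
            best = i;
        }
    }
    best
}

/// Final decision after the iterations end: the most-played child, not the
/// child with the best average.
fn most_played(children: &[(f64, f64)]) -> usize {
    let mut best = 0;
    for (i, &(_, n)) in children.iter().enumerate() {
        if n > children[best].1 {
            best = i;
        }
    }
    best
}

fn main() {
    // 60/79 and 2/11 come from the text; the 1/10 sibling and the parent
    // total of 100 playouts are illustrative assumptions.
    let children = [(60.0, 79.0), (1.0, 10.0), (2.0, 11.0)];
    for c in [1.4, std::f64::consts::SQRT_2, 1.5] {
        let pick = select_child(&children, 100.0, c);
        println!("C = {:.3}: select {}/{}", c, children[pick].0, children[pick].1);
    }
    println!("final move: child {}", most_played(&[(65.0, 100.0), (2.0, 3.0)]));
}
```

Note that 2/3 has the higher raw average (about 0.67 versus 0.65), which is exactly why the final choice uses playout counts instead.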

Army of None: Autonomous Weapons and the Future of War

by

Paul Scharre

Published 23 Apr 2018

Within a mere four hours of self-play and with no training data, AlphaZero eclipsed the previous top chess program. The method behind AlphaZero, deep reinforcement learning, appears to be so powerful that it is unlikely that humans can add any value as members of a “centaur” human-machine team for these games. Tyler Cowen, “The Age of the Centaur Is *Over* Skynet Goes Live,” MarginalRevolution.com, December 7, 2017, http://marginalrevolution.com/marginalrevolution/2017/12/the-age-of-the-centaur-is-over.html. David Silver et al., “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm,” December 5, 2017, https://arxiv.org/pdf/1712.01815.pdf. 325 as computers advance: Cowen, “What Are Humans Still Good For?”

…

They simply fed a neural network massive amounts of data and let it learn all on its own, and some of the things it learned were surprising. In 2017, DeepMind surpassed their earlier success with a new version of AlphaGo. With an updated algorithm, AlphaGo Zero learned to play go without any human data to start. With only access to the board and the rules of the game, AlphaGo Zero taught itself to play. Within a mere three days of self-play, AlphaGo Zero had eclipsed the previous version that had beaten Lee Sedol, defeating it 100 games to 0. These deep learning techniques can solve a variety of other problems. In 2015, even before DeepMind debuted AlphaGo, DeepMind trained a neural network to play Atari games. Given only the pixels on the screen and the game score as input and told to maximize the score, the neural network was able to learn to play Atari games at the level of a professional human video game tester.

Wonderland: How Play Made the Modern World

by

Steven Johnson

Published 15 Nov 2016

In a fitting echo of the musical innovations that preceded them, the Banu Musa even included a description of how their instrument could be embedded inside an automaton, creating the illusion that the robot musician was playing the encoded melody on a flute. Reconstruction of the Banu Musa’s self-playing music automaton The result was not just an instrument that played itself, as marvelous as that must have been. The Banu Musa were masters of automation, to be sure, but humans had been tinkering with the idea of making machines move in lifelike ways since the days of Plato. Animated peacocks, water clocks, robotic dancers—all these contraptions were engineering marvels, but they also shared a fundamental limitation.

…

This vast cycle of encoding and decoding is now as ubiquitous as electricity in our lives, and yet, like electricity, the cycle was for all practical purposes nonexistent just a hundred and fifty years ago. Not surprisingly, one of the very first technologies that introduced the coding/decoding cycle to everyday life took the form of a musical instrument, one with a direct lineage to Vaucanson and the Banu Musa: the player piano. Though its prehistory dates back to the House of Wisdom, a self-playing piano became a central focus for instrument designers in the second half of the nineteenth century; dozens of inventors from the United States and Europe contributed partial solutions to the problem of designing a machine that could mimic the feel of a human pianist. The new opportunities for expression that Cristofori’s pianoforte had introduced posed a critical challenge for automating that expression; it wasn’t enough to record the correct sequence of notes—the player piano also had to capture the loudness of each individual note, what digital music software now calls “velocity.”

The World Beyond Your Head: On Becoming an Individual in an Age of Distraction

by

Matthew B. Crawford

Published 29 Mar 2015

The freedom and dignity of this modern self depend on its being insulated from contingency—by layers of representation. As Thomas de Zengotita points out in his beautiful book Mediated, representations are addressed to us, unlike dumb nature, which just sits there. They are fundamentally flattering, placing each of us at the center of a little “me-world.”1 If the world encountered as something distinct from the self plays a crucial role for a person in achieving adult agency, then it figures that when our encounters with the world are increasingly mediated by representations that soften this boundary, this will have some effect on the kind of selves we become. To see this, consider children’s television. THE MOUSEKE-DOER In the old Mickey Mouse cartoons from the early and middle decades of the twentieth century, by far the most prominent source of hilarity is the capacity of material stuff to generate frustration, or rather demonic violence.

…

As we have seen, the dialectic between tradition and innovation allows the organ maker to understand his own inventiveness as a going further in a trajectory he has inherited. This is very different from the modern concept of creativity, which seems to be a crypto-theological concept: creation ex nihilo. For us the self plays the role of God, and every eruption of creativity is understood to be like a miniature Big Bang, coming out of nowhere. This way of understanding inventiveness cannot connect us to others, or to the past. It also falsifies the experience to which we give the name “creativity” by conceiving it to be something irrational, incommunicable, unteachable.

Ghost Work: How to Stop Silicon Valley From Building a New Global Underclass

by

Mary L. Gray

and

Siddharth Suri

Published 6 May 2019

It was also given a large database of games between human experts. That database was used with supervised learning to train the initial move selection function (the “policy function”) for AlphaGo. Then AlphaGo carried out a second phase of “self play” in which it played against a copy of itself (a technique first developed by Arthur Samuel in 1959, I believe) and applied reinforcement learning algorithms to refine the policy function. Finally, they ran additional self-play games to learn a “value function” (value network) that predicted which side would win in each board state. During game play, AlphaGo combines the value function with the policy function to select moves based on forward search (Monte Carlo tree search).
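The following sketch shows how a policy and a value estimate can work together inside tree search. It uses the PUCT selection rule in the form DeepMind published for AlphaGo Zero: pick the move maximizing Q(s, a) + c_puct · P(s, a) · sqrt(Σ_b N(s, b)) / (1 + N(s, a)), where P is the policy network's prior and Q averages the value estimates backed up through the move. This is a simplification, not AlphaGo's full algorithm, which differs in details such as how leaf positions are evaluated. `Edge`, `select_puct`, the values of `c_puct`, and all of the statistics are hypothetical.

```rust
/// Statistics kept for one move at a search-tree node (hypothetical layout).
struct Edge {
    prior: f64,     // P(s, a): the policy network's probability for the move
    visits: u32,    // N(s, a): how often the search has tried the move
    value_sum: f64, // total of the value estimates backed up through the move
}

impl Edge {
    /// Q(s, a): mean backed-up value, 0 for a move never tried.
    fn q(&self) -> f64 {
        if self.visits == 0 { 0.0 } else { self.value_sum / self.visits as f64 }
    }
}

/// PUCT-style selection: exploit Q, plus a bonus proportional to the prior
/// that shrinks as a move accumulates visits.
fn select_puct(edges: &[Edge], c_puct: f64) -> usize {
    let total: u32 = edges.iter().map(|e| e.visits).sum();
    let sqrt_total = (total as f64).sqrt();
    let score = |e: &Edge| e.q() + c_puct * e.prior * sqrt_total / (1.0 + e.visits as f64);
    let mut best = 0;
    for (i, e) in edges.iter().enumerate() {
        if score(e) > score(&edges[best]) {
            best = i;
        }
    }
    best
}

fn main() {
    // Made-up statistics for three candidate moves.
    let edges = [
        Edge { prior: 0.6, visits: 10, value_sum: 5.0 }, // well explored, Q = 0.5
        Edge { prior: 0.3, visits: 1, value_sum: 0.4 },  // barely explored, Q = 0.4
        Edge { prior: 0.1, visits: 0, value_sum: 0.0 },  // never tried
    ];
    for c_puct in [0.1, 1.0] {
        println!("c_puct = {}: explore move {}", c_puct, select_puct(&edges, c_puct));
    }
}
```

With a small c_puct the search keeps exploiting the move with the best average so far; with a larger one, the barely explored move that the policy rates reasonably highly gets the next visit.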

The Road to Conscious Machines

by

Michael Wooldridge

Published 2 Nov 2018

AlphaGo used two neural networks: the value network was solely concerned with estimating how good a given board position was, while the policy network made recommendations about which move to make, based on a current board position.14 The policy network contained 13 layers, and was trained by using supervised learning first, where the training data was examples of expert games played by humans, and then reinforcement learning, based on self-play. Finally, these two networks were embedded in a sophisticated search technique, called Monte Carlo tree search. Before the system was announced, DeepMind hired Fan Hui, a European Go champion, to play against AlphaGo: the system beat him five games to zero. This was the first time a Go program had beaten a human champion player in a full game.

…

The extraordinary thing about AlphaGo Zero is that it learned how to play to a super-human level without any human supervision at all: it just played against itself.16 To be fair, it had to play itself a lot, but nevertheless it was a striking result, and it was further generalized in another follow-up system called AlphaZero, which learned to play a range of other games, including chess: after just nine hours of self-play, AlphaZero was able to consistently beat or draw against Stockfish, one of the world’s leading dedicated chess-playing programs. Veterans from the computer chess community were astonished. The idea that AlphaZero had played itself for nine hours and taught itself to be a world-class chess player was almost beyond belief.

A World Without Work: Technology, Automation, and How We Should Respond

by

Daniel Susskind

Published 14 Jan 2020

The new machine, dubbed AlphaZero, was matched up against the champion chess computer Stockfish. Of the fifty games where AlphaZero played white, it won twenty-five and drew twenty-five; of the fifty games where it played black, it won three and drew forty-seven. David Silver, Thomas Hubert, Julian Schrittwieser, et al., “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm,” https://arxiv.org, arXiv:1712.01815v1 (2017). 7. Tyler Cowen, “The Age of the Centaur Is Over Skynet Goes Live,” Marginal Revolution, 7 December 2017. 8. See Kasparov, Deep Thinking, chap. 11. 9. Data is from Ryland Thomas and Nicholas Dimsdale, “A Millennium of UK Data,” Bank of England OBRA data set (2017).
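The win and draw counts above imply that AlphaZero lost none of the hundred games, since 25 + 25 and 3 + 47 each account for fifty. Under the usual chess scoring (1 point per win, ½ per draw, 0 per loss), a small helper makes the arithmetic explicit; `points` is only an illustrative name.

```rust
/// Chess match scoring: a win counts 1 point, a draw 1/2, a loss 0.
/// Returns (points scored, games played).
fn points(wins: u32, draws: u32, losses: u32) -> (f64, u32) {
    let games = wins + draws + losses;
    (wins as f64 + 0.5 * draws as f64, games)
}

fn main() {
    let white = points(25, 25, 0); // 25 + 25 = 50 games, so no losses
    let black = points(3, 47, 0); // 3 + 47 = 50 games, so no losses
    let total = points(25 + 3, 25 + 47, 0);
    println!("as white: {}/{}", white.0, white.1);
    println!("as black: {}/{}", black.0, black.1);
    println!("overall:  {}/{} ({:.0}%)", total.0, total.1, 100.0 * total.0 / total.1 as f64);
}
```

The totals come to 37.5/50 as white, 26.5/50 as black, and 64/100 overall.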

…

“Economic Scene; Structural Joblessness.” New York Times, 6 April 1983. Silver, David, Aja Huang, Chris Maddison, et al. “Mastering the Game of Go with Deep Neural Networks and Tree Search.” Nature 529 (2016): 484–89. Silver, David, Thomas Hubert, Julian Schrittwieser, et al. “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm.” https://arxiv.org, arXiv:1712.01815v1 (2017). Silver, David, Julian Schrittwieser, Karen Simonyan, et al. “Mastering the Game of Go Without Human Knowledge.” Nature 550 (2017): 354–59. Singh, Satinder, Andy Okun, and Andrew Jackson. “Artificial Intelligence: Learning to Play Go from Scratch.”

Deep Medicine: How Artificial Intelligence Can Make Healthcare Human Again

by

Eric Topol

Published 1 Jan 2019

To that he said, “They’re a very press hungry organization.”13 Marcus isn’t alone in the critique of AlphaGo Zero. A sharp critique by Jose Camacho Collados made several key points, including the lack of transparency (the code is not publicly available), the overreach of the authors’ claim of “completely learning from ‘self-play,’” considering the requirement for teaching the game rules and for some prior game knowledge, and the “responsibility of researchers in this area to accurately describe… our achievements and try not to contribute to the growing (often self-interested) misinformation and mystification of the field.”14 Accordingly, some of AI’s biggest achievements to date may have been glorified.

…

Silver, D., et al., “Mastering the Game of Go Without Human Knowledge.” Nature, 2017. 550(7676): pp. 354–359.
33. Singh, S., A. Okun, and A. Jackson, “Artificial Intelligence: Learning to Play Go from Scratch.” Nature, 2017. 550(7676): pp. 336–337.
34. Silver, D., et al., “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm.” arXiv, 2017.
35. Tegmark, M., “Max Tegmark on Twitter.” Twitter, 2017.
36. Bowling, M., et al., “Heads-Up Limit Hold ’Em Poker Is Solved.” Science, 2015. 347(6218): pp. 145–149.
37. Moravcik, M., et al., “DeepStack: Expert-Level Artificial Intelligence in Heads-Up No-Limit Poker.”

The Coming Wave: Technology, Power, and the Twenty-First Century's Greatest Dilemma

by

Mustafa Suleyman

Published 4 Sep 2023

Just as today’s models produce detailed images based on a few words, so in decades to come similar models will produce a novel compound or indeed an entire organism with just a few natural language prompts. That compound’s design could be improved by countless self-run trials, just as AlphaZero became an expert chess or Go player through self-play. Quantum technologies, many millions of times more powerful than the most powerful classical computers, could let this play out at a molecular level. This is what we mean by hyper-evolution—a fast, iterative platform for creation. Nor will this evolution be limited to specific, predictable, and readily containable areas.

…

But what if you had a worm that improved itself using reinforcement learning, experimentally updating its code with each network interaction, each time finding more and more efficient ways to take advantage of cyber vulnerabilities? Just as systems like AlphaGo learn unexpected strategies from millions of self-played games, so too will AI-enabled cyberattacks. However much you war-game every eventuality, there’s inevitably going to be a tiny vulnerability discoverable by a persistent AI. Everything from cars and planes to fridges and data centers relies on vast code bases. The coming AIs make it easier than ever to identify and exploit weaknesses.

The People vs Tech: How the Internet Is Killing Democracy (And How We Save It)

by

Jamie Bartlett

Published 4 Apr 2018

This stunning result was quickly surpassed when, in late 2017, DeepMind released AlphaGo Zero, software that was given the rules of the game but no human examples at all, and that learned how to win using a deep learning technique. It started off dreadfully bad but improved slightly with each game, and within 40 days of constant self-play it had become so strong that it thrashed the original AlphaGo 100–0. Go is now firmly in the category of ‘games that humans will never win against machines again’. Most people in Silicon Valley agree that machine learning is the next big thing, although some are more optimistic than others. Tesla and SpaceX boss Elon Musk recently said that AI is like ‘summoning the demon’, while others have compared its significance to the ‘scientific method, on steroids’, the invention of penicillin and even electricity.

I'm Just a Person

by

Tig Notaro

Published 13 Jun 2016

On my journey home to take my mother off life support, I thought about Ric on his wedding day about to marry my mother at my aunt and uncle’s house in Pass Christian. I imagined him somehow acquiring the knowledge that my mother would have a tragic fall in 2012, and I pictured him watching my four-year-old self playing in the yard and thinking about how, in thirty-seven years, he would have to tell this little girl in front of him that her mother wasn’t going to make it. He would have thirty-seven years to get ready to make that phone call. But could he? No one could. It was clear that as each word came out of his mouth, it stunned him.

Futureproof: 9 Rules for Humans in the Age of Automation

by

Kevin Roose

Published 9 Mar 2021

He didn’t win, but his warning of a looming AI revolution entered the zeitgeist and pushed the conversation about technological unemployment into the mainstream. Fears of job-killing machines aren’t new. In fact, they date back to roughly 350 b.c.e., when Aristotle mused that automated weavers and self-playing harps could reduce the demand for slave labor. Since then, machine-related anxieties have ebbed and flowed, often peaking during periods of rapid technological change. In 1928, The New York Times ran an article titled “March of the Machine Makes Idle Hands,” which featured experts predicting that a new invention—factory machinery that ran on electricity—would soon make manual labor obsolete.

The Age of AI: And Our Human Future

by

Henry A Kissinger

,

Eric Schmidt

and

Daniel Huttenlocher

Published 2 Nov 2021

The tactics AlphaZero deployed were unorthodox—indeed, original. It sacrificed pieces human players considered vital, including its queen. It executed moves humans had not instructed it to consider and, in many cases, humans had not considered at all. It adopted such surprising tactics because, following its self-play of many games, it predicted they would maximize its probability of winning. AlphaZero did not have a strategy in a human sense (though its style has prompted further human study of the game). Instead, it had a logic of its own, informed by its ability to recognize patterns of moves across vast sets of possibilities human minds cannot fully digest or employ.

Adult Children of Emotionally Immature Parents: How to Heal From Distant, Rejecting, or Self-Involved Parents

by

Lindsay C. Gibson

Published 31 May 2015

You have to be able to express enough of your true self to give the other person something real to relate to. Without that, the relationship is just playacting between two role-selves. Another problem with the role-self is that it doesn’t have its own source of energy. It has to steal vitality from the true self. Playing a role is much more tiring than just being yourself because it takes a huge effort to be something you are not. And because it’s made-up, the role-self is insecure and afraid of being revealed as an imposter. Playing a role-self usually doesn’t work in the long run because it can never completely hide people’s true inclinations.

WTF?: What's the Future and Why It's Up to Us

by

Tim O'Reilly

Published 9 Oct 2017

Every day, we teach the global brain new skills. DeepMind began its Go training by studying games played by humans. As its creators wrote in their January 2016 paper in Nature, “These deep neural networks are trained by a novel combination of supervised learning from human expert games, and reinforcement learning from games of self-play.” That is, the program began by observing humans playing the game, and then accelerated that learning by playing against itself millions of times, far outstripping the experience level of even the most accomplished human players. This pattern, by which algorithms are trained by humans, either explicitly or implicitly, is central to the explosion of AI-based services.

…

CHAPTER 11: OUR SKYNET MOMENT
230 The messages were powerful and personal: “We Are the 99 Percent,” tumblr.com, September 14, 2011, http://wearethe99percent.tumblr.com/page/231.
231 “AI systems must do what we want them to do”: “An Open Letter: Research Priorities for Robust and Beneficial Artificial Intelligence,” Future of Life Institute, retrieved April 1, 2017, https://futureoflife.org/ai-open-letter/.
231 “unconstrained by a need to generate financial return”: Greg Brockman, Ilya Sutskever, and OpenAI, “Introducing OpenAI,” OpenAI Blog, December 11, 2015, https://blog.openai.com/introducing-openai/.
232 best friend of one autistic boy: Judith Newman, “To Siri, with Love,” New York Times, October 17, 2014, https://www.nytimes.com/2014/10/19/fashion/how-apples-siri-became-one-autistic-boys-bff.html.
234 overpopulation on Mars: “Andrew Ng: Why ‘Deep Learning’ Is a Mandate for Humans, Not Just Machines,” Wired, May 2015, retrieved April 1, 2017, https://www.wired.com/brandlab/2015/05/andrew-ng-deep-learning-mandate-humans-not-just-machines/.
235 change how we think and how we feel: Emeran A. Mayer, Rob Knight, Sarkis K. Mazmanian, John F. Cryan, and Kirsten Tillisch, “Gut Microbes and the Brain: Paradigm Shift in Neuroscience,” Journal of Neuroscience, 34, no. 46 (2014): 15490–96, doi:10.1523/JNEUROSCI.3299-14.2014.
235 “games of self-play”: David Silver et al., “Mastering the Game of Go with Deep Neural Networks and Tree Search,” Nature 529 (2016): 484–89, doi:10.1038/nature16961.
236 “previously encountered examples”: Beau Cronin, “Untapped Opportunities in AI,” O’Reilly Ideas, June 4, 2014, https://www.oreilly.com/ideas/untapped-opportunities-in-ai.
237 “that for a computer is plenty of time”: Michael Lewis interviewed by Terry Gross, “On a ‘Rigged’ Wall Street, Milliseconds Make All the Difference,” NPR Fresh Air, April 1, 2014, http://www.npr.org/2014/04/01/297686724/on-a-rigged-wall-street-milliseconds-make-all-the-difference.
238 “Creating things that you don’t understand is really not a good idea”: Felix Salmon, “John Thain Comes Clean,” Reuters, October 7, 2009, http://blogs.reuters.com/felix-salmon/2009/10/07/john-thain-comes-clean/.
238 credit far in excess of the underlying real assets: Gary Gorton, “Shadow Banking,” The Region (Federal Reserve Bank of Minneapolis), December 2010, retrieved April 2, 2017, http://faculty.som.yale.edu/garygorton/documents/InterviewwithTheRegionFRBofMinneapolis.pdf.
239 “an existential threat to capitalism”: Mark Blyth, “Global Trumpism,” Foreign Affairs, November 15, 2016, https://www.foreignaffairs.com/articles/2016-11-15/global-trumpism.
240 “pure and unadulterated socialism”: Milton Friedman, “The Social Responsibility of Business Is to Increase Its Profits,” New York Times Magazine, September 13, 1970, retrieved April 2, 2017, http://www.colorado.edu/studentgroups/libertarians/issues/friedman-soc-resp-business.html.
241 benefit the business and its actual owners: Michael C.

Elsewhere, U.S.A: How We Got From the Company Man, Family Dinners, and the Affluent Society to the Home Office, BlackBerry Moms,and Economic Anxiety

by

Dalton Conley

Published 27 Dec 2008

That is, before you can see yourself as a self—as how others might view you—the first step is to learn that they do indeed have their own points of view. This is something that the social philosopher George Herbert Mead pointed out almost a hundred years ago. It is also something readily apparent to anyone who has watched a toddler (who has not yet fully developed a sense of the other and the self) play peek-a-boo. When he or she covers their eyes, they think that you can’t see them. Autistics, however, are stuck in this pre-social selfhood and cannot take the point of view of others so easily; in fact, when someone attempts to point out something to them, they often stare at the fingertip rather than the object at which it is aimed.

Women and Other Monsters: Building a New Mythology

by

Jess Zimmerman

Published 9 Mar 2021

But the farther you go into the House, the more its blueprint bulges at the seams. In its depths—because once you leave those first, relatively predictable buildings, it does feel as if you’re descending into the hollow earth—are objects of baffling scale, collections of dizzying breadth. There are whole orchestras of self-playing instruments: insert a token into a slot and a room full of uninhabited chairs comes to life with song. There’s a 200-foot-tall statue of a toothy sea creature fighting a squid; it fills the center of a massive building the size of an airplane hangar, and visitors circumnavigate it by walking a ramp around the room’s perimeter.

Escape From Model Land: How Mathematical Models Can Lead Us Astray and What We Can Do About It

by

Erica Thompson

Published 6 Dec 2022

, Edward Elgar, 2018
Chapter 4: The Cat that Looks Most Like a Dog
Berger, James, and Leonard Smith, ‘On the Statistical Formalism of Uncertainty Quantification’, Annual Review of Statistics and its Application, 6, 2019, pp. 433–60
Cartwright, Nancy, How the Laws of Physics Lie, Oxford University Press, 1983
Hájek, Alan, ‘The Reference Class Problem is Your Problem Too’, Synthese, 156(3), 2007
Mayo, Deborah, Statistical Inference as Severe Testing: How to Get Beyond the Statistics Wars, Cambridge University Press, 2018
Taleb, Nassim, Fooled by Randomness: The Hidden Role of Chance in Life and in the Markets, Penguin, 2007
Thompson, Erica, and Leonard Smith, ‘Escape from Model-Land’, Economics, 13(1), 2019
Chapter 5: Fiction, Prediction and Conviction
Azzolini, Monica, The Duke and the Stars, Harvard University Press, 2013
Basbøll, Thomas, Any Old Map Won’t Do; Improving the Credibility of Storytelling in Sensemaking Scholarship, Copenhagen Business School, 2012
Davies, David, ‘Learning Through Fictional Narratives in Art and Science’, in Frigg and Hunter (eds), Beyond Mimesis and Convention, 2010, pp. 52–69
Gelman, Andrew, and Thomas Basbøll, ‘When Do Stories Work? Evidence and Illustration in the Social Sciences’, Sociological Methods and Research, 43(4), 2014
Silver, David, Thomas Hubert, Julian Schrittwieser, et al., ‘A General Reinforcement Learning Algorithm That Masters Chess, Shogi, and Go Through Self-Play’, Science, 362(6419), 2018
Tuckett, David, and Milena Nikolic, ‘The Role of Conviction and Narrative in Decision-Making under Radical Uncertainty’, Theory and Psychology, 27, 2017
Chapter 6: The Accountability Gap
Birhane, Abeba, ‘The Impossibility of Automating Ambiguity’, Artificial Life, 27(1), 2021, pp. 44–61
——, Pratyusha Kalluri, Dallas Card, William Agnew, et al., ‘The Values Encoded in Machine Learning Research’, arXiv preprint arXiv:2106.15590, 2021
Pfleiderer, Paul, ‘Chameleons: The Misuse of Theoretical Models in Finance and Economics’, Economica, 87(345), 2020
Chapter 7: Masters of the Universe
Alves, Christina, and Ingrid Kvangraven, ‘Changing the Narrative: Economics after Covid-19’, Review of Agrarian Studies, 2020
Ambler, Lucy, Joe Earle, and Nicola Scott, Reclaiming Economics for Future Generations, Manchester University Press, 2022
Ayache, Elie, The Blank Swan – The End of Probability, Wiley, 2010.

Scary Smart: The Future of Artificial Intelligence and How You Can Save Our World

by

Mo Gawdat

Published 29 Sep 2021

After twenty-one days it had reached the level of AlphaGo Master, the version that defeated sixty top professionals online and beat the world champion, Ke Jie, three games to none. By day forty, AlphaGo Zero surpassed all other versions of AlphaGo and, arguably, this newly born intelligent being had already become the smartest being in existence on the task it had set out to learn. It learned all this on its own, entirely from self-play, with no human intervention and using no historical data. At the speed of AI, forty-five days is equivalent to the entire history of human evolution. If the COVID-19 global outbreak teaches us anything, it should be to recognize that we truly don’t have much time to react when things go wrong. Even more worryingly, because we are motivated by so many conflicting agendas . . . we may not even find out that things went wrong until it’s too late.

Framers: Human Advantage in an Age of Technology and Turmoil

by

Kenneth Cukier

,

Viktor Mayer-Schönberger

and

Francis de Véricourt

Published 10 May 2021

AlphaZero’s specifics on model training: David Silver et al., “A General Reinforcement Learning Algorithm That Masters Chess, Shogi and Go,” DeepMind, December 6, 2018, https://deepmind.com/blog/article/alphazero-shedding-new-light-grand-games-chess-shogi-and-go; David Silver et al., “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm,” DeepMind, December 5, 2017, https://arxiv.org/pdf/1712.01815.pdf. Note: a successor project to AlphaZero, called MuZero, can learn the rules of a board game by itself. See Julian Schrittwieser et al., “Mastering Atari, Go, Chess and Shogi by Planning with a Learned Model,” Nature 588, no. 7839 (December 23, 2020): 604–609, https://www.nature.com/articles/s41586-020-03051-4.

Seven Databases in Seven Weeks: A Guide to Modern Databases and the NoSQL Movement

by

Eric Redmond

,

Jim Wilson

and

Jim R. Wilson

Published 7 May 2012

redis/graph_sync.js

function feedBandToNeo4j(band, progress) {
  // neo4jClient must already be in scope; its setup is not part of this excerpt.
  var lookup = neo4jClient.lookupOrCreateNode,
      relate = neo4jClient.createRelationship;
  // Find or create the band node, then link each artist and each artist's roles.
  lookup('bands', 'name', band.name, function(bandNode) {
    progress.emit('progress', 'band');
    band.artists.forEach(function(artist) {
      lookup('artists', 'name', artist.name, function(artistNode) {
        progress.emit('progress', 'artist');
        relate(bandNode.self, artistNode.self, 'member', function() {
          progress.emit('progress', 'member');
        });
        artist.role.forEach(function(role) {
          lookup('roles', 'role', role, function(roleNode) {
            progress.emit('progress', 'role');
            relate(artistNode.self, roleNode.self, 'plays', function() {
              progress.emit('progress', 'plays');
            });
          });
        });
      });
    });
  });
}

Let this service keep running in its own window. Every update to CouchDB that adds a new artist or role to an existing artist will trigger a new relationship in Neo4j and potentially new keys in Redis. As long as this service runs, they should be in sync.

Driverless: Intelligent Cars and the Road Ahead

by

Hod Lipson

and

Melba Kurman

Published 22 Sep 2016

In other words, the database was essentially a big statistical model. As the software learned, it spent countless hours in “self-play,” amassing more gaming experience than any human could in a lifetime. As the database grew, Samuel had to develop more efficient data-lookup techniques, leading to the invention of hash tables that are still used in large databases. Another of Samuel’s innovations was to use the database to factor in how the opponent would most likely respond to each move, an algorithm known today as minimax.

Figure 8.2: AI techniques used in driverless cars. Most robotic systems use a combination of techniques. Object recognition for real-time obstacle detection and traffic negotiation is the most challenging for AI (far left).
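The Driverless excerpt describes Samuel's program weighing each move against the opponent's most likely reply. That is the core of minimax. The Python sketch below is a hedged illustration, not Samuel's checkers program. Checkers is far too large to search to the end, so a practical checkers program has to cut the search off after a limited number of moves and score the positions it reaches. The toy game assumed here is small enough to search completely: players remove 1, 2, or 3 stones, and whoever takes the last stone wins. The names `minimax` and `best_move` are hypothetical.

```python
from functools import lru_cache

# Toy game standing in for checkers (an assumption for illustration):
# players alternately remove 1, 2, or 3 stones; whoever takes the last stone wins.
MOVES = (1, 2, 3)


@lru_cache(maxsize=None)  # cache positions already evaluated so each is searched once
def minimax(stones: int, maximizing: bool) -> int:
    """Return +1 if the maximizing player wins with best play by both sides, else -1."""
    if stones == 0:
        # The previous player took the last stone, so the player to move has lost.
        return -1 if maximizing else 1
    scores = [minimax(stones - m, not maximizing) for m in MOVES if m <= stones]
    # The maximizer takes its best option; the opponent (minimizer) is assumed
    # to reply with the move that is worst for the maximizer.
    return max(scores) if maximizing else min(scores)


def best_move(stones: int) -> int:
    """Pick the move whose minimax value is best for the player to move."""
    legal = [m for m in MOVES if m <= stones]
    return max(legal, key=lambda m: minimax(stones - m, False))


print(best_move(5))
```

For example, `best_move(5)` returns 1. That leaves the opponent four stones, from which every reply loses. Positions that are multiples of four come out as losses for the player to move.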

Life After Google: The Fall of Big Data and the Rise of the Blockchain Economy

by

George Gilder

Published 16 Jul 2018

Also at Google in late October 2017, the DeepMind program launched yet another iteration of the AlphaGo program, which, you may recall, repeatedly defeated Lee Sedol, the five-time world champion Go player. The tree search in AlphaGo evaluated positions and selected moves using deep neural networks trained by immersion in records of human expert moves and by reinforcement from self-play. The blog Kurzweil.ai now reports a new iteration of AlphaGo based solely on reinforcement learning, without direct human input beyond the rules of the game and the reward structure of the program. In a form of “generic adversarial program,” AlphaGo plays against itself and becomes its own teacher.

The Rationalist's Guide to the Galaxy: Superintelligent AI and the Geeks Who Are Trying to Save Humanity's Future

by

Tom Chivers

Published 12 Jun 2019

Eliezer Yudkowsky, ‘Expected creative surprises’, LessWrong sequences, 2008 http://lesswrong.com/lw/v7/expected_creative_surprises/
6. Eliezer Yudkowsky, ‘Belief in intelligence’, LessWrong sequences, 2008 http://lesswrong.com/lw/v8/belief_in_intelligence/
7. Ibid.
8. David Silver et al., ‘Mastering chess and shogi by self-play with a general reinforcement learning algorithm’, arXiv, 2017 https://arxiv.org/pdf/1712.01815.pdf
9. Russell and Norvig, Artificial Intelligence, p. 4
10. Nick Bostrom and Vincent C. Müller, ‘Future progress in artificial intelligence: A survey of expert opinion’, Fundamental Issues of Artificial Intelligence, 2016 https://nickbostrom.com/papers/survey.pdf
11.

Schild's Ladder

by

Greg Egan

Published 31 Dec 2003

Behind each barrier the sea changed color abruptly, the green giving way to other bright hues, like a fastidiously segregated display of bioluminescent plankton. The far side here was a honeycomb of different vendek populations, occupying cells about a micron wide. The boundaries between adjoining cells all vibrated like self-playing drums; none were counting out prime numbers, but some of the more complex rhythms made it seem almost plausible that the signaling layer had been nothing but a natural fluke. Even if that were true, though, Tchicaya doubted that it warranted relief at the diminished prospect that sentient life was at stake.

The Big Nine: How the Tech Titans and Their Thinking Machines Could Warp Humanity

by

Amy Webb

Published 5 Mar 2019

It took only 70 hours of play for Zero to gain the same level of strength AlphaGo had when it beat the world’s greatest players.39 And then something interesting happened. The DeepMind team applied its technique to a second instance of AlphaGo Zero using a larger network and allowed it to train and self-play for 40 days. It not only rediscovered the sum total of Go knowledge accumulated by humans, it beat the most advanced version of AlphaGo 90% of the time—using completely new strategies. This means that Zero evolved into both a better student than the world’s greatest Go masters and a better teacher than its human trainers, and we don’t entirely understand what it did to make itself that smart.40 Just how smart, you may be wondering?

Decoding the World: A Roadmap for the Questioner

by

Po Bronson

Published 14 Jul 2020

So now this chunk of RNA has (indirectly) the neural firing pattern encoded. These cognitive sequences can be copied, moved around the cell, and used for various purposes, including “replaying” the memory by retriggering the neural firing, using the same mechanisms in the reverse direction, the same way sheet music can control a self-playing piano. But that would be a really simple memory, because it describes only one neuron. Earlier this year, a UCLA scientist transferred this kind of memory from one snail to another, by transferring the RNA cognitive sequence. The first snail had been shocked; the second snail had not. But with the transferred memory, the second snail acted like it had been shocked before.

Range: Why Generalists Triumph in a Specialized World

by

David Epstein

Published 1 Mar 2019

Hermelin, “Visual and Graphic Abilities of the Idiot-Savant Artist,” Psychological Medicine 17 (1987): 79–90. (Treffert has helped replace the term “idiot-savant” with “savant syndrome.”) See also: E. Winner, Gifted Children: Myths and Realities (New York: BasicBooks, 1996), ch. 5. AlphaZero programmers touted: D. Silver et al., “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm,” arXiv (2017): 1712.01815. “In narrow enough worlds”: In addition to an interview with Gary Marcus, I used video of his June 7, 2017, lecture at the AI for Good Global Summit in Geneva, as well as several of his papers and essays: “Deep Learning: A Critical Appraisal,” arXiv: 1801.00631; “In Defense of Skepticism About Deep Learning,” Medium, January 14, 2018; “Innateness, AlphaZero, and Artificial Intelligence,” arXiv: 1801.05667.

Who’s Raising the Kids?: Big Tech, Big Business, and the Lives of Children

by

Susan Linn

Published 12 Sep 2022

Winnicott, the mid-twentieth-century British pediatrician and psychoanalyst whose work continues to influence my understanding of what children need and don’t need. Winnicott is perhaps best known for coining the term good enough mother, which frees parents from the burden of believing they must be perfect. What matters most to this discussion, however, are his writings about play as crucial to developing a healthy sense of self. Play is a conduit for true self-expression. It facilitates the ability to initiate action rather than merely react to stimuli, to wrestle with life to make it meaningful, and to envision new solutions to old problems. Winnicott believed, as do I, that the ability to play is a central component of a life well lived.

21 Lessons for the 21st Century

by

Yuval Noah Harari

Published 29 Aug 2018

, Congressional Research Service, Washington DC, 2016; ‘More Workers Are in Alternative Employment Arrangements’, Pew Research Center, 28 September 2016.
17 David Ferrucci et al., ‘Watson: Beyond Jeopardy!’, Artificial Intelligence 199–200 (2013), 93–105.
18 ‘Google’s AlphaZero Destroys Stockfish in 100-Game Match’, Chess.com, 6 December 2017; David Silver et al., ‘Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm’, arXiv (2017), https://arxiv.org/pdf/1712.01815.pdf; see also Sarah Knapton, ‘Entire Human Chess Knowledge Learned and Surpassed by DeepMind’s AlphaZero in Four Hours’, Telegraph, 6 December 2017.
19 Cowen, Average is Over, op. cit.; Tyler Cowen, ‘What are humans still good for?

Why Machines Learn: The Elegant Math Behind Modern AI

by

Anil Ananthaswamy

Published 15 Jul 2024

“Gender Shades”: Joy Buolamwini and Timnit Gebru, “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification,” Proceedings of Machine Learning Research 81 (2018): 1–15.
following interaction with OpenAI’s GPT-4: Adam Tauman Kalai, “How to Use Self-Play for Language Models to Improve at Solving Programming Puzzles,” Workshop on Large Language Models and Transformers, Simons Institute for the Theory of Computing, August 15, 2023, https://tinyurl.com/56sct6n8.
“Individual humans form their beliefs”: Celeste Kidd and Abeba Birhane, “How AI Can Distort Human Beliefs,” Science 380, No. 6651 (June 22, 2023): 1222–23.

Our Own Devices: How Technology Remakes Humanity

by

Edward Tenner

Published 8 Jun 2004

Roell, The Piano in America, 1800–1940 (Chapel Hill: University of North Carolina Press, 1989), 8–10.
17. Mueller, Concepts of Piano Pedagogy, 104–33.
18. Loesser, Men, Women, and Pianos, 291–92.
19. “Barrel Piano,” Encyclopedia of the Piano, 41–42; Arthur W. J. G. Ord-Hume, Pianola: The History of the Self-Playing Piano (London: Allen & Unwin, 1984), 9–22.
20. Mick Hamer, “Rave from the Grave,” New Scientist, vol. 160, no. 2165–66-67 (December 19/26, 1998/January 2, 1999), 18–19; Ord-Hume, Pianola, 31–35.
21. Roell, Piano in America, 14–15; Hamer, “Rave from the Grave,” 18.
22. Roell, Piano in America, 185–221; Loesser, Men, Women, and Pianos, 602–3; Ehrlich, Piano, 186–87, 222.
23.

Machine, Platform, Crowd: Harnessing Our Digital Future

by

Andrew McAfee

and

Erik Brynjolfsson

Published 26 Jun 2017

While AlphaGo did incorporate efficient searches through large numbers of possibilities—a classic element of rule-based AI systems—it was, at its core, a machine learning system. As its creators write, it’s “a new approach to computer Go that uses . . . deep neural networks . . . trained by a novel combination of supervised learning from human expert games, and reinforcement learning from games of self-play.” AlphaGo is far from an isolated example. The past few years have seen a great flourishing of neural networks. They’re now the dominant type of artificial intelligence by far, and they seem likely to stay on top for some time. For this reason, the field of AI is finally fulfilling at least some of its early promise.

Digital Photography: The Missing Manual

by

Chris Grover

and

Barbara Brundage

Published 7 Jul 2006

But with a little free software, you can control the time each image stays onscreen, add background music, and choose themes that include borders and other visual effects. When you create slideshows in PhotoShow Express, you share them by uploading them to a free home page on the PhotoShow Circle Web site (which the program creates with your permission). * * * Tip: Spring for the $50 PhotoShow Deluxe, and save your slideshows as self-playing files that you can store on your PC, send by email, burn to CDs, and so on. You also get more editing options, like control over the speed and type of transition between each photo. When you try to choose a command that's available only in the Deluxe version, PhotoShow asks if you want to upgrade.

Human Nature: The Categorial Framework

by

P. M. S. Hacker

Published 19 Aug 2007

Corresponding to this dichotomous account of predicates predicable of human beings is the equally unacceptable claim that the first-person pronoun is systematically ambiguous, sometimes referring to the body that a person has and sometimes referring to the person – that is, to the mind that he allegedly is. Moreover, when the predicate of the ‘liaison brain’ and held one point of interaction to be the pyramidal cells of the motor cortex. And his philosopher friend Karl Popper held that the ‘self plays on the brain, as a pianist plays on a piano or as a driver plays on the controls of a car’ (K. R. Popper and J. C. Eccles, The Self and its Brain (Springer Verlag, Berlin, 1977), pp. 494f.; cf. p. 362). For detailed discussion, see M. R. Bennett and P. M. S. Hacker, Philosophical Foundations of Neuroscience (Blackwell, Oxford, 2003), ch. 2. 13 Localized sensations are accordingly allocated to the mind as subject, their physical localization held to be uniformly in the brain, and their phenomenal localization construed as an ‘as-if’ that seems warranted by the phenomena of phantom-limb pains (see Descartes, Principles of Philosophy, I, 46, 67, and esp.

The Future of Ideas: The Fate of the Commons in a Connected World

by

Lawrence Lessig

Published 14 Jul 2001

Clark was also one of the first to produce the player and the piano combined in a self-contained unit. See Harvey Roehl, Player Piano Treasury: The Scrapbook History of the Mechanical Piano in America as Told in Story, Pictures, Trade Journal Articles and Advertising (Vestal, N.Y.: Vestal Press, 1961); Arthur W. J. G. Ord-Hume, Pianola: The History of the Self-Playing Piano (London: George Allen & Unwin, 1984). 14 Edward Samuels, The Illustrated Story of Copyright (New York: St. Martin's Press, 2000), 34. 15 White-Smith Music Publishing Co. v. Apollo Co., 209 U.S. 1, 21 (1908). 16 Congress's initial statute was Act of March 4, 1909, ch. 320(e), 35 Stat. 1075 (1909), superseded by 17 U.S.C. §115 (1988).

Narrative Economics: How Stories Go Viral and Drive Major Economic Events

by

Robert J. Shiller

Published 14 Oct 2019

Siegel, Jeremy J. 2014 [1994]. Stocks for the Long Run. New York: Irwin. Silber, William. 2014. When Washington Shut Down Wall Street: The Great Financial Crisis of 1914 and the Origins of America’s Monetary Supremacy. Princeton, NJ: Princeton University Press. Silver, David, et al. 2017. “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm.” Cornell University, arXiv:1712.01815 [cs.AI], https://arxiv.org/abs/1712.01815. Skousen, Mark. 2001. The Making of Modern Economics. Armonk, NY: M. E. Sharpe. Slater, Michael D., David B. Buller, Emily Waters, Margarita Archibeque, & Michelle LeBlanc. 2003.

The Precipice: Existential Risk and the Future of Humanity

by

Toby Ord

Published 24 Mar 2020

The Red Rockets’ Glare: Spaceflight and the Russian Imagination, 1857–1957. Cambridge University Press.
Sidgwick, H. (1907). Book III, Chapter IX, in The Methods of Ethics (2nd ed., pp. 327–31). Macmillan (original work published 1874).
Silver, D., et al. (2018). “A General Reinforcement Learning Algorithm that Masters Chess, Shogi, and Go through Self-Play.” Science, 362(6419), 1140–44.
Sims, L. D., et al. (2005). “Origin and Evolution of Highly Pathogenic H5N1 Avian Influenza in Asia.” Veterinary Record, 157(6), 159–64.
Sivin, N. (1982). “Why the Scientific Revolution Did Not Take Place in China—or Didn’t It?” Chinese Science, 5, 45–66.

Robot Rules: Regulating Artificial Intelligence

by

Jacob Turner

Published 29 Oct 2018

Chapter 482A—Autonomous Vehicles, https://www.leg.state.nv.us/NRS/NRS-482A.html, accessed 1 June 2018. 45 Ryan Calo, “Nevada Bill Would Pave the Road to Autonomous Cars”, Centre for Internet and Society Blog, 27 April 2011, http://cyberlaw.stanford.edu/blog/2011/04/nevada-bill-would-pave-road-autonomous-cars, accessed 1 June 2018. 46 Will Knight, “Alpha Zero’s ‘Alien’ Chess Shows the Power, and the Peculiarity, of AI”, MIT Technology Review, https://www.technologyreview.com/s/609736/alpha-zeros-alien-chess-shows-the-power-and-the-peculiarity-of-ai/, accessed 1 June 2018. For the academic paper, see: David Silver, Thomas Hubert, Julian Schrittwieser, Ioannis Antonoglou, Matthew Lai, Arthur Guez, Marc Lanctot, Laurent Sifre, Dharshan Kumaran, Thore Graepel, Timothy Lillicrap, Karen Simonyan, and Demis Hassabis, “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm”, Cornell University Library Research Paper, 5 December 2017, https://arxiv.org/abs/1712.01815, accessed 1 June 2018. See also Cade Metz, “What the AI Behind AlphaGo Can Teach Us About Being Human”, Wired, 19 May 2016, https://www.wired.com/2016/05/google-alpha-go-ai/, accessed 1 June 2018. 47 Russell and Norvig, Artificial Intelligence, para. 1.1. 48 Nils J.

Fodor's Oregon

by

Fodor's Travel Guides

Published 13 Jun 2023

Coffee and Quick Bites: Serendipity Ice Cream ($ | ICE CREAM | FAMILY). Historic Cook’s Hotel, built in 1886, is the setting for a true, old-fashioned ice-cream-parlor experience. Try a sundae, and take home cookies made from scratch. Known for: locally made ice cream (dairy- and sugar-free varieties); old-school self-playing piano; the shop provides workplace experience and job training for adults with developmental disabilities. Average main: $4. 502 N.E. 3rd St., McMinnville. 503/474–9189. serendipityicecream.com. Hotels: Atticus Hotel ($$$$ | HOTEL). Smack in the middle of downtown McMinnville, this chic boutique hotel offers elegant, high-ceilinged rooms that feature an eclectic mix of local art and amenities and vaguely Edwardian soft furnishings.

The Book of Not Knowing: Exploring the True Nature of Self, Mind, and Consciousness

by

Peter Ralston

Published 30 Aug 2010

In our cultural environment it is just about the only possibility. But do you feel complete and whole? Why are you unhappy? Because 99.9 percent of everything you do is for yourself—and there isn’t one. —Wei Wu Wei The Cost of Our Assumptions 3:28 Our two main cultural assumptions regarding not-knowing and self play off each other and create a powerful interplay of consequences. In our culture, not-knowing is bad, and one of the main things we don’t know is who or what we are—what a self is. Since we feel we have to know what a self is in order to be one, we rely on our cultural perspectives to tell us what a self is or should be.

England

by

David Else

Published 14 Oct 2010

The large traceried stained-glass windows and collection of memorial brasses are unrivalled in the region. Near the square is Oak House, a 17th-century wool house that contains Keith Harding’s World of Mechanical Music (01451-860181; www.mechanicalmusic.co.uk; adult/child £7.50/3; 10am-6pm), a fascinating museum of self-playing musical instruments, where you can hear Rachmaninoff’s works played on a reproducing piano. Just outside town is Chedworth Roman Villa (NT; 01242-890256; Yanworth; adult/under 18yr £5.70/3.35; 10am-5pm Tue-Sun Mar-Nov), one of the largest Roman villas in England. Built as a stately home in about AD 120, it contains some wonderful mosaics illustrating the seasons, bathhouses, and, a short walk away, a temple by the River Coln.

…

Siam House ( 01904-624677; 63a Goodramgate; mains £8-14; dinner Mon-Sat) Delicious, authentic Thai food in about as authentic an atmosphere as you could muster up 6000km from Bangkok. The early bird, three-course special (£12, order before 6.30pm) is an absolute steal. La Vecchia Scuola ( 01904-644600; 62 Low Petergate; mains £8-15; lunch & dinner) Housed in the former York College for Girls, the faux elegant dining room – complete with self-playing grand piano – is straight out of Growing Up Gotti, but there’s nothing fake about the food: authentic Italian cuisine served in suitably snooty style by proper Italian waiters. Fiesta Mexicana ( 01904-610243; 14 Clifford St; mains £9-12; dinner) Chimichangas, tostadas and burritos served in a relentlessly cheerful atmosphere, while students and party groups on the rip add to the fiesta; it’s neither subtle nor subdued, but when is Mexican food ever so?

On the Edge: The Art of Risking Everything

by

Nate Silver

Published 12 Aug 2024

pioneering chess computer: Murray Campbell, “Knowledge Discovery in Deep Blue,” Communications of the ACM 42, no. 11 (November 1999): 65–67, doi.org/10.1145/319382.319396. playing against themselves: David Silver et al., “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm,” arXiv, December 5, 2017, arxiv.org/abs/1712.01815. ChatGPT said verbatim: With some minor cuts for length. some deliberate randomization: Eric Glover, “Controlled Randomness in LLMs/ChatGPT with Zero Temperature: A Game Changer for Prompt Engineering,” AppliedIngenuity.ai: Practical AI Solutions (blog), May 12, 2023, appliedingenuity.substack.com/p/controlled-randomness-in-llmschatgpt.

Programming Ruby 1.9: The Pragmatic Programmer's Guide

by

Dave Thomas

,

Chad Fowler

and

Andy Hunt

Published 15 Dec 2000

This current object is referenced by the built-in, read-only variable self. self has two significant roles in a running Ruby program. First, self controls how Ruby finds instance variables. We already said that every object carries around a set of instance variables. When you access an instance variable, Ruby looks for it in the object referenced by self. Second, self plays a vital role in method calling. In Ruby, each method call is made on some object. This object is called the receiver of the call. When you make a method call such as items.size, the object referenced by the variable items is the receiver and size is the method to invoke. If you make a method call such as puts "hi", there’s no explicit receiver.
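The two roles of self described in this excerpt can be seen in a short script. This is a minimal sketch, not an example from the book: the class `Basket` and its methods are hypothetical names chosen for illustration. Inside `size`, `@items` is looked up in the object referenced by self; inside `describe`, the bare call `size` has no explicit receiver, so it is sent to self.

```ruby
# Hypothetical class, used only to illustrate the two roles of self.
class Basket
  def initialize(items)
    # @items is stored in the object that self refers to (the new Basket).
    @items = items
  end

  def size
    # Instance-variable lookup: @items is found in self, the receiver of this call.
    @items.size
  end

  def describe
    # No explicit receiver: `size` is called on self.
    "#{size} items (self is a #{self.class})"
  end
end

basket = Basket.new(%w[apple pear])
puts basket.size      # basket is the explicit receiver of size
puts basket.describe  # describe calls size implicitly on self
puts self             # at the top level, self is Ruby's main object
```

Running it prints `2`, then `2 items (self is a Basket)`, then `main`.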

Theory and Practice of Group Psychotherapy

by

Irvin D. Yalom

and

Molyn Leszcz

Published 1 Jan 1967

Although a few still do, the trend is downward and, regrettably, fewer residents choose to enter therapy.34 I consider my personal psychotherapy experience, a five-times-a-week analysis during my entire three-year residency, the most important part of my training as a therapist.35 I urge every student entering the field not only to seek out personal therapy but to do so more than once during their career—different life stages evoke different issues to be explored. The emergence of personal discomfort is an opportunity for greater self-exploration that will ultimately make us better therapists.36 Our knowledge of self plays an instrumental role in every aspect of the therapy. An inability to perceive our countertransference responses, to recognize our personal distortions and blind spots, or to use our own feelings and fantasies in our work will severely limit our effectiveness. If you lack insight into your own motivations, you may, for example, avoid conflict in the group because of your proclivity to mute your feelings; or you may unduly encourage confrontation in a search for aliveness in yourself.

Melody Beattie 4 Title Bundle: Codependent No More and 3 Other Best Sellers by Melody Beattie: A Collection of Four Melody Beattie Best Sellers

by

Melody Beattie

Published 30 May 2010

But a second, more common denominator seemed to be the unwritten, silent rules that usually develop in the immediate family and set the pace for relationships.8 These rules prohibit discussion about problems; open expression of feelings; direct, honest communication; realistic expectations, such as being human, vulnerable, or imperfect; selfishness; trust in other people and one’s self; playing and having fun; and rocking the delicately balanced family canoe through growth or change—however healthy and beneficial that movement might be. These rules are common to alcoholic family systems but can emerge in other families, too. Now, I return to an earlier question: Which definition of codependency is accurate?

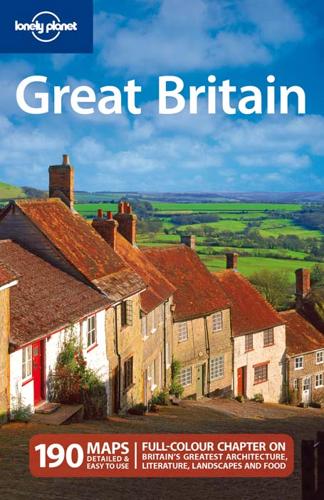

Great Britain

by

David Else

and

Fionn Davenport

Published 2 Jan 2007

Siam House ( 01904-624677; 63a Goodramgate; mains £8-14; dinner Mon-Sat) Delicious, authentic Thai food in about as authentic an atmosphere as you could muster up 6000km from Bangkok. The early bird, three-course special (£12, order before 6.30pm) is an absolute steal. La Vecchia Scuola ( 01904-644600; 62 Low Petergate; mains £8-15; lunch & dinner) Housed in the former York College for Girls, the faux elegant dining room – complete with self-playing grand piano – is straight out of Growing Up Gotti, but there’s nothing fake about the food: authentic Italian cuisine served in suitably snooty style by proper Italian waiters. Fiesta Mexicana ( 01904-610243; 14 Clifford St; mains £9-12; dinner) Chimichangas, tostadas and burritos served in a relentlessly cheerful atmosphere, while students and party groups on the rip add to the fiesta; it’s neither subtle nor subdued, but when is Mexican food ever so?